1. The problem

Today I connected DBeaver to Hive to test several SQL statements that I had run in the Hive client yesterday. The statements use custom UDFs, UDTFs, and UDAFs. When I pressed the execute button in DBeaver, an error was reported saying that the function was invalid. But the functions had already been registered as permanent functions in Hive and had run successfully there. How could they be invalid in DBeaver?

2. Solution

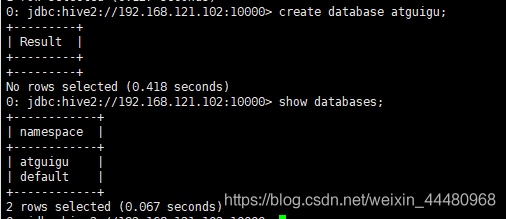

1. Take the statement that created the permanent function at the Hive command line and execute it again in DBeaver.

(1) The statement to create a permanent function is as follows:

create function testudf as 'test.CustomUDF' using jar 'hdfs://cls:8020/user/hive/warehouse/testudf/TESTUDF.jar';
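To check from DBeaver whether the HiveServer2 session can see the function before or after re-running the statement, something like the following may help. This is only a sketch: testudf is the function name from the statement above, and the exact output depends on the Hive version.

```sql
-- Run in the DBeaver SQL editor (connected through HiveServer2).
-- Shows the function's description if this session knows the function;
-- otherwise Hive reports that the function does not exist.
DESCRIBE FUNCTION testudf;
```

Permanent functions belong to a database, so if the DBeaver session is using a different database than the one in which the function was created, the name may need to be qualified (for example default.testudf, assuming it was created in default).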

3. Cause (not carefully verified)

1. My Hive client connects with the hive command (the Hive CLI), and that is where I registered the functions. DBeaver, on the other hand, connects to Hive through the HiveServer2 service over JDBC, the same way beeline does. It is said that functions registered from the Hive client cannot be used through HiveServer2.

2. In practice, when I executed the statement to register the permanent function in DBeaver, the result reported that the function already exists, and after that the SQL statement ran fine when I executed it again. So I think it is possible that the function information was refreshed, since the function was reported as invalid at first, which indicates that the SQL itself was being executed.
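If this explanation is right and HiveServer2 was simply holding stale function information, Hive also provides a statement meant for this situation: RELOAD FUNCTION (available since Hive 1.2.0; newer releases also accept RELOAD FUNCTIONS). I have not verified it in this setup, so treat the following as a sketch rather than a confirmed fix.

```sql
-- Run in the DBeaver SQL editor (connected through HiveServer2).
-- Asks HiveServer2 to pick up permanent functions that were
-- registered from another session, such as the Hive CLI.
RELOAD FUNCTION;
```

Compared with re-running the create function statement, this avoids the "already exists" error and does not depend on knowing the original class name and jar path.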