Detection principle (version comparison): the scanner checks whether the installed version of spring-security-web falls within the vulnerable version range.

Detection result: spring-security-web

Current installed version: 5.2.1.RELEASE

The fix is to upgrade to 5.5.7, 5.6.4, or later. Because the pom contains no direct reference to spring-security-web, the following dependency was added to force its version to 5.5.7:

<!-- Fix spring-security-web version vulnerability -->

<dependency>

<groupId>org.springframework.security</groupId>

<artifactId>spring-security-web</artifactId>

<version>5.5.7</version>

</dependency>

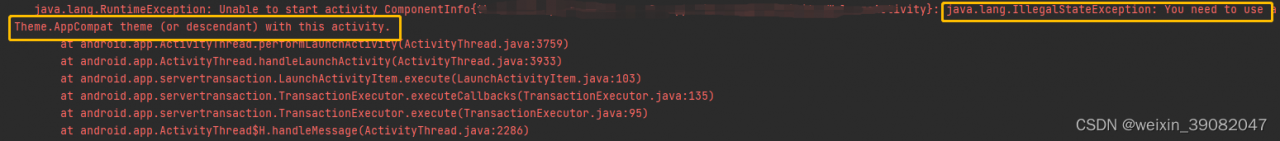

After this change, the application fails at startup with the following error:

***************************

APPLICATION FAILED TO START

***************************

Description:

An attempt was made to call a method that does not exist. The attempt was made from the following location:

org.springframework.security.web.util.matcher.OrRequestMatcher.<init>(OrRequestMatcher.java:43)

The following method did not exist:

org.springframework.util.Assert.noNullElements(Ljava/util/Collection;Ljava/lang/String;)V

The method's class, org.springframework.util.Assert, is available from the following locations:

jar:file:/C:/Users/sutpc/.m2/repository/org/springframework/spring-core/5.1.18.RELEASE/spring-core-5.1.18.RELEASE.jar!/org/springframework/util/Assert.class

It was loaded from the following location:

file:/C:/Users/sutpc/.m2/repository/org/springframework/spring-core/5.1.18.RELEASE/spring-core-5.1.18.RELEASE.jar

2. Analysis of the cause

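In principle, spring-security-web is brought in transitively by another jar. Changing only the spring-security-web version leaves the libraries it is paired with at their old versions, so the two become incompatible. The error above shows this concretely: spring-security-web 5.5.7 calls Assert.noNullElements(Collection, String), an overload that was added in Spring Framework 5.2, while the spring-core on the classpath is still 5.1.18.RELEASE.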

3. Solutions

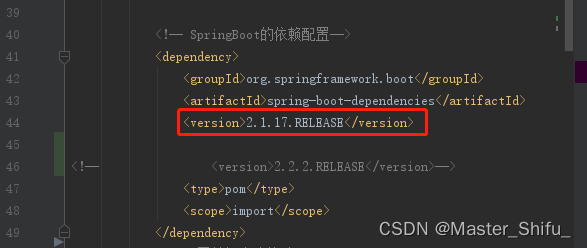

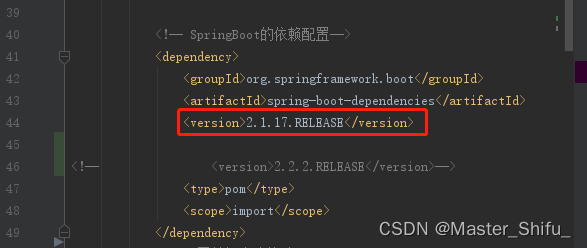

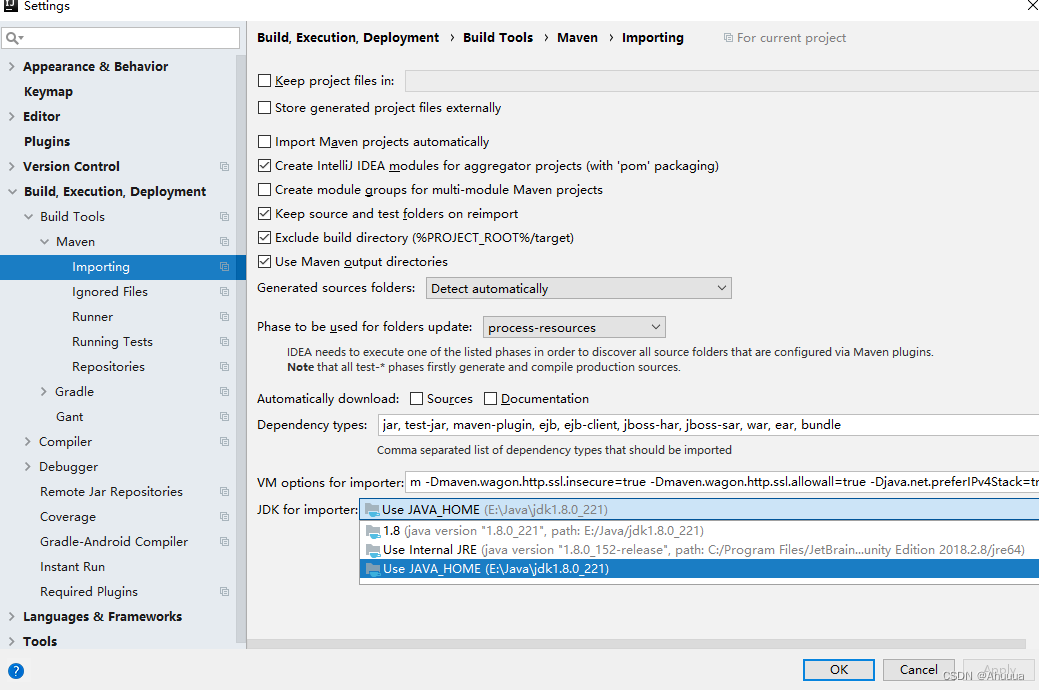

Upgrade spring-boot-dependencies from 2.1.17.RELEASE to 2.2.2.RELEASE:

<!-- Spring Boot dependency configuration -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<!-- <version>2.1.17.RELEASE</version>-->

<version>2.2.2.RELEASE</version>

<type>pom</type>

<scope>import</scope>

</dependency>
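For context, Maven only honors an import-scoped entry inside the dependencyManagement section. The following is a minimal sketch of where the entry above would sit, assuming the project imports spring-boot-dependencies as a BOM rather than inheriting from spring-boot-starter-parent. The surrounding wrapper is an assumption; the original pom is shown only in part.

```xml
<dependencyManagement>
  <dependencies>
    <!-- Spring Boot BOM: supplies the managed versions of Spring Framework,
         Spring Security and the other libraries the starters depend on -->
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-dependencies</artifactId>
      <version>2.2.2.RELEASE</version>
      <type>pom</type>
      <scope>import</scope>
    </dependency>
  </dependencies>
</dependencyManagement>
```

Because the BOM only manages versions, the starters themselves (such as spring-boot-starter-security) are still declared in the regular dependencies section without a version.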

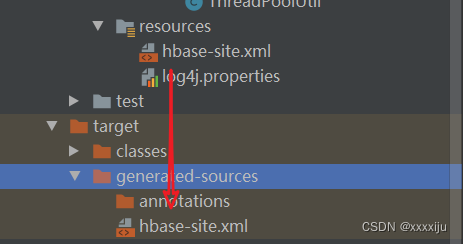

Then keep the forced 5.5.7 override of spring-security-web at the end of the pom:

<!-- Fix spring-security-web version vulnerability -->

<dependency>

<groupId>org.springframework.security</groupId>

<artifactId>spring-security-web</artifactId>

<version>5.5.7</version>

</dependency>
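Note that this override pins only spring-security-web. Other Spring Security modules that arrive transitively (for example spring-security-core) still resolve to the version managed by spring-boot-dependencies. One possible alternative, sketched here as an assumption and not the setup used in this article, is to import spring-security-bom ahead of spring-boot-dependencies so that all Spring Security modules resolve to the same version. When two imported BOMs manage the same artifact, Maven uses the one declared first. Whether Spring Security 5.5.7 as a whole works with the rest of the project would still have to be verified by starting the application.

```xml
<dependencyManagement>
  <dependencies>
    <!-- Declared first so its versions win over those in the Spring Boot BOM -->
    <dependency>
      <groupId>org.springframework.security</groupId>
      <artifactId>spring-security-bom</artifactId>
      <version>5.5.7</version>
      <type>pom</type>
      <scope>import</scope>
    </dependency>
    <!-- Spring Boot BOM: manages everything else -->
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-dependencies</artifactId>
      <version>2.2.2.RELEASE</version>
      <type>pom</type>
      <scope>import</scope>
    </dependency>
  </dependencies>
</dependencyManagement>
```

With such an arrangement, running mvn dependency:tree -Dincludes=org.springframework.security is a quick way to confirm which versions were actually resolved.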

4. Solution approach

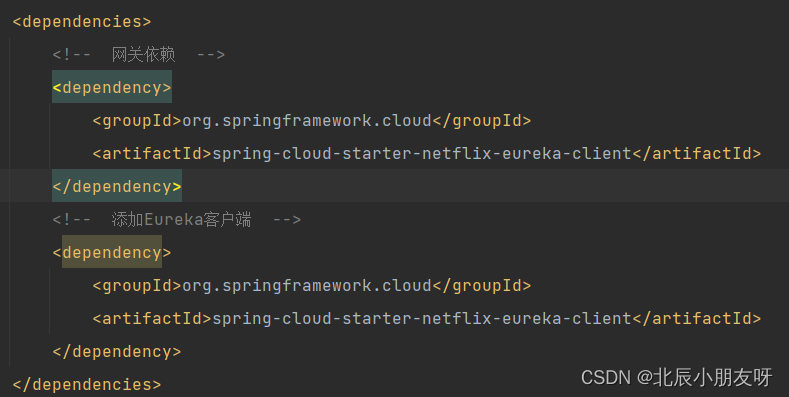

Because the problem comes from changing only the spring-security-web version, which made it incompatible with the code around it, the first step is to find out which jar introduces spring-security-web.

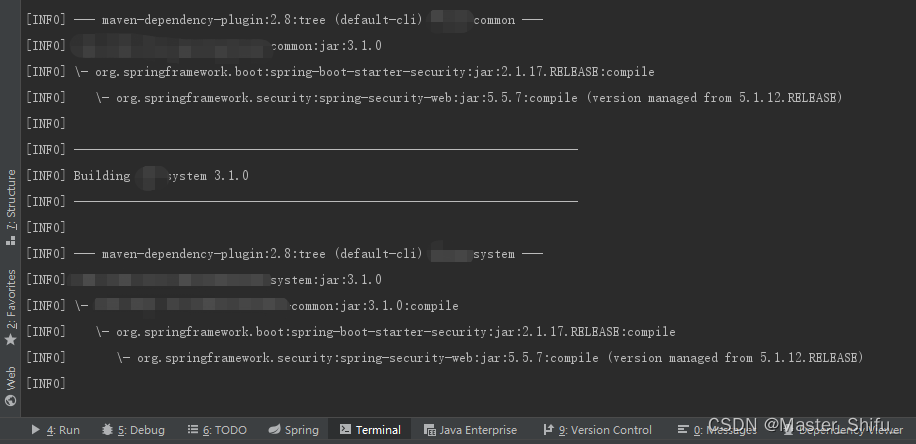

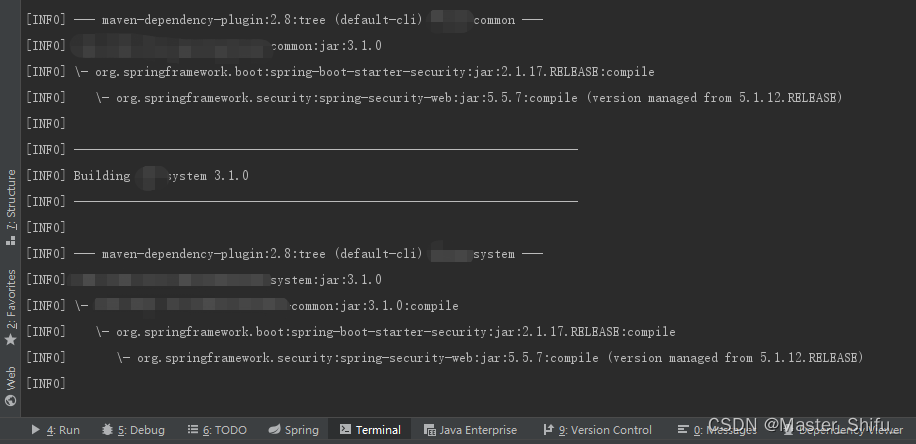

4.1 Find out which dependency introduces the jar in the Maven project

Use mvn dependency:tree to see the complete dependency tree of the project.

Command format:

mvn dependency:tree -Dverbose -Dincludes=<groupId>:<artifactId>

dependency:tree: displays the dependencies as a tree.

-Dverbose: shows all references, including those omitted because they duplicate another reference.

-Dincludes: filters the tree to the artifacts that match the given groupId:artifactId.

The groupId and artifactId of spring-security-web are:

groupId: org.springframework.security

artifactId: spring-security-web

So the command is:

mvn dependency:tree -Dverbose -Dincludes=org.springframework.security:spring-security-web

4.2 Running the command in the IDEA Terminal gives the dependency hierarchy shown in the figures below

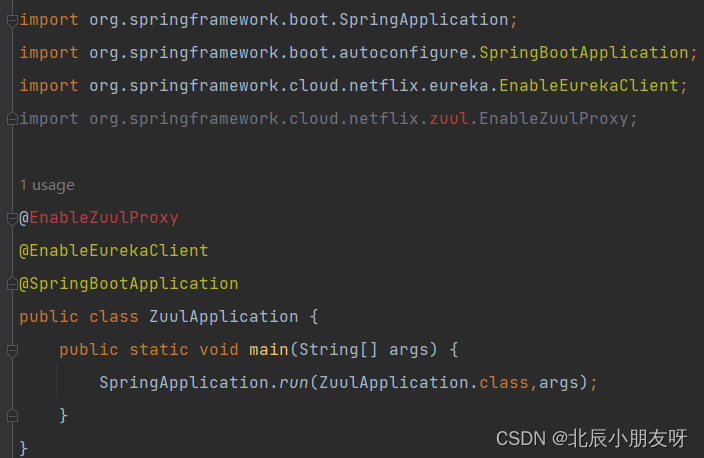

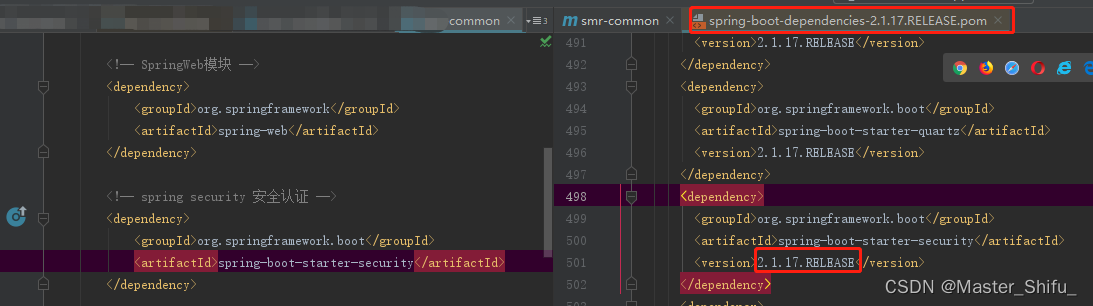

4.3 spring-security-web is introduced by spring-boot-starter-security

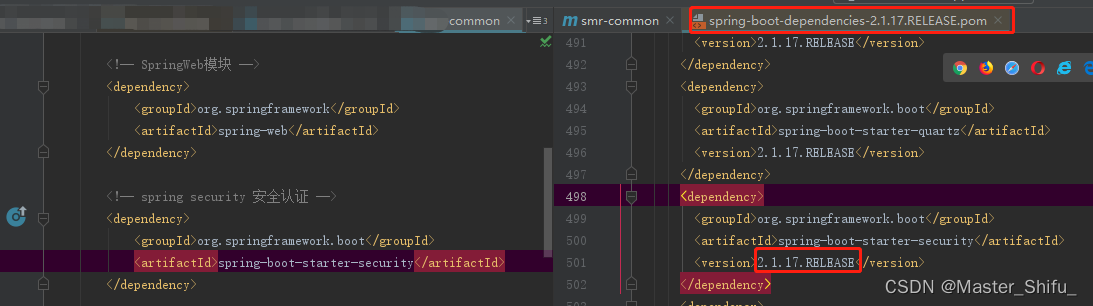

spring-security-web is introduced by spring-boot-starter-security, version 2.1.17.RELEASE. Searching for spring-boot-starter-security shows that it uses the version from spring-boot- RELEASE.pom.

4.4 spring-boot-starter-security inherits its version from spring-boot-dependencies

A global search for the spring-boot-dependencies version confirmed that it was indeed 2.1.17.RELEASE. At this point every level of the dependency chain had been traced, which led to a guess: the spring-boot-dependencies version was too low to be compatible with the newer spring-security-web.

Because spring-security-web cannot be downgraded (the vulnerability fix requires the newer version), the only option was to upgrade spring-boot-dependencies. Testing the spring-boot-dependencies versions listed in the Maven repository one by one showed that 2.2.2.RELEASE solves the problem.