docker: Error response from daemon: driver failed programming external connectivity on endpoint tomcat01 (00028237b8dd7b21dbce757be3bf2df0e0fcfa6c3987cac68c42d2fb6603b42d): (iptables failed: iptables --wait -t nat -A DOCKER -p tcp -d 0/0 --dport 49162 -j DNAT --to-destination 172.17.0.2:8080 ! -i docker0: iptables: No chain/target/match by that name. (exit status 1)).

This error appears when you start a Docker container or change its configuration after a firewall operation, such as a restart, has cleared the rules Docker added. If you list the firewall rules at that point, the DOCKER chain is no longer shown.

The specific cause is that the DOCKER chain has been deleted from iptables.

There are many ways the chain can be removed.

Restarting the firewalld firewall is one of them. firewalld is the default on CentOS 7 and later, while the iptables service is used on CentOS 6 and earlier, and firewalld itself works through iptables underneath. So whenever you run firewalld or iptables commands, be careful not to remove the chains that belong to Docker.

The solution is to restart the Docker engine, which recreates its iptables chains:

systemctl restart docker

Then query the Docker chain again:

iptables -L

Or use this command to query the nat table:

iptables -t nat -nL

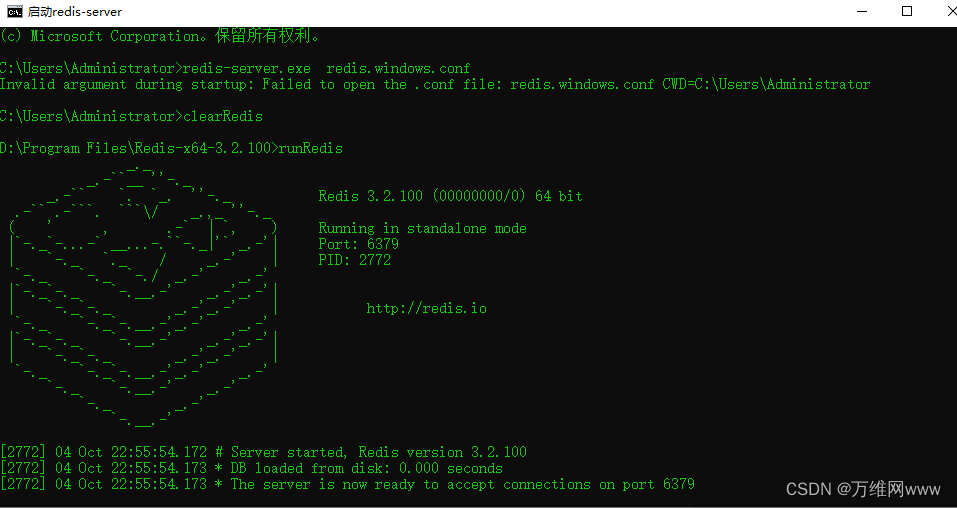

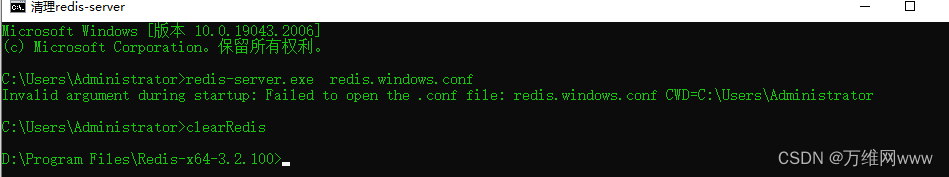
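The check and the fix above can be combined into one small script. This is only a sketch under some assumptions: the function name `has_docker_chain` and the `--fix` argument are made up for illustration, the script must run as root, and it assumes `iptables -t nat -nL` prints a header line starting with `Chain DOCKER` when the chain exists.

```shell
#!/bin/sh
# has_docker_chain (hypothetical helper): reads an `iptables -t nat -nL`
# listing on stdin and succeeds (exit 0) only if it contains a
# "Chain DOCKER" header line.
has_docker_chain() {
    grep -q '^Chain DOCKER '
}

# Hypothetical entry point: the system is only touched when the script
# is called with "--fix", so sourcing or running it without arguments is harmless.
if [ "${1:-}" = "--fix" ]; then
    if iptables -t nat -nL | has_docker_chain; then
        echo "DOCKER chain present, nothing to do"
    else
        echo "DOCKER chain missing, restarting Docker"
        systemctl restart docker
    fi
fi
```

Saved under a name of your choice, for example `check_docker_chain.sh`, it could be run as `sh check_docker_chain.sh --fix` after any firewalld or iptables change. Keeping the chain test in a function that reads stdin means it can also be tried against saved `iptables` output without root access.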
