Recently I’ve been developing a Scrapy project that uses a proxy.

Run locally, everything was fine and the data was crawled normally.

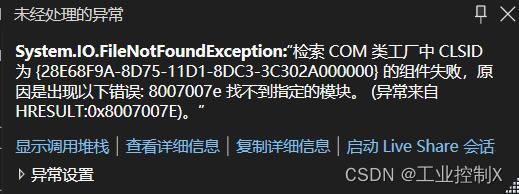

After I deployed it to the online Gerapy instance and ran it, it failed, and the logs showed:

2022-10-08 17:03:24 [scrapy.downloadermiddlewares.retry] ERROR: Gave up retrying <GET https://www.xxx.com/en/product?o=100&p=100> (failed 3 times): User timeout caused connection failure: Getting https://www.xxx.com/en/product?o=100&p=100 took longer than 180.0 seconds..

2022-10-08 17:03:24 [xxx] ERROR: <twisted.python.failure.Failure twisted.internet.error.TimeoutError: User timeout caused connection failure: Getting https://www.xxx.com/en/product?o=100&p=100 took longer than 180.0 seconds..>

2022-10-08 17:03:24 [xxx] ERROR: TimeoutError on https://www.xxx.com/en/product?o=100&p=100
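
The 180-second limit and the three attempts in these logs match Scrapy's defaults (`DOWNLOAD_TIMEOUT = 180`, and `RETRY_TIMES = 2` retries after the first attempt). While debugging a failure like this, it can help to lower both so that each run fails faster. A minimal sketch of a `settings.py` fragment follows; the values are illustrative assumptions, not taken from the original project:

```python
# settings.py (fragment): illustrative debugging values, not the project's real ones

# Give up on a single download after 30 s instead of Scrapy's default 180 s.
DOWNLOAD_TIMEOUT = 30

# Keep the retry middleware on, but retry only once (Scrapy's default is 2 retries).
RETRY_ENABLED = True
RETRY_TIMES = 1
```

This does not fix the underlying problem; it only shortens the feedback loop while looking for the cause.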

I suspected a proxy problem, so I removed the proxy. It still worked fine locally.

After I deployed it to the online Gerapy instance and ran it, it failed again, and the logs showed:

2022-10-09 10:39:45 [scrapy.downloadermiddlewares.retry] DEBUG: Retrying <GET https://www.xxx.com/en/product?o=100&p=100> (failed 3 times): [<twisted.python.failure.Failure twisted.internet.error.ConnectionLost: Connection to the other side was lost in a non-clean fashion: Connection lost.>]

2022-10-09 10:39:56 [scrapy.downloadermiddlewares.retry] ERROR: Gave up retrying <GET https://www.xxx.com/en/product?o=100&p=100> (failed 3 times): [<twisted.python.failure.Failure twisted.internet.error.ConnectionLost: Connection to the other side was lost in a non-clean fashion: Connection lost.>]

I found a suggested solution on Stack Overflow:

Add an errback method to the spider:

    # Imports
    import scrapy
    from scrapy.spidermiddlewares.httperror import HttpError
    from twisted.internet.error import DNSLookupError, TimeoutError

    # Usage (inside a spider callback): pass the errback alongside the callback
    yield scrapy.Request(url=url, meta={'dont_redirect': True}, dont_filter=True, callback=self.parse_list, errback=self.errback_work)

    # Method definition (inside the spider class)
    def errback_work(self, failure):
        # Log every failure, then add detail for the known error types
        self.logger.error(repr(failure))
        if failure.check(HttpError):
            # Non-2xx responses raised by the HttpError spider middleware
            response = failure.value.response
            self.logger.error('HttpError on %s', response.url)
        elif failure.check(DNSLookupError):
            # The original request is attached to the failure
            request = failure.request
            self.logger.error('DNSLookupError on %s', request.url)
        elif failure.check(TimeoutError):
            request = failure.request
            self.logger.error('TimeoutError on %s', request.url)

After I deployed it to the online Gerapy instance and ran it again, it still reported errors.

Checking the Scrapy version, I found that it was 2.6.1, so I downgraded it to 2.5.1:

pip3 install scrapy==2.5.1

Running it locally then produced an error:

AttributeError: module 'OpenSSL.SSL' has no attribute 'SSLv3_METHOD'

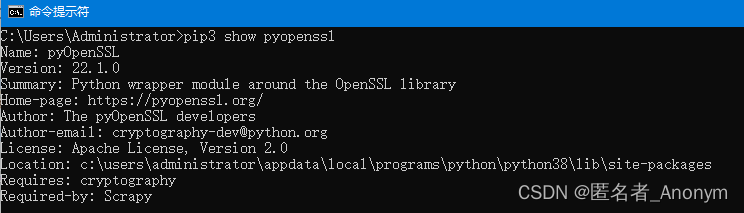

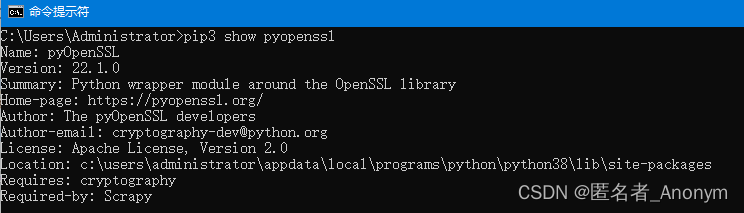

I checked the version of the pyOpenSSL library:

pip3 show pyopenssl

The installed version was not compatible with Scrapy 2.5.1.

So I changed the library version to 22.0.0:

pip3 install pyopenssl==22.0.0

Running it locally again, everything worked normally!

Then I deployed it to the online Gerapy instance, and it ran normally there too!
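
Because the fix came down to package versions, it can be useful to check the installed versions in every environment before a crawl starts. Below is a minimal sketch using only the standard library (`importlib.metadata`, Python 3.8+). The names `EXPECTED` and `find_mismatches` are hypothetical helpers introduced here for illustration, and the version numbers are simply the ones that worked in this post:

```python
from importlib import metadata

# Versions taken from this post; adjust them for your own project.
EXPECTED = {"Scrapy": "2.5.1", "pyOpenSSL": "22.0.0"}


def find_mismatches(expected, get_version=metadata.version):
    """Return {package: (expected, installed)} for every package that differs.

    `installed` is None when the package is not installed at all.
    `get_version` is injectable so the check can be exercised without
    touching the real environment.
    """
    mismatches = {}
    for name, wanted in expected.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches
```

Calling `find_mismatches(EXPECTED)` both locally and on the Gerapy host, and logging the result, would show at a glance whether the two environments run the same Scrapy and pyOpenSSL versions.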