When the jeecg-boot project connects to a MySQL database running in Docker, the following error is reported:

```
2021-08-23 11:39:45.271 [MyScheduler_QuartzSchedulerThread] ERROR druid.sql.Statement:149 - {conn-10004, pstmt-20010} execute error. SELECT TRIGGER_NAME, TRIGGER_GROUP, NEXT_FIRE_TIME, PRIORITY FROM QRTZ_TRIGGERS WHERE SCHED_NAME = 'MyScheduler' AND TRIGGER_STATE = ? AND NEXT_FIRE_TIME <= ? AND (MISFIRE_INSTR = -1 OR (MISFIRE_INSTR != -1 AND NEXT_FIRE_TIME >= ?)) ORDER BY NEXT_FIRE_TIME ASC, PRIORITY DESC
java.sql.SQLSyntaxErrorException: Table 'water-cloud-dev.QRTZ_TRIGGERS' doesn't exist
	at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:120)
	at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:97)
	at com.mysql.cj.jdbc.exceptions.SQLExceptionsMapping.translateException(SQLExceptionsMapping.java:122)
	at com.mysql.cj.jdbc.ClientPreparedStatement.executeInternal(ClientPreparedStatement.java:953)
	at com.mysql.cj.jdbc.ClientPreparedStatement.executeQuery(ClientPreparedStatement.java:1003)
	at com.alibaba.druid.filter.FilterChainImpl.preparedStatement_executeQuery(FilterChainImpl.java:3240)
	at com.alibaba.druid.filter.FilterEventAdapter.preparedStatement_executeQuery(FilterEventAdapter.java:465)
	at com.alibaba.druid.filter.FilterChainImpl.preparedStatement_executeQuery(FilterChainImpl.java:3237)
	at com.alibaba.druid.wall.WallFilter.preparedStatement_executeQuery(WallFilter.java:647)
	at com.alibaba.druid.filter.FilterChainImpl.preparedStatement_executeQuery(FilterChainImpl.java:3237)
	at com.alibaba.druid.filter.FilterEventAdapter.preparedStatement_executeQuery(FilterEventAdapter.java:465)
	at com.alibaba.druid.filter.FilterChainImpl.preparedStatement_executeQuery(FilterChainImpl.java:3237)
	at com.alibaba.druid.proxy.jdbc.PreparedStatementProxyImpl.executeQuery(PreparedStatementProxyImpl.java:181)
	at com.alibaba.druid.pool.DruidPooledPreparedStatement.executeQuery(DruidPooledPreparedStatement.java:227)
	at org.quartz.impl.jdbcjobstore.StdJDBCDelegate.selectTriggerToAcquire(StdJDBCDelegate.java:2613)
	at org.quartz.impl.jdbcjobstore.JobStoreSupport.acquireNextTrigger(JobStoreSupport.java:2844)
	at org.quartz.impl.jdbcjobstore.JobStoreSupport$41.execute(JobStoreSupport.java:2805)
	at org.quartz.impl.jdbcjobstore.JobStoreSupport$41.execute(JobStoreSupport.java:2803)
	at org.quartz.impl.jdbcjobstore.JobStoreSupport.executeInNonManagedTXLock(JobStoreSupport.java:3864)
	at org.quartz.impl.jdbcjobstore.JobStoreSupport.acquireNextTriggers(JobStoreSupport.java:2802)
	at org.quartz.core.QuartzSchedulerThread.run(QuartzSchedulerThread.java:287)
```

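Error reason: table names in the MySQL server running in Docker are case-sensitive. The container runs a Linux build of MySQL, where `lower_case_table_names` defaults to `0` (names stored as given and compared case-sensitively). Quartz queries the uppercase table `QRTZ_TRIGGERS`, so if the Quartz tables were created with lowercase names (for example `qrtz_triggers`), MySQL reports that the table does not exist.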
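To confirm that letter case is the problem, rather than the Quartz tables being missing altogether, you can list the tables in the application database and compare them with the name Quartz queries. The following is a minimal sketch for a POSIX shell inside the MySQL container; `diagnose_quartz_table` is a hypothetical helper, and the root password `123456` and database `water-cloud-dev` used in the example are simply the values that appear elsewhere in this article.

```shell
#!/bin/sh
# Hypothetical helper: diagnose_quartz_table [TABLE]
# Reads table names (one per line) on stdin and reports whether TABLE
# (default QRTZ_TRIGGERS) exists exactly, only with different letter case,
# or not at all.
diagnose_quartz_table() {
  want="${1:-QRTZ_TRIGGERS}"
  awk -v want="$want" '
    # Exact, case-sensitive match (what MySQL needs when lower_case_table_names=0)
    $0 == want { exact = 1 }
    # Same name in a different letter case, e.g. qrtz_triggers
    tolower($0) == tolower(want) && $0 != want { other = $0 }
    END {
      if (exact) print "found " want
      else if (other != "") print "case mismatch: found " other " instead of " want
      else print "missing " want
    }
  '
}
```

For example, from a shell opened with `docker exec -it mysql bash`: `mysql -uroot -p123456 -N -e 'SHOW TABLES' water-cloud-dev | diagnose_quartz_table`. A `case mismatch` result points to the case-sensitivity cause; `missing` means the Quartz tables were never created, which changing `lower_case_table_names` will not fix.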

Solution (I):

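Delete the MySQL container (for example with `docker rm -f mysql`; data not stored in a volume is lost) and create a new one that passes `lower_case_table_names=1` to the server, so that the data directory is initialized with case-insensitive table names. For example:

```
docker run --name mysql -p 3306:3306 -e MYSQL_ROOT_PASSWORD=123456 -d mysql --lower_case_table_names=1
```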

Solution (II):

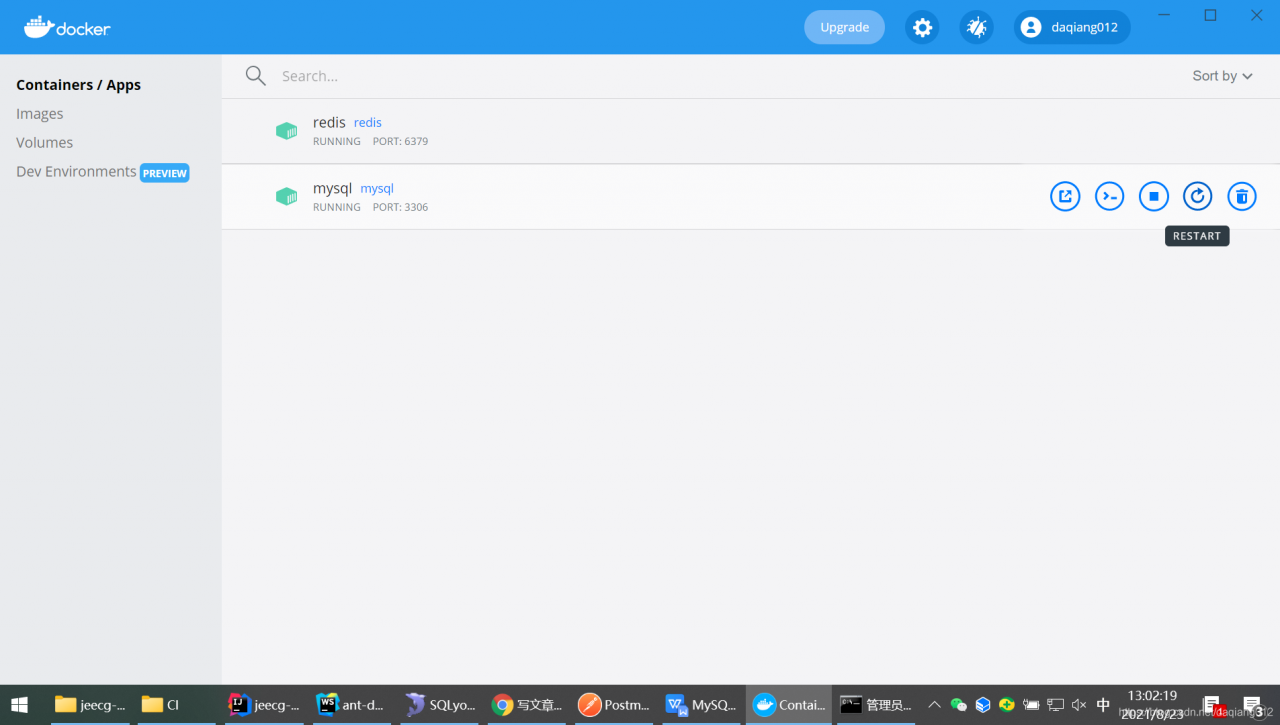

1. Run `docker ps` to find the ID of the MySQL container:

```
C:\Users\Administrator>docker ps
CONTAINER ID   IMAGE   COMMAND                  CREATED      STATUS          PORTS                                                  NAMES
86136bf6eebe   redis   "docker-entrypoint.s…"   3 days ago   Up 54 minutes   0.0.0.0:6379->6379/tcp, :::6379->6379/tcp              redis
5721f675c819   mysql   "docker-entrypoint.s…"   4 days ago   Up 54 minutes   0.0.0.0:3306->3306/tcp, :::3306->3306/tcp, 33060/tcp   mysql
```

2. Run `docker exec` to open a shell inside the MySQL container:

```
C:\Users\Administrator>docker exec -it 5721f675c819 bash
root@5721f675c819:/#
```

3. Run `apt-get update` to refresh the package lists (the image does not include vim):

```
root@5721f675c819:/# apt-get update
Get:1 http://deb.debian.org/debian buster InRelease [122 kB]
Get:2 http://security.debian.org/debian-security buster/updates InRelease [65.4 kB]
Get:3 http://repo.mysql.com/apt/debian buster InRelease [21.5 kB]
Get:4 http://repo.mysql.com/apt/debian buster/mysql-8.0 amd64 Packages [8341 B]
Get:5 http://deb.debian.org/debian buster-updates InRelease [51.9 kB]
Get:6 http://deb.debian.org/debian buster/main amd64 Packages [7907 kB]
Get:7 http://security.debian.org/debian-security buster/updates/main amd64 Packages [301 kB]
Get:8 http://deb.debian.org/debian buster-updates/main amd64 Packages [15.2 kB]
Fetched 8492 kB in 7min 56s (17.8 kB/s)
Reading package lists... Done
```

4. Run `apt-get install vim` to install the Vim editor:

```
root@5721f675c819:/# apt-get install vim
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following additional packages will be installed:
  vim-common vim-runtime xxd
Suggested packages:
  ctags vim-doc vim-scripts
The following NEW packages will be installed:
  vim vim-common vim-runtime xxd
0 upgraded, 4 newly installed, 0 to remove and 0 not upgraded.
Need to get 7390 kB of archives.
After this operation, 33.7 MB of additional disk space will be used.
Do you want to continue? [Y/n] y
Get:1 http://deb.debian.org/debian buster/main amd64 xxd amd64 2:8.1.0875-5 [140 kB]
Get:2 http://deb.debian.org/debian buster/main amd64 vim-common all 2:8.1.0875-5 [195 kB]
Get:3 http://deb.debian.org/debian buster/main amd64 vim-runtime all 2:8.1.0875-5 [5775 kB]
Get:4 http://deb.debian.org/debian buster/main amd64 vim amd64 2:8.1.0875-5 [1280 kB]
Fetched 7390 kB in 5min 35s (22.0 kB/s)
debconf: delaying package configuration, since apt-utils is not installed
Selecting previously unselected package xxd.
(Reading database ... 9284 files and directories currently installed.)
Preparing to unpack .../xxd_2%3a8.1.0875-5_amd64.deb ...
Unpacking xxd (2:8.1.0875-5) ...
Selecting previously unselected package vim-common.
Preparing to unpack .../vim-common_2%3a8.1.0875-5_all.deb ...
Unpacking vim-common (2:8.1.0875-5) ...
Selecting previously unselected package vim-runtime.
Preparing to unpack .../vim-runtime_2%3a8.1.0875-5_all.deb ...
Adding 'diversion of /usr/share/vim/vim81/doc/help.txt to /usr/share/vim/vim81/doc/help.txt.vim-tiny by vim-runtime'
Adding 'diversion of /usr/share/vim/vim81/doc/tags to /usr/share/vim/vim81/doc/tags.vim-tiny by vim-runtime'
Unpacking vim-runtime (2:8.1.0875-5) ...
Selecting previously unselected package vim.
Preparing to unpack .../vim_2%3a8.1.0875-5_amd64.deb ...
Unpacking vim (2:8.1.0875-5) ...
Setting up xxd (2:8.1.0875-5) ...
Setting up vim-common (2:8.1.0875-5) ...
Setting up vim-runtime (2:8.1.0875-5) ...
Setting up vim (2:8.1.0875-5) ...
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/vim (vim) in auto mode
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/vimdiff (vimdiff) in auto mode
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/rvim (rvim) in auto mode
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/rview (rview) in auto mode
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/vi (vi) in auto mode
update-alternatives: warning: skip creation of /usr/share/man/da/man1/vi.1.gz because associated file /usr/share/man/da/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/de/man1/vi.1.gz because associated file /usr/share/man/de/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/fr/man1/vi.1.gz because associated file /usr/share/man/fr/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/it/man1/vi.1.gz because associated file /usr/share/man/it/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ja/man1/vi.1.gz because associated file /usr/share/man/ja/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/pl/man1/vi.1.gz because associated file /usr/share/man/pl/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ru/man1/vi.1.gz because associated file /usr/share/man/ru/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/man1/vi.1.gz because associated file /usr/share/man/man1/vim.1.gz (of link group vi) doesn't exist
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/view (view) in auto mode
update-alternatives: warning: skip creation of /usr/share/man/da/man1/view.1.gz because associated file /usr/share/man/da/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/de/man1/view.1.gz because associated file /usr/share/man/de/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/fr/man1/view.1.gz because associated file /usr/share/man/fr/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/it/man1/view.1.gz because associated file /usr/share/man/it/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ja/man1/view.1.gz because associated file /usr/share/man/ja/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/pl/man1/view.1.gz because associated file /usr/share/man/pl/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ru/man1/view.1.gz because associated file /usr/share/man/ru/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/man1/view.1.gz because associated file /usr/share/man/man1/vim.1.gz (of link group view) doesn't exist
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/ex (ex) in auto mode
update-alternatives: warning: skip creation of /usr/share/man/da/man1/ex.1.gz because associated file /usr/share/man/da/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/de/man1/ex.1.gz because associated file /usr/share/man/de/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/fr/man1/ex.1.gz because associated file /usr/share/man/fr/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/it/man1/ex.1.gz because associated file /usr/share/man/it/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ja/man1/ex.1.gz because associated file /usr/share/man/ja/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/pl/man1/ex.1.gz because associated file /usr/share/man/pl/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ru/man1/ex.1.gz because associated file /usr/share/man/ru/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/man1/ex.1.gz because associated file /usr/share/man/man1/vim.1.gz (of link group ex) doesn't exist
update-alternatives: using /usr/bin/vim.basic to provide /usr/bin/editor (editor) in auto mode
update-alternatives: warning: skip creation of /usr/share/man/da/man1/editor.1.gz because associated file /usr/share/man/da/man1/vim.1.gz (of link group editor) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/de/man1/editor.1.gz because associated file /usr/share/man/de/man1/vim.1.gz (of link group editor) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/fr/man1/editor.1.gz because associated file /usr/share/man/fr/man1/vim.1.gz (of link group editor) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/it/man1/editor.1.gz because associated file /usr/share/man/it/man1/vim.1.gz (of link group editor) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ja/man1/editor.1.gz because associated file /usr/share/man/ja/man1/vim.1.gz (of link group editor) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/pl/man1/editor.1.gz because associated file /usr/share/man/pl/man1/vim.1.gz (of link group editor) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/ru/man1/editor.1.gz because associated file /usr/share/man/ru/man1/vim.1.gz (of link group editor) doesn't exist
update-alternatives: warning: skip creation of /usr/share/man/man1/editor.1.gz because associated file /usr/share/man/man1/vim.1.gz (of link group editor) doesn't exist
```


5. Go to the MySQL configuration directory:

```
root@5721f675c819:/# cd /etc/mysql
root@5721f675c819:/etc/mysql# ls
conf.d  my.cnf  my.cnf.fallback
```

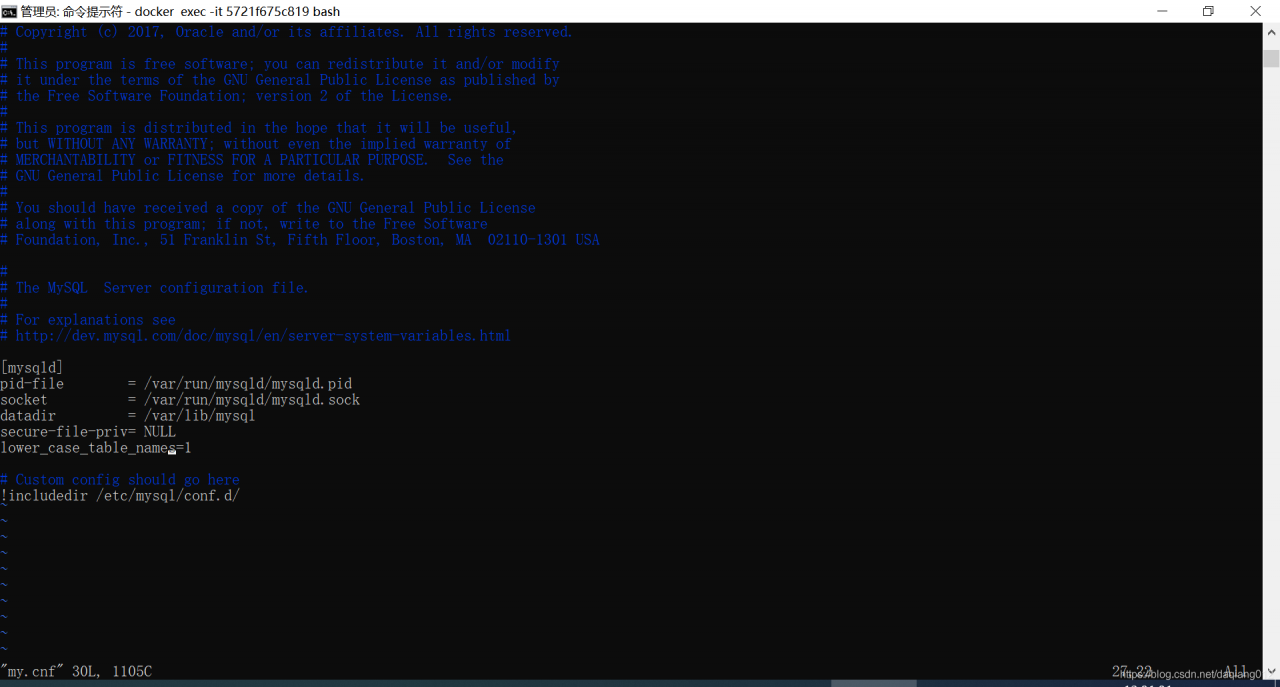

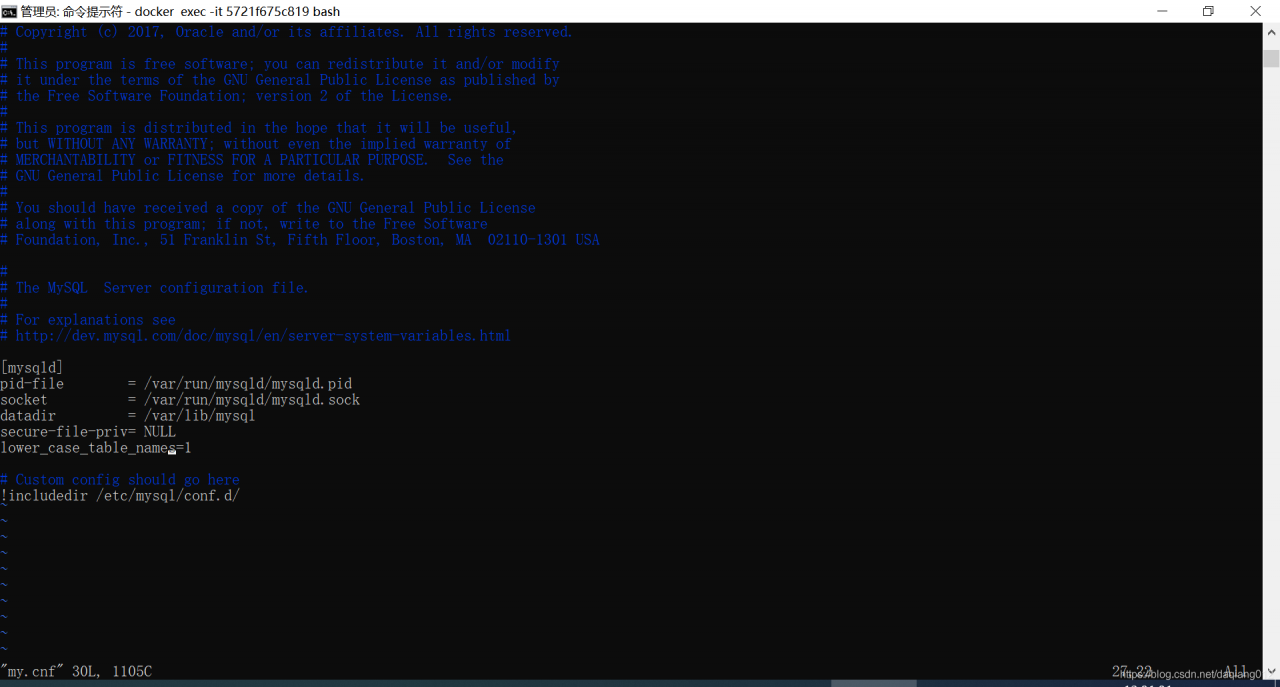

6. Edit `my.cnf` and add `lower_case_table_names=1` under the `[mysqld]` section:

```
root@5721f675c819:/etc/mysql# vi my.cnf
```

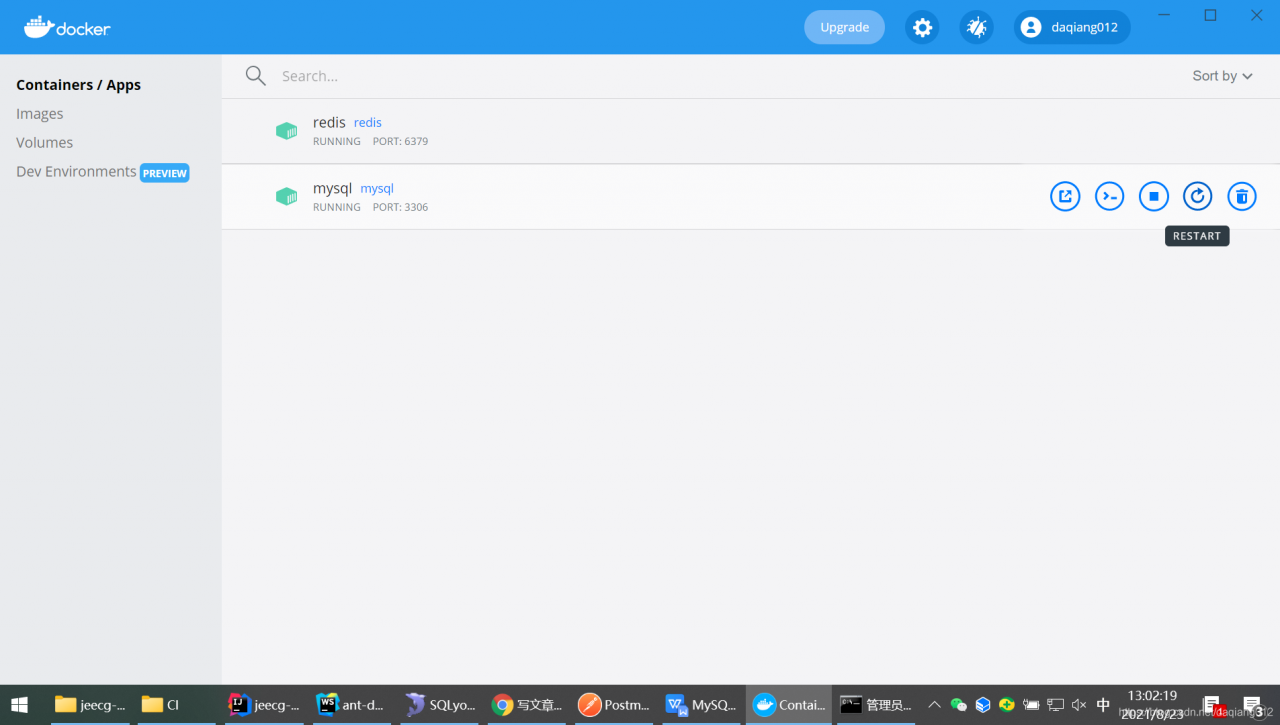
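Installing vim took several minutes in the transcript above. As an alternative (a substitute technique, not the method shown in the screenshots), the same edit can be made with `awk`, which should already be present in the Debian-based MySQL image. This is a minimal sketch, assuming `/etc/mysql/my.cnf` is the file to change; `set_lower_case_table_names` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: set_lower_case_table_names CNF_FILE
# Ensures "lower_case_table_names=1" appears exactly once, directly under
# the first [mysqld] header, without needing a text editor.
set_lower_case_table_names() {
  cnf="$1"
  tmp=$(mktemp) || return 1
  awk '
    # Drop any existing setting so it is neither duplicated nor left at 0
    /^[ \t]*lower_case_table_names[ \t]*=/ { next }
    { print }
    # Add the setting right after the first [mysqld] header
    /^[ \t]*\[mysqld\][ \t]*$/ && !added {
      print "lower_case_table_names=1"
      added = 1
    }
    # No [mysqld] section at all: append one at the end of the file
    END {
      if (!added) {
        print "[mysqld]"
        print "lower_case_table_names=1"
      }
    }
  ' "$cnf" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through cat so that a symlinked my.cnf keeps its link
  cat "$tmp" > "$cnf"
  rm -f "$tmp"
}
```

For example: `set_lower_case_table_names /etc/mysql/my.cnf && grep -n lower_case_table_names /etc/mysql/my.cnf`. Because existing `lower_case_table_names` lines are removed before the new one is added, running the helper twice leaves a single entry. It only changes the file; on MySQL 8.0 the server still refuses to start if this value differs from the one used when the data directory was initialized.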

6. Restart MySQL service:

Note: restart may fail.

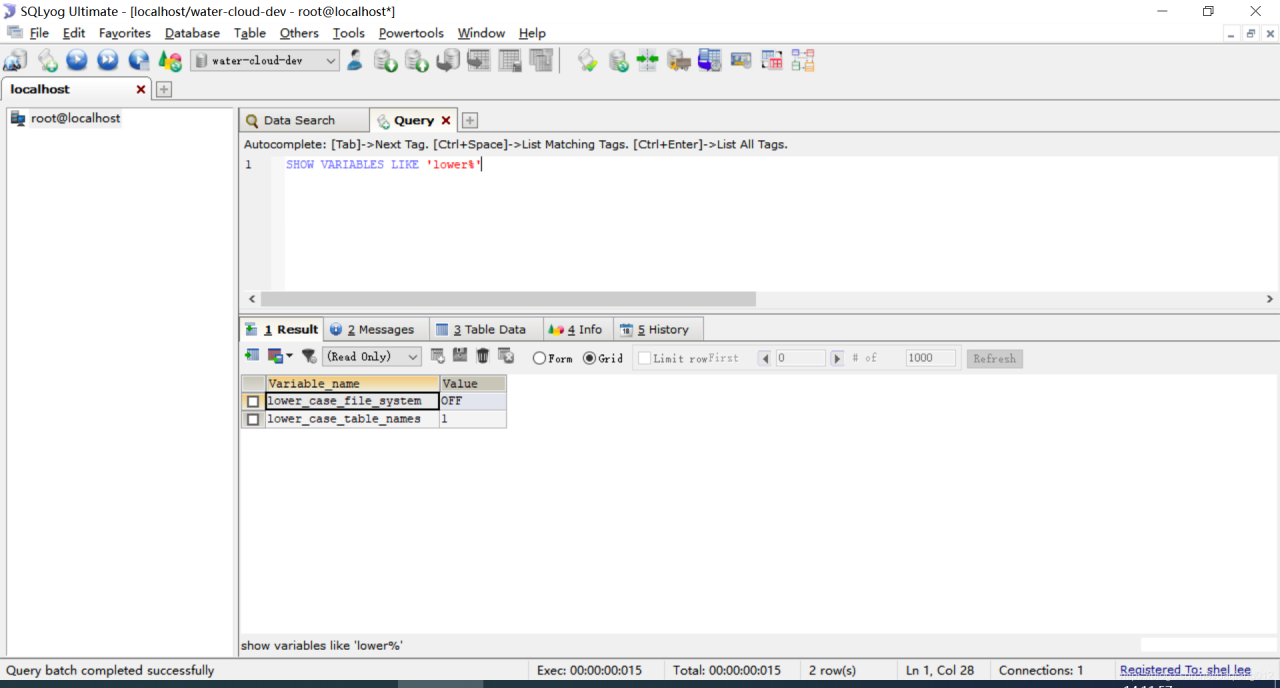

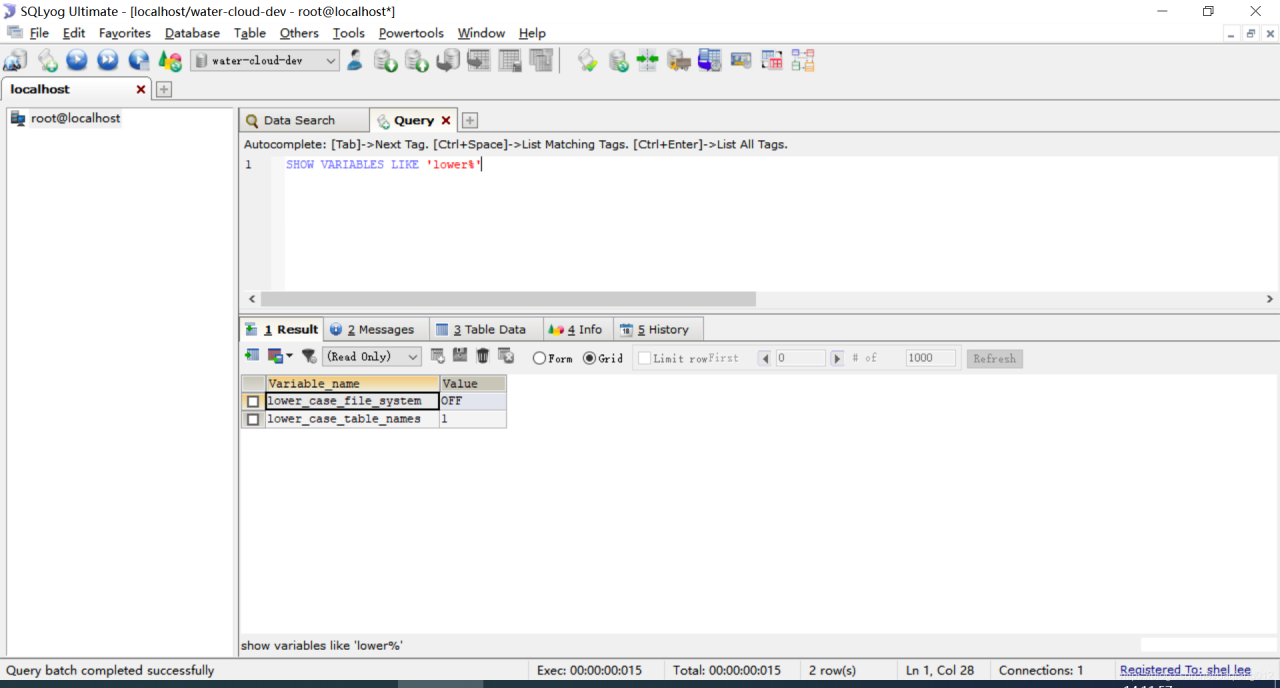

7. View modification results:
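To check the result, you can ask the running server for the variable and translate the number. The value meanings below follow the MySQL reference manual's description of `lower_case_table_names`; `describe_lower_case_table_names` is a hypothetical helper, and the `mysql` invocation in the example assumes the root password used earlier in this article.

```shell
#!/bin/sh
# Hypothetical helper: describe_lower_case_table_names VALUE
# Prints what a lower_case_table_names value means; returns 1 for
# anything other than 0, 1 or 2.
describe_lower_case_table_names() {
  case "$1" in
    0) echo "0: names stored as given, comparisons case-sensitive" ;;
    1) echo "1: names stored in lowercase, comparisons not case-sensitive" ;;
    2) echo "2: names stored as given, compared in lowercase" ;;
    *) echo "unexpected value: $1" >&2; return 1 ;;
  esac
}
```

For example, inside the container: `describe_lower_case_table_names "$(mysql -uroot -p123456 -N -e 'SELECT @@lower_case_table_names')"`. With a value of `1`, the uppercase `QRTZ_TRIGGERS` lookup is converted to lowercase and should find the lowercase Quartz tables.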