1.1 – The core problem solved by OpenFlow statistics (Counters, Timestamps): how to measure the duration of a flow

- How to make an operation run only once, when the first packet of a flow appears, and never again: make update_duration the default action of duration_table, and add a single entry whose match key is 0 and whose action is update_start_ts. For every packet, the start_ts register is read into a metadata field (my_metadata.tmp_ts). On the first packet the register still holds 0, so the entry matches and its action writes the current timestamp into the register. From then on the value read into the metadata is no longer 0, the entry never matches again, and only the default action runs.

- How to record the timestamp of the last packet of a flow: every packet of the flow overwrites the saved timestamp when it arrives, so the value left in the register is always the timestamp of the most recent packet, and in the end that of the last one.
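The two points above can be modelled outside the switch. The following Python sketch is only an illustration, not the demo's code: start_ts and last_ts are plain lists standing in for the P4 registers, and process_packet is a hypothetical helper name. It assumes a real timestamp is never 0, because 0 doubles as the "not seen yet" marker.

```python
N_FLOWS_ENTRIES = 8  # same size as the registers in the demo

# Plain lists standing in for the P4 registers; every cell starts at 0.
start_ts = [0] * N_FLOWS_ENTRIES
last_ts = [0] * N_FLOWS_ENTRIES


def process_packet(index: int, now: int) -> str:
    """Model one packet of flow `index` arriving at time `now` (hypothetical helper)."""
    tmp_ts = start_ts[index]      # read the register into "metadata"
    if tmp_ts == 0:               # the single table entry with match key 0
        start_ts[index] = now     # runs once: later reads are non-zero
        action = "update_start_ts"
    else:                         # table miss: default action
        action = "update_duration"
    last_ts[index] = now          # every packet overwrites the last timestamp
    return action


actions = [process_packet(2, t) for t in (100, 250, 400)]
print(actions)
print(start_ts[2], last_ts[2])
```

Only the first call takes the update_start_ts branch; the other two fall through to the default, while last_ts follows the most recent packet.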

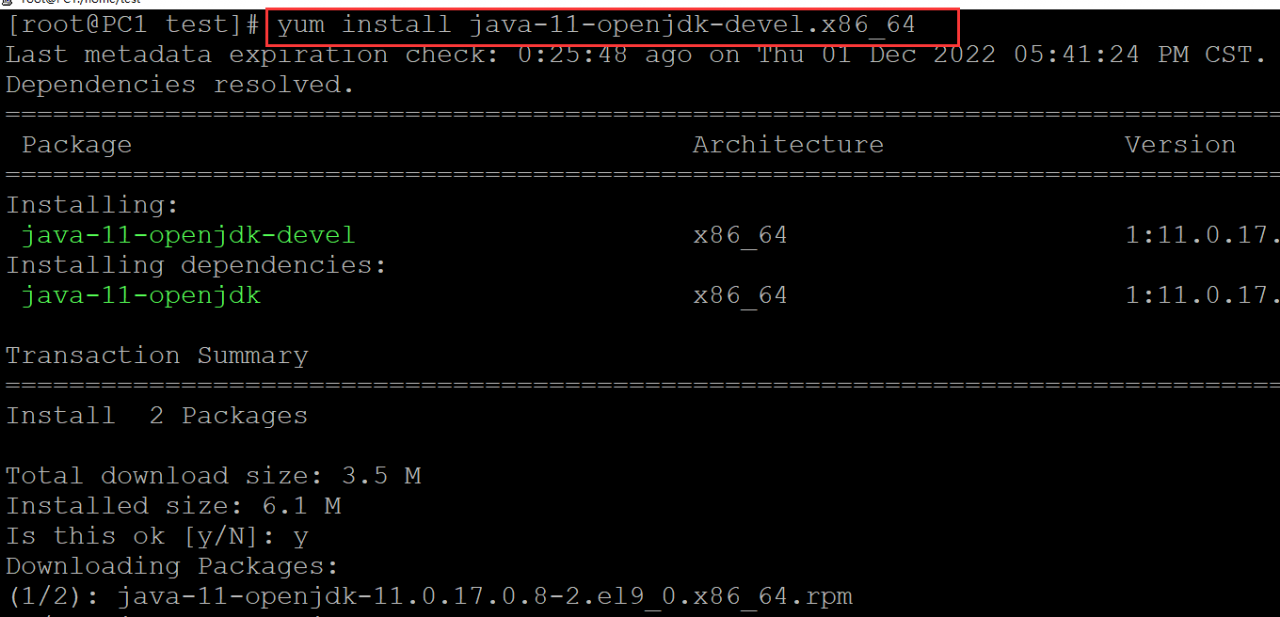

mafia-sdn/p4demos/demos/1-openflow/1.1-statistics/commands.txt

table_set_default counter_table _no_op

table_add counter_table do_count 10.0.0.1 10.0.0.2 => 0

table_add counter_table do_count 10.0.0.1 10.0.0.3 => 1

table_add counter_table do_count 10.0.0.2 10.0.0.1 => 2

table_add counter_table do_count 10.0.0.2 10.0.0.3 => 3

table_add counter_table do_count 10.0.0.3 10.0.0.1 => 4

table_add counter_table do_count 10.0.0.3 10.0.0.2 => 5

table_set_default duration_table update_duration

(Step 2: for every later packet the table misses, so the default action update_duration runs.)

table_add duration_table update_start_ts 0 =>

(Step 1: only the first packet of a flow matches this entry, which runs the action that stores the start time.)
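Read as data, the six counter_table entries above map an ordered (source, destination) pair to a register index, and any other pair falls through to the default action. A minimal Python sketch of that lookup follows; COUNTER_TABLE and lookup are illustrative names, not part of the demo.

```python
from itertools import permutations

HOSTS = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]

# permutations() yields the ordered pairs in the same order as commands.txt,
# so enumerate() reproduces the register indices 0..5.
COUNTER_TABLE = {pair: index for index, pair in enumerate(permutations(HOSTS, 2))}


def lookup(src: str, dst: str):
    """Return ("do_count", index) on a hit, or the default action on a miss."""
    index = COUNTER_TABLE.get((src, dst))
    if index is None:
        return ("_no_op", None)
    return ("do_count", index)


print(lookup("10.0.0.2", "10.0.0.3"))
print(lookup("10.0.0.1", "10.0.0.9"))
```

Each direction of a host pair gets its own index, so traffic from 10.0.0.1 to 10.0.0.2 and the return traffic are counted separately.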

mafia-sdn/p4demos/demos/1-openflow/1.1-statistics/p4src/includes/counters.p4

#define TABLE_INDEX_WIDTH 3 // Number of bits to index the duration register

#define N_FLOWS_ENTRIES 8 // Number of entries for flow (2^3)

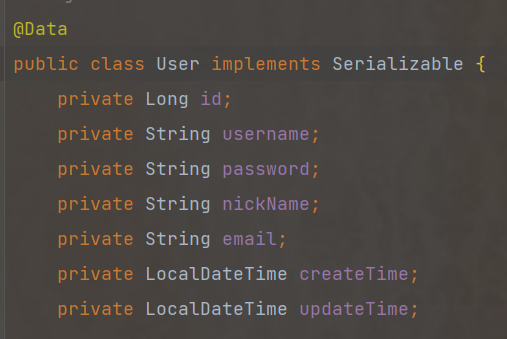

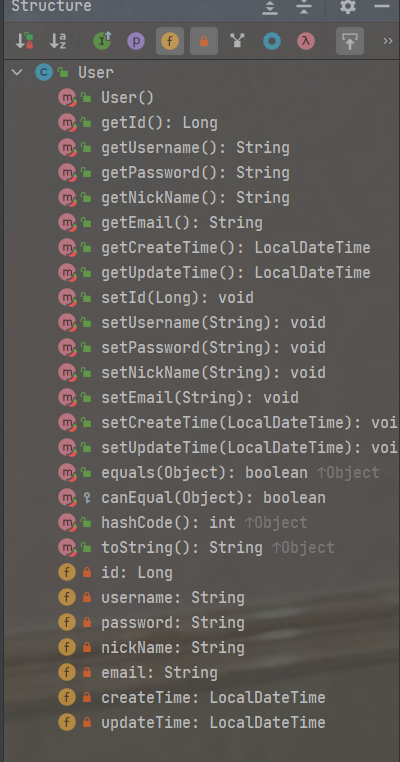

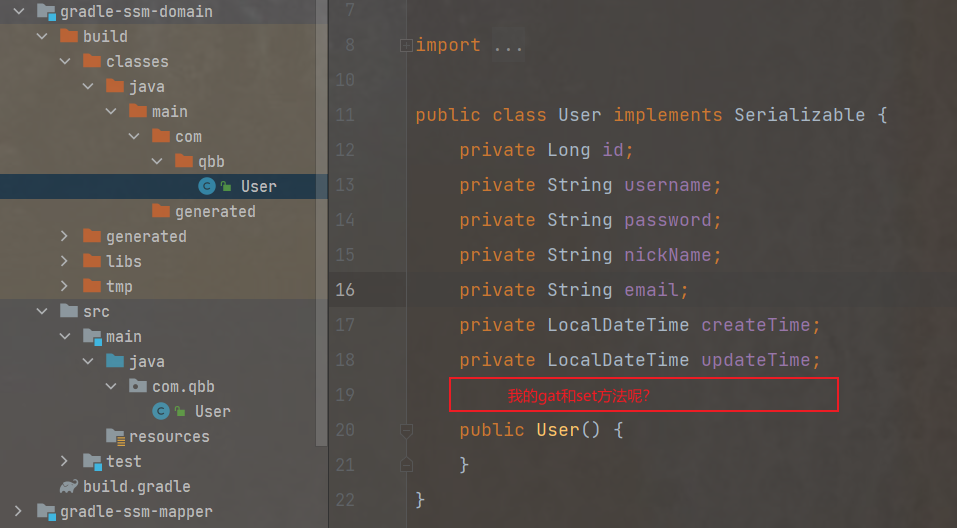

header_type my_metadata_t {

fields {

nhop_ipv4: 32;

pkt_ts: 48; // Loaded with intrinsic metadata: ingress_global_timestamp

tmp_ts: 48; // Temporary variable to load start_ts

pkt_count: 32;

byte_count: 32;

register_index: TABLE_INDEX_WIDTH;

}

}

metadata my_metadata_t my_metadata;

register my_byte_counter {

width: 32;

instance_count: N_FLOWS_ENTRIES;

}

register my_packet_counter {

width: 32;

instance_count: N_FLOWS_ENTRIES;

}

register start_ts{

width: 48;

instance_count: N_FLOWS_ENTRIES;

}

register last_ts{

width: 48;

instance_count: N_FLOWS_ENTRIES;

}

register flow_duration{ // Optional register: the duration can be derived from the two timestamps

width: 48;

static: duration_table;

instance_count: N_FLOWS_ENTRIES;

}
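As the comment on flow_duration notes, the duration can be recomputed from start_ts and last_ts. Here is a small Python sketch of that arithmetic, assuming the subtraction wraps at the 48-bit register width. The helper name and the sample values are made up for illustration.

```python
TS_WIDTH = 48                      # width of the timestamp registers, in bits
TS_MASK = (1 << TS_WIDTH) - 1


def derive_duration(start_ts: int, last_ts: int) -> int:
    """Difference of two 48-bit timestamps, wrapped to 48 bits."""
    return (last_ts - start_ts) & TS_MASK


print(derive_duration(1_000, 4_500))     # 3500
print(derive_duration(TS_MASK - 9, 5))   # 15: the timestamp counter wrapped once
```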

mafia-sdn/p4demos/demos/1-openflow/1.1-statistics/p4src/of.p4

#include "includes/headers.p4"

#include "includes/parser.p4"

#include "includes/counters.p4"

// Note: despite its name, _no_op drops the packet, exactly like _drop below.

action _no_op() {

drop();

}

action _drop() {

drop();

}

// Action and table to count packets and bytes. Also load the start_ts to be matched against next table.

action do_count(entry_index) {

modify_field(my_metadata.register_index, entry_index); // Save the register index

modify_field(my_metadata.pkt_ts, intrinsic_metadata.ingress_global_timestamp); // Load packet timestamp in custom metadata

// Update packet counter (read + add + write)

register_read(my_metadata.pkt_count, my_packet_counter, my_metadata.register_index);

add_to_field(my_metadata.pkt_count, 1);

register_write(my_packet_counter, my_metadata.register_index, my_metadata.pkt_count);

// Update byte counter (read + add + write)

register_read(my_metadata.byte_count, my_byte_counter, my_metadata.register_index);

add_to_field(my_metadata.byte_count, standard_metadata.packet_length);

register_write(my_byte_counter, my_metadata.register_index, my_metadata.byte_count);

// Can't do the following if register start_ts is associated with another table (e.g. duration_table)...

// Semantic error: "static counter start_ts assigned to table duration_table cannot be referenced in an action called by table counter_table"

// Read the register value into my_metadata.tmp_ts. The first read returns 0, so the packet matches the entry in the next table (duration_table); every later read is non-zero, so only the default action runs.

register_read(my_metadata.tmp_ts, start_ts, entry_index); // Read the start ts for the flow

}

table counter_table {

reads {

ipv4.srcAddr : exact;

ipv4.dstAddr : exact;

}

actions {

do_count;

_no_op;

}

size : 1024;

}

// Action and table to update the start and end timestamp of the flow.

// Optionally, the duration can as well be stored in a register.

// Action is called only when start_ts=0 (value loaded into my_metadata by the do_count action)

action update_start_ts(){

register_write(start_ts, my_metadata.register_index, my_metadata.pkt_ts); // Update start_ts

update_duration(); // tmp_ts is still 0 here, so the duration stored for the first packet equals pkt_ts

}

// Default action: update only the timestamp of the last matched packet and the current duration.

action update_duration(){

register_write(last_ts, my_metadata.register_index, my_metadata.pkt_ts); // Update ts of the last seen packet

subtract_from_field(my_metadata.pkt_ts, my_metadata.tmp_ts); // Calculate duration

register_write(flow_duration, my_metadata.register_index, my_metadata.pkt_ts); // Optional: save duration in stateful register

}

table duration_table{

reads{

my_metadata.tmp_ts : exact;

}

actions{

update_start_ts;

update_duration;

}

}

// Note: duration_table is applied to every packet, including those that miss in counter_table.

control ingress {

apply(counter_table);

apply(duration_table);

}

table table_drop {

actions {

_drop;

}

}

control egress {

apply(table_drop);

}
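To see how the two ingress tables cooperate, the sketch below replays a few packets through a Python model of do_count followed by duration_table. It is a simplified stand-in for the P4 program, assuming all registers start at 0. The Switch class, its method names and the packet values are invented for the example.

```python
class Switch:
    """Toy model of the registers and the two ingress tables."""

    def __init__(self, n_entries: int = 8):
        self.pkt_count = [0] * n_entries
        self.byte_count = [0] * n_entries
        self.start_ts = [0] * n_entries
        self.last_ts = [0] * n_entries
        self.duration = [0] * n_entries

    def ingress(self, index: int, length: int, now: int) -> None:
        # do_count: read + add + write on both counters
        self.pkt_count[index] += 1
        self.byte_count[index] += length
        tmp_ts = self.start_ts[index]      # 0 only for the first packet

        # duration_table: entry for key 0, default action otherwise
        if tmp_ts == 0:
            self.start_ts[index] = now     # update_start_ts
        self.last_ts[index] = now          # update_duration
        self.duration[index] = now - tmp_ts


sw = Switch()
for length, now in [(100, 1_000), (60, 1_400), (1500, 2_500)]:
    sw.ingress(index=0, length=length, now=now)

print(sw.pkt_count[0], sw.byte_count[0])
print(sw.start_ts[0], sw.last_ts[0], sw.duration[0])
```

After the three packets the model holds 3 packets and 1660 bytes for flow 0, with a start time of 1000, a last-seen time of 2500 and a duration of 1500 time units.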