Error when starting Zeppelin: Zeppelin process died [FAILED]

This happens because ZEPPELIN_HOME is configured in the environment file /etc/profile, but the path it points to is wrong.

Zeppelin documentation:

Zeppelin · YuQue

Problem:

Download docker-compose:

curl -L "https://github.com/docker/compose/releases/download/1.25.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

Run:

docker-compose --version

Error reported:

Cannot open self /usr/local/bin/docker-compose or archive /usr/local/bin/docker-compose.pkg

Reason:

The downloaded file is incomplete because of network problems.

Solution:

Download the release file from GitHub on your local machine, and then upload it to the Linux server.
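One way to catch a truncated download before running it is to compare the file's SHA-256 digest with a known-good value. The sketch below is only an illustration: verify_sha256 is a hypothetical helper name, and where the expected digest comes from (for example, a checksum you computed on the machine where the download succeeded) is an assumption you need to settle for your own setup.

```shell
#!/bin/sh
# verify_sha256 FILE EXPECTED_HEX  (hypothetical helper, not part of docker-compose)
# Succeeds only if FILE exists and its SHA-256 digest equals EXPECTED_HEX.
verify_sha256() {
    file="$1"
    expected="$2"
    [ -f "$file" ] || return 1
    # sha256sum prints "<digest>  <file>"; keep only the digest
    actual=$(sha256sum "$file" | awk '{ print $1 }')
    [ "$actual" = "$expected" ]
}

# Example (the digest is a placeholder you must supply yourself):
#   verify_sha256 /usr/local/bin/docker-compose "<expected digest>" \
#       && chmod +x /usr/local/bin/docker-compose
```

If the check fails, the file on the server is not the same as the one you downloaded locally, so upload it again instead of running it.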

A Node.js request to an API from inside a container on the Docker server reports the error: getaddrinfo ENOTFOUND xxx.xxxx.com xxx.xxxx.com:443.

Reason:

The current server cannot resolve or reach the host you are requesting.

Solution:

1. Ping xxx.xxxx.com on the current server to see whether it responds. (If the ping fails because the name cannot be resolved, add an entry "xxxx xxx.xxxx.com" to the hosts file.)

2. If the ping succeeds, request the URL with curl to see whether the interface can be called.

3. If you call the interface by IP address, the call may fail because the default port is not open (80 is the default port for HTTP, 443 for HTTPS).

4. If the ping succeeds and curl on the server can call the interface, but the call still fails from the project, the hosts file was probably modified on the host but the change did not take effect inside the container. In that case, add the hosts entries inside the container, or pass the host's entries in when starting the container by adding $(cat /etc/hosts|awk -F ' ' '{if(NR>2){print "--add-host "$2":"$1}}') to the docker run command:

docker run --restart=always $(cat /etc/hosts|awk -F ' ' '{if(NR>2){print "--add-host "$2":"$1}}') -d -p <host-port>:<container-port> --name <container-name> <repository>/<image>
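The awk one-liner above can be wrapped in a small function so it is easier to check what it generates before handing it to docker run. This is a minimal sketch: hosts_to_add_host_flags is a hypothetical name, and skipping blank lines and comment lines is my own addition on top of the original NR>2 rule.

```shell
#!/bin/sh
# hosts_to_add_host_flags [HOSTS_FILE]  (hypothetical helper name)
# Prints one "--add-host name:ip" flag per hosts entry, skipping the first
# two lines (the localhost entries, as in the command above), blank lines
# and comment lines. Only the first host name on each line is used.
hosts_to_add_host_flags() {
    awk 'NR > 2 && NF >= 2 && $1 !~ /^#/ { print "--add-host " $2 ":" $1 }' "${1:-/etc/hosts}"
}

# Example: print the flags first, then use them unquoted so they split into
# separate arguments:
#   hosts_to_add_host_flags
#   docker run $(hosts_to_add_host_flags) -d <repository>/<image>
```

Printing the flags on their own first makes it obvious when a hosts file has an unexpected layout, for example fewer than two header lines.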

Recently I ran into a problem. When I redeployed a service in Docker, the service failed to start after I ran sh start.sh or docker-compose up -d, and a "device or resource busy" error was reported.

Then I ran docker ps to take a look: not only had the restart failed, the service that had been running before was gone as well.

This is because a redeployment first removes everything that existed before and then rebuilds it. The removal went through, but the new deployment did not start.

Take a look at the error reported at that time:

failed to remove root filesystem for 9b13c255d03757c456651506ada5566a5b5e5a87b851e3621dcejf823: remove /var/lib/docker/overlay/9b13c255d03757c456651506adaa2fb660f75a5b5e5a87b851e3621dce0a368d/merged: device or resource busy

"Device or resource busy" means that the device or resource is in use, so it cannot be deleted.

Then I went to the /var/lib/docker/overlay/9b13c255d03757c456651506adaa2fb660f75a5b5e5a87b851e3621dce0a368d/merged directory. It was empty, and it could not be deleted manually either.

Solution

1. Find the processes that are using this directory

Command: grep <ID> /proc/*/mounts

For example:

grep 9b13c255d03757c456651506adaa2fb660f75a5b5e5a87b851e3621dce0a368d /proc/*/mounts

The output shows that two processes are using it:

/proc/25086/mounts:overlay /data0/docker/overlay2/9b13c255d03757c456651506adaa2fb660f75a5b5e5a87b851e3621dce0a368d/merged overlay rw,relatime,lowerdir=/data0/docker/overlay2/l/F4BQHNVPHGRKWXFRUIHXUMAHZ2:/data0/docker/overlay2/l/.....

/proc/25065/mounts:overlay /data0/docker/overlay2/9b13c255d03757c456651506adaa2fb660f75a5b5e5a87b851e3621dce0a368d/merged overlay rw,relatime,lowerdir=/data0/docker/overlay2/l/......

2. Kill the processes

Command: kill -9 <PID>

For example:

kill -9 25086

kill -9 25065

Note: first check what each process is and whether it is safe to kill. Killing them all blindly may affect other services.

3. Delete the directory again; this time it succeeds

Command: rm -rf <path from the error message>

For example:

rm -rf /var/lib/docker/overlay/9b13c255d03757c456651506adaa2fb660f75a5b5e5a87b851e3621dce0a368d/merged

After deleting the directory, run sh start.sh or docker-compose up -d again, and the service starts successfully!
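Step 1 can be sketched as a small function that prints only the process IDs instead of the full mount lines, which makes it easier to inspect each process before killing anything. find_mount_holders is a hypothetical name, and the optional second argument (an alternative root instead of /proc) exists only so the function can be tried against test data.

```shell
#!/bin/sh
# find_mount_holders ID [PROC_ROOT]  (hypothetical helper name)
# Prints the PIDs whose mount table mentions ID, one per line, sorted.
# PROC_ROOT defaults to /proc; it is a parameter only for testing.
find_mount_holders() {
    id="$1"
    proc_root="${2:-/proc}"
    # grep -l lists the matching .../<pid>/mounts files;
    # awk extracts the <pid> path component from each file name
    grep -l -- "$id" "$proc_root"/[0-9]*/mounts 2>/dev/null \
        | awk -F/ '{ print $(NF-1) }' \
        | sort -un
}

# Example: show what each holder is before deciding whether to kill it
#   for pid in $(find_mount_holders <ID>); do ps -o pid,args -p "$pid"; done
```

Looking at the ps output first follows the note in step 2: it avoids killing a process that another service still depends on.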

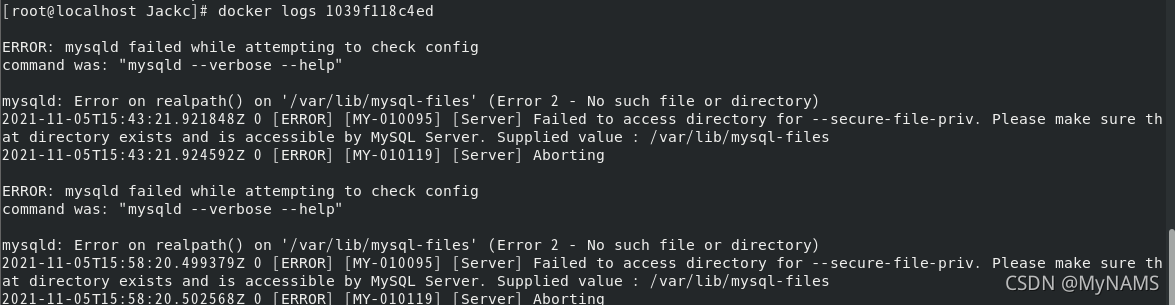

Problem description:

I ran into this pitfall while learning Docker: the tutorial video uses the MySQL 5.7 image, while I used a version above 8.0 (8.0.16).

Error code

Cause analysis:

The analysis starts at step 3 and goes step by step; if you only want the fix, skip straight to the solution.

1. Pull the image

I pulled a version above 8.0, which is why I hit this pitfall

docker pull mysql:8.0.16

2. View images

docker images

3. Run the container

docker run -p 3306:3306 --name mysql \
-v /mydata/mysql/log:/var/log/mysql \
-v /mydata/mysql/data:/var/lib/mysql \
-v /mydata/mysql/conf:/etc/mysql \
-e MYSQL_ROOT_PASSWORD=root -d mysql:8.0.16

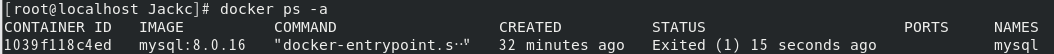

After running the command, a container ID is printed, but the container does not appear in the docker ps output.

4. View running containers

docker ps

5. View all containers

docker ps -a

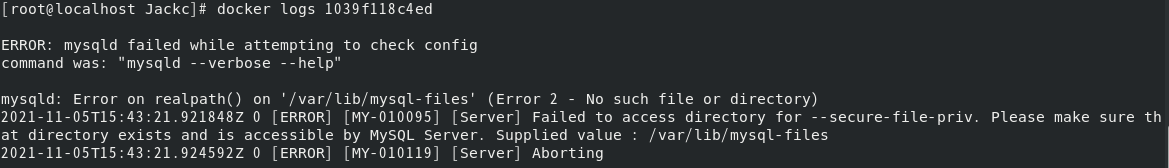

6. View the logs

docker logs <container-id>

7. Cause

The MySQL configuration location used when running the container is wrong. For MySQL 5.7 the configuration location is /etc/mysql; for MySQL 8.0 and above it is /etc/mysql/conf.d. Just adjust the configuration location to match the MySQL version.

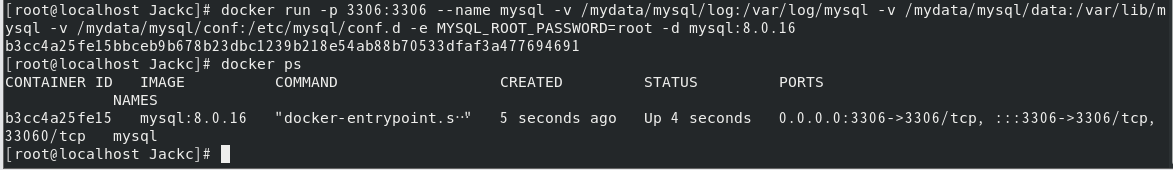

Solution:

1. Delete the container

docker rm <container ID or name>

2. Modify the container's run configuration

Change the conf mount as shown below

/mydata/mysql/conf:/etc/mysql

||

||

||

\/

/mydata/mysql/conf:/etc/mysql/conf.d

3. Rerun

Summary:

Configuration for MySQL 8.0 and above

docker run -p 3306:3306 --name mysql \
-v /mydata/mysql/log:/var/log/mysql \
-v /mydata/mysql/data:/var/lib/mysql \
-v /mydata/mysql/conf:/etc/mysql/conf.d \
-e MYSQL_ROOT_PASSWORD=root -d mysql:8.0.16

Configuration for MySQL 5.7

docker run -p 3306:3306 --name mysql \
-v /mydata/mysql/log:/var/log/mysql \
-v /mydata/mysql/data:/var/lib/mysql \
-v /mydata/mysql/conf:/etc/mysql \
-e MYSQL_ROOT_PASSWORD=root -d mysql:5.7
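The version rule from the summary can be written down as a tiny helper, so the mount target is derived from the image tag instead of being edited by hand. This is only a sketch of the rule as stated in this note (below 8: /etc/mysql, 8 and above: /etc/mysql/conf.d); mysql_conf_target is a hypothetical name, and non-numeric tags such as "latest" are deliberately rejected rather than guessed.

```shell
#!/bin/sh
# mysql_conf_target VERSION  (hypothetical helper name)
# Prints the in-container directory to mount the conf folder on,
# following the rule described in this note.
mysql_conf_target() {
    major=${1%%.*}                      # "8.0.16" -> "8"
    case "$major" in
        ''|*[!0-9]*) return 1 ;;        # not a numeric version, e.g. "latest"
    esac
    if [ "$major" -ge 8 ]; then
        echo /etc/mysql/conf.d
    else
        echo /etc/mysql
    fi
}

# Example:
#   ver=8.0.16
#   docker run -p 3306:3306 --name mysql \
#     -v /mydata/mysql/conf:"$(mysql_conf_target "$ver")" \
#     -e MYSQL_ROOT_PASSWORD=root -d "mysql:$ver"
```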

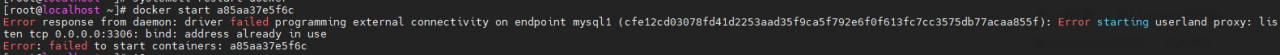

docker: Error response from daemon: driver failed programming external connectivity on endpoint mysql (3d8d89f260c9258467f589d4d7d0c27e33ab72d732d1115d1eb42d708606edc4):

At this point the container name is already taken by the container that was created but never started, so remove it first:

# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a57959032cc9 mysql:5.7 "docker-entrypoint.s…" About a minute ago Created mysql

# docker rm a57959032cc9

a57959032cc9

Error starting userland proxy: listen tcp4 0.0.0.0:3306: bind: address already in use.

The following shows which ports are in use:

# netstat -tanlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 948/sshd

tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 9217/mysqld

tcp 0 0 172.18.7.111:39412 100.100.45.106:443 TIME_WAIT -

tcp 0 0 172.18.7.111:22 58.100.92.78:9024 ESTABLISHED 6899/sshd: root@pts

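Check which process is occupying the port (here mysqld, PID 9217, is listening on 3306), and then stop it with kill <PID>. Note that kill takes the process ID, not the port number.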
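Picking the PID out of the netstat listing by eye is easy to get wrong, so here is a minimal sketch that does it with awk. pid_on_port is a hypothetical name; it assumes the column layout shown above (local address in column 4, state in column 6, PID/program in column 7) and reads the netstat output from standard input.

```shell
#!/bin/sh
# pid_on_port PORT  (hypothetical helper name)
# Reads `netstat -tanlp` output on stdin and prints the PID of every
# process in LISTEN state whose local address ends in ":PORT".
pid_on_port() {
    port="$1"
    awk -v p=":$port" '
        $6 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p {
            split($7, a, "/")   # "9217/mysqld" -> a[1] = "9217"
            print a[1]
        }'
}

# Example:
#   netstat -tanlp | pid_on_port 3306
```

Matching on ":PORT" including the colon keeps port 306 from matching 3306, and filtering on LISTEN ignores established connections that merely use the same port number.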

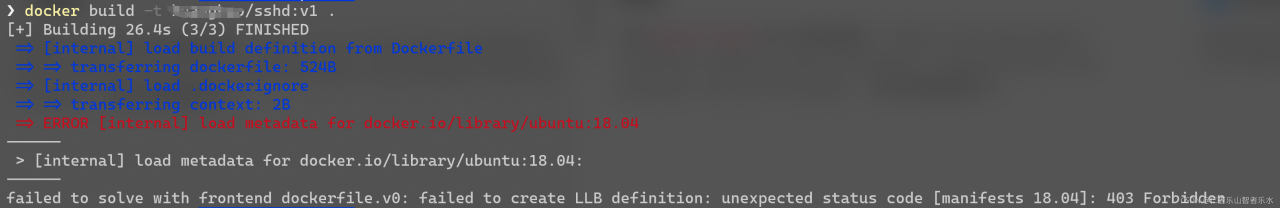

Preface

Building an image with the docker build command fails with: "failed to solve with frontend dockerfile.v0: failed to create LLB definition: unexpected status code [manifests 18.04]: 403 Forbidden". A screenshot of the error:

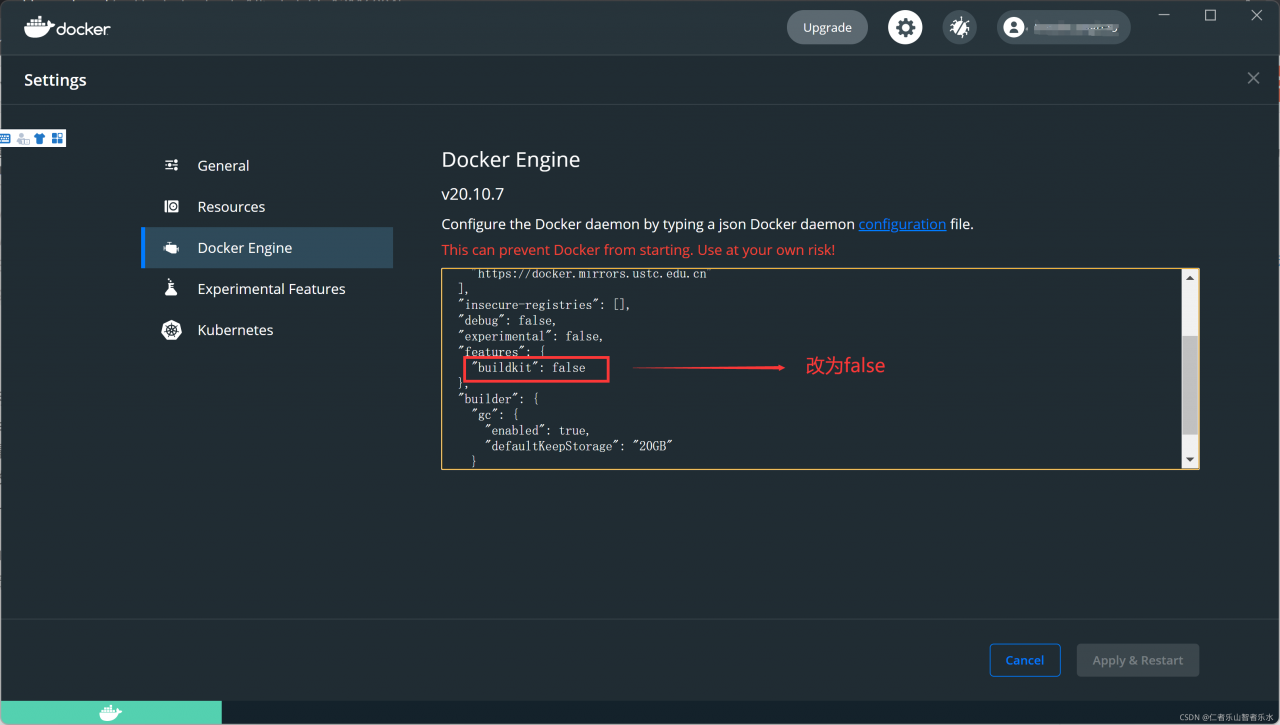

Solution

The error happens during the build and comes from BuildKit, which is still unstable. If you use Docker Desktop on Mac/Windows, you may also have to disable it in the "Docker Engine" JSON configuration:

Docker Desktop -> Settings -> Docker Engine -> change "features": {"buildkit": true} to "features": {"buildkit": false}

Note that this is not a fix; it is a workaround until the Docker team implements a proper fix. Try again when BuildKit is more stable.

Reference articles

GitHub issues

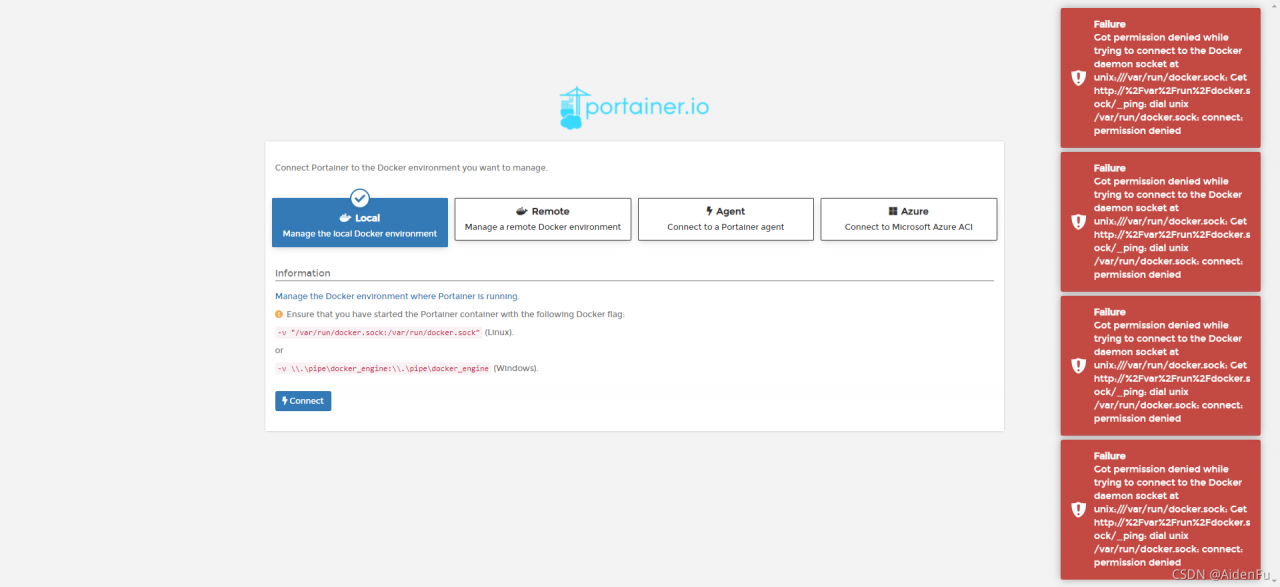

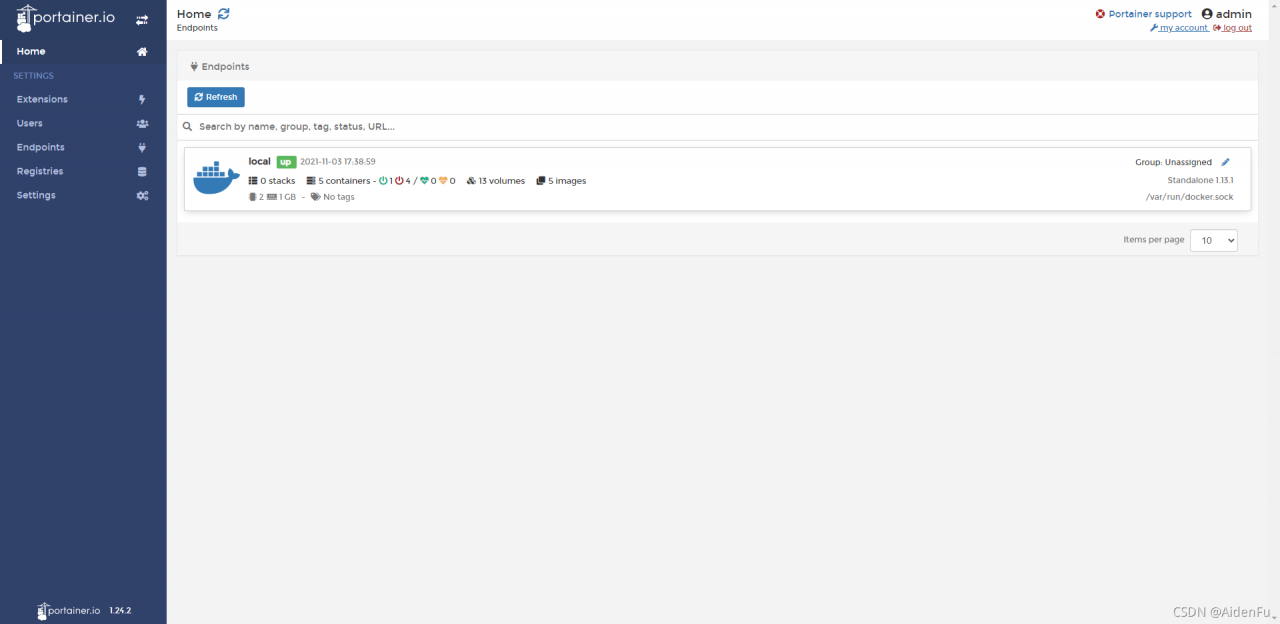

Container startup

[root@shusheng run]# docker run -d -p 9000:9000 --restart=always -v /var/run/docker.sock:/var/run/docker.sock --name prtainer-test portainer/portainer

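Accessing the Portainer UI

Resolving the connection error

Many articles found on Google say this is a permissions problem, but I had started the container as root.

It was only solved later, after I switched SELinux to permissive mode: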

[root@shusheng run]# setenforce 0

Successfully solved

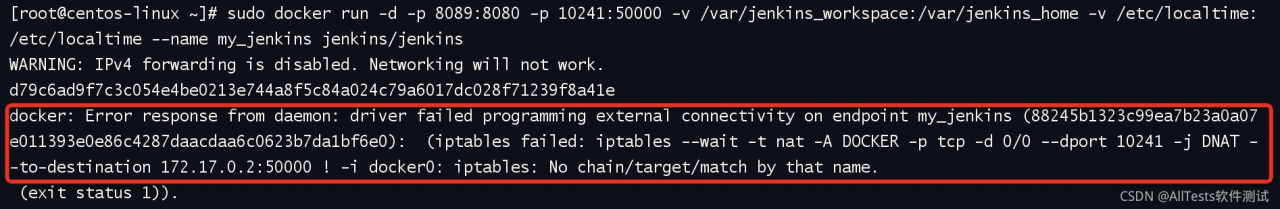

Error reported when starting Docker:

iptables failed: iptables --wait -t nat -A DOCKER -p tcp -d 0/0 --dport 10241 -j DNAT --to-destination 172.17.0.2:50000 ! -i docker0: iptables: No chain/target/match by that name.

(exit status 1)

Solution: restart Docker

systemctl restart docker

Symptom

Previously, an nginx image ran under Docker without any error, but when the image was started with k8s, the error nginx: [emerg] mkdir() "/var/cache/nginx/client_temp" failed (13: Permission denied) was reported. The error occurs only under a specific namespace. The Docker version on the working setup is 17.03.3-ce; the Docker version on the failing setup is 19.03.4, which uses the overlay2 storage driver.

Analysis

According to the error message, this is clearly a user permission problem. I had run into similar nginx permission problems before, but those were caused by the SELinux settings, and things returned to normal after SELinux was turned off. For how to do that, see "CentOS 7.x: disabling SELinux".

This time, startup under k8s still failed. I also found a blog post, "Unable to run NGINX Docker due to '13: Permission denied'", which suggests adding container_t to SELinux's permissive domains with the following commands, but that failed as well

semanage permissive -a container_t

semodule -l | grep permissive

Other attempts

In addition, I tried to solve the problem by configuring the security context for the pod or container. The securityContext configuration in the YAML was

securityContext:
  fsGroup: 1000
  runAsGroup: 1000
  runAsUser: 1000
  runAsNonRoot: true

Final solution

In the end, the only option was to build an nginx image that is started by a non-root user. Follow the project at https://github.com/nginxinc/docker-nginx-unprivileged to create your own image.

First check the user ID and group ID of the user that starts your pod. You can use id <username>, for example:

[deploy@host ~]$ id deploy

uid=1000(deploy) gid=1000(deploy) groups=1000(deploy),980(docker)

You need to change the UID and GID in the project's Dockerfile to the corresponding IDs of your user. My user ID and group ID are both 1000.

I also added a line that switches to the Alibaba Cloud mirror, otherwise building the image is particularly slow. You can add other custom settings yourself. Note that the image exposes port 8080 instead of port 80, because a non-root user cannot bind port 80 directly.
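Since the Dockerfile below declares UID and GID as build arguments (ARG UID=1000 and ARG GID=1000), the values can also be passed at build time instead of editing the file. The following is a small sketch of that idea; build_args_for_user is a hypothetical name, and it simply turns the output of id -u and id -g into --build-arg flags.

```shell
#!/bin/sh
# build_args_for_user USER  (hypothetical helper name)
# Prints "--build-arg UID=<uid> --build-arg GID=<gid>" for USER,
# or fails if the user does not exist.
build_args_for_user() {
    user="$1"
    uid=$(id -u "$user") || return 1
    gid=$(id -g "$user") || return 1
    echo "--build-arg UID=$uid --build-arg GID=$gid"
}

# Example (left unquoted on purpose so the flags split into arguments):
#   docker build $(build_args_for_user deploy) -t nginxinc/docker-nginx-unprivileged:latest .
```

This keeps the Dockerfile unchanged, which is handy when the same image has to be built for hosts where the deploy user has different IDs.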

Dockerfile:

#

# NOTE: THIS DOCKERFILE IS GENERATED VIA "update.sh"

#

# PLEASE DO NOT EDIT IT DIRECTLY.

#

ARG IMAGE=alpine:3.13

FROM $IMAGE

LABEL maintainer="NGINX Docker Maintainers <[email protected]>"

ENV NGINX_VERSION 1.20.1

ENV NJS_VERSION 0.5.3

ENV PKG_RELEASE 1

ARG UID=1000

ARG GID=1000

RUN set -x \

&& sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories \

# create nginx user/group first, to be consistent throughout docker variants

&& addgroup -g $GID -S nginx \

&& adduser -S -D -H -u $UID -h /var/cache/nginx -s /sbin/nologin -G nginx -g nginx nginx \

&& apkArch="$(cat /etc/apk/arch)" \

&& nginxPackages=" \

nginx=${NGINX_VERSION}-r${PKG_RELEASE} \

nginx-module-xslt=${NGINX_VERSION}-r${PKG_RELEASE} \

nginx-module-geoip=${NGINX_VERSION}-r${PKG_RELEASE} \

nginx-module-image-filter=${NGINX_VERSION}-r${PKG_RELEASE} \

nginx-module-njs=${NGINX_VERSION}.${NJS_VERSION}-r${PKG_RELEASE} \

" \

&& case "$apkArch" in \

x86_64|aarch64) \

# arches officially built by upstream

set -x \

&& KEY_SHA512="e7fa8303923d9b95db37a77ad46c68fd4755ff935d0a534d26eba83de193c76166c68bfe7f65471bf8881004ef4aa6df3e34689c305662750c0172fca5d8552a *stdin" \

&& apk add --no-cache --virtual .cert-deps \

openssl \

&& wget -O /tmp/nginx_signing.rsa.pub https://nginx.org/keys/nginx_signing.rsa.pub \

&& if [ "$(openssl rsa -pubin -in /tmp/nginx_signing.rsa.pub -text -noout | openssl sha512 -r)" = "$KEY_SHA512" ]; then \

echo "key verification succeeded!"; \

mv /tmp/nginx_signing.rsa.pub /etc/apk/keys/; \

else \

echo "key verification failed!"; \

exit 1; \

fi \

&& apk del .cert-deps \

&& apk add -X "https://nginx.org/packages/alpine/v$(egrep -o '^[0-9]+\.[0-9]+' /etc/alpine-release)/main" --no-cache $nginxPackages \

;; \

*) \

# we're on an architecture upstream doesn't officially build for

# let's build binaries from the published packaging sources

set -x \

&& tempDir="$(mktemp -d)" \

&& chown nobody:nobody $tempDir \

&& apk add --no-cache --virtual .build-deps \

gcc \

libc-dev \

make \

openssl-dev \

pcre-dev \

zlib-dev \

linux-headers \

libxslt-dev \

gd-dev \

geoip-dev \

perl-dev \

libedit-dev \

mercurial \

bash \

alpine-sdk \

findutils \

&& su nobody -s /bin/sh -c " \

export HOME=${tempDir} \

&& cd ${tempDir} \

&& hg clone https://hg.nginx.org/pkg-oss \

&& cd pkg-oss \

&& hg up ${NGINX_VERSION}-${PKG_RELEASE} \

&& cd alpine \

&& make all \

&& apk index -o ${tempDir}/packages/alpine/${apkArch}/APKINDEX.tar.gz ${tempDir}/packages/alpine/${apkArch}/*.apk \

&& abuild-sign -k ${tempDir}/.abuild/abuild-key.rsa ${tempDir}/packages/alpine/${apkArch}/APKINDEX.tar.gz \

" \

&& cp ${tempDir}/.abuild/abuild-key.rsa.pub /etc/apk/keys/ \

&& apk del .build-deps \

&& apk add -X ${tempDir}/packages/alpine/ --no-cache $nginxPackages \

;; \

esac \

# if we have leftovers from building, let's purge them (including extra, unnecessary build deps)

&& if [ -n "$tempDir" ]; then rm -rf "$tempDir"; fi \

&& if [ -n "/etc/apk/keys/abuild-key.rsa.pub" ]; then rm -f /etc/apk/keys/abuild-key.rsa.pub; fi \

&& if [ -n "/etc/apk/keys/nginx_signing.rsa.pub" ]; then rm -f /etc/apk/keys/nginx_signing.rsa.pub; fi \

# Bring in gettext so we can get `envsubst`, then throw

# the rest away. To do this, we need to install `gettext`

# then move `envsubst` out of the way so `gettext` can

# be deleted completely, then move `envsubst` back.

&& apk add --no-cache --virtual .gettext gettext \

&& mv /usr/bin/envsubst /tmp/ \

\

&& runDeps="$( \

scanelf --needed --nobanner /tmp/envsubst \

| awk '{ gsub(/,/, "\nso:", $2); print "so:" $2 }' \

| sort -u \

| xargs -r apk info --installed \

| sort -u \

)" \

&& apk add --no-cache $runDeps \

&& apk del .gettext \

&& mv /tmp/envsubst /usr/local/bin/ \

# Bring in tzdata so users could set the timezones through the environment

# variables

&& apk add --no-cache tzdata \

# Bring in curl and ca-certificates to make registering on DNS SD easier

&& apk add --no-cache curl ca-certificates \

# forward request and error logs to docker log collector

&& ln -sf /dev/stdout /var/log/nginx/access.log \

&& ln -sf /dev/stderr /var/log/nginx/error.log \

# create a docker-entrypoint.d directory

&& mkdir /docker-entrypoint.d

# implement changes required to run NGINX as an unprivileged user

RUN sed -i 's,listen 80;,listen 8080;,' /etc/nginx/conf.d/default.conf \

&& sed -i '/user nginx;/d' /etc/nginx/nginx.conf \

&& sed -i 's,/var/run/nginx.pid,/tmp/nginx.pid,' /etc/nginx/nginx.conf \

&& sed -i "/^http {/a \ proxy_temp_path /tmp/proxy_temp;\n client_body_temp_path /tmp/client_temp;\n fastcgi_temp_path /tmp/fastcgi_temp;\n uwsgi_temp_path /tmp/uwsgi_temp;\n scgi_temp_path /tmp/scgi_temp;\n" /etc/nginx/nginx.conf \

# nginx user must own the cache and etc directory to write cache and tweak the nginx config

&& chown -R $UID:0 /var/cache/nginx \

&& chmod -R g+w /var/cache/nginx \

&& chown -R $UID:0 /etc/nginx \

&& chmod -R g+w /etc/nginx

COPY docker-entrypoint.sh /

COPY 10-listen-on-ipv6-by-default.sh /docker-entrypoint.d

COPY 20-envsubst-on-templates.sh /docker-entrypoint.d

COPY 30-tune-worker-processes.sh /docker-entrypoint.d

RUN chmod 755 /docker-entrypoint.sh \

&& chmod 755 /docker-entrypoint.d/*.sh

ENTRYPOINT ["/docker-entrypoint.sh"]

EXPOSE 8080

STOPSIGNAL SIGQUIT

USER $UID

CMD ["nginx", "-g", "daemon off;"]

10-listen-on-ipv6-by-default.sh:

#!/bin/sh

# vim:sw=4:ts=4:et

set -e

ME=$(basename $0)

DEFAULT_CONF_FILE="etc/nginx/conf.d/default.conf"

# check if we have ipv6 available

if [ ! -f "/proc/net/if_inet6" ]; then

echo >&3 "$ME: info: ipv6 not available"

exit 0

fi

if [ ! -f "/$DEFAULT_CONF_FILE" ]; then

echo >&3 "$ME: info: /$DEFAULT_CONF_FILE is not a file or does not exist"

exit 0

fi

# check if the file can be modified, e.g. not on a r/o filesystem

touch /$DEFAULT_CONF_FILE 2>/dev/null || { echo >&3 "$ME: info: can not modify /$DEFAULT_CONF_FILE (read-only file system?)"; exit 0; }

# check if the file is already modified, e.g. on a container restart

grep -q "listen \[::]\:8080;" /$DEFAULT_CONF_FILE && { echo >&3 "$ME: info: IPv6 listen already enabled"; exit 0; }

if [ -f "/etc/os-release" ]; then

. /etc/os-release

else

echo >&3 "$ME: info: can not guess the operating system"

exit 0

fi

echo >&3 "$ME: info: Getting the checksum of /$DEFAULT_CONF_FILE"

case "$ID" in

"debian")

CHECKSUM=$(dpkg-query --show --showformat='${Conffiles}\n' nginx | grep $DEFAULT_CONF_FILE | cut -d' ' -f 3)

echo "$CHECKSUM /$DEFAULT_CONF_FILE" | md5sum -c - >/dev/null 2>&1 || {

echo >&3 "$ME: info: /$DEFAULT_CONF_FILE differs from the packaged version"

exit 0

}

;;

"alpine")

CHECKSUM=$(apk manifest nginx 2>/dev/null| grep $DEFAULT_CONF_FILE | cut -d' ' -f 1 | cut -d ':' -f 2)

echo "$CHECKSUM /$DEFAULT_CONF_FILE" | sha1sum -c - >/dev/null 2>&1 || {

echo >&3 "$ME: info: /$DEFAULT_CONF_FILE differs from the packaged version"

exit 0

}

;;

*)

echo >&3 "$ME: info: Unsupported distribution"

exit 0

;;

esac

# enable ipv6 on default.conf listen sockets

sed -i -E 's,listen 8080;,listen 8080;\n listen [::]:8080;,' /$DEFAULT_CONF_FILE

echo >&3 "$ME: info: Enabled listen on IPv6 in /$DEFAULT_CONF_FILE"

exit 0

20-envsubst-on-templates.sh:

#!/bin/sh

set -e

ME=$(basename $0)

auto_envsubst() {

local template_dir="${NGINX_ENVSUBST_TEMPLATE_DIR:-/etc/nginx/templates}"

local suffix="${NGINX_ENVSUBST_TEMPLATE_SUFFIX:-.template}"

local output_dir="${NGINX_ENVSUBST_OUTPUT_DIR:-/etc/nginx/conf.d}"

local template defined_envs relative_path output_path subdir

defined_envs=$(printf '${%s} ' $(env | cut -d= -f1))

[ -d "$template_dir" ] || return 0

if [ ! -w "$output_dir" ]; then

echo >&3 "$ME: ERROR: $template_dir exists, but $output_dir is not writable"

return 0

fi

find "$template_dir" -follow -type f -name "*$suffix" -print | while read -r template; do

relative_path="${template#$template_dir/}"

output_path="$output_dir/${relative_path%$suffix}"

subdir=$(dirname "$relative_path")

# create a subdirectory where the template file exists

mkdir -p "$output_dir/$subdir"

echo >&3 "$ME: Running envsubst on $template to $output_path"

envsubst "$defined_envs" < "$template" > "$output_path"

done

}

auto_envsubst

exit 0

30-tune-worker-processes.sh:

#!/bin/sh

# vim:sw=2:ts=2:sts=2:et

set -eu

LC_ALL=C

ME=$( basename "$0" )

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

[ "${NGINX_ENTRYPOINT_WORKER_PROCESSES_AUTOTUNE:-}" ] || exit 0

touch /etc/nginx/nginx.conf 2>/dev/null || { echo >&2 "$ME: error: can not modify /etc/nginx/nginx.conf (read-only file system?)"; exit 0; }

ceildiv() {

num=$1

div=$2

echo $(( (num + div - 1)/div ))

}

get_cpuset() {

cpusetroot=$1

cpusetfile=$2

ncpu=0

[ -f "$cpusetroot/$cpusetfile" ] || return 1

for token in $( tr ',' ' ' < "$cpusetroot/$cpusetfile" ); do

case "$token" in

*-*)

count=$( seq $(echo "$token" | tr '-' ' ') | wc -l )

ncpu=$(( ncpu+count ))

;;

*)

ncpu=$(( ncpu+1 ))

;;

esac

done

echo "$ncpu"

}

get_quota() {

cpuroot=$1

ncpu=0

[ -f "$cpuroot/cpu.cfs_quota_us" ] || return 1

[ -f "$cpuroot/cpu.cfs_period_us" ] || return 1

cfs_quota=$( cat "$cpuroot/cpu.cfs_quota_us" )

cfs_period=$( cat "$cpuroot/cpu.cfs_period_us" )

[ "$cfs_quota" = "-1" ] && return 1

[ "$cfs_period" = "0" ] && return 1

ncpu=$( ceildiv "$cfs_quota" "$cfs_period" )

[ "$ncpu" -gt 0 ] || return 1

echo "$ncpu"

}

get_quota_v2() {

cpuroot=$1

ncpu=0

[ -f "$cpuroot/cpu.max" ] || return 1

cfs_quota=$( cut -d' ' -f 1 < "$cpuroot/cpu.max" )

cfs_period=$( cut -d' ' -f 2 < "$cpuroot/cpu.max" )

[ "$cfs_quota" = "max" ] && return 1

[ "$cfs_period" = "0" ] && return 1

ncpu=$( ceildiv "$cfs_quota" "$cfs_period" )

[ "$ncpu" -gt 0 ] || return 1

echo "$ncpu"

}

get_cgroup_v1_path() {

needle=$1

found=

foundroot=

mountpoint=

[ -r "/proc/self/mountinfo" ] || return 1

[ -r "/proc/self/cgroup" ] || return 1

while IFS= read -r line; do

case "$needle" in

"cpuset")

case "$line" in

*cpuset*)

found=$( echo "$line" | cut -d ' ' -f 4,5 )

break

;;

esac

;;

"cpu")

case "$line" in

*cpuset*)

;;

*cpu,cpuacct*|*cpuacct,cpu|*cpuacct*|*cpu*)

found=$( echo "$line" | cut -d ' ' -f 4,5 )

break

;;

esac

esac

done << __EOF__

$( grep -F -- '- cgroup ' /proc/self/mountinfo )

__EOF__

while IFS= read -r line; do

controller=$( echo "$line" | cut -d: -f 2 )

case "$needle" in

"cpuset")

case "$controller" in

cpuset)

mountpoint=$( echo "$line" | cut -d: -f 3 )

break

;;

esac

;;

"cpu")

case "$controller" in

cpu,cpuacct|cpuacct,cpu|cpuacct|cpu)

mountpoint=$( echo "$line" | cut -d: -f 3 )

break

;;

esac

;;

esac

done << __EOF__

$( grep -F -- 'cpu' /proc/self/cgroup )

__EOF__

case "${found%% *}" in

"/")

foundroot="${found##* }$mountpoint"

;;

"$mountpoint")

foundroot="${found##* }"

;;

esac

echo "$foundroot"

}

get_cgroup_v2_path() {

found=

foundroot=

mountpoint=

[ -r "/proc/self/mountinfo" ] || return 1

[ -r "/proc/self/cgroup" ] || return 1

while IFS= read -r line; do

found=$( echo "$line" | cut -d ' ' -f 4,5 )

done << __EOF__

$( grep -F -- '- cgroup2 ' /proc/self/mountinfo )

__EOF__

while IFS= read -r line; do

mountpoint=$( echo "$line" | cut -d: -f 3 )

done << __EOF__

$( grep -F -- '0::' /proc/self/cgroup )

__EOF__

case "${found%% *}" in

"")

return 1

;;

"/")

foundroot="${found##* }$mountpoint"

;;

"$mountpoint")

foundroot="${found##* }"

;;

esac

echo "$foundroot"

}

ncpu_online=$( getconf _NPROCESSORS_ONLN )

ncpu_cpuset=

ncpu_quota=

ncpu_cpuset_v2=

ncpu_quota_v2=

cpuset=$( get_cgroup_v1_path "cpuset" ) && ncpu_cpuset=$( get_cpuset "$cpuset" "cpuset.effective_cpus" ) || ncpu_cpuset=$ncpu_online

cpu=$( get_cgroup_v1_path "cpu" ) && ncpu_quota=$( get_quota "$cpu" ) || ncpu_quota=$ncpu_online

cgroup_v2=$( get_cgroup_v2_path ) && ncpu_cpuset_v2=$( get_cpuset "$cgroup_v2" "cpuset.cpus.effective" ) || ncpu_cpuset_v2=$ncpu_online

cgroup_v2=$( get_cgroup_v2_path ) && ncpu_quota_v2=$( get_quota_v2 "$cgroup_v2" ) || ncpu_quota_v2=$ncpu_online

ncpu=$( printf "%s\n%s\n%s\n%s\n%s\n" \

"$ncpu_online" \

"$ncpu_cpuset" \

"$ncpu_quota" \

"$ncpu_cpuset_v2" \

"$ncpu_quota_v2" \

| sort -n \

| head -n 1 )

sed -i.bak -r 's/^(worker_processes)(.*)$/# Commented out by '"$ME"' on '"$(date)"'\n#\1\2\n\1 '"$ncpu"';/' /etc/nginx/nginx.conf

docker-entrypoint.sh:

#!/bin/sh

# vim:sw=4:ts=4:et

set -e

if [ -z "${NGINX_ENTRYPOINT_QUIET_LOGS:-}" ]; then

exec 3>&1

else

exec 3>/dev/null

fi

if [ "$1" = "nginx" -o "$1" = "nginx-debug" ]; then

if /usr/bin/find "/docker-entrypoint.d/" -mindepth 1 -maxdepth 1 -type f -print -quit 2>/dev/null | read v; then

echo >&3 "$0: /docker-entrypoint.d/ is not empty, will attempt to perform configuration"

echo >&3 "$0: Looking for shell scripts in /docker-entrypoint.d/"

find "/docker-entrypoint.d/" -follow -type f -print | sort -V | while read -r f; do

case "$f" in

*.sh)

if [ -x "$f" ]; then

echo >&3 "$0: Launching $f";

"$f"

else

# warn on shell scripts without exec bit

echo >&3 "$0: Ignoring $f, not executable";

fi

;;

*) echo >&3 "$0: Ignoring $f";;

esac

done

echo >&3 "$0: Configuration complete; ready for start up"

else

echo >&3 "$0: No files found in /docker-entrypoint.d/, skipping configuration"

fi

fi

exec "$@"

Put these files in the same directory, and then run docker build -t nginxinc/docker-nginx-unprivileged:latest .

First, the error:

The error is as shown in the screenshot. At first I thought my command was wrong or something else was off, but that turned out not to be the case.

Still, I went through the possibilities one by one.

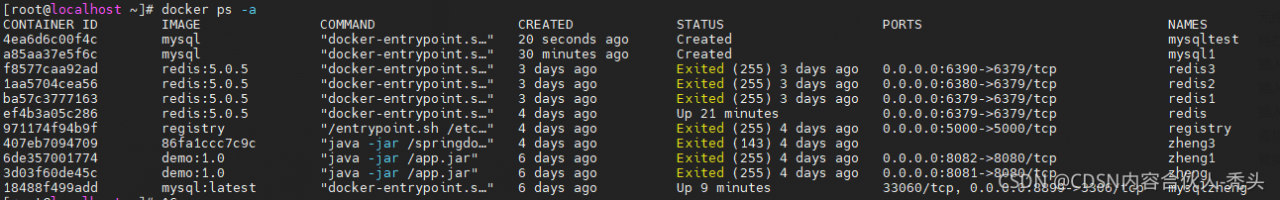

First, the command to create a MySQL container:

docker create -p 3306:3306 --name <container-name> -e MYSQL_ROOT_PASSWORD=<database-password> mysql:<version>

After creating it, run the command: docker ps -a

to view all containers

You should then see the MySQL container you just named

Start command:

docker start <container-ID>

This is where the error is reported. If you get the error, read on:

Troubleshooting:

The first option is to restart Docker. It did not help in my case, but here is the restart command:

systemctl restart docker

It may well work for you after the restart

The second option is to list all containers with docker ps -a. If you created a MySQL container before, then when you create another one you need to map a different host port instead of 3306. Make sure both the port and the name differ from those of the earlier MySQL container

You can also reuse the earlier MySQL container and start it directly with docker start <container-ID>

The following error occurred today when deploying a project with Docker:

unsupported Compose file version: 3.2

Searching suggests that it is a version problem:

https://stackoverflow.com/questions/58007968/unsupported-compose-file-version-x-x

Solution:

Upgrade Docker and docker-compose to the latest versions.
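Before upgrading, it can help to check whether the installed version is actually older than what the Compose file needs. Below is a minimal sketch of a version comparison; version_ge is a hypothetical name, it relies on sort -V (available in GNU coreutils and BusyBox), and the minimum version in the example is a placeholder you should look up in the Compose file version compatibility table rather than take from here.

```shell
#!/bin/sh
# version_ge A B  (hypothetical helper name)
# Succeeds if dotted version A is greater than or equal to version B.
version_ge() {
    # After a version-aware sort, the smaller version comes first;
    # A >= B exactly when that first line is B.
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example (MIN is an assumption, check the official compatibility table):
#   MIN="<minimum docker-compose version for your file format>"
#   CUR=$(docker-compose version --short)
#   version_ge "$CUR" "$MIN" || echo "docker-compose $CUR is too old, upgrade it"
```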