1. The problem

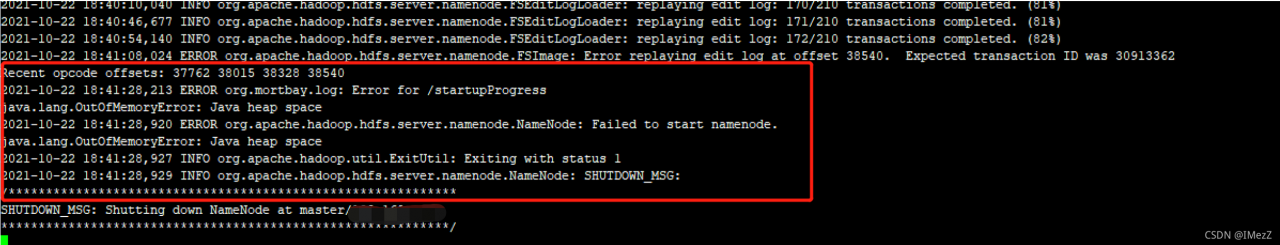

Symptom: after the Hadoop cluster was restarted, the NameNode reported an error and failed to start.

Error reported:

2. Analysis

The error report contains "java.lang.OutOfMemoryError: Java heap space", which points to the JVM heap settings. The relevant settings in the hadoop-env.sh configuration file were:

export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"

export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

As shown above, none of these options sets a heap size (there is no -Xms or -Xmx flag).
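To repeat this check on other nodes, one option is to scan hadoop-env.sh for daemon option lines that carry no -Xmx flag. The sketch below is only an illustration: missing_heap_opts is a hypothetical helper, and it assumes each export sits on a single uncommented line of the form export HADOOP_<ROLE>_OPTS=... as in the lines shown above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the name of every uncommented
# "export HADOOP_<ROLE>_OPTS=..." line in the given file that contains no -Xmx.
# Assumes one export per line; heap settings made elsewhere are not detected.
missing_heap_opts() {
  local file="$1" line
  local re='^export[[:space:]]+(HADOOP_[A-Z]+_OPTS)='
  while IFS= read -r line; do
    # Lines starting with "#" never match because the regex is anchored at "export".
    if [[ $line =~ $re ]]; then
      if [[ $line != *-Xmx* ]]; then
        printf '%s\n' "${BASH_REMATCH[1]}"
      fi
    fi
  done < "$file"
}
```

For the two lines shown above, missing_heap_opts "$HADOOP_CONF_DIR/hadoop-env.sh" would print HADOOP_NAMENODE_OPTS and HADOOP_DATANODE_OPTS. It does not account for a heap size applied globally, for example through HADOOP_HEAPSIZE.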

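Without an explicit setting, each HDFS daemon (NameNode, SecondaryNameNode, DataNode) runs with the default maximum heap of 1000 MB. The NameNode keeps the entire file system namespace and block map in memory, so a cluster with many files and blocks can outgrow this default.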
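In Hadoop 2.x, the 1000 MB figure comes from the launch scripts rather than from the JVM. The sketch below approximates, as an assumption, how those scripts derive the default -Xmx from the HADOOP_HEAPSIZE variable (a value in MB). java_heap_max is a hypothetical name, and Hadoop 3.x replaces HADOOP_HEAPSIZE with HADOOP_HEAPSIZE_MAX, so check the scripts shipped with your version.

```shell
#!/usr/bin/env bash
# Sketch (assumption, modeled on Hadoop 2.x launch scripts):
# print the default heap flag applied to every daemon.
# java_heap_max is a hypothetical helper, not a Hadoop function.
java_heap_max() {
  local heap="${HADOOP_HEAPSIZE:-}"
  if [ -n "$heap" ]; then
    # HADOOP_HEAPSIZE is interpreted as megabytes.
    echo "-Xmx${heap}m"
  else
    # Built-in default when HADOOP_HEAPSIZE is unset or empty.
    echo "-Xmx1000m"
  fi
}
```

Assuming the Hadoop 2.x layout, this default is placed before the per-daemon HADOOP_*_OPTS on the java command line, so an -Xmx in HADOOP_NAMENODE_OPTS overrides it for the NameNode only, whereas raising HADOOP_HEAPSIZE would enlarge the heap of every daemon.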

3. Solution

After changing the parameters as follows and restarting the cluster, the NameNode started successfully.

export HADOOP_NAMENODE_OPTS="-Xms4096m -Xmx4096m -Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"

export HADOOP_SECONDARYNAMENODE_OPTS="-Xms4096m -Xmx4096m -Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"

export HADOOP_DATANODE_OPTS="-Xms2048M -Xmx2048M -Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

Parameter description:

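-Xmx4096m: maximum heap size the JVM may use (here 4096 MB)

-Xms4096m: initial heap size (here 4096 MB); setting it equal to -Xmx makes the JVM start with the full heap instead of growing it later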
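If the same flag appears more than once in an options string, the HotSpot JVM generally honors the last occurrence, so an -Xmx appended at the end of a line silently overrides an earlier one. The sketch below reports which -Xmx value would take effect; effective_xmx is a hypothetical helper, and it assumes the options are separated by plain whitespace.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the value of the last -Xmx flag in an
# options string, i.e. the one the JVM would use. Prints an empty line if none.
effective_xmx() {
  local -a words
  local w last=""
  # Split on whitespace without glob expansion.
  read -r -a words <<< "$1"
  for w in "${words[@]}"; do
    case "$w" in
      -Xmx*) last="${w#-Xmx}" ;;  # later occurrences overwrite earlier ones
    esac
  done
  printf '%s\n' "$last"
}
```

For example, effective_xmx '-Xms2048M -Xmx2048M -Xmx4096m' prints 4096m, not 2048M, which makes a duplicated flag easy to spot before restarting a daemon.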

Reference: "HDFS memory configuration", by "Flowers not fully open * moon not full", cnblogs (Blog Park)
