Hadoop cluster: about the "Could not obtain block" error

If you hit the error above when accessing HDFS, the problem lies with a node.

Check three things: whether the firewall is turned off, whether the DataNode is running, and whether the data block is corrupted.

In my case it turned out to be the second cause: the DataNode was not running. Restart it from the command line on the affected host with `hadoop-daemon.sh start datanode`, then run `jps` to confirm that it has started.
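The DataNode check described above can be scripted. This is a minimal sketch, not a definitive procedure: `datanode_running` is a hypothetical helper name, and it assumes `jps` prints one `<pid> <main class>` pair per line. The web UI port 9870 mentioned in this post suggests Hadoop 3.x, where `hdfs --daemon start datanode` is the current form of the start command.

```shell
# Hypothetical helper: succeed if a DataNode process appears in `jps`
# output read from stdin (one "<pid> <main class>" pair per line).
datanode_running() {
    awk '$2 == "DataNode" { found = 1 } END { exit !found }'
}

# Usage on the affected host (requires Hadoop on PATH):
#   jps | datanode_running || hdfs --daemon start datanode      # Hadoop 3.x
#   jps | datanode_running || hadoop-daemon.sh start datanode   # Hadoop 2.x
```

Matching the second field exactly keeps `NameNode` and `SecondaryNameNode` from being mistaken for a DataNode.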

Then run the code again to see whether the error is gone.

A similar case: the DataNodes often go down on their own.

…

Open the NameNode web UI (host:9870) and you will find under Live Nodes that the other nodes never actually started.

In that case, restart and reformat the cluster.
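The live-node count shown on the web UI can also be read from the command line. The sketch below is only an illustration: `live_datanodes` is a hypothetical helper, and it assumes the output of `hdfs dfsadmin -report` contains a line of the form `Live datanodes (N):`.

```shell
# Hypothetical helper: print the number of live DataNodes found in
# `hdfs dfsadmin -report` output read from stdin.
# Assumes a line of the form "Live datanodes (N):"; prints nothing otherwise.
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}

# Usage (requires a running NameNode):
#   hdfs dfsadmin -report | live_datanodes
```

If the printed number is lower than the number of worker hosts, some DataNodes have not registered with the NameNode.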

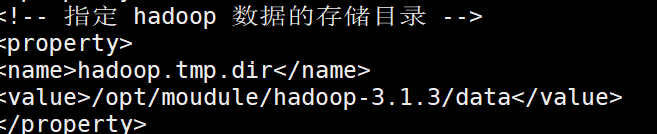

Find the HDFS data storage path in the configuration file,

then delete `$HADOOP_HOME/data/dfs/data/current` on every node.
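Rather than reading the configuration file by hand, the storage path can be queried with `hdfs getconf -confKey dfs.datanode.data.dir`. The following sketch assumes that property holds a comma-separated list of directories, each optionally written as a `file:` URI; `data_dirs` is a hypothetical helper name.

```shell
# Hypothetical helper: turn a dfs.datanode.data.dir value into local
# paths, one per line. Handles comma-separated lists and strips a
# leading "file:" scheme (file:/path or file:///path).
data_dirs() {
    printf '%s\n' "$1" | tr ',' '\n' | sed -e 's/^ *//' -e 's|^file:/*|/|'
}

# Usage on each node (dry run: only prints what would be removed):
#   for d in $(data_dirs "$(hdfs getconf -confKey dfs.datanode.data.dir)"); do
#       echo "would remove $d/current"
#   done
```

Printing the paths first is a deliberate choice, since deleting the `current` directory discards every block stored on that node.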

Then restart the Hadoop cluster (and turn off safe mode with `$HADOOP_HOME/bin/hdfs dfsadmin -safemode leave`).
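Safe mode only needs to be turned off if the NameNode is still in it after the restart. As a rough sketch, assuming `hdfs dfsadmin -safemode get` reports the state as `Safe mode is ON` or `Safe mode is OFF`, the check could look like this (`safemode_on` is a hypothetical helper):

```shell
# Hypothetical helper: succeed if `hdfs dfsadmin -safemode get` output
# read from stdin reports that safe mode is ON.
safemode_on() {
    grep -q 'Safe mode is ON'
}

# Usage (requires a running NameNode):
#   if hdfs dfsadmin -safemode get | safemode_on; then
#       hdfs dfsadmin -safemode leave
#   fi
```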

The web UI also shows that the data has been deleted.

I found that the old data directories were still listed, although their contents were gone,

so these corrupted data blocks need to be deleted as well.

Run `hdfs fsck` on the affected path to view the missing data blocks.

Adding the `-delete` option, as in `hdfs fsck <path> -delete`, removes the files whose blocks are corrupted.
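The fsck step can be guarded so that nothing is deleted from a healthy filesystem. This is a sketch under one assumption: that the fsck output ends with a summary line of the form `The filesystem under path '/' is HEALTHY` (or `CORRUPT`). The helper name `fsck_status` is hypothetical, and the root path `/` in the usage comments is only an example.

```shell
# Hypothetical helper: print HEALTHY or CORRUPT from the summary line of
# `hdfs fsck` output read from stdin; prints nothing if no summary is found.
fsck_status() {
    sed -n "s/^The filesystem under path '.*' is \([A-Z]*\).*/\1/p" | tail -n 1
}

# Usage (requires a running cluster):
#   hdfs fsck / -list-corruptfileblocks    # list files with corrupt blocks
#   if [ "$(hdfs fsck / | fsck_status)" = "CORRUPT" ]; then
#       hdfs fsck / -delete                # delete the corrupted files
#   fi
```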

Then upload the data again and rerun the job.
