Hadoop cluster: about the "Could not obtain block" error

If you hit the error above when accessing HDFS, it is usually a node problem.

Check three things: whether the firewall is turned off, whether the datanode is started, and whether any data blocks are damaged.
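The three checks above can be sketched as a small script. This is only a rough sketch under a few assumptions: the firewall is firewalld (as on CentOS), jps and hdfs are on the PATH, and the whole namespace is scanned from /. The check helper is a hypothetical name of mine, not part of Hadoop. Any tool that is not installed is skipped.

```shell
#!/bin/sh
# check LABEL COMMAND [ARGS...]: run COMMAND if it is installed,
# otherwise report that the check was skipped.
# (check is a hypothetical helper, not a Hadoop command.)
check() {
  label=$1
  shift
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "$label: skipped ($1 not found)"
    return 0
  fi
  echo "$label:"
  "$@" 2>&1 || true
}

# 1. Is the firewall running? (assumes firewalld)
check "firewall" firewall-cmd --state

# 2. Is the DataNode process up? jps lists running JVMs by class name.
check "java processes" jps

# 3. Are any blocks corrupt? Scans everything under / (assumed path).
check "corrupt blocks" hdfs fsck / -list-corruptfileblocks
```

Run it on the host that the error message points at; a DataNode line missing from the jps listing corresponds to the second check failing.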

In my case the check showed it was the second problem: the datanode was not running. Restart it on the affected host from the command line with hadoop-daemon.sh start datanode, then run jps to confirm that it has started.
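A minimal sketch of the restart-and-verify step. The web UI port 9870 mentioned later suggests Hadoop 3.x, where hdfs --daemon start datanode is the current form of hadoop-daemon.sh start datanode, so the sketch uses that and notes the older command in a comment. The has_datanode helper and the 5 second wait are my own assumptions.

```shell
#!/bin/sh
# has_datanode JPS_OUTPUT: succeed if the jps listing contains a DataNode.
# (hypothetical helper; jps prints one "<pid> <class name>" pair per line)
has_datanode() {
  printf '%s\n' "$1" | awk '$2 == "DataNode" { found = 1 } END { exit !found }'
}

# Only act on a host that actually has the Hadoop tools installed.
if command -v hdfs >/dev/null 2>&1 && command -v jps >/dev/null 2>&1; then
  if ! has_datanode "$(jps)"; then
    # Hadoop 3.x form; on Hadoop 2.x use: hadoop-daemon.sh start datanode
    hdfs --daemon start datanode
    sleep 5  # arbitrary wait so the JVM has time to come up
  fi
  if has_datanode "$(jps)"; then
    echo "DataNode is running"
  else
    echo "DataNode still not running; check the datanode log" >&2
  fi
fi
```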

Then run the code again to see whether the error is gone.

Similarly, datanodes often go down on their own.

…

Open the NameNode web UI (host:9870) and look at Live Nodes: you may find that the other nodes never really started.
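The same information is available from the command line through hdfs dfsadmin -report. The sketch below pulls the live datanode count out of that report. It assumes the report contains a line of the form "Live datanodes (N):", which is what recent versions print; live_count is a hypothetical helper name.

```shell
#!/bin/sh
# live_count REPORT_TEXT: extract N from a "Live datanodes (N):" line.
# (hypothetical helper; prints nothing if no such line is present)
live_count() {
  printf '%s\n' "$1" | sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p'
}

# Only query the cluster when the hdfs client is installed.
if command -v hdfs >/dev/null 2>&1; then
  report=$(hdfs dfsadmin -report 2>/dev/null)
  echo "live datanodes: $(live_count "$report")"
fi
```

If the count is lower than the number of worker hosts, the missing ones are the nodes to restart.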

In that case, restart and reformat.

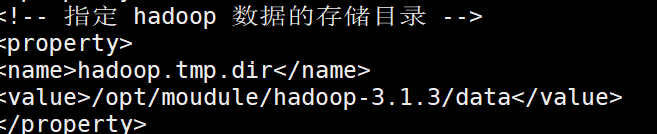

Find the HDFS data storage path in the configuration file.

On every node, delete $HADOOP_HOME/data/dfs/data/current,

then restart the Hadoop cluster (to turn off safe mode, run $HADOOP_HOME/bin/hdfs dfsadmin -safemode leave).
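The whole reset can be sketched as one script. Because it destroys data, this sketch only prints the commands unless DRY_RUN=0 is set. Several things here are assumptions of mine: the hostnames node1 to node3, the default install location /opt/hadoop, the same $HADOOP_HOME on every node, passwordless ssh, and reading "reformat" as hdfs namenode -format. The run and reset_hdfs helpers are hypothetical names.

```shell
#!/bin/sh
# Dry run by default: commands are printed, not executed.
# Set DRY_RUN=0 to really run them.
DRY_RUN=${DRY_RUN:-1}
HADOOP_HOME=${HADOOP_HOME:-/opt/hadoop}   # assumed install location
NODES=${NODES:-"node1 node2 node3"}       # hypothetical hostnames

# run COMMAND [ARGS...]: print the command in dry-run mode, else execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

reset_hdfs() {
  run "$HADOOP_HOME/sbin/stop-dfs.sh"
  for node in $NODES; do
    # WARNING: this removes every block stored on that datanode.
    run ssh "$node" "rm -rf $HADOOP_HOME/data/dfs/data/current"
  done
  # Assumed meaning of "reformat"; this wipes the namespace metadata.
  run "$HADOOP_HOME/bin/hdfs" namenode -format
  run "$HADOOP_HOME/sbin/start-dfs.sh"
  run "$HADOOP_HOME/bin/hdfs" dfsadmin -safemode leave
}

reset_hdfs
```

Review the printed commands first, and only rerun with DRY_RUN=0 once the paths and hostnames match your cluster.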

The web UI also shows that the data has been deleted.

I found that the old data directories were still listed, but their contents were lost.

These damaged data blocks need to be deleted as well.

Run hdfs fsck to view the missing data blocks,

then run hdfs fsck with -delete to delete the damaged data blocks.
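A hedged sketch of the fsck step. hdfs fsck needs a path argument; the sketch assumes / (the whole filesystem) and relies on the summary line ending in "is HEALTHY" or "is CORRUPT". Note that -delete removes the files that own the corrupt blocks, not just the blocks, so the sketch prints that command instead of running it. fsck_healthy is a hypothetical helper name.

```shell
#!/bin/sh
# fsck_healthy REPORT_TEXT: succeed if the fsck summary says HEALTHY.
# (hypothetical helper)
fsck_healthy() {
  printf '%s\n' "$1" | grep -q "is HEALTHY"
}

TARGET=${TARGET:-/}   # assumed path; narrow it to the affected directory if known

# Only query the cluster when the hdfs client is installed.
if command -v hdfs >/dev/null 2>&1; then
  report=$(hdfs fsck "$TARGET" 2>/dev/null)
  if fsck_healthy "$report"; then
    echo "$TARGET is healthy, nothing to delete"
  else
    # Show which files have missing or corrupt blocks.
    hdfs fsck "$TARGET" -list-corruptfileblocks
    # Deleting is left to the operator because it cannot be undone.
    echo "to delete the affected files run: hdfs fsck $TARGET -delete"
  fi
fi
```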

Then upload the data again and rerun the code.