I have recently been developing a project with Scrapy.

The project crawls through a proxy.

Run locally, everything works and the data is crawled normally.

After deploying it to Gerapy on the server and running it there, it fails, and the logs show:

2022-10-08 17:03:24 [scrapy.downloadermiddlewares.retry] ERROR: Gave up retrying <GET https://www.xxx.com/en/product?o=100&p=100> (failed 3 times): User timeout caused connection failure: Getting https://www.xxx.com/en/product?o=100&p=100 took longer than 180.0 seconds..

2022-10-08 17:03:24 [xxx] ERROR: <twisted.python.failure.Failure twisted.internet.error.TimeoutError: User timeout caused connection failure: Getting https://www.xxx.com/en/product?o=100&p=100 took longer than 180.0 seconds..>

2022-10-08 17:03:24 [xxx] ERROR: TimeoutError on https://www.xxx.com/en/product?o=100&p=100

I suspected the proxy, so I removed it. The spider still worked fine locally.

After deploying to Gerapy and running it again, a different error was reported, and the logs showed:

2022-10-09 10:39:45 [scrapy.downloadermiddlewares.retry] DEBUG: Retrying <GET https://www.xxx.com/en/product?o=100&p=100> (failed 3 times): [<twisted.python.failure.Failure twisted.internet.error.ConnectionLost: Connection to the other side was lost in a non-clean fashion: Connection lost.>]

2022-10-09 10:39:56 [scrapy.downloadermiddlewares.retry] ERROR: Gave up retrying <GET https://www.xxx.com/en/product?o=100&p=100> (failed 3 times): [<twisted.python.failure.Failure twisted.internet.error.ConnectionLost: Connection to the other side was lost in a non-clean fashion: Connection lost.>]
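When comparing runs like the two above, it can help to tally which failure ended each request. The following is a minimal sketch, assuming the log lines follow the "Gave up retrying" format shown in the excerpts; the function names `failure_kind` and `summarize` are hypothetical helpers, not part of Scrapy.

```python
import re
from collections import Counter

# Matches the retry middleware's final "Gave up retrying" lines
# (format assumed from the log excerpts in this post).
GAVE_UP = re.compile(
    r"Gave up retrying <(?P<method>\w+) (?P<url>\S+)> "
    r"\(failed (?P<tries>\d+) times\): (?P<reason>.*)"
)
# Picks out a Twisted exception class name when the reason contains one.
TWISTED_ERROR = re.compile(r"twisted\.internet\.error\.(\w+)")


def failure_kind(reason):
    """Return the Twisted exception name if present, else the text before the first colon."""
    match = TWISTED_ERROR.search(reason)
    if match:
        return match.group(1)
    return reason.split(":", 1)[0].strip()


def summarize(lines):
    """Count requests that were given up on, grouped by failure kind."""
    counts = Counter()
    for line in lines:
        match = GAVE_UP.search(line)
        if match:
            counts[failure_kind(match.group("reason"))] += 1
    return counts
```

Passing an open log file to `summarize` (any iterable of lines works) gives a quick view of whether a run was dominated by timeouts or by lost connections. Lines logged at DEBUG level as "Retrying" are ignored, so each abandoned request is counted once.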

I found a suggested solution on Stack Overflow:

Add an errback method to the spider:

# Required imports
import scrapy
from scrapy.spidermiddlewares.httperror import HttpError
from twisted.internet.error import DNSLookupError
from twisted.internet.error import TimeoutError

# How to use: pass the errback when creating the request (inside a spider method)
yield scrapy.Request(url=url, meta={'dont_redirect': True}, dont_filter=True, callback=self.parse_list, errback=self.errback_work)

# Method definition (inside the spider class)
def errback_work(self, failure):
    # Log every failure, whatever its type
    self.logger.error(repr(failure))
    if failure.check(HttpError):
        # Non-2xx responses raised by the HttpError spider middleware
        response = failure.value.response
        self.logger.error('HttpError on %s', response.url)
    elif failure.check(DNSLookupError):
        # The original request is available on the failure
        request = failure.request
        self.logger.error('DNSLookupError on %s', request.url)
    elif failure.check(TimeoutError):
        request = failure.request
        self.logger.error('TimeoutError on %s', request.url)

After deploying to Gerapy and running it again, the errors were still reported. The errback only logs each failure; it does not prevent it.

Checking the installed Scrapy version, I found it was 2.6.1, so I downgraded to 2.5.1:

pip3 install scrapy==2.5.1

Running it locally now raised an error:

AttributeError: module 'OpenSSL.SSL' has no attribute 'SSLv3_METHOD'

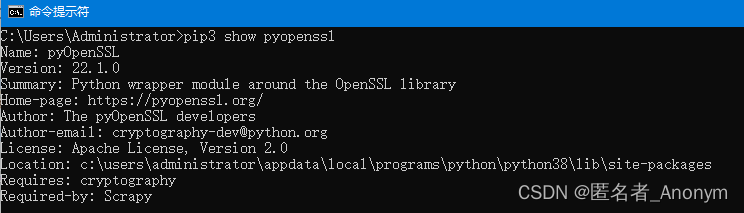

Check the installed version of the pyOpenSSL library:

pip3 show pyopenssl

The installed version turned out not to work with Scrapy 2.5.1.

So I changed the library version to 22.0.0:

pip3 install pyopenssl==22.0.0

Running it locally again, everything works!

After deploying it to Gerapy and running it there, it works as well!
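Because the fix came down to package versions, it may be worth checking what each environment actually has installed. Below is a minimal sketch using the standard library's `importlib.metadata` (Python 3.8+); the helper names `installed_version` and `report` are hypothetical, and the package list only covers the two libraries discussed in this post.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def report(packages=("Scrapy", "pyOpenSSL")):
    """Map each package name to its installed version (None when not installed)."""
    return {name: installed_version(name) for name in packages}


if __name__ == "__main__":
    for name, version in report().items():
        print(f"{name}: {version or 'not installed'}")
```

Running the same script locally and in the environment Gerapy deploys to makes it easy to spot a mismatch between the two.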
