Analysis of the existing crash dump logs shows that the error was reported by the systemui module. The relevant log lines are as follows:

Cmdline: com.android.systemui

pid: 3329, tid: 3329, name: ndroid.systemui >>> com.android.systemui <<<

The Summary section of the log shows that android.telephony.BinderCacheManager$BinderDeathTracker accounts for 50113 references. Together with the references held by other classes, the total exceeded the limit of 51200.

Summary:

50113 of android.telephony.BinderCacheManager$BinderDeathTracker (50113 unique instances)
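To see how close this single class comes to the limit, the Summary entry can be parsed and compared with 51200. This is a minimal sketch in plain Java; the class name `SummaryLine` is hypothetical, and it only understands entries shaped exactly like the one quoted above (`<count> of <class> (<n> unique instances)`), not every format a reference-table dump may contain.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** One parsed "Summary" entry, e.g. "50113 of some.Class (50113 unique instances)". */
final class SummaryLine {
    static final int GLOBAL_REF_LIMIT = 51200; // limit quoted in the crash report

    // Only matches entries shaped like "<count> of <class> (<n> unique instances)".
    private static final Pattern ENTRY =
            Pattern.compile("^\\s*(\\d+) of (\\S+) \\((\\d+) unique instances\\)\\s*$");

    final int count;
    final String className;
    final int uniqueInstances;

    private SummaryLine(int count, String className, int uniqueInstances) {
        this.count = count;
        this.className = className;
        this.uniqueInstances = uniqueInstances;
    }

    /** Returns the parsed entry, or null if the line is not in the expected shape. */
    static SummaryLine parse(String line) {
        Matcher m = ENTRY.matcher(line);
        if (!m.matches()) {
            return null;
        }
        return new SummaryLine(
                Integer.parseInt(m.group(1)), m.group(2), Integer.parseInt(m.group(3)));
    }

    /** References left for all other classes before the limit is reached. */
    int headroom() {
        return GLOBAL_REF_LIMIT - count;
    }

    /** Share of the limit used by this class alone, in percent. */
    double percentOfLimit() {
        return 100.0 * count / GLOBAL_REF_LIMIT;
    }
}
```

For the entry above, `headroom()` returns 1087: fewer than 1,100 references were left for every other class in the process combined.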

The adb log contains a message that is printed very frequently. It comes from the isVowifiAvailable() method in MobileSignalController.java in the systemui module:

39645766 20.08.2022 23:15:03.992 Main 3329 3446 LogcatInfo NetworkController.MobileSignalController(1) isVowifiAvailable,mVoWiFiSettingEnabled = falsemMMtelVowifi = false

In isVowifiAvailable(), a new ImsMmTelManager is created and isVoWiFiSettingEnabled() is called on it. Along this path the BinderCacheManager is retrieved and its getBinder() method is called, and getBinder() in turn creates a BinderDeathTracker. Because MobileSignalController calls isVowifiAvailable() frequently, every call adds another BinderDeathTracker reference, and the count slowly grows until it exceeds the limit.
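The growth can be reproduced with a toy model. This is not Android code: `DeathRegistry`, `ToyBinderCache`, `ToyMmTelManager`, and `LeakDemo` are hypothetical stand-ins for the global reference table, BinderCacheManager, ImsMmTelManager, and MobileSignalController. The assumption built into the model is that each registered tracker stays referenced by the process-wide table after the manager that created it is no longer used.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of the leak; NOT Android code, and every class name here is hypothetical.

/** Stands in for the process-wide global reference table. */
final class DeathRegistry {
    static final int LIMIT = 51200; // limit quoted in the crash report

    private final List<Object> trackers = new ArrayList<>();

    /** Keeps the tracker strongly reachable, so it is never garbage-collected. */
    void register(Object tracker) {
        if (trackers.size() >= LIMIT) {
            throw new IllegalStateException("reference table overflow (max=" + LIMIT + ")");
        }
        trackers.add(tracker);
    }

    int size() {
        return trackers.size();
    }
}

/** Stands in for BinderCacheManager: the first getBinder() registers a death tracker. */
final class ToyBinderCache {
    private final DeathRegistry registry;
    private Object binder; // the cached "binder", created lazily

    ToyBinderCache(DeathRegistry registry) {
        this.registry = registry;
    }

    Object getBinder() {
        if (binder == null) {
            binder = new Object();
            registry.register(new Object()); // stands in for a BinderDeathTracker
        }
        return binder;
    }
}

/** Stands in for ImsMmTelManager: every instance owns its own cache. */
final class ToyMmTelManager {
    private final ToyBinderCache cache;

    ToyMmTelManager(DeathRegistry registry) {
        this.cache = new ToyBinderCache(registry);
    }

    boolean isVoWiFiSettingEnabled() {
        cache.getBinder();
        return false; // the returned value does not matter for the model
    }
}

final class LeakDemo {
    /** Mirrors isVowifiAvailable(): a brand-new manager on every call. */
    static boolean isVowifiAvailable(DeathRegistry registry) {
        ToyMmTelManager manager = new ToyMmTelManager(registry);
        return manager.isVoWiFiSettingEnabled();
    }
}
```

Calling `LeakDemo.isVowifiAvailable(registry)` 1,000 times leaves 1,000 entries in the registry, and the 51,201st call throws, which mirrors the crash.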

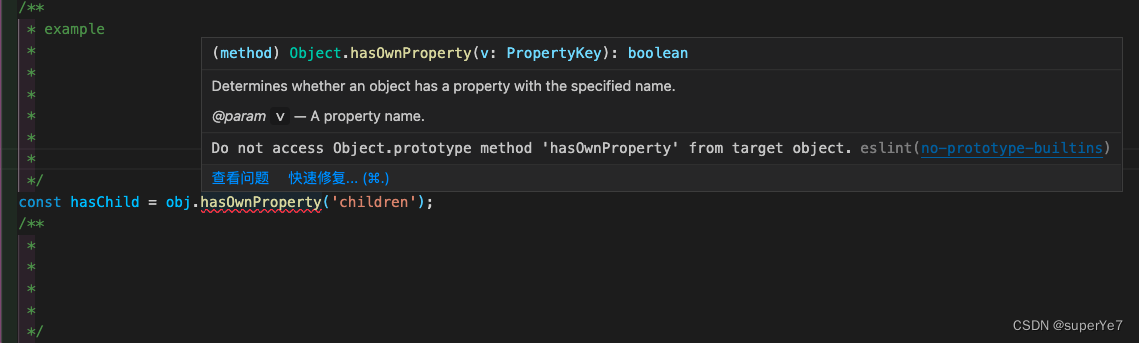

Before the modification:

```java
try {
    // A new ImsMmTelManager is created on every call
    final ImsMmTelManager imsMmTelManager =
            ImsMmTelManager.createForSubscriptionId(activeDataSubId);
    // From CarrierConfig Settings
    mVoWiFiSettingEnabled = imsMmTelManager.isVoWiFiSettingEnabled();
} catch (IllegalArgumentException exception) {
    Log.w(mTag, "fail to get Wfc settings. subId=" + activeDataSubId, exception);
}
```

After the modification:

```java
ImsMmTelManager imsMmTelManager;
try {
    imsMmTelManager =
            ImsMmTelManager.createForSubscriptionId(activeDataSubId);
    // From CarrierConfig Settings
    mVoWiFiSettingEnabled = imsMmTelManager.isVoWiFiSettingEnabled();
} catch (IllegalArgumentException exception) {
    Log.w(mTag, "fail to get Wfc settings. subId=" + activeDataSubId, exception);
} finally {
    // Drop the local reference to the ImsMmTelManager once it has been used
    imsMmTelManager = null;
}
```

Solution: Because imsMmTelManager is a local variable, set it to null in the finally block right after use, so that the ImsMmTelManager is no longer referenced.
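A different approach from the change above (not the one applied here) is to stop creating a new manager on every call, by keeping one instance per subscription ID. The sketch below uses a hypothetical `PerSubIdCache` class. In MobileSignalController the factory would presumably be `ImsMmTelManager::createForSubscriptionId`, but that wiring, and invalidating entries when the active data subscription changes, are assumptions left out of the runnable code.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.IntFunction;

/** Hypothetical helper: one shared instance per subscription ID. */
final class PerSubIdCache<T> {
    private final Map<Integer, T> instances = new ConcurrentHashMap<>();
    private final IntFunction<T> factory;

    PerSubIdCache(IntFunction<T> factory) {
        this.factory = factory;
    }

    /** Returns the cached instance, creating it only if this subId has none yet. */
    T get(int subId) {
        // ConcurrentHashMap.computeIfAbsent applies the factory at most once per absent key
        return instances.computeIfAbsent(subId, factory::apply);
    }

    /** Drops the cached instance, e.g. when the subscription goes away. */
    void invalidate(int subId) {
        instances.remove(subId);
    }

    int size() {
        return instances.size();
    }
}
```

Two calls to `get(1)` return the same object and run the factory only once; `invalidate(1)` forces a fresh instance on the next call. With one manager reused per subscription, the number of death trackers no longer grows with the number of calls.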

Experience: Look for messages that repeat in the adb log, then check whether the code near where they are printed directly or indirectly calls the classes or methods listed in the Summary.
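The first half of that check, finding the messages that repeat, can be automated. This is a minimal sketch; `RepeatedLogs` is a hypothetical helper, and it assumes that the first seven whitespace-separated fields of each line are metadata (read here as index, date, time, buffer, pid, tid, level), as in the isVowifiAvailable log line quoted earlier. Other log formats need a different `messageOf()`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class RepeatedLogs {
    // Assumption: the first 7 whitespace-separated fields are metadata, not message text.
    static final int METADATA_FIELDS = 7;

    /** Strips the metadata prefix so identical messages compare equal. */
    static String messageOf(String line) {
        String[] parts = line.trim().split("\\s+", METADATA_FIELDS + 1);
        return parts.length > METADATA_FIELDS ? parts[METADATA_FIELDS] : line.trim();
    }

    /** Messages seen at least minCount times, most frequent first. */
    static List<Map.Entry<String, Integer>> repeated(List<String> lines, int minCount) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            counts.merge(messageOf(line), 1, Integer::sum);
        }
        List<Map.Entry<String, Integer>> result = new ArrayList<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() >= minCount) {
                result.add(e);
            }
        }
        result.sort((a, b) -> Integer.compare(b.getValue(), a.getValue()));
        return result;
    }
}
```

Once the most frequent messages are known, the search moves to the source: find where each one is logged and trace whether that code path reaches a class named in the Summary.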