Error: RuntimeError: CUDA out of memory.

Error Messages:

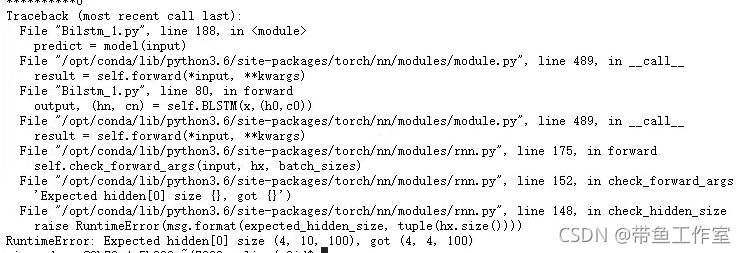

Traceback (most recent call last):

File "xxx.py", line 110, in <module>

loss.backward()

File "/nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/tensor.py", line 185, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File "/nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/autograd/__init__.py", line 127, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: CUDA out of memory. Tried to allocate 132.00 MiB (GPU 0; 15.78 GiB total capacity; 13.69 GiB already allocated; 91.50 MiB free; 14.53 GiB reserved in total by PyTorch)

Exception raised from malloc at /pytorch/c10/cuda/CUDACachingAllocator.cpp:272 (most recent call first):

frame #0: c10::Error::Error(c10::SourceLocation, std::string) + 0x42 (0x14c9ce19a1e2 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libc10.so)

frame #1: <unknown function> + 0x1e64b (0x14c9ce3f064b in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #2: <unknown function> + 0x1f464 (0x14c9ce3f1464 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #3: <unknown function> + 0x1faa1 (0x14c9ce3f1aa1 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libc10_cuda.so)

frame #4: at::native::empty_cuda(c10::ArrayRef<long>, c10::TensorOptions const&, c10::optional<c10::MemoryFormat>) + 0x11e (0x14c9d10fc90e in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #5: <unknown function> + 0xf33949 (0x14c9cf536949 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #6: <unknown function> + 0xf4d777 (0x14c9cf550777 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #7: <unknown function> + 0x10e9c7d (0x14ca0a2ecc7d in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #8: <unknown function> + 0x10e9f97 (0x14ca0a2ecf97 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #9: at::empty(c10::ArrayRef<long>, c10::TensorOptions const&, c10::optional<c10::MemoryFormat>) + 0xfa (0x14ca0a3f7a1a in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #10: at::native::mm_cuda(at::Tensor const&, at::Tensor const&) + 0x6c (0x14c9d05ebffc in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #11: <unknown function> + 0xf22a20 (0x14c9cf525a20 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cuda.so)

frame #12: <unknown function> + 0xa56530 (0x14ca09c59530 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #13: at::Tensor c10::Dispatcher::call<at::Tensor, at::Tensor const&, at::Tensor const&>(c10::TypedOperatorHandle<at::Tensor (at::Tensor const&, at::Tensor const&)> const&, at::Tensor const&, at::Tensor const&) const + 0xbc (0x14ca0a44181c in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #14: at::mm(at::Tensor const&, at::Tensor const&) + 0x4b (0x14ca0a3926ab in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #15: <unknown function> + 0x2ed0a2f (0x14ca0c0d3a2f in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #16: <unknown function> + 0xa56530 (0x14ca09c59530 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #17: at::Tensor c10::Dispatcher::call<at::Tensor, at::Tensor const&, at::Tensor const&>(c10::TypedOperatorHandle<at::Tensor (at::Tensor const&, at::Tensor const&)> const&, at::Tensor const&, at::Tensor const&) const + 0xbc (0x14ca0a44181c in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #18: at::Tensor::mm(at::Tensor const&) const + 0x4b (0x14ca0a527cab in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #19: <unknown function> + 0x2d11c34 (0x14ca0bf14c34 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #20: torch::autograd::generated::MmBackward::apply(std::vector<at::Tensor, std::allocator<at::Tensor> >&&) + 0x294 (0x14ca0bf30814 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #21: <unknown function> + 0x3375bb7 (0x14ca0c578bb7 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #22: torch::autograd::Engine::evaluate_function(std::shared_ptr<torch::autograd::GraphTask>&, torch::autograd::Node*, torch::autograd::InputBuffer&, std::shared_ptr<torch::autograd::ReadyQueue> const&) + 0x1400 (0x14ca0c574400 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #23: torch::autograd::Engine::thread_main(std::shared_ptr<torch::autograd::GraphTask> const&) + 0x451 (0x14ca0c574fa1 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #24: torch::autograd::Engine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x89 (0x14ca0c56d119 in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_cpu.so)

frame #25: torch::autograd::python::PythonEngine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x4a (0x14ca19d0ddea in /nfsshare/apps/anaconda3/lib/python3.7/site-packages/torch/lib/libtorch_python.so)

frame #26: <unknown function> + 0xbd6df (0x14ca5616b6df in /usr/lib/x86_64-linux-gnu/libstdc++.so.6)

frame #27: <unknown function> + 0x76db (0x14ca5a6356db in /lib/x86_64-linux-gnu/libpthread.so.0)

frame #28: clone + 0x3f (0x14ca5a35ea3f in /lib/x86_64-linux-gnu/libc.so.6)
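The sizes reported in the RuntimeError line above can be compared directly. The following is a minimal sketch, not part of the original report, and `parse_oom_message` is a hypothetical helper name. It extracts the figures from the message and converts them all to MiB, which shows how far the request exceeded the free memory.

```python
import re

# Multipliers to convert the units used in the message to MiB.
UNIT_MIB = {"MiB": 1.0, "GiB": 1024.0}


def parse_oom_message(message):
    """Return the sizes named in a CUDA OOM message, all converted to MiB.

    Hypothetical helper: the patterns match the message format shown in
    this report and may need adjusting for other PyTorch versions.
    """
    fields = {
        "requested": r"Tried to allocate ([\d.]+) (MiB|GiB)",
        "total": r"([\d.]+) (MiB|GiB) total capacity",
        "allocated": r"([\d.]+) (MiB|GiB) already allocated",
        "free": r"([\d.]+) (MiB|GiB) free",
        "reserved": r"([\d.]+) (MiB|GiB) reserved",
    }
    sizes = {}
    for name, pattern in fields.items():
        match = re.search(pattern, message)
        if match:
            sizes[name] = float(match.group(1)) * UNIT_MIB[match.group(2)]
    return sizes


message = (
    "RuntimeError: CUDA out of memory. Tried to allocate 132.00 MiB "
    "(GPU 0; 15.78 GiB total capacity; 13.69 GiB already allocated; "
    "91.50 MiB free; 14.53 GiB reserved in total by PyTorch)"
)
sizes = parse_oom_message(message)

# How much more was requested than the device had free.
shortfall = sizes["requested"] - sizes["free"]
# Memory held by PyTorch's caching allocator but not used by live tensors.
cached_unused = sizes["reserved"] - sizes["allocated"]

print("shortfall (MiB):", round(shortfall, 2))
print("reserved but not allocated (MiB):", round(cached_unused, 2))
```

The gap between reserved and allocated memory is held by PyTorch's caching allocator. It may be too fragmented to serve a single 132 MiB block, which would explain why the request failed even though that gap is larger than the request.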

Solution:

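Running the script with CUDA_LAUNCH_BLOCKING=1 python xx.py did not help: the run still failed. That variable only makes CUDA kernel launches synchronous, so that errors are reported at the call that caused them. It does not free any memory.

Reducing the batch size solved the problem. According to the error message, only 91.50 MiB was free on GPU 0 when the backward pass requested another 132.00 MiB. A smaller batch shrinks the activations and intermediate tensors that must be held during loss.backward().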
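One way to find a batch size that fits is to halve it each time an out-of-memory error is raised. The sketch below is an illustration, not the method used in the original report. `find_fitting_batch_size`, `run_step`, and `fake_step` are hypothetical names, and `fake_step` stands in for a real forward/backward pass so the example runs without a GPU.

```python
def find_fitting_batch_size(run_step, start_batch_size, min_batch_size=1):
    """Halve the batch size until run_step(batch_size) no longer runs out of memory.

    run_step is any callable that performs one training step with the given
    batch size and raises RuntimeError containing "out of memory" on failure.
    """
    batch_size = start_batch_size
    while batch_size >= min_batch_size:
        try:
            run_step(batch_size)
            return batch_size
        except RuntimeError as err:
            # Re-raise anything that is not an out-of-memory error.
            if "out of memory" not in str(err):
                raise
            batch_size //= 2
    raise RuntimeError("no batch size >= %d fits in memory" % min_batch_size)


def fake_step(batch_size):
    """Stand-in for a real training step (assumption for illustration):
    pretends that more than 16 samples exhausts GPU memory."""
    if batch_size > 16:
        raise RuntimeError("CUDA out of memory.")


print(find_fitting_batch_size(fake_step, start_batch_size=128))
```

In a real PyTorch script, `run_step` would build a batch of the given size and call the forward pass and `loss.backward()`. Calling `torch.cuda.empty_cache()` between attempts may also help, because it releases cached blocks left over from the failed attempt.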