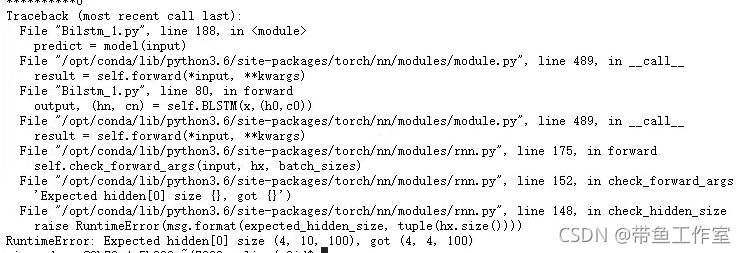

Start with the above figure:

The above figure shows the problem when training the bilstm network.

Problem Description: define the initial weights H0 and C0 of bilstm network and input them to the network as the initial weight of bilstm, which is realized by the following code

output, (hn, cn) = self.bilstm(input, (h0, c0))The network structure is as follows:

self.bilstm = nn.LSTM(

input_size=self.input_size,

hidden_size=self.hidden_size,

num_layers=self.num_layers,

bidirectional=True,

bias=True,

dropout=config.drop_out

)The dimension of initial weight is defined as H0 and C0 are initialized. The dimension is:

**h_0** of shape `(num_layers * num_directions, batch, hidden_size)`

**c_0** of shape `(num_layers * num_directions, batch, hidden_size)`In bilstm network, the parameters are defined as follows:

num_layers: 2

num_directions: 2

batch: 4

seq_len: 10

input_size: 300

hidden_size: 100 Then according to the definition in the official documents H0, C0 dimensions should be: (2 * 2, 4100) = (4, 4100)

However, according to the error screenshot at the beginning of the article, the dimension of the initial weight of the hidden layer should be (4, 10100), which makes me doubt whether the dimension specified in the official document is correct.

Obviously, the official documents cannot be wrong, and the hidden state dimensions when using blstm, RNN and bigru in the past are the same as those specified by the official, so I don’t know where to start.

Therefore, we re examined the network structure and found that an important parameter, batch, was missing_ First, let’s take a look at all the parameters required by bilstm:

Args:

input_size: The number of expected features in the input `x`

hidden_size: The number of features in the hidden state `h`

num_layers: Number of recurrent layers. E.g., setting ``num_layers=2``

would mean stacking two LSTMs together to form a `stacked LSTM`,

with the second LSTM taking in outputs of the first LSTM and

computing the final results. Default: 1

bias: If ``False``, then the layer does not use bias weights `b_ih` and `b_hh`.

Default: ``True``

batch_first: If ``True``, then the input and output tensors are provided

as (batch, seq, feature). Default: ``False``

dropout: If non-zero, introduces a `Dropout` layer on the outputs of each

LSTM layer except the last layer, with dropout probability equal to

:attr:`dropout`. Default: 0

bidirectional: If ``True``, becomes a bidirectional LSTM. Default: ``False``batch_ The first parameter can make the dimension batch in the first dimension during training, that is, the input data dimension is

(batch size, SEQ len, embedding dim), if not added batch_ First = true, the dimension is

(seq len,batch size,embedding dim)

Because there was no break at noon, I vaguely forgot to add this important parameter, resulting in an error: the initial weight dimension is incorrect, and I can add it batch_ Run smoothly after first = true.

The modified network structure is as follows:

self.bilstm = nn.LSTM(

input_size=self.input_size,

hidden_size=self.hidden_size,

num_layers=self.num_layers,

batch_first=True,

bidirectional=True,

bias=True,

dropout=config.drop_out

)

Extension: when we use RNN and its variant network, if we want to add the initial weight, the dimension must be the officially specified dimension, i.e

(num_layers * num_directions, batch, hidden_size)

At the same time, be sure to set batch_ First = true. The official document does not specify when batch is set_ When first = true, the dimensions of H0, C0, HN and CN are (num_layers * num_directions, batch, hidden_size), so be careful!

At the same time, check whether batch is set when the dimensions of HN and CN are incorrect_ First parameter, RNN and its variant networks are applicable to this method!

Read More:

- [Solved] emulator: glteximage2d: got err pre 🙁 0x502 internal 0x1908 format 0x1908 type 0x1401

- CLP: error: getaddrinfo enotfound http://x.x.x.x/

- [-] handler failed to bind to x.x.x.x: Port troubleshooting

- module ‘win32com.gen_py.00020813-0000-0000-C000-000000000046x0x1x9‘ has no attribute ‘CLSIDToClassMa

- A problem occurred configuring project ‘:x x x‘. > java.lang.NullPointerException (no error message)

- Apache Groovy——java.lang.NoSuchMethodError: x.x.x: method <init>()V not found

- 1067 – Invalid default value for ‘sex‘1366 – Incorrect string value: ‘\xE6\x8A\x80\xE6\x9C\xAF…‘ f

- Could not write JSON: write javaBean error, fastjson version x.x.x, class

- Microsoft OLE DB Provider for SQL Server error & #x27;80040e4d & #x27;

- OSD deployment failure code (0x00000001) 0x80004005

- 【PTA:】 Error: class X is public should be declared in a file named X.java

- Unhandled exception at 0x00000000: 0xc0000005: access violation at position 0x0000000000

- Ubuntu18.04 x11vnc failed, report error opening logfile: /var/log/x11vnc.log

- SSH Login: RSA host key for 192.168.x.x has changed and you have requested strict checking. Host key

- Running program encountered Error:Access violation at 0x**(tried to write to 0x**) program terminated

- Error in `./a.out‘: free(): invalid next size (fast): 0x0000000001da8010

- Solve the problem that “figure size 640×480 with 1 axes” does not display pictures in jupyter notebook

- Endnote x9 error

- win7 error code 0x80070522

- Win 10 System Restore Fail 0x80070091