Background: the virtual machine TANG2 is missing its base disk descriptor file, tang2.vmdk, so it fails to boot and reports an error.

[root@localhost:/vmfs/volumes/e9f402/tang2] ls -l

total 84028480

-rw------- 1 root root 49936498688 Sep 9 02:30 tang2-000001-sesparse.vmdk

-rw------- 1 root root 329 Aug 17 2020 tang2-000001.vmdk

-rw------- 1 root root 4294967296 Dec 25 2019 tang2-Snapshot1.vmem

-rw------- 1 root root 9732350 Dec 25 2019 tang2-Snapshot1.vmsn

-rw------- 1 root root 107374182400 Dec 25 2019 tang2-flat.vmdk

-rw------- 1 root root 8684 Sep 9 01:43 tang2.nvram

-rw-r--r-- 1 root root 0 Feb 24 2021 tang2.vmsd

-rwxr-xr-x 1 root root 3303 Feb 7 2021 tang2.vmx

-rw------- 1 root root 3237 Feb 7 2021 tang2.vmxf

-rw------- 1 root root 107374182400 Sep 9 08:42 temp-flat.vmdk

-rw------- 1 root root 494 Sep 9 08:42 temp.vmdk

-rw-r--r-- 1 root root 266758 Oct 18 2019 vmware-1.log

-rw-r--r-- 1 root root 351477 May 3 2020 vmware-2.log

-rw-r--r-- 1 root root 271780 Aug 17 2020 vmware-3.log

-rw-r--r-- 1 root root 296091 Sep 9 01:43 vmware-4.log

-rw-r--r-- 1 root root 78208 Sep 9 01:44 vmware-5.log

-rw-r--r-- 1 root root 76793 Sep 9 02:30 vmware.log

1. Create a new disk descriptor file (temp.vmdk) whose size matches tang2-flat.vmdk, 107374182400 bytes. This also creates a temp-flat.vmdk data file.

[root@localhost:/vmfs/volumes/e9f402/tang2] vmkfstools -c 107374182400 -d thin temp.vmdk

Create: 100% done.
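
The size passed to vmkfstools is in bytes, while the extent line inside the descriptor counts 512-byte sectors. As a rough sketch of that arithmetic (the helper name `bytes_to_sectors` is made up for illustration, and the byte count is copied from the `ls -l` listing above):

```shell
#!/bin/sh
# Convert a flat-file size in bytes to 512-byte sectors, the unit used
# in the descriptor's extent line. bytes_to_sectors is a hypothetical helper.
bytes_to_sectors() {
    echo $(( $1 / 512 ))
}

flat_bytes=107374182400            # size of tang2-flat.vmdk from ls -l
sectors=$(bytes_to_sectors "$flat_bytes")

# Print the extent line the descriptor is expected to contain.
echo "RW $sectors VMFS \"tang2-flat.vmdk\""
```

This should print an extent line with 209715200 sectors, which is the value that appears in the descriptor later in this walkthrough.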

2. Delete the newly created temp-flat.vmdk data file and keep only the temp.vmdk descriptor file

[root@localhost:/vmfs/volumes/e9f402/tang2] rm -f temp-flat.vmdk

3. Rename the newly created descriptor file to the missing file name

[root@localhost:/vmfs/volumes/e9f402/tang2] mv temp.vmdk tang2.vmdk

[root@localhost:/vmfs/volumes/e9f402/tang2] ls -l

total 84028480

-rw------- 1 root root 49936498688 Sep 9 02:30 tang2-000001-sesparse.vmdk

-rw------- 1 root root 329 Aug 17 2020 tang2-000001.vmdk

-rw------- 1 root root 4294967296 Dec 25 2019 tang2-Snapshot1.vmem

-rw------- 1 root root 9732350 Dec 25 2019 tang2-Snapshot1.vmsn

-rw------- 1 root root 107374182400 Dec 25 2019 tang2-flat.vmdk

-rw------- 1 root root 8684 Sep 9 01:43 tang2.nvram

-rw------- 1 root root 494 Sep 9 08:42 tang2.vmdk

-rw-r--r-- 1 root root 0 Feb 24 2021 tang2.vmsd

-rwxr-xr-x 1 root root 3303 Feb 7 2021 tang2.vmx

-rw------- 1 root root 3237 Feb 7 2021 tang2.vmxf

-rw-r--r-- 1 root root 266758 Oct 18 2019 vmware-1.log

-rw-r--r-- 1 root root 351477 May 3 2020 vmware-2.log

-rw-r--r-- 1 root root 271780 Aug 17 2020 vmware-3.log

-rw-r--r-- 1 root root 296091 Sep 9 01:43 vmware-4.log

-rw-r--r-- 1 root root 78208 Sep 9 01:44 vmware-5.log

-rw-r--r-- 1 root root 76793 Sep 9 02:30 vmware.log

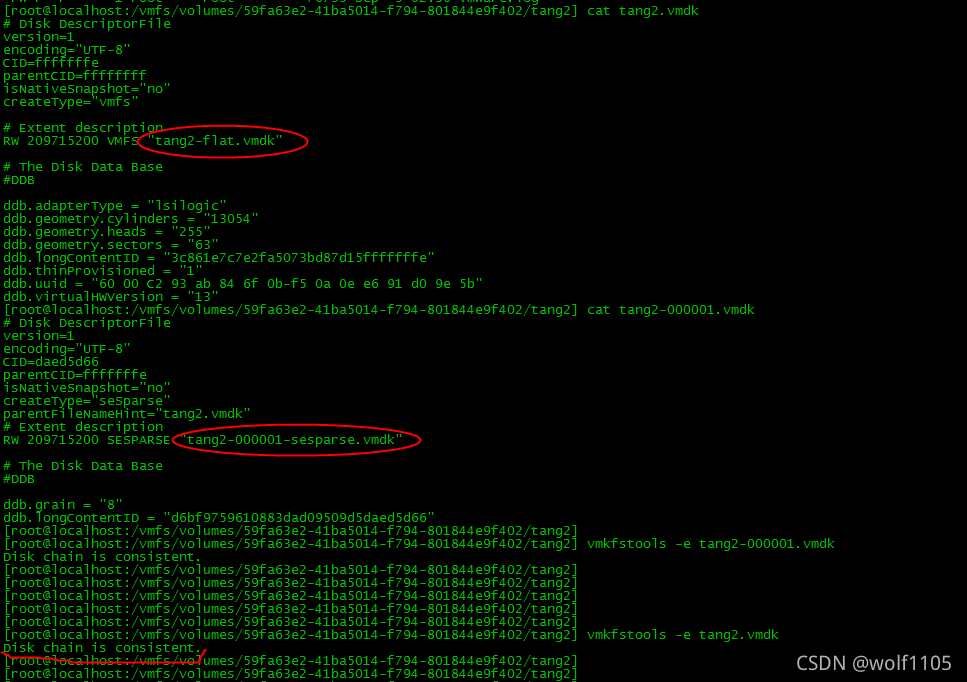

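Open tang2.vmdk and confirm that the extent line of the base disk points to the original data file: RW 209715200 VMFS "tang2-flat.vmdk" (209715200 sectors of 512 bytes = 107374182400 bytes). Renaming the descriptor with mv does not change its contents, so if the line still names temp-flat.vmdk, edit it to tang2-flat.vmdk.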

[root@localhost:/vmfs/volumes/e9f402/tang2] vi tang2.vmdk

# Disk DescriptorFile

version=1

encoding="UTF-8"

CID=fffffffe

parentCID=ffffffff

isNativeSnapshot="no"

createType="vmfs"

# Extent description

RW 209715200 VMFS "tang2-flat.vmdk"

# The Disk Data Base

#DDB

ddb.adapterType = "lsilogic"

ddb.geometry.cylinders = "13054"

ddb.geometry.heads = "255"

ddb.geometry.sectors = "63"

ddb.longContentID = "dd1fc9f492c51eb078deb1b8fffffffe"

ddb.thinProvisioned = "1"

ddb.uuid = "60 00 C2 91 4b f7 a2 67-9d 42 aa b1 50 cf fe d0"

ddb.virtualHWVersion = "13"

[root@localhost:/vmfs/volumes/e9f402/tang2]
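
The extent check can also be done without opening an editor. The sketch below is only an illustration: it works on a scratch copy in a temporary directory rather than on the datastore, `extent_file` is a hypothetical helper, and the two-line descriptor is a cut-down sample, not the real file.

```shell
#!/bin/sh
# Print the file name referenced by the extent line of a descriptor.
# extent_file is a hypothetical helper, not part of any VMware tool.
extent_file() {
    sed -n 's/^RW [0-9][0-9]* [A-Z]* "\(.*\)"$/\1/p' "$1"
}

# Work on a scratch copy so nothing on the datastore is touched.
work=$(mktemp -d)
cat > "$work/temp.vmdk" <<'EOF'
# Extent description
RW 209715200 VMFS "temp-flat.vmdk"
EOF

# Rewrite the extent so it references the surviving data file.
sed 's/"temp-flat\.vmdk"/"tang2-flat.vmdk"/' "$work/temp.vmdk" > "$work/tang2.vmdk"

before=$(extent_file "$work/temp.vmdk")
after=$(extent_file "$work/tang2.vmdk")
echo "before: $before"
echo "after:  $after"
rm -rf "$work"
```

On a real host it would be prudent to back up the descriptor before rewriting it.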

4. Edit the snapshot (child) descriptor file tang2-000001.vmdk so that its parentCID matches the CID in the base descriptor file tang2.vmdk, here parentCID=fffffffe

[root@localhost:/vmfs/volumes/e9f402/tang2] vi tang2-000001.vmdk

# Disk DescriptorFile

version=1

encoding="UTF-8"

CID=daed5d66

parentCID=fffffffe

isNativeSnapshot="no"

createType="seSparse"

parentFileNameHint="tang2.vmdk"

# Extent description

RW 209715200 SESPARSE "tang2-000001-sesparse.vmdk"

# The Disk Data Base

#DDB

ddb.grain = "8"

ddb.longContentID = "d6bf9759610883dad09509d5daed5d66"

[root@localhost:/vmfs/volumes/e9f402/tang2] cat tang2-000001.vmdk

# Disk DescriptorFile

version=1

encoding="UTF-8"

CID=daed5d66

parentCID=fffffffe

isNativeSnapshot="no"

createType="seSparse"

parentFileNameHint="tang2.vmdk"

# Extent description

RW 209715200 SESPARSE "tang2-000001-sesparse.vmdk"

# The Disk Data Base

#DDB

ddb.grain = "8"

ddb.longContentID = "d6bf9759610883dad09509d5daed5d66"

[root@localhost:/vmfs/volumes/e9f402/tang2]
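
The rule applied in this step is that the child's parentCID must equal the parent's CID. A minimal sketch of that comparison follows, assuming simplified two-line descriptors written to a temporary directory; `descriptor_value` and `cid_chain_ok` are hypothetical helper names.

```shell
#!/bin/sh
# Read a single key (CID or parentCID) from a descriptor file.
# Anchoring on ^ keeps "CID" from matching the "parentCID" line.
descriptor_value() {
    sed -n "s/^$2=//p" "$1"
}

# Succeeds when the child's parentCID equals the parent's CID.
cid_chain_ok() {
    [ "$(descriptor_value "$1" parentCID)" = "$(descriptor_value "$2" CID)" ]
}

# Sample descriptors holding only the CID fields from this walkthrough.
work=$(mktemp -d)
printf 'CID=fffffffe\nparentCID=ffffffff\n' > "$work/tang2.vmdk"
printf 'CID=daed5d66\nparentCID=fffffffe\n' > "$work/tang2-000001.vmdk"

if cid_chain_ok "$work/tang2-000001.vmdk" "$work/tang2.vmdk"; then
    result="match"
else
    result="mismatch"
fi
echo "$result"
rm -rf "$work"
```

With the values shown above, the comparison reports a match. A mismatch would mean the child's parentCID still needs to be edited.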

5. Check that the disk chain of the base descriptor file is consistent

[root@localhost:/vmfs/volumes/e9f402/tang2] vmkfstools -e tang2.vmdk

Disk chain is consistent.

6. Then check that the disk chain of the snapshot descriptor file is consistent

[root@localhost:/vmfs/volumes/e9f402/tang2] vmkfstools -e tang2-000001.vmdk

Disk chain is consistent.
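
To see which descriptors make up a chain before checking it, the parentFileNameHint entries can be followed from the newest snapshot back to the base disk. This is only a sketch: `walk_chain` is a hypothetical helper, it assumes the hints are plain file names in the same directory, and the sample descriptors are trimmed to the relevant fields.

```shell
#!/bin/sh
# Print each descriptor in a chain, newest first, by following
# parentFileNameHint until a descriptor has no parent entry.
walk_chain() {
    dir=$(dirname "$1")
    cur=$(basename "$1")
    while [ -n "$cur" ]; do
        echo "$cur"
        cur=$(sed -n 's/^parentFileNameHint="\(.*\)"$/\1/p' "$dir/$cur")
    done
}

# Sample descriptors in a scratch directory, not on the datastore.
work=$(mktemp -d)
printf 'CID=fffffffe\nparentCID=ffffffff\n' > "$work/tang2.vmdk"
printf 'CID=daed5d66\nparentCID=fffffffe\nparentFileNameHint="tang2.vmdk"\n' \
    > "$work/tang2-000001.vmdk"

chain=$(walk_chain "$work/tang2-000001.vmdk")
echo "$chain"
rm -rf "$work"
```

For the two files in this walkthrough, the helper lists tang2-000001.vmdk followed by tang2.vmdk, the same two descriptors checked with vmkfstools -e in steps 5 and 6.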

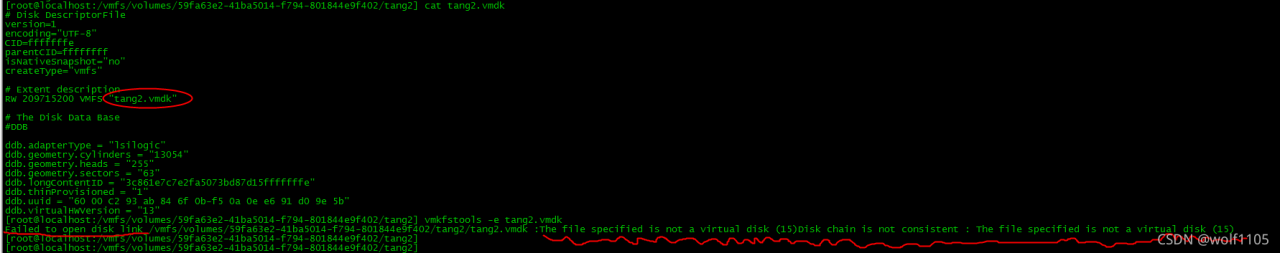

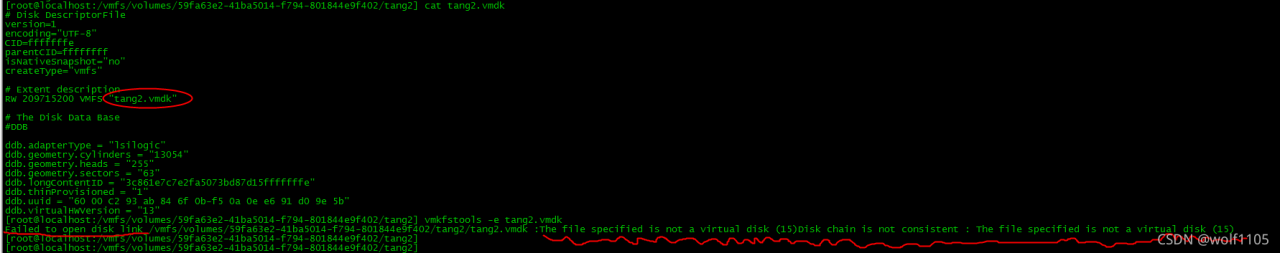

If the configuration is wrong, the following error messages will be reported:

That's it. The virtual machine can now be powered on normally from the console.

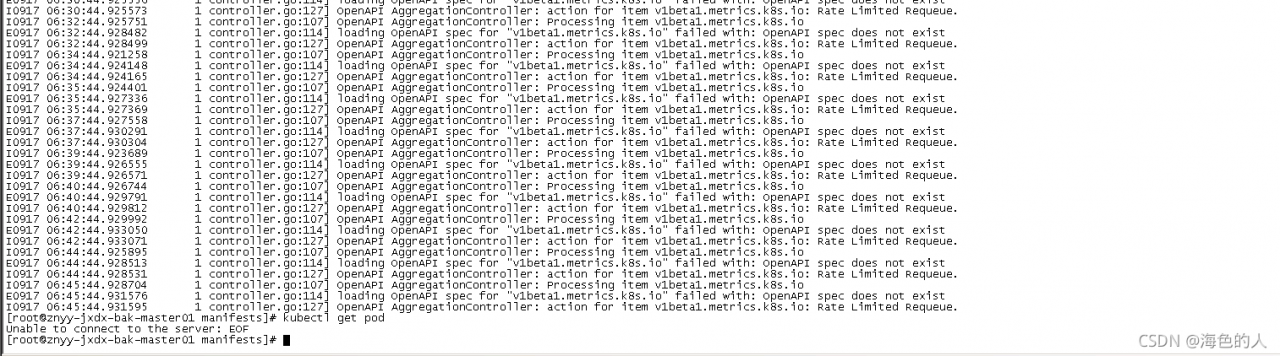

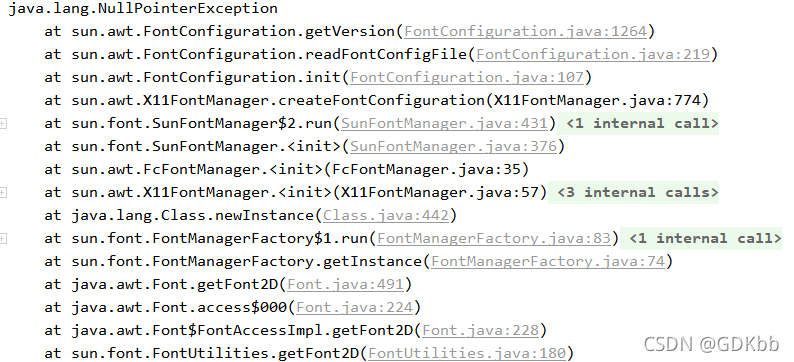

kubectl suddenly failed to obtain resources in the environment just deployed a few days ago. Check the apiserver log, as shown in the above results

kubectl suddenly failed to obtain resources in the environment just deployed a few days ago. Check the apiserver log, as shown in the above results

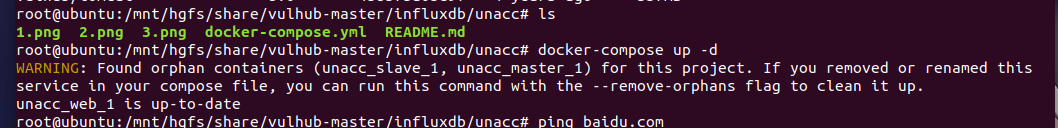

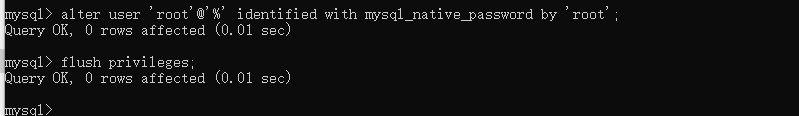

reconnect the Navicat will solve the problem.

reconnect the Navicat will solve the problem.