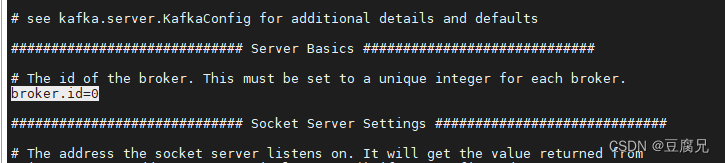

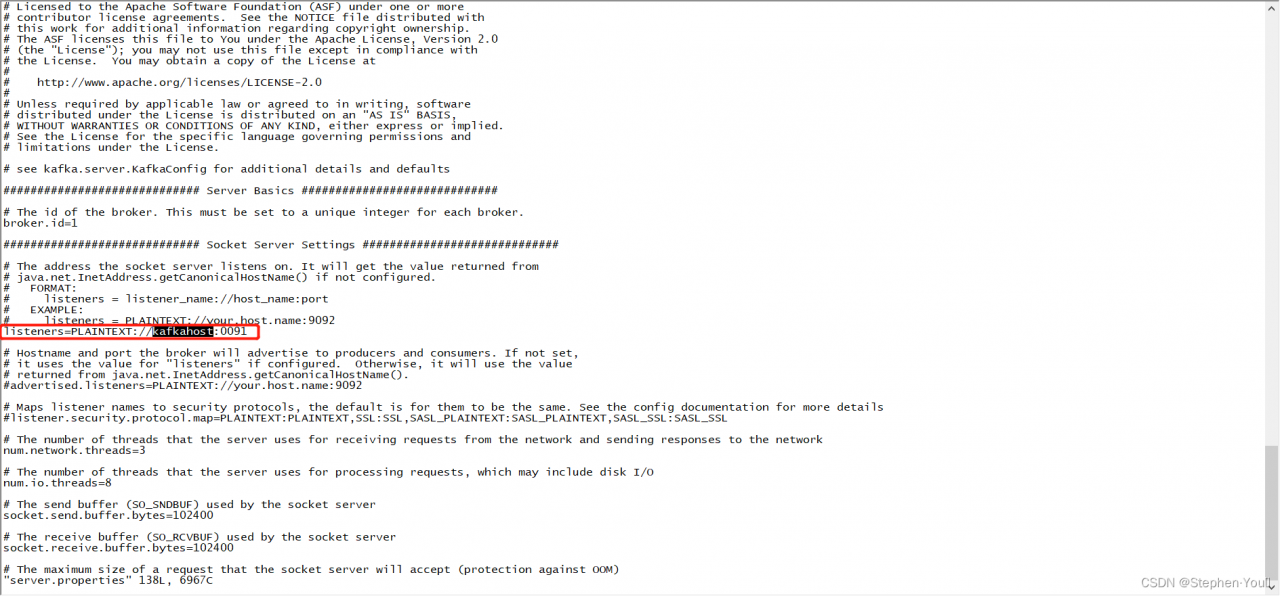

Solution: Add the following two items to the server.properties configuration file:

listeners=PLAINTEXT://<server intranet IP address>:9092

advertised.listeners=PLAINTEXT://<server external IP address>:9092
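
As a rough illustration of how the two items differ, the sketch below parses both from a properties snippet so the bind address and the advertised address can be compared side by side. The function name `parse_listeners` is hypothetical, and the addresses 192.168.0.10 and 203.0.113.5 are placeholders, not values from this server.

```python
def parse_listeners(properties_text):
    """Return {key: [(listener_name, host, port), ...]} for the two listener keys.

    Hypothetical helper for illustration; it is not part of Kafka.
    """
    result = {}
    for raw in properties_text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and anything that is not key=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key = key.strip()
        if key not in ("listeners", "advertised.listeners"):
            continue
        entries = []
        for item in value.split(","):
            # Each entry looks like NAME://host:port
            name, _, address = item.strip().partition("://")
            host, _, port = address.rpartition(":")
            entries.append((name, host, int(port)))
        result[key] = entries
    return result


# Placeholder addresses: a private (intranet) IP and a documentation-range public IP.
SAMPLE = """
listeners=PLAINTEXT://192.168.0.10:9092
advertised.listeners=PLAINTEXT://203.0.113.5:9092
"""

if __name__ == "__main__":
    for key, entries in parse_listeners(SAMPLE).items():
        print(key, entries)
```

The broker opens its server socket on the `listeners` address, so that one has to exist on the machine; the `advertised.listeners` address is what clients are told to connect to.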

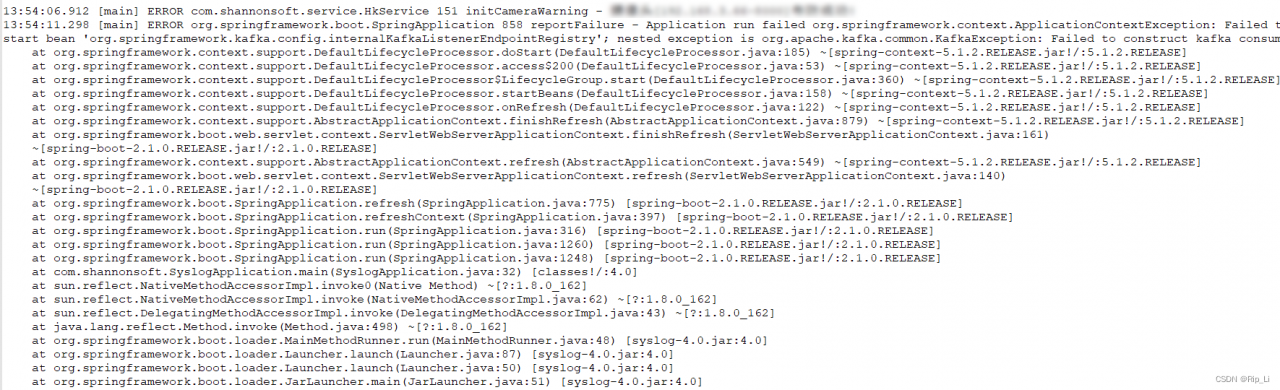

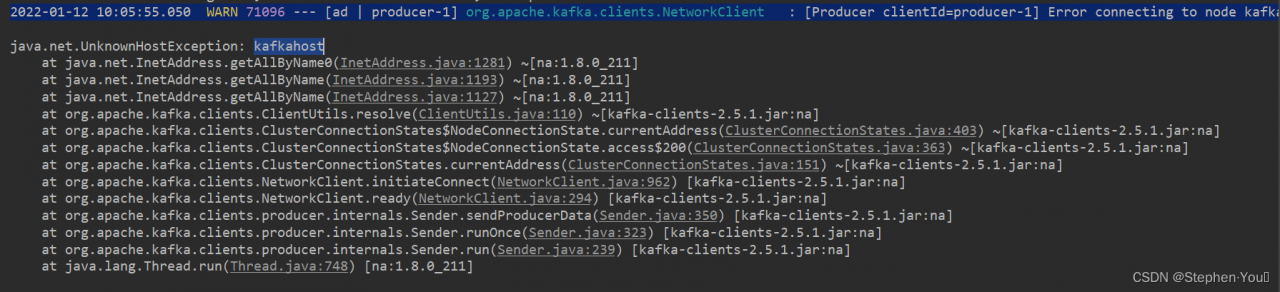

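If listeners is set to an address that is not assigned to any local network interface (typically the external IP of a cloud server), the Kafka server fails to start with the following error: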

[2022-09-29 16:04:58,630] ERROR [KafkaServer id=1] Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer)

org.apache.kafka.common.KafkaException: Socket server failed to bind to 47.100.19.248:9092: Cannot assign requested address.

at kafka.network.Acceptor.openServerSocket(SocketServer.scala:778)

at kafka.network.Acceptor.<init>(SocketServer.scala:672)

at kafka.network.DataPlaneAcceptor.<init>(SocketServer.scala:531)

at kafka.network.SocketServer.createDataPlaneAcceptor(SocketServer.scala:287)

at kafka.network.SocketServer.$anonfun$createDataPlaneAcceptorsAndProcessors$1(SocketServer.scala:267)

at kafka.network.SocketServer.$anonfun$createDataPlaneAcceptorsAndProcessors$1$adapted(SocketServer.scala:261)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at kafka.network.SocketServer.createDataPlaneAcceptorsAndProcessors(SocketServer.scala:261)

at kafka.network.SocketServer.startup(SocketServer.scala:135)

at kafka.server.KafkaServer.startup(KafkaServer.scala:309)

at kafka.Kafka$.main(Kafka.scala:109)

at kafka.Kafka.main(Kafka.scala)

Caused by: java.net.BindException: Cannot assign requested address

at java.base/sun.nio.ch.Net.bind0(Native Method)

at java.base/sun.nio.ch.Net.bind(Net.java:461)

at java.base/sun.nio.ch.Net.bind(Net.java:453)

at java.base/sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:227)

at java.base/sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:80)

at kafka.network.Acceptor.openServerSocket(SocketServer.scala:774)

... 13 more

[2022-09-29 16:04:58,632] INFO [KafkaServer id=1] shutting down (kafka.server.KafkaServer)
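
The `java.net.BindException: Cannot assign requested address` in the log above can be checked for outside Kafka. The following is a minimal sketch, assuming Python is available on the server; `can_bind` is a hypothetical helper that tries to bind a TCP socket to a given address, which is roughly what the broker does for its `listeners` value.

```python
import socket


def can_bind(host, port=0):
    """Return True if this machine can bind a TCP socket to (host, port).

    Port 0 asks the OS for any free port, so the check does not collide
    with a broker that is already using 9092. Hypothetical helper.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
            return True
        except OSError:
            # Includes "Cannot assign requested address" when the IP
            # is not assigned to any local interface.
            return False


if __name__ == "__main__":
    # Replace with the address configured in listeners.
    print(can_bind("127.0.0.1"))
```

Run on the server with the IP from `listeners` as the argument: if it returns False, the address is probably not assigned to a local interface and belongs in `advertised.listeners` instead.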