[background]

Today we tested adding a node to the k8s cluster. The expansion itself went smoothly. However, we later found that the calico-node pod instance on the newly added node (k8s-node04) kept reporting errors and restarting.

[phenomenon]

The pod status query below shows that one pod instance (calico-node-xl9bc) is constantly restarting.

[root@k8s-master01 ~]# kubectl get pods -A| grep calico

kube-system calico-kube-controllers-78d6f96c7b-tv2g6 1/1 Running 0 75m

kube-system calico-node-6dk7g 1/1 Running 0 75m

kube-system calico-node-dlf26 1/1 Running 0 75m

kube-system calico-node-s5phd 1/1 Running 0 75m

kube-system calico-node-xl9bc 0/1 Running 30 3m28s

[troubleshooting]

Check the pod's log:

[root@k8s-master01 ~]# kubectl logs calico-node-xl9bc -n kube-system -f

2021-09-04 12:32:45.011 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:46.025 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:47.038 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:48.050 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:49.061 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:50.072 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:51.079 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:52.093 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:53.104 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:54.114 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:55.127 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:56.138 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:57.148 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:58.162 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:32:59.176 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:33:00.186 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:33:01.199 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:33:02.211 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:33:03.225 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

2021-09-04 12:33:04.238 [ERROR][69] felix/health.go 246: Health endpoint failed, trying to restart it... error=listen tcp: lookup localhost on 114.114.114.114:53: no such host

I searched the Internet for this error for a long time and found no targeted solution.

The log itself contains the clue: the lookup for localhost was sent to the external DNS server 114.114.114.114, which means the name was not resolved from /etc/hosts. Checking the node confirmed it: the /etc/hosts file on k8s-node04 was missing the two standard localhost entries, one for IPv4 and one for IPv6. So the problem was the /etc/hosts file on my own node. I do not know what odd operation I performed when installing the virtual machine last night.

### /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
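The check and the fix can also be scripted. The following is only a minimal sketch, not the procedure used in this post: the function name `ensure_localhost_entries` is hypothetical, and it assumes that a missing entry should be appended with exactly the two lines shown above. It adds a line only when no existing line already maps that loopback address to `localhost`, so running it twice is harmless.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): append the standard
# localhost entries to a hosts file when they are missing.
ensure_localhost_entries() {
    hosts_file="${1:-/etc/hosts}"

    # If the file does not end with a newline, add one so the appended
    # entries start on a line of their own.
    if [ -n "$(tail -c1 "$hosts_file")" ]; then
        echo >> "$hosts_file"
    fi

    # IPv4 loopback: look for a 127.0.0.1 line that lists "localhost" as a whole word.
    if ! grep -Eq '^[[:space:]]*127\.0\.0\.1[[:space:]]+(.*[[:space:]])?localhost([[:space:]]|$)' "$hosts_file"; then
        echo '127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4' >> "$hosts_file"
    fi

    # IPv6 loopback: same check for ::1.
    if ! grep -Eq '^[[:space:]]*::1[[:space:]]+(.*[[:space:]])?localhost([[:space:]]|$)' "$hosts_file"; then
        echo '::1 localhost localhost.localdomain localhost6 localhost6.localdomain6' >> "$hosts_file"
    fi
}

# Usage (as root on the affected node):
#   ensure_localhost_entries /etc/hosts
```

Afterwards, `getent hosts localhost` on the node should print a loopback address if the entries are in place.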

After adding these two lines to the /etc/hosts file on k8s-node04 and restarting the network service, the pod instance was in CrashLoopBackOff:

[root@k8s-master01 ~]# kubectl get pods -A| grep calico

kube-system calico-kube-controllers-78d6f96c7b-tv2g6 1/1 Running 0 80m

kube-system calico-node-6dk7g 1/1 Running 0 80m

kube-system calico-node-dlf26 1/1 Running 0 80m

kube-system calico-node-s5phd 1/1 Running 0 80m

kube-system calico-node-xl9bc 0/1 CrashLoopBackOff 7 8m24s

After deleting this pod instance, the recreated pod instance (calico-node-mz58r) eventually returned to a normal running state.

[root@k8s-master01 ~]# kubectl delete pod calico-node-xl9bc -n kube-system

pod "calico-node-xl9bc" deleted

[root@k8s-master01 ~]# kubectl get pods -A| grep calico

kube-system calico-kube-controllers-78d6f96c7b-tv2g6 1/1 Running 0 81m

kube-system calico-node-6dk7g 1/1 Running 0 81m

kube-system calico-node-dlf26 1/1 Running 0 81m

kube-system calico-node-mz58r 0/1 Running 0 5s

kube-system calico-node-s5phd 1/1 Running 0 81m

[root@k8s-master01 ~]# kubectl get pods -A| grep calico

kube-system calico-kube-controllers-78d6f96c7b-tv2g6 1/1 Running 0 81m

kube-system calico-node-6dk7g 1/1 Running 0 81m

kube-system calico-node-dlf26 1/1 Running 0 81m

kube-system calico-node-mz58r 0/1 Running 0 7s

kube-system calico-node-s5phd 1/1 Running 0 81m

[root@k8s-master01 ~]# kubectl get pods -A| grep calico

kube-system calico-kube-controllers-78d6f96c7b-tv2g6 1/1 Running 0 81m

kube-system calico-node-6dk7g 1/1 Running 0 81m

kube-system calico-node-dlf26 1/1 Running 0 81m

kube-system calico-node-mz58r 0/1 Running 0 8s

kube-system calico-node-s5phd 1/1 Running 0 81m

[root@k8s-master01 ~]# kubectl get pods -A| grep calico

kube-system calico-kube-controllers-78d6f96c7b-tv2g6 1/1 Running 0 81m

kube-system calico-node-6dk7g 1/1 Running 0 81m

kube-system calico-node-dlf26 1/1 Running 0 81m

kube-system calico-node-mz58r 1/1 Running 0 11s

kube-system calico-node-s5phd 1/1 Running 0 81m

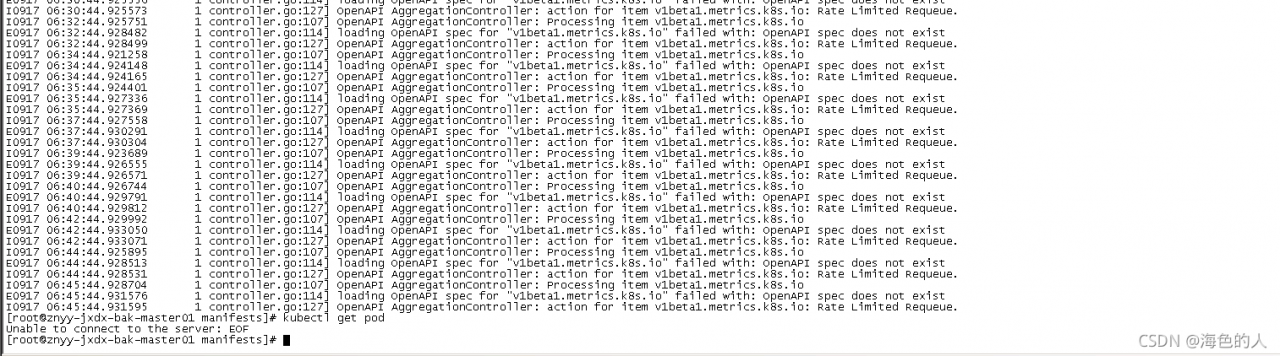

kubectl suddenly failed to obtain resources in the environment just deployed a few days ago. Check the apiserver log, as shown in the above results
