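Downloading CIFAR10 through torchvision with `download=True` can fail with an SSL error like:

`urllib.error.URLError: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed ...`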
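The error means Python's `ssl` module could not validate the server's certificate chain against a trusted CA certificate, often because the local CA bundle is missing or outdated. A minimal, offline sketch for checking which CA file and directory this Python build consults by default (the helper name `describe_ca_locations` is illustrative; the output differs per machine):

```python
import ssl

def describe_ca_locations():
    """Return the default CA bundle locations OpenSSL uses for this interpreter."""
    paths = ssl.get_default_verify_paths()
    return {
        "openssl_version": ssl.OPENSSL_VERSION,
        "cafile": paths.cafile,                  # None if the file does not exist
        "capath": paths.capath,                  # None if the directory does not exist
        "openssl_cafile": paths.openssl_cafile,  # compiled-in default CA file path
    }

if __name__ == "__main__":
    for key, value in describe_ca_locations().items():
        print(f"{key}: {value}")
```

On Linux and macOS, `cafile` and `capath` both being `None` suggests OpenSSL found no default CA store, which would explain the error. On Windows, Python also loads certificates from the system store, so this check says less there.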

Solution:

Add the following two lines at the top of your script, before any code that downloads data:

```python
import ssl
ssl._create_default_https_context = ssl._create_unverified_context
```

Complete example:

```python
import torch
import torchvision
import torchvision.transforms as transforms
import ssl

# Disable HTTPS certificate verification before any dataset download
ssl._create_default_https_context = ssl._create_unverified_context
```
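The assignment above replaces the default HTTPS context factory for the whole process, so every later HTTPS request made through the standard library (not only the CIFAR10 download) also skips certificate verification. If you want to limit that, one option is a small context manager that applies the same patch only around the download and then restores the original factory. A minimal sketch; the name `unverified_https` is hypothetical:

```python
import contextlib
import ssl

@contextlib.contextmanager
def unverified_https():
    """Temporarily make Python's default HTTPS context skip certificate checks.

    The original factory is restored when the `with` block exits,
    even if the block raises an exception.
    """
    original = ssl._create_default_https_context
    ssl._create_default_https_context = ssl._create_unverified_context
    try:
        yield
    finally:
        ssl._create_default_https_context = original

# Usage (assumes the imports and `transform` from the example):
# with unverified_https():
#     trainset = torchvision.datasets.CIFAR10(root='./data', train=True,
#                                             download=True, transform=transform)
```

While the `with` block runs, the change is still process-wide, so other threads making HTTPS requests at the same moment are affected too.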

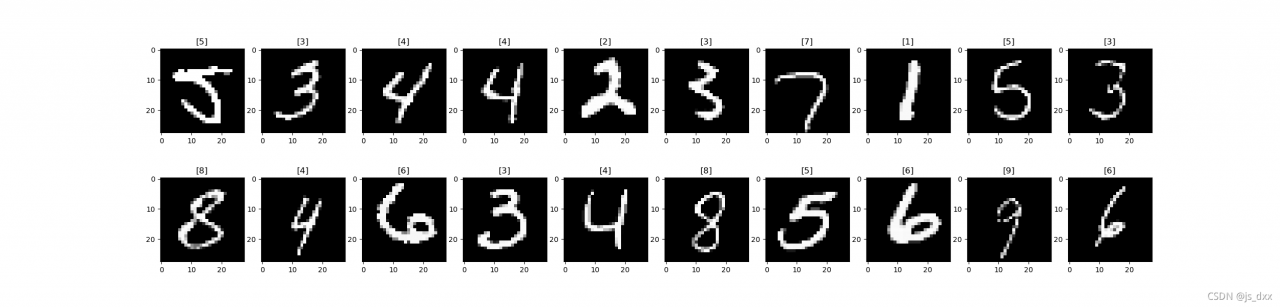

```python
# torchvision datasets return PIL images with 8-bit channel values (0-255).
# ToTensor() converts each image to a float tensor scaled to [0, 1];
# Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)) then computes (x - 0.5) / 0.5
# per channel, mapping [0, 1] to [-1, 1].
# transform: the preprocessing pipeline applied to every image
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])
```
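To see concretely what this pipeline does to pixel values, here is a plain-Python sketch of the per-channel arithmetic. The helper names `to_unit_range` and `normalize_pixel` are illustrative only and not part of torchvision; the real transforms operate on whole tensors.

```python
def to_unit_range(rgb_255):
    """Mimic ToTensor()'s scaling of 8-bit channel values (0-255) to [0, 1]."""
    return tuple(v / 255 for v in rgb_255)

def normalize_pixel(rgb, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)):
    """Apply (value - mean) / std to each channel of one RGB pixel."""
    return tuple((v - m) / s for v, m, s in zip(rgb, mean, std))

# Black, mid-grey and white pixels already scaled to [0, 1]
print(normalize_pixel((0.0, 0.0, 0.0)))  # (-1.0, -1.0, -1.0)
print(normalize_pixel((0.5, 0.5, 0.5)))  # (0.0, 0.0, 0.0)
print(normalize_pixel((1.0, 1.0, 1.0)))  # (1.0, 1.0, 1.0)

# Full path for an 8-bit magenta pixel: 0-255 -> [0, 1] -> [-1, 1]
print(normalize_pixel(to_unit_range((255, 0, 255))))  # (1.0, -1.0, 1.0)
```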

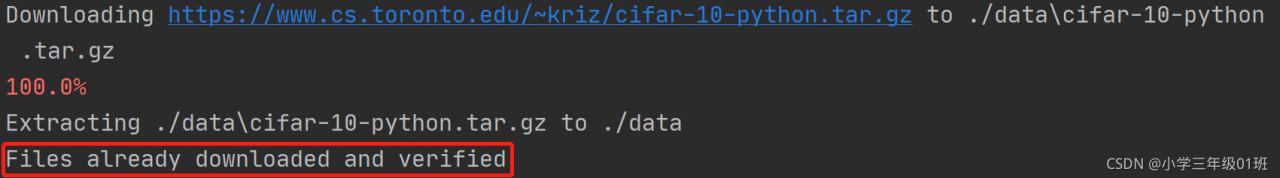

```python
# Training set: downloaded to ./data on the first run; later runs reuse the files
trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform)

# DataLoader wraps the dataset in an iterator that yields mini-batches:
#   batch_size=4  -> each batch holds 4 images
#   shuffle=True  -> samples are reshuffled every epoch
#   num_workers=2 -> two worker subprocesses load data in parallel
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)
```
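Conceptually, with `shuffle=True` and `batch_size=4` the loader draws a fresh random permutation of the sample indices each epoch and cuts it into consecutive groups of four; because `drop_last` defaults to `False`, a final partial batch is kept. A simplified pure-Python model of that index bookkeeping, assuming default sampler settings (it ignores worker processes and collation, and `batch_indices` / `num_batches` are hypothetical helpers, not PyTorch APIs):

```python
import math
import random

def batch_indices(num_samples, batch_size, shuffle, seed=None):
    """Split sample indices into batches, keeping a smaller final batch.

    With shuffle=True the indices are permuted first; a real DataLoader
    draws a new permutation every epoch.
    """
    indices = list(range(num_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    return [indices[i:i + batch_size] for i in range(0, num_samples, batch_size)]

def num_batches(num_samples, batch_size):
    """Batches per epoch when the final partial batch is kept."""
    return math.ceil(num_samples / batch_size)

if __name__ == "__main__":
    print([len(b) for b in batch_indices(10, 4, shuffle=True, seed=0)])  # [4, 4, 2]
    # CIFAR10 has 50,000 training and 10,000 test images
    print(num_batches(50_000, 4), num_batches(10_000, 4))  # 12500 2500
```

For the configuration in the example, `len(trainloader)` should likewise report 12,500 batches per epoch.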

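```python
# Test set: same transform; shuffle=False keeps the evaluation order fixed
testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)
testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=2)

# Class names in CIFAR10 label order (label 0 = 'airplane', ..., 9 = 'truck')
classes = ('airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
```

Download result: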