Notes on a bug encountered during testing

A request returned 502 Bad Gateway (nginx/1.1.19). nginx itself had started normally, so I checked the back-end log and found this error:

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:361)

at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1081)

The stack trace shows that Dubbo cannot connect to ZooKeeper.

In ZooKeeper's bin directory, ./zkServer.sh start appears to start the server normally,

but ./zkServer.sh status then reports "Error contacting service. It is probably not running.":

ZooKeeper JMX enabled by default

Using config: /opt/zk1/zookeeper-3.4.10/bin/../conf/zoo.cfg

Error contacting service. It is probably not running.

Check the service with systemctl status zk (the status dot is gray):

● zk.service - Zookeeper Service

Loaded: loaded (/etc/systemd/system/zk.service; enabled; vendor preset: enabled)

Active: activating (start-post) (Result: exit-code) since Mon 2021-10-11 20:17:54 CST; 2s ago

Process: 9433 ExecStop=/opt/zk1/zookeeper-3.4.10/bin/zkServer.sh stop (code=exited, status=0/SUCCESS)

Process: 9448 ExecStart=/opt/zk1/zookeeper-3.4.10/bin/zkServer.sh start (code=exited, status=0/SUCCESS)

Main PID: 9458 (code=exited, status=1/FAILURE); : 9485 (sleep)

Tasks: 1

Memory: 256.0K

CPU: 3.695s

CGroup: /system.slice/zk.service

└─control

└─9485 /bin/sleep 4

Oct 11 20:17:54 ubuntu systemd[1]: Starting Zookeeper Service...

Oct 11 20:17:54 ubuntu zkServer.sh[9448]: ZooKeeper JMX enabled by default

Oct 11 20:17:54 ubuntu zkServer.sh[9448]: Using config: /opt/zk1/zookeeper-3.4.10/bin/../conf/zoo.cfg

Oct 11 20:17:55 ubuntu zkServer.sh[9448]: Starting zookeeper ... STARTED

Oct 11 20:17:56 ubuntu systemd[1]: zk.service: Main process exited, code=exited, status=1/FAILURE

Try to start it with systemctl start zk:

Job for zk.service failed because the control process exited with error code. See "systemctl status zk.service" and "journalctl -xe" for details.

Check systemctl status zk again:

● zk.service - Zookeeper Service

Loaded: loaded (/etc/systemd/system/zk.service; enabled; vendor preset: enabled)

Active: activating (start-post) since Mon 2021-10-11 20:18:16 CST; 1s ago

Process: 9894 ExecStop=/opt/zk1/zookeeper-3.4.10/bin/zkServer.sh stop (code=exited, status=0/SUCCESS)

Process: 9910 ExecStart=/opt/zk1/zookeeper-3.4.10/bin/zkServer.sh start (code=exited, status=0/SUCCESS)

Main PID: 9920 (java); : 9953 (sleep)

Tasks: 26

Memory: 170.6M

CPU: 3.268s

CGroup: /system.slice/zk.service

├─9920 java -Dzookeeper.log.dir=. -Dzookeeper.root.logger=INFO,ROLLINGFILE -cp /opt/zk1/zookeeper-3.4.10/bin/../build/classes:/opt/zk1/zookeeper-3.4.10/bin/../build/lib/*.jar:/

└─control

└─9953 /bin/sleep 4

Oct 11 20:18:16 ubuntu systemd[1]: Starting Zookeeper Service...

Oct 11 20:18:16 ubuntu zkServer.sh[9910]: ZooKeeper JMX enabled by default

Oct 11 20:18:16 ubuntu zkServer.sh[9910]: Using config: /opt/zk1/zookeeper-3.4.10/bin/../conf/zoo.cfg

Oct 11 20:18:17 ubuntu zkServer.sh[9910]: Starting zookeeper ... STARTED

The status output still does not reveal the cause.

View the configuration file with less zoo.cfg to find the transaction log path:

dataLogDir=/opt/zk1/zookeeper-3.4.10/datalog
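Instead of paging through the file, the value can also be pulled out with a small helper. This is only a sketch: zk_cfg_value is a hypothetical function name, and it assumes the plain key=value layout shown above.

```shell
#!/bin/sh
# zk_cfg_value KEY FILE
# Hypothetical helper (not part of ZooKeeper): print the value of KEY from a
# key=value config file. Lines starting with "#" never match, so commented-out
# entries are ignored. If the key appears more than once, the last one is printed.
zk_cfg_value() {
    key=$1
    file=$2
    sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*//p" "$file" | tail -n 1
}

# Usage (path taken from the output above):
#   zk_cfg_value dataLogDir /opt/zk1/zookeeper-3.4.10/conf/zoo.cfg
```

The transaction logs sit in the version-2 subdirectory of the path this prints.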

Go to /opt/zk1/zookeeper-3.4.10/datalog/version-2. One log file looks damaged: log.220770 is 0 bytes, while every other log is 67108880 bytes:

-rw-r--r-- 1 root root 67108880 Sep 22 00:21 log.1ce8b6

-rw-r--r-- 1 root root 67108880 Sep 22 02:30 log.1e0dbe

-rw-r--r-- 1 root root 67108880 Sep 22 11:15 log.1e4a05

-rw-r--r-- 1 root root 67108880 Sep 22 20:26 log.1f407d

-rw-r--r-- 1 root root 67108880 Sep 23 02:30 log.20427c

-rw-r--r-- 1 root root 67108880 Sep 23 12:32 log.20ecc9

-rw-r--r-- 1 root root 0 Sep 23 12:33 log.220770

Delete this file with rm log.220770, then start the service again and check the status.
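A more cautious variant of the deletion step is to move empty log files aside rather than remove them, so they can be restored if the guess was wrong. The sketch below assumes GNU find (for -maxdepth) and that ZooKeeper is stopped while files are moved. Both function names are hypothetical.

```shell
#!/bin/sh
# find_empty_txn_logs DIR
# Hypothetical helper: list zero-byte transaction log files (log.*) that sit
# directly inside DIR.
find_empty_txn_logs() {
    find "$1" -maxdepth 1 -type f -name 'log.*' -size 0
}

# quarantine_empty_txn_logs DIR BACKUP_DIR
# Hypothetical helper: move every zero-byte log.* file from DIR into
# BACKUP_DIR instead of deleting it. Non-empty logs are left untouched.
quarantine_empty_txn_logs() {
    src=$1
    dest=$2
    mkdir -p "$dest" || return 1
    find "$src" -maxdepth 1 -type f -name 'log.*' -size 0 -exec mv {} "$dest"/ \;
}

# Usage (paths from this post; the backup directory is an assumption):
#   find_empty_txn_logs /opt/zk1/zookeeper-3.4.10/datalog/version-2
#   quarantine_empty_txn_logs /opt/zk1/zookeeper-3.4.10/datalog/version-2 /tmp/zk-empty-logs
```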

Run systemctl start zk, then systemctl status zk (the status dot is now green):

● zk.service - Zookeeper Service

Loaded: loaded (/etc/systemd/system/zk.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2021-10-11 20:19:30 CST; 5s ago

Process: 11032 ExecStop=/opt/zk1/zookeeper-3.4.10/bin/zkServer.sh stop (code=exited, status=0/SUCCESS)

Process: 11088 ExecStartPost=/bin/sleep 4 (code=exited, status=0/SUCCESS)

Process: 11047 ExecStart=/opt/zk1/zookeeper-3.4.10/bin/zkServer.sh start (code=exited, status=0/SUCCESS)

Main PID: 11057 (java)

Tasks: 28

Memory: 222.5M

CPU: 4.229s

CGroup: /system.slice/zk.service

└─11057 java -Dzookeeper.log.dir=. -Dzookeeper.root.logger=INFO,ROLLINGFILE -cp /opt/zk1/zookeeper-3.4.10/bin/../build/classes:/opt/zk1/zookeeper-3.4.10/bin/../build/lib/*.jar:

Oct 11 20:19:25 ubuntu systemd[1]: Starting Zookeeper Service...

Oct 11 20:19:25 ubuntu zkServer.sh[11047]: ZooKeeper JMX enabled by default

Oct 11 20:19:25 ubuntu zkServer.sh[11047]: Using config: /opt/zk1/zookeeper-3.4.10/bin/../conf/zoo.cfg

Oct 11 20:19:26 ubuntu zkServer.sh[11047]: Starting zookeeper ... STARTED

Oct 11 20:19:30 ubuntu systemd[1]: Started Zookeeper Service.

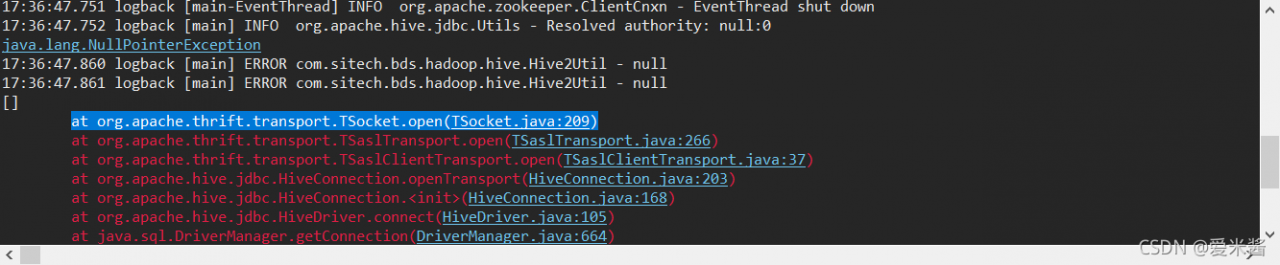

After restarting the back-end service, the error is gone.

In addition, other situations can lead to the same problem:

1. The firewall is still enabled and blocks the port

Ubuntu

CentOS

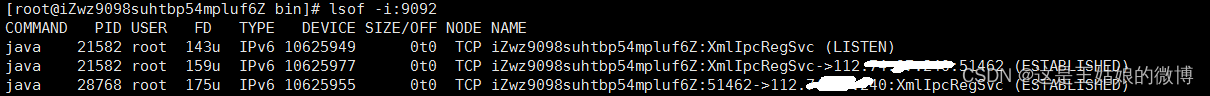

2. The port is already in use by another process

netstat -anp | grep <port number>

kill -9 <PID>
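To avoid reading the PID off the netstat output by eye, the listening process can be extracted with a small filter. This is a sketch that assumes the usual Linux netstat -anp column layout (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State, PID/Program name). pids_listening_on is a hypothetical helper name.

```shell
#!/bin/sh
# pids_listening_on PORT
# Hypothetical helper: read `netstat -anp`-style lines on stdin and print the
# PID of each process that is LISTENing on the given TCP port.
pids_listening_on() {
    awk -v port="$1" '
        $1 ~ /^tcp/ && $6 == "LISTEN" {
            # Local address looks like 0.0.0.0:2181 or :::2181; the port is
            # the part after the last colon.
            n = split($4, parts, ":")
            if (parts[n] == port) {
                # Last column looks like 9920/java; it is "-" when netstat
                # was run without enough privileges to see the owner.
                split($7, proc, "/")
                if (proc[1] ~ /^[0-9]+$/) print proc[1]
            }
        }' | sort -u
}

# Usage (2181 is ZooKeeper's default client port; use the clientPort value
# from your own zoo.cfg):
#   netstat -anp | pids_listening_on 2181
```

Check which process the PID belongs to before running kill -9 on it, since the port may be held by a ZooKeeper instance that is still healthy.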