1. For fixed length label and feature

Generate tfrecord data:

Multiple label samples, where the label contains 5

import os

import tensorflow as tf

import numpy as np

output_flie = str(os.path.dirname(os.getcwd()))+"/train.tfrecords"

with tf.python_io.TFRecordWriter(output_flie) as writer:

labels = np.array([[1,0,0,1,0],[0,1,0,0,1],[0,0,0,0,1],[1,0,0,0,0]])

features = np.array([[0,0,0,0,0,0],[1,1,1,1,1,2],[1,1,1,0,0,2],[0,0,0,0,1,9]])

for i in range(4):

label = labels[i]

feature = features[i]

example = tf.train.Example(features=tf.train.Features(feature={

"label": tf.train.Feature(int64_list=tf.train.Int64List(value=label)),

'feature': tf.train.Feature(int64_list=tf.train.Int64List(value=feature))

}))

writer.write(example.SerializeToString())

Parse tfrecord data:

import os

import tensorflow as tf

import numpy as np

def read_tf(output_flie):

filename_queue = tf.train.string_input_producer([output_flie])

reader = tf.TFRecordReader()

_, serialized_example = reader.read(filename_queue)

result = tf.parse_single_example(serialized_example,

features={

'label': tf.FixedLenFeature([5], tf.int64),

'feature': tf.FixedLenFeature([6], tf.int64),

})

feature = result['feature']

label = result['label']

return feature, label

output_flie = str(os.path.dirname(os.getcwd())) + "/train.tfrecords"

feature, label = read_tf(output_flie)

imageBatch, labelBatch = tf.train.batch([feature, label], batch_size=2, capacity=3)

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

coord = tf.train.Coordinator()

threads = tf.train.start_queue_runners(sess=sess, coord=coord)

print(1)

images, labels = sess.run([imageBatch, labelBatch])

print(images)

print(labels)

coord.request_stop()

coord.join(threads)

Output:

1

('----images: ', array([[0, 0, 0, 0, 0, 0],

[1, 1, 1, 1, 1, 2]]))

('----labels:', array([[1, 0, 0, 1, 0],

[0, 1, 0, 0, 1]]))

2. For variable length label and feature

Generate tfrecord

It is the same as the fixed length data generation method

import os

import tensorflow as tf

import numpy as np

train_TFfile = str(os.path.dirname(os.getcwd()))+"/hh.tfrecords"

writer = tf.python_io.TFRecordWriter(train_TFfile)

labels = [[1,2,3],[3,4],[5,2,6],[6,4,9],[9]]

features = [[2,5],[3],[5,8],[1,4],[5,9]]

for i in range(5):

label = labels[i]

print(label)

feature = features[i]

example = tf.train.Example(

features=tf.train.Features(

feature={'label': tf.train.Feature(int64_list=tf.train.Int64List(value=label)),

'feature': tf.train.Feature(int64_list=tf.train.Int64List(value=feature))}))

writer.write(example.SerializeToString())

writer.close()

Parsing tfrecord

The main changes are:

tf.VarLenFeature(tf.int64)

Unfinished to be continued

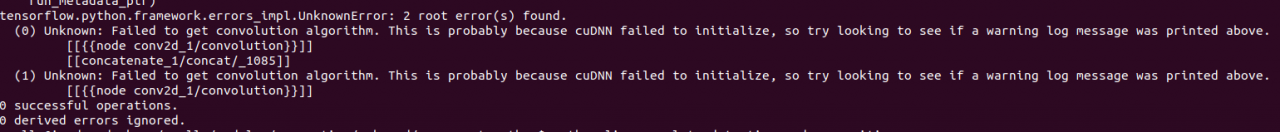

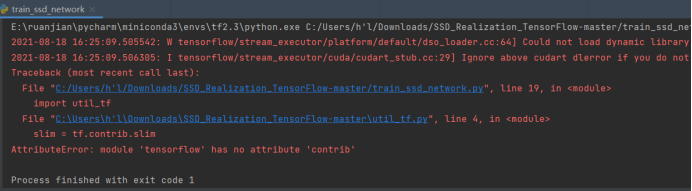

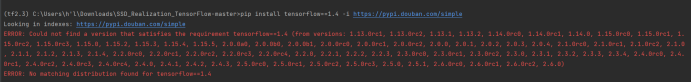

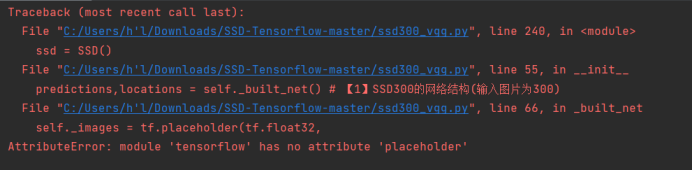

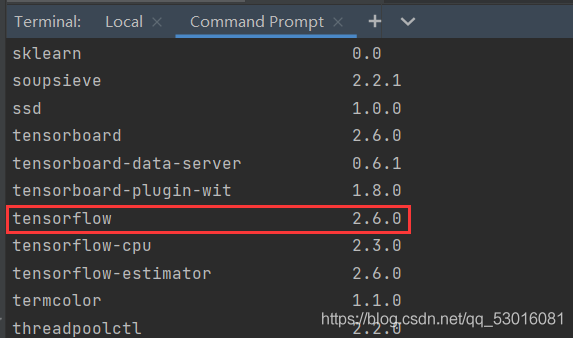

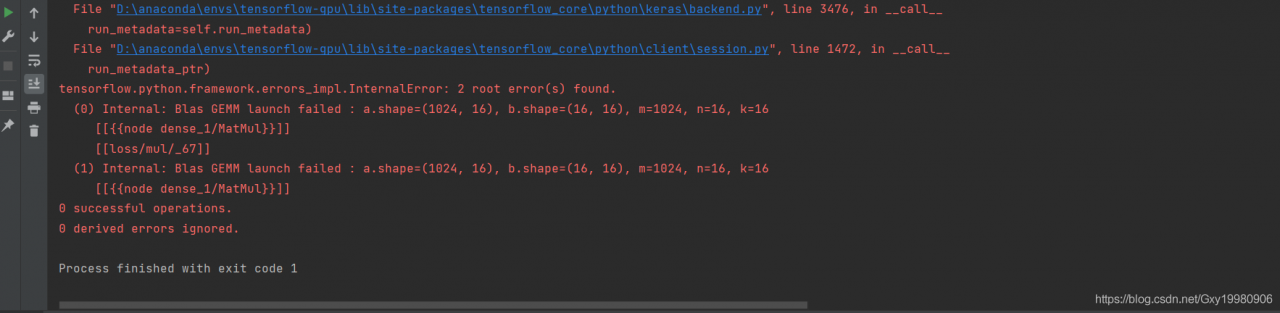

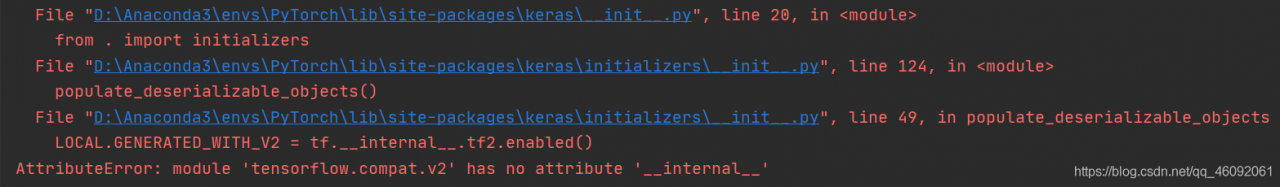

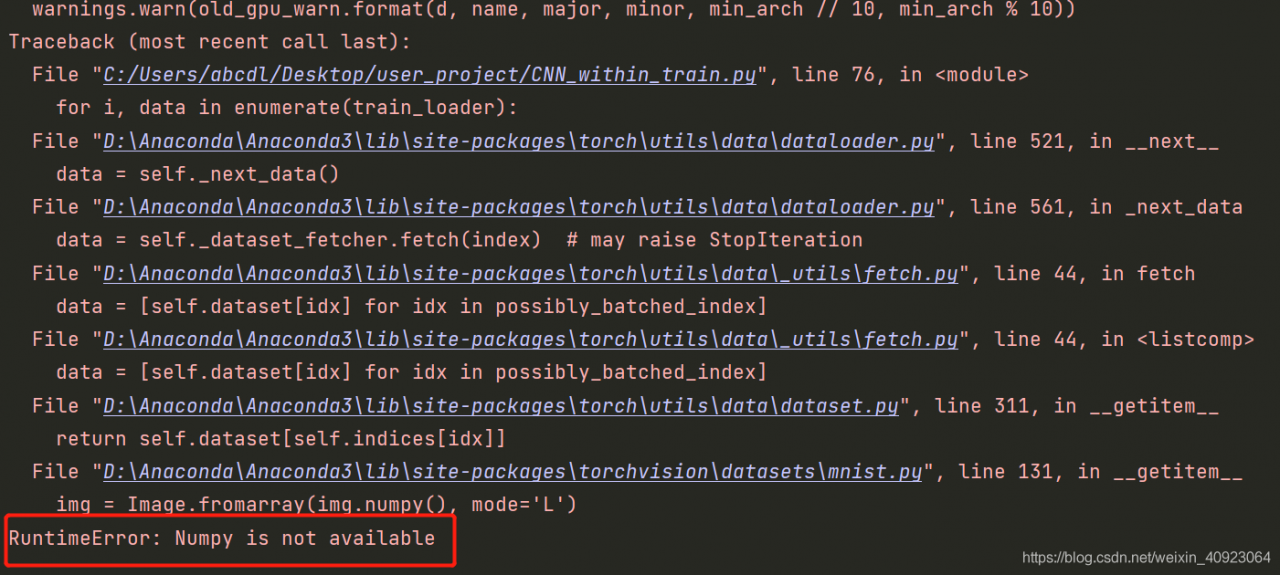

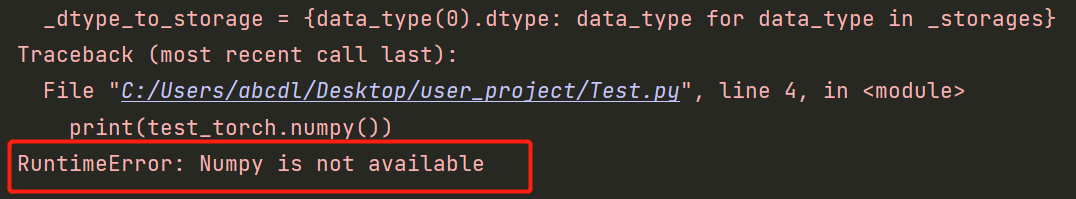

Common errors:

When the defined label dimension is different from the dimension during parsing, an error will be reported as follows:

Details of error reporting:

tensorflow.python.framework.errors_impl.InvalidArgumentError: Name: <unknown>, Key: label, Index: 0. Number of int64 values != expected. Values size: 1 but output shape: [3]

The size of the label is 1, but when used, it exceeds 1

Solution: when generating tfrecord, the length of label should be the same as that during parsing.