Error with from keras.preprocessing.text import Tokenizer: AttributeError: module 'tensorflow.compat.v2' has no attribute '__internal__'

In NLP code, import the Keras Tokenizer (the vocabulary mapper):

from keras.preprocessing.text import Tokenizer

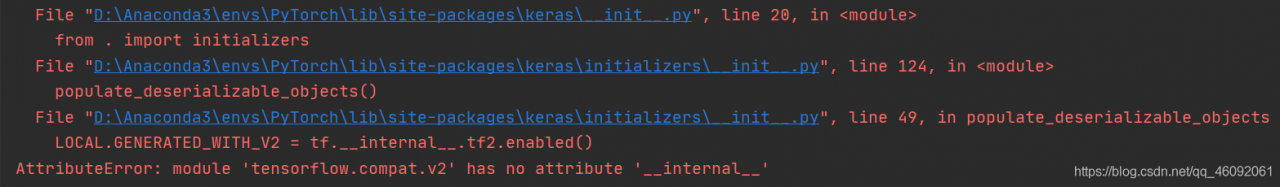

Running the code raises this error:

AttributeError: module 'tensorflow.compat.v2' has no attribute '__internal__'

I searched Baidu for this error for a long time and found only similar problems, not the same one. The fix is to change the import above to:

from tensorflow.keras.preprocessing.text import Tokenizer

That’s it!
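The original post does not say why the import fails. One plausible cause, stated here as an assumption, is that the standalone keras package and the installed tensorflow package are at mismatched versions, so keras looks for an internal TensorFlow attribute that this TensorFlow build does not provide. A minimal sketch for checking which versions are installed, using only the standard library (the helper name `installed_versions` is hypothetical):

```python
from importlib import metadata


def installed_versions(packages=("tensorflow", "keras")):
    """Return {package: version string or None} for each named distribution."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Not installed under this exact name. TensorFlow may also be
            # installed under a variant distribution name, which this
            # simple lookup does not cover.
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
```

If the two versions differ noticeably, importing through tensorflow.keras as shown above avoids the standalone package. Note that this import path may not exist in every TensorFlow release, so check it against the version you have installed.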
