After restarting the server, `nvidia-smi` can no longer communicate with the NVIDIA driver and fails with:

NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.

Solution: rebuild the NVIDIA kernel module with DKMS for the currently running kernel.

First, check the version number of the NVIDIA driver that was installed before:

ls /usr/src | grep nvidia

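The output lists the driver's source directory, e.g. `nvidia-460.73.01`. The part after `nvidia-` is the version number needed in the next step.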
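If you would rather not read the version off the listing by hand, the lookup above can be scripted. This is a minimal sketch, assuming the driver sources live in directories named `nvidia-<version>` under `/usr/src` as in the listing; the function name `nvidia_src_version` is hypothetical and not part of any NVIDIA or DKMS tool. If several driver versions are present, it prints the highest one.

```bash
#!/usr/bin/env bash
# Sketch: derive the DKMS version string from the driver source directory,
# e.g. /usr/src/nvidia-460.73.01 -> 460.73.01.
# nvidia_src_version is a hypothetical helper name used only for this example.
nvidia_src_version() {
  local src_dir="${1:-/usr/src}"   # directory to search, /usr/src by default
  local d
  local -a versions=()
  for d in "$src_dir"/nvidia-*; do
    [[ -d "$d" ]] || continue      # skip non-directories and an unmatched glob
    versions+=("${d##*/nvidia-}")  # keep only the part after "nvidia-"
  done
  ((${#versions[@]})) || return 1  # no nvidia-* source directory found
  # With several versions installed, print the highest (version-aware sort).
  printf '%s\n' "${versions[@]}" | sort -V | tail -n 1
}
```

For example, `ver=$(nvidia_src_version) && sudo dkms install -m nvidia -v "$ver"` feeds the detected version straight into the DKMS command.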

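Then install DKMS and use it to build and install the module for that version (replace `460.73.01` with the version you found):

sudo apt install dkms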

sudo dkms install -m nvidia -v 460.73.01
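The rebuild can also be wrapped in a small function so it is easy to rerun after the next kernel update. This is a sketch under stated assumptions: the function names `run` and `rebuild_nvidia_module` and the `DRY_RUN` switch are hypothetical; it assumes the kernel headers for the target kernel are installed (on Ubuntu, the `linux-headers-$(uname -r)` package), and it adds a `sudo modprobe nvidia` step to load the module, which the commands above do not include.

```bash
#!/usr/bin/env bash
# Sketch: rebuild and load the NVIDIA kernel module via DKMS.
# run, rebuild_nvidia_module and DRY_RUN are hypothetical names for this example;
# dkms, modprobe and nvidia-smi are the real tools being called.

# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

rebuild_nvidia_module() {
  local ver="$1"                    # driver version, e.g. from /usr/src/nvidia-<ver>
  local kernel="${2:-$(uname -r)}"  # default: the kernel that is running now
  if [[ -z "$ver" ]]; then
    echo "usage: rebuild_nvidia_module VERSION [KERNEL]" >&2
    return 2
  fi
  # Build and install the module for the chosen kernel; stop on failure.
  run sudo dkms install -m nvidia -v "$ver" -k "$kernel" || return
  # Load the freshly installed module, then check that the driver responds.
  run sudo modprobe nvidia || return
  run nvidia-smi
}
```

Running `DRY_RUN=1 rebuild_nvidia_module 460.73.01` prints the three commands without executing them, which is a cheap way to check the version and kernel before using `sudo`.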

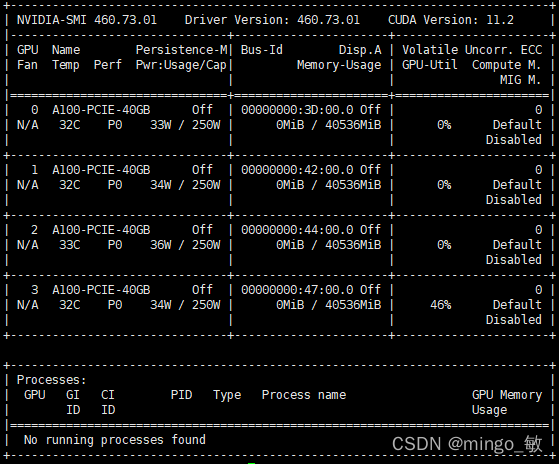

Finally, `nvidia-smi` works again and the familiar interface is back.