Error Message: 2022-03-22 15:55:57,000 (lifecycleSupervisor-1-0) [ERROR – org.apache.flume.source.taildir.ReliableTaildirEventReader.loadPositionFile(ReliableTaildirEventReader.java:147)] Failed loading positionFile: /opt/module/flume/taildir_position.json

use flume1.9 tildir source, memory channel, hdfs sink to write a configuration file, use in hdfs no file into, and then I look at the log file, found the above error

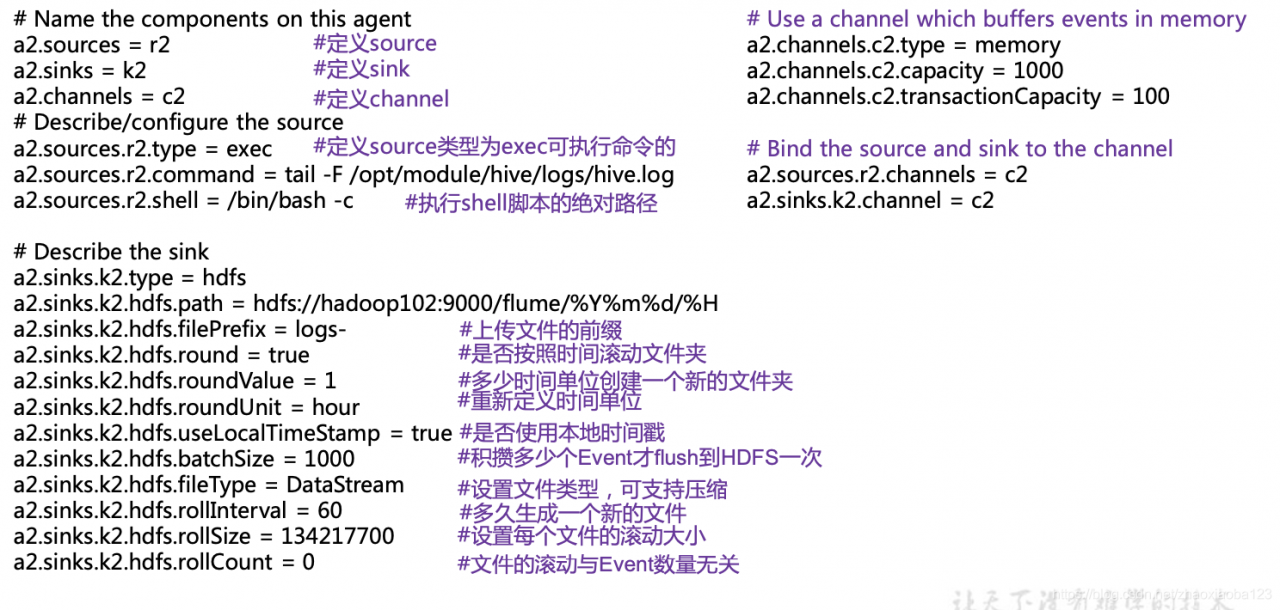

My profile

Profile:

a3.sources = r3

a3.sinks = k3

a3.channels = c3

a3.sources.r3.type = TAILDIR

a3.sources.r3.positionFile = /opt/module/flume/taildir_position.json

a3.sources.r3.filegroups = f1 f2

a3.sources.r3.filegroups.f1 = /opt/module/flume/files/.*log.*

a3.sources.r3.filegroups.f2 = /opt/module/flume/files2/.*file.*

# Describe the sink

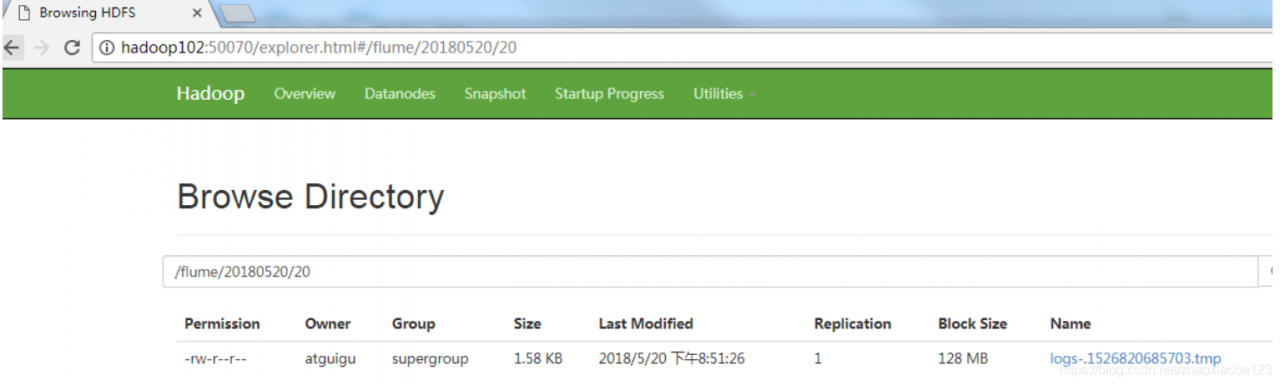

a3.sinks.k3.type = hdfs

a3.sinks.k3.hdfs.path = hdfs://hadoop102:8020/flume/test2/%Y%m%d/%H

#Prefix of the uploaded file

a3.sinks.k3.hdfs.filePrefix = upload-

# whether to scroll the folder according to time

a3.sinks.k3.hdfs.round = true

# how many time units to create a new folder

a3.sinks.k3.hdfs.roundValue = 1

# redefine the units of time

a3.sinks.k3.hdfs.roundUnit = hour

# whether to use the local timestamp

a3.sinks.k3.hdfs.useLocalTimeStamp = true

# accumulate how many Event before flush to HDFS once

a3.sinks.k3.hdfs.batchSize = 100

# set the file type, can support compression

a3.sinks.k3.hdfs.fileType = DataStream

# how often to generate a new file

a3.sinks.k3.hdfs.rollInterval = 600

# set the scrolling size of each file is about 128M

a3.sinks.k3.hdfs.rollSize = 134217700

# file scrolling and the number of Event has nothing to do with

a3.sinks.k3.hdfs.rollCount = 0

#Minimum number of redundancies

a3.sinks.k3.hdfs.minBlockReplicas = 1

# Use a channel which buffers events in memory

a3.channels.c3.type = memory

a3.channels.c3.capacity = 1000

a3.channels.c3.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r3.channels = c3

a3.sinks.k3.channel = c3

Solution:

Modify

a3.sources.r3.positionFile = /opt/module/flume/taildir_position.json,

to

a3.sources.r3.positionFile = /opt/module/flume/taildir.json

a3.sources = r3

a3.sinks = k3

a3.channels = c3

a3.sources.r3.type = TAILDIR

a3.sources.r3.positionFile = /opt/module/flume/taildir_position.json

a3.sources.r3.filegroups = f1 f2

a3.sources.r3.filegroups.f1 = /opt/module/flume/files/.*log.*

a3.sources.r3.filegroups.f2 = /opt/module/flume/files2/.*file.*

# Describe the sink

a3.sinks.k3.type = hdfs

a3.sinks.k3.hdfs.path = hdfs://hadoop102:8020/flume/test2/%Y%m%d/%H

#Prefix of the uploaded file

a3.sinks.k3.hdfs.filePrefix = upload-

# whether to scroll the folder according to time

a3.sinks.k3.hdfs.round = true

# how many time units to create a new folder

a3.sinks.k3.hdfs.roundValue = 1

# redefine the units of time

a3.sinks.k3.hdfs.roundUnit = hour

# whether to use the local timestamp

a3.sinks.k3.hdfs.useLocalTimeStamp = true

# accumulate how many Event before flush to HDFS once

a3.sinks.k3.hdfs.batchSize = 100

# set the file type, can support compression

a3.sinks.k3.hdfs.fileType = DataStream

# how often to generate a new file

a3.sinks.k3.hdfs.rollInterval = 600

# set the scrolling size of each file is about 128M

a3.sinks.k3.hdfs.rollSize = 134217700

# file scrolling and the number of Event has nothing to do with

a3.sinks.k3.hdfs.rollCount = 0

#Minimum number of redundancies

a3.sinks.k3.hdfs.minBlockReplicas = 1

# Use a channel which buffers events in memory

a3.channels.c3.type = memory

a3.channels.c3.capacity = 1000

a3.channels.c3.transactionCapacity = 100

# Bind the source and sink to the channel

a3.sources.r3.channels = c3

a3.sinks.k3.channel = c3