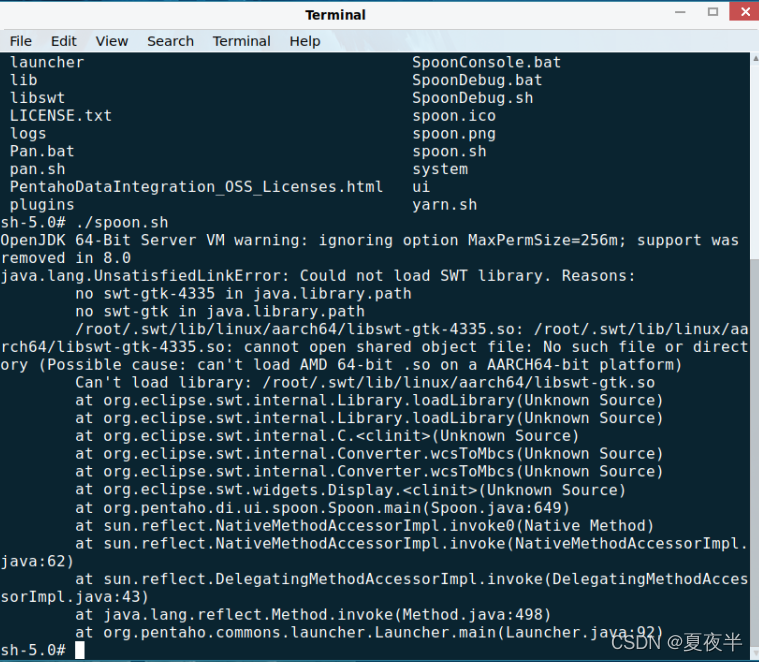

Because of work requirements, I have recently been doing Kettle-related work. I did not know in advance that the deployment environment was Linux (Kylin), and had been building and running transformations and jobs on Windows all along. When I recently deployed to production, the problem surfaced: starting Kettle in that environment reported the following error:

Solution.

According to the error message, the SWT library could not be loaded, so I searched online and downloaded swt.jar. SWT jars are platform-specific, so it has to be the build for Linux (GTK) on aarch64.

Put the downloaded jar under the Kettle installation path: data-integration/libswt/linux/aarch64/

If the aarch64 folder does not exist, create it and put the jar there.
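Creating the folder and copying the jar can be scripted. This is a minimal sketch: the data-integration path and the downloaded jar are arguments you supply, the function name is hypothetical, and storing the file as swt.jar simply follows the name used in this post.

```bash
#!/bin/bash
# Sketch: copy a downloaded Linux/aarch64 SWT jar into Kettle's libswt tree.
# $1: the data-integration directory; $2: the downloaded jar.
install_swt_jar() {
  local kettle_home="$1" swt_jar="$2"
  local target="$kettle_home/libswt/linux/aarch64"

  # Refuse to run against a directory that does not look like a Kettle install.
  if [ ! -d "$kettle_home/libswt/linux" ]; then
    echo "no libswt/linux under $kettle_home" >&2
    return 1
  fi
  if [ ! -f "$swt_jar" ]; then
    echo "SWT jar not found: $swt_jar" >&2
    return 1
  fi
  # Create the aarch64 folder if it is missing (no-op if it already exists).
  mkdir -p "$target"
  # Keep the file name swt.jar, as used in this post.
  cp "$swt_jar" "$target/swt.jar"
}
```

Usage, with hypothetical paths: `install_swt_jar /opt/data-integration ~/swt-aarch64.jar`. The directory check is there so a mistyped path fails loudly instead of silently creating a new libswt tree somewhere else.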

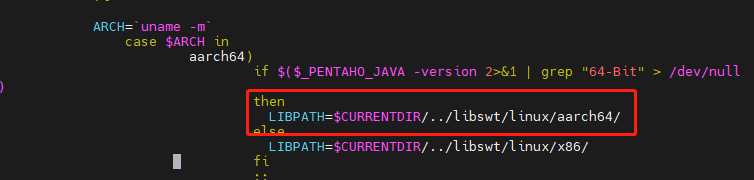

Then modify the configuration in spoon.sh:

Once that is done, run ./spoon.sh again and it starts normally.
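The exact spoon.sh edit is not reproduced in this post. In the spoon.sh versions I have seen, the Linux branch picks the SWT folder with a case statement on `uname -m` (setting a variable such as LIBPATH), and a machine that reports aarch64 has no matching entry; the fix is to add an aarch64 branch that points at libswt/linux/aarch64. The function below is a hypothetical, stand-alone sketch of that mapping, not a copy of spoon.sh, so compare it with the case block in your own file before editing.

```bash
#!/bin/bash
# Sketch of the architecture -> SWT folder mapping; swt_libdir is hypothetical
# and only mirrors the kind of case block found in spoon.sh.
# $1: architecture string (defaults to the output of uname -m).
swt_libdir() {
  local arch="${1:-$(uname -m)}"
  case "$arch" in
    x86_64)   echo "libswt/linux/x86_64" ;;
    i[3-6]86) echo "libswt/linux/x86" ;;
    aarch64)  echo "libswt/linux/aarch64" ;;  # the branch added for ARM servers
    *)
      echo "unsupported Linux platform: $arch" >&2
      return 1
      ;;
  esac
}
```

Running `swt_libdir` without an argument uses the current machine's `uname -m`, so on the Kylin ARM server it should print libswt/linux/aarch64.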


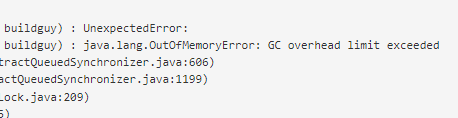

[Solved] Kettle Error: GC overhead limit exceeded

Running a Kettle job in production reports the following error:

java.lang.OutOfMemoryError: GC overhead limit exceeded

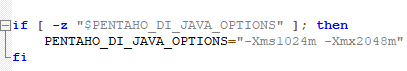

The cause is that the memory settings in the local test environment differ from those in production, so the memory configured in production needs to be changed.

The memory set in Spoon.bat (Windows) and spoon.sh (Linux) is too small. It can be raised to about 1/4 of the machine's memory; for example, on a machine with 16 GB of RAM, set it to 4 GB.

-Xms: initial heap size.

-Xmx: maximum heap size.

-Xms and -Xmx are often set to the same value. Otherwise the JVM starts with a smaller heap and has to grow (and later shrink) it as demand changes, and this resizing adds garbage-collection pauses that can make the program run less stably.
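On the Linux side, the 1/4 rule can be computed instead of hard-coded. A minimal sketch, assuming your spoon.sh only falls back to its built-in -Xms/-Xmx defaults when the PENTAHO_DI_JAVA_OPTIONS environment variable is empty (check your copy; if it does not, edit the values in spoon.sh directly). The function names are hypothetical.

```bash
#!/bin/bash
# Sketch: size the Kettle heap at 1/4 of physical memory.

# $1: total memory in kB, as in the MemTotal line of /proc/meminfo.
# Prints the heap size in MB.
quarter_heap_mb() {
  echo $(( $1 / 1024 / 4 ))
}

# Builds JVM options from this machine's MemTotal (Linux only).
kettle_java_opts() {
  local total_kb heap_mb
  total_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
  heap_mb=$(quarter_heap_mb "$total_kb")
  # Same value for -Xms and -Xmx, so the heap is never resized at runtime.
  echo "-Xms${heap_mb}m -Xmx${heap_mb}m"
}
```

Run `export PENTAHO_DI_JAVA_OPTIONS="$(kettle_java_opts)"` before starting Kettle. On a 16 GB machine this yields a value slightly under 4096m, because MemTotal is a little below the nominal RAM size. Spoon.bat on Windows needs the equivalent change by hand.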

Installing an additional stage library in StreamSets: the CDH 6.3.0 package fails with REST API call error: java.io.EOFException

Versions:

StreamSets 3.16.1 (core)

CDH 6.3.2

1. Problem

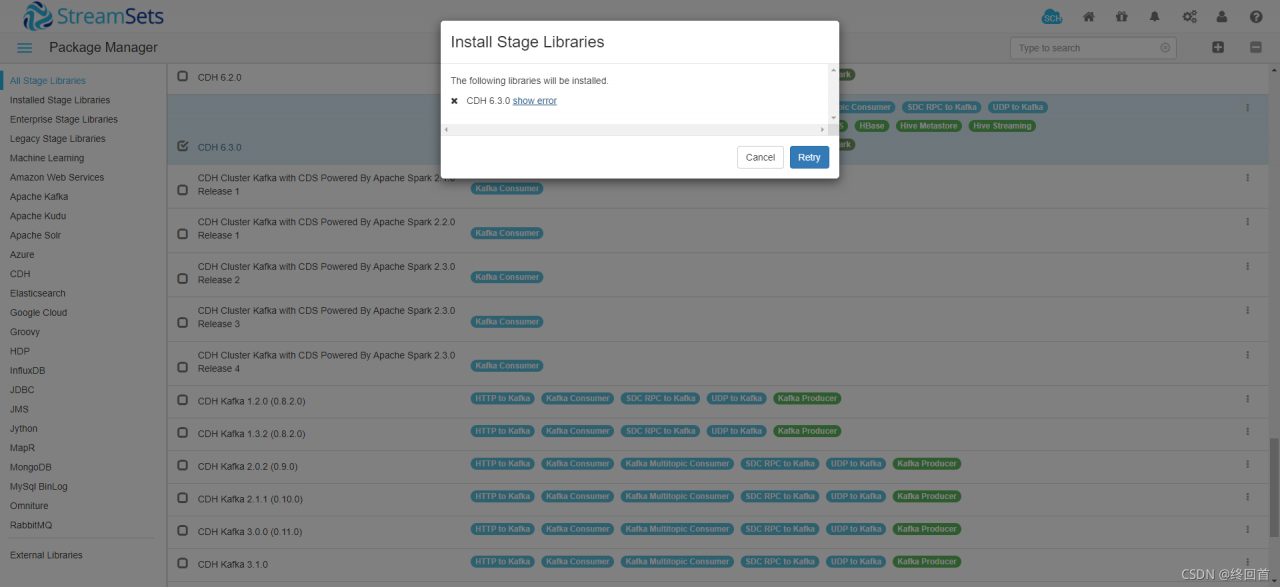

The StreamSets installation package is streamsets-datacollector-core-3.16.1.tgz. After installing it, downloading the CDH 6.3 stage library reports an error.

(1) Steps

Installing the CDH 6.3.0 stage library through the StreamSets UI reports an error. Click Show Error to see the details.

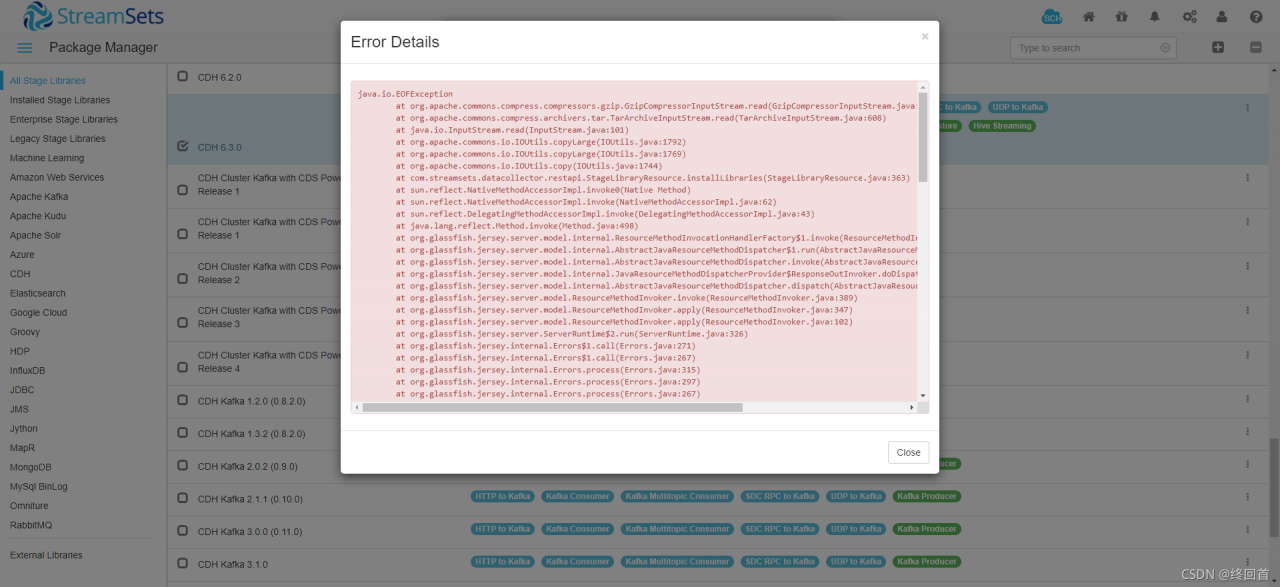

(2) Full error output

```text
java.io.EOFException
at org.apache.commons.compress.compressors.gzip.GzipCompressorInputStream.read(GzipCompressorInputStream.java:303)
at org.apache.commons.compress.archivers.tar.TarArchiveInputStream.read(TarArchiveInputStream.java:608)
at java.io.InputStream.read(InputStream.java:101)
at org.apache.commons.io.IOUtils.copyLarge(IOUtils.java:1792)
at org.apache.commons.io.IOUtils.copyLarge(IOUtils.java:1769)
at org.apache.commons.io.IOUtils.copy(IOUtils.java:1744)
at com.streamsets.datacollector.restapi.StageLibraryResource.installLibraries(StageLibraryResource.java:363)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.glassfish.jersey.server.model.internal.ResourceMethodInvocationHandlerFactory$1.invoke(ResourceMethodInvocationHandlerFactory.java:81)
at org.glassfish.jersey.server.model.internal.AbstractJavaResourceMethodDispatcher$1.run(AbstractJavaResourceMethodDispatcher.java:144)
at org.glassfish.jersey.server.model.internal.AbstractJavaResourceMethodDispatcher.invoke(AbstractJavaResourceMethodDispatcher.java:161)
at org.glassfish.jersey.server.model.internal.JavaResourceMethodDispatcherProvider$ResponseOutInvoker.doDispatch(JavaResourceMethodDispatcherProvider.java:160)
at org.glassfish.jersey.server.model.internal.AbstractJavaResourceMethodDispatcher.dispatch(AbstractJavaResourceMethodDispatcher.java:99)
at org.glassfish.jersey.server.model.ResourceMethodInvoker.invoke(ResourceMethodInvoker.java:389)
at org.glassfish.jersey.server.model.ResourceMethodInvoker.apply(ResourceMethodInvoker.java:347)
at org.glassfish.jersey.server.model.ResourceMethodInvoker.apply(ResourceMethodInvoker.java:102)
at org.glassfish.jersey.server.ServerRuntime$2.run(ServerRuntime.java:326)
at org.glassfish.jersey.internal.Errors$1.call(Errors.java:271)
at org.glassfish.jersey.internal.Errors$1.call(Errors.java:267)
at org.glassfish.jersey.internal.Errors.process(Errors.java:315)
at org.glassfish.jersey.internal.Errors.process(Errors.java:297)
at org.glassfish.jersey.internal.Errors.process(Errors.java:267)
at org.glassfish.jersey.process.internal.RequestScope.runInScope(RequestScope.java:317)
at org.glassfish.jersey.server.ServerRuntime.process(ServerRuntime.java:305)
at org.glassfish.jersey.server.ApplicationHandler.handle(ApplicationHandler.java:1154)
at org.glassfish.jersey.servlet.WebComponent.serviceImpl(WebComponent.java:473)
at org.glassfish.jersey.servlet.WebComponent.service(WebComponent.java:427)
at org.glassfish.jersey.servlet.ServletContainer.service(ServletContainer.java:388)
at org.glassfish.jersey.servlet.ServletContainer.service(ServletContainer.java:341)
at org.glassfish.jersey.servlet.ServletContainer.service(ServletContainer.java:228)
at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:760)
at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1617)
at com.streamsets.datacollector.http.GroupsInScopeFilter.lambda$doFilter$0(GroupsInScopeFilter.java:82)
at com.streamsets.datacollector.security.GroupsInScope.execute(GroupsInScope.java:34)
at com.streamsets.datacollector.http.GroupsInScopeFilter.doFilter(GroupsInScopeFilter.java:81)
at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1604)
at com.streamsets.datacollector.restapi.rbean.rest.RestResourceContextFilter.doFilter(RestResourceContextFilter.java:42)
at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1604)
at org.eclipse.jetty.servlets.CrossOriginFilter.handle(CrossOriginFilter.java:310)
at org.eclipse.jetty.servlets.CrossOriginFilter.doFilter(CrossOriginFilter.java:264)
at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1604)
at com.streamsets.datacollector.http.LocaleDetectorFilter.doFilter(LocaleDetectorFilter.java:39)
at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1604)
at org.eclipse.jetty.servlets.HeaderFilter.doFilter(HeaderFilter.java:117)
at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1604)
at com.streamsets.pipeline.http.MDCFilter.doFilter(MDCFilter.java:47)
at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1604)
at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:545)
at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:143)
at org.eclipse.jetty.server.handler.gzip.GzipHandler.handle(GzipHandler.java:717)
at org.eclipse.jetty.security.SecurityHandler.handle(SecurityHandler.java:501)
at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)
at org.eclipse.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:235)
at org.eclipse.jetty.server.session.SessionHandler.doHandle(SessionHandler.java:1592)
at org.eclipse.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233)
at org.eclipse.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1296)
at org.eclipse.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:188)
at org.eclipse.jetty.servlet.ServletHandler.doScope(ServletHandler.java:485)
at org.eclipse.jetty.server.session.SessionHandler.doScope(SessionHandler.java:1562)
at org.eclipse.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:186)
at org.eclipse.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1211)
at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)
at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)
at org.eclipse.jetty.rewrite.handler.RewriteHandler.handle(RewriteHandler.java:322)
at org.eclipse.jetty.server.handler.HandlerCollection.handle(HandlerCollection.java:146)
at org.eclipse.jetty.server.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:221)
at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)
at org.eclipse.jetty.server.Server.handle(Server.java:500)
at com.streamsets.lib.security.http.LimitedMethodServer.handle(LimitedMethodServer.java:41)
at org.eclipse.jetty.server.HttpChannel.lambda$handle$1(HttpChannel.java:386)
at org.eclipse.jetty.server.HttpChannel.dispatch(HttpChannel.java:562)
at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:378)
at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:270)
at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:311)
at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:103)
at org.eclipse.jetty.io.ChannelEndPoint$2.run(ChannelEndPoint.java:117)
at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:336)
at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:313)
at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:171)
at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:129)
at org.eclipse.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:388)
at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:806)
at org.eclipse.jetty.util.thread.QueuedThreadPool$Runner.run(QueuedThreadPool.java:938)
at java.lang.Thread.run(Thread.java:748)
```

(3) Key errors in sdc.log

```text
2021-11-02 11:02:04,278 [user:admin] [pipeline:] [runner:] [thread:webserver-48] [stage:] INFO StageLibraryResource - Installing stage library streamsets-datacollector-cdh_6_3-lib from http://archives.streamsets.com/datacollector/3.16.1/tarball/streamsets-datacollector-cdh_6_3-lib-3.16.1.tgz
2021-11-02 11:21:13,324 [user:admin] [pipeline:] [runner:] [thread:webserver-48] [stage:] ERROR ExceptionToHttpErrorProvider - REST API call error: java.io.EOFException
java.io.EOFException
at org.apache.commons.compress.compressors.gzip.GzipCompressorInputStream.read(GzipCompressorInputStream.java:303)
at org.apache.commons.compress.archivers.tar.TarArchiveInputStream.read(TarArchiveInputStream.java:608)
at java.io.InputStream.read(InputStream.java:101)
at org.apache.commons.io.IOUtils.copyLarge(IOUtils.java:1792)
at org.apache.commons.io.IOUtils.copyLarge(IOUtils.java:1769)
```
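The exception is thrown in GzipCompressorInputStream.read, i.e. while SDC was decompressing the downloaded archive, and the log shows about 19 minutes between the start of the download (11:02:04) and the error (11:21:13). That pattern usually points to an archive cut off during a slow download rather than to a configuration problem. A minimal sketch for checking a tarball you download yourself (the function name is hypothetical; gzip -t and tar -tzf are standard tools):

```bash
#!/bin/bash
# Sketch: succeed only if a .tgz file decompresses and lists completely.
# $1: path to the downloaded archive.
tgz_is_complete() {
  local archive="$1"
  # gzip -t tests the compressed stream without writing output and fails
  # with "unexpected end of file" on a truncated download.
  gzip -t "$archive" 2>/dev/null || return 1
  # tar -tzf additionally walks the tar entries inside.
  tar -tzf "$archive" > /dev/null 2>&1
}
```

For example, `curl -fLO http://archives.streamsets.com/datacollector/3.16.1/tarball/streamsets-datacollector-cdh_6_3-lib-3.16.1.tgz` followed by `tgz_is_complete streamsets-datacollector-cdh_6_3-lib-3.16.1.tgz && echo complete`. A non-zero exit status means the file is truncated or corrupt and should be downloaded again.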

2. Diagnosis

(1) Idea A

According to the log, SDC downloads streamsets-datacollector-cdh_6_3-lib from http://archives.streamsets.com/datacollector/3.16.1/tarball/streamsets-datacollector-cdh_6_3-lib-3.16.1.tgz.

Opening this address in a browser works, so I tried downloading the package manually and putting it in the target directory.

Connecting to the server over SSH, I found the network very slow, so I downloaded the package on a PC instead. After decompressing it, the jar files are under streamsets-datacollector-3.16.1\streamsets-libs\streamsets-datacollector-cdh_6_3-lib\lib. I copied them into the corresponding directory of the StreamSets installation, streamsets-datacollector-3.16.1/streamsets-libs/streamsets-datacollector-cdh_6_3-lib/lib, but the library still did not appear in the list of installed packages.

This approach did not work. Possibly the installation process writes some other configuration besides the jar files, which is hard to reproduce by hand. So I changed approach.

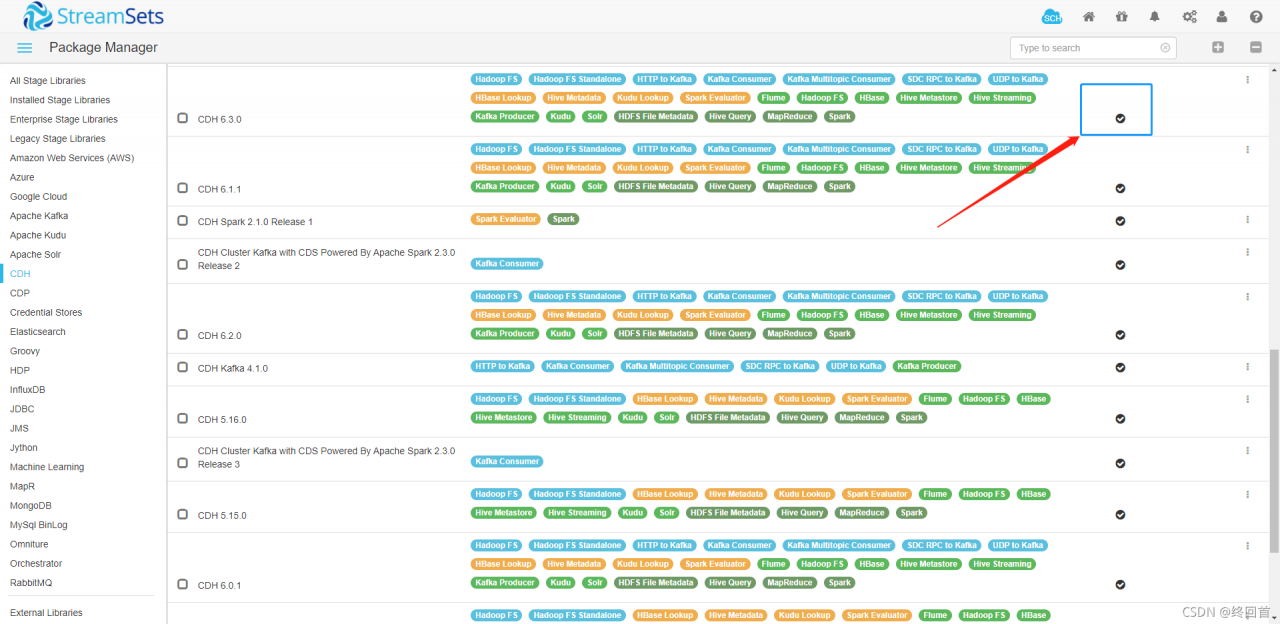

(2) Idea B (works)

The installation package used here is the core version, so I reinstalled with the full version instead: download streamsets-datacollector-all-3.22.3.tgz and follow the official documentation for the installation steps.

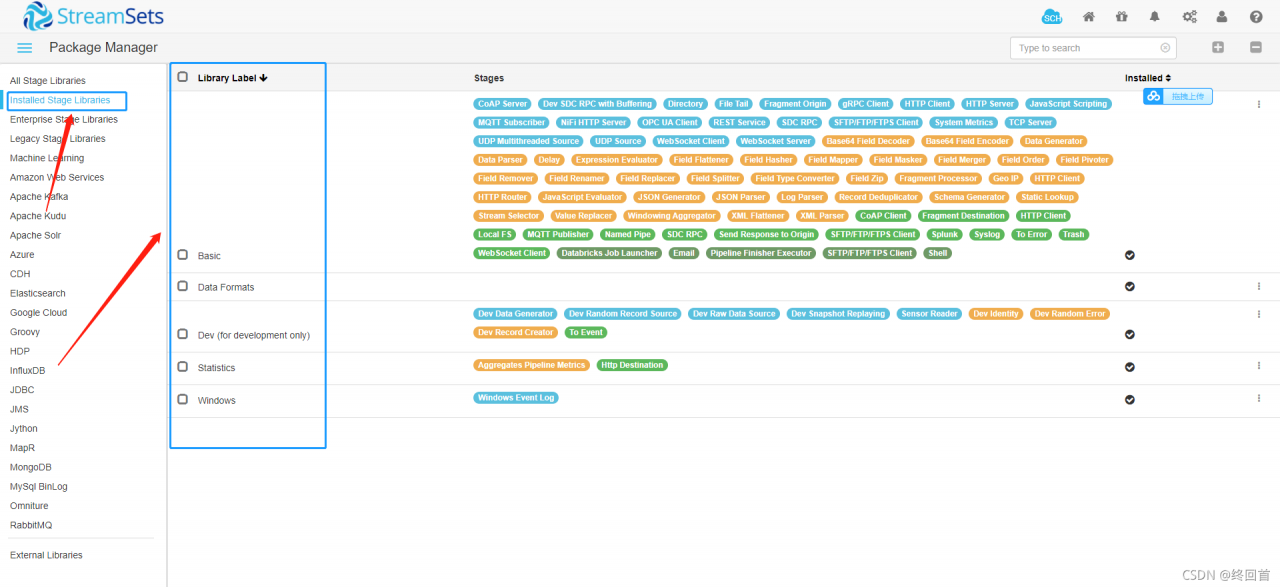

After reinstalling, the required library is already installed.

Reference material:

StreamSets Data Collector official documentation

Pitfall: Sqoop error tool.ExportTool: Error during export

@To live better

The error printed on the console:

```text
19/04/19 20:17:09 ERROR mapreduce.ExportJobBase: Export job failed!
19/04/19 20:17:09 ERROR tool.ExportTool: Error during export:
Export job failed!
at org.apache.sqoop.mapreduce.ExportJobBase.runExport(ExportJobBase.java:445)
at org.apache.sqoop.manager.MySQLManager.upsertTable(MySQLManager.java:145)
at org.apache.sqoop.tool.ExportTool.exportTable(ExportTool.java:73)
at org.apache.sqoop.tool.ExportTool.run(ExportTool.java:99)
at org.apache.sqoop.Sqoop.run(Sqoop.java:147)
at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)
at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)
at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)
at org.apache.sqoop.Sqoop.main(Sqoop.java:252)
```

Key point: this error usually means that the MySQL and Hive fields do not correspond, or that the data formats differ.

Solution:

Step 1: Check whether the MySQL and Hive table structures (field names and data types) match.

Step 2: Check whether any data was imported. If nothing was imported, change the time-type columns in MySQL and Hive to string or varchar as part of step 1. If data was imported but it is incomplete or wrong, your column types most likely do not match the actual data; the following steps cover the common causes.

Step 3: When importing from MySQL into Hive, be sure to check whether your data contains line breaks, which Hive uses as the default row delimiter when creating tables.
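Comparing column names and their order can be automated (order matters because the export maps fields by position). A minimal sketch, assuming you have dumped each table's columns into a text file whose lines start with the column name; the function names are hypothetical. Types are left out because MySQL and Hive spell them differently (varchar(64) vs string), so compare those by eye.

```bash
#!/bin/bash
# Sketch: compare the column names (and their order) of two tables.

# Print lower-cased column names, stopping at the first blank or '#' line
# (for a partitioned table, where Hive's DESCRIBE output starts its
# partition section).
column_names() {
  tr '[:upper:]' '[:lower:]' < "$1" | awk '/^#/ || !NF { exit } { print $1 }'
}

# Returns 0 if both lists match; otherwise diff prints the differences.
compare_columns() {
  local left right rc=0
  left=$(mktemp) && right=$(mktemp) || return 2
  column_names "$1" > "$left"
  column_names "$2" > "$right"
  diff "$left" "$right" || rc=$?
  rm -f "$left" "$right"
  return "$rc"
}
```

The inputs could come from `mysql -N -e "SELECT COLUMN_NAME, COLUMN_TYPE FROM information_schema.COLUMNS WHERE TABLE_SCHEMA='mysql_DB' AND TABLE_NAME='mysql_Table' ORDER BY ORDINAL_POSITION" > mysql.txt` and `hive -S -e "DESCRIBE hive_DB.hive_Table" > hive.txt`; check that the Hive output really has the layout assumed above before relying on the result.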

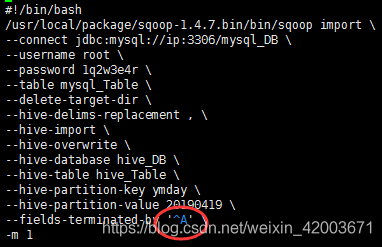

```bash
#!/bin/bash
# Import a MySQL table into a Hive partition. --hive-delims-replacement replaces
# \n, \r and \01 inside string fields with "," so they cannot break rows or fields.
/usr/local/package/sqoop-1.4.7.bin/bin/sqoop import \
  --connect jdbc:mysql://ip:3306/mysql_DB \
  --username root \
  --password 1q2w3e4r \
  --table mysql_Table \
  --delete-target-dir \
  --hive-delims-replacement , \
  --hive-import \
  --hive-overwrite \
  --hive-database hive_DB \
  --hive-table hive_Table \
  --hive-partition-key ymday \
  --hive-partition-value 20190419 \
  --fields-terminated-by '\t' \
  -m 1
```

Step 4: When importing from MySQL into Hive, also check whether your data contains the field separator commonly used when creating Hive tables.
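Both problems from steps 3 and 4 show up as rows with the wrong number of fields: an embedded line break splits one row into rows with too few fields, and an embedded separator gives a row too many. A minimal sketch that reports such rows in a delimited text file; the function name is hypothetical and you supply the separator and the expected column count.

```bash
#!/bin/bash
# Sketch: print every row whose field count differs from the expected one.
# $1: file ("-" for stdin); $2: field separator; $3: expected number of fields.
# Returns 1 if any row is off, 0 otherwise.
check_field_counts() {
  local file="$1" sep="$2" expected="$3"
  awk -F"$sep" -v n="$expected" '
    NF != n { printf "line %d: %d fields\n", NR, NF; bad = 1 }
    END     { exit bad }
  ' "$file"
}
```

For the tab-separated import above, something like `check_field_counts part-m-00000 "$(printf '\t')" 5` on a file copied out of the Hive table directory would list the suspicious rows (the file name and column count here are illustrative).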

Step 5: When exporting from Hive to MySQL, tables with many empty columns can sometimes trigger the same error. In that case, add --input-null-string and --input-null-non-string to the Sqoop command, as in the following script.

Note that ^A must be a real Ctrl-A character: in vi on Linux, type it with Ctrl+V followed by Ctrl+A. Copying the ^A shown below will not work.

```bash
#!/bin/bash
# Export one Hive partition to MySQL, updating rows matched on "name" and
# inserting new ones. Hive writes NULL as \N in text files, hence the
# two --input-null-* options.
/usr/local/package/sqoop-1.4.7.bin/bin/sqoop export \
  --connect "jdbc:mysql://ip:3306/report_db?useUnicode=true&characterEncoding=utf-8" \
  --username root \
  --password 1q2w3e4r \
  --table mysql_table \
  --columns name,age \
  --update-key name \
  --update-mode allowinsert \
  --input-null-string '\\N' \
  --input-null-non-string '\\N' \
  --export-dir "/hive/warehouse/dw_db.db/hive_table/ymday=20190419/*" \
  --input-fields-terminated-by '^A' \
  -m 1
```
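Before running the export, it can help to look at what the Hive files actually contain. A minimal sketch that reads the first row from standard input and reports how many ^A-separated fields it has and how many of them are \N, the marker Hive writes for NULL in text files and the value the --input-null-* options above are meant to match. The function name is hypothetical.

```bash
#!/bin/bash
# Sketch: summarize the first row of a Hive text file read from stdin.
# Prints "fields=<count> nulls=<count of \N values>".
first_row_summary() {
  local sep
  sep=$(printf '\001')   # Hive's default field separator, ^A
  head -n 1 | awk -F"$sep" '{
    nulls = 0
    for (i = 1; i <= NF; i++) if ($i == "\\N") nulls++
    printf "fields=%d nulls=%d\n", NF, nulls
  }'
}
```

For the export above, `hdfs dfs -cat "/hive/warehouse/dw_db.db/hive_table/ymday=20190419/*" | first_row_summary` should report fields=2, matching `--columns name,age`; any other count suggests the separator or column list does not match the data.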
