Question

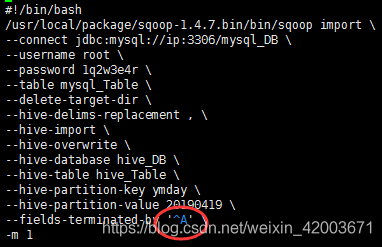

When importing Oracle data with Sqoop, the job failed with the following error:

INFO mapreduce.Job: Task Id : attempt_1646802944907_15460_m_000000_1, Status : FAILED

Error: java.io.IOException: SQLException in nextKeyValue

at org.apache.sqoop.mapreduce.db.DBRecordReader.nextKeyValue(DBRecordReader.java:275)

at org.apache.hadoop.mapred.MapTask$NewTrackingRecordReader.nextKeyValue(MapTask.java:568)

at org.apache.hadoop.mapreduce.task.MapContextImpl.nextKeyValue(MapContextImpl.java:80)

at org.apache.hadoop.mapreduce.lib.map.WrappedMapper$Context.nextKeyValue(WrappedMapper.java:91)

at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:145)

at org.apache.sqoop.mapreduce.AutoProgressMapper.run(AutoProgressMapper.java:64)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:799)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:174)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1875)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:168)

Caused by: java.sql.SQLException: ORA-24920: column size too large for client

Reason

Imports from other databases with Sqoop had worked normally; the problem only appeared when importing from a new database. The first step was to check what differs between the two databases: the old one runs Oracle 11 and the new one runs Oracle 19, so the version difference may be the cause.

An online search for the ORA-24920 error turns up the advice to upgrade the Oracle client, which suggests that the Oracle JDBC driver may be the problem.

In Sqoop's lib directory, the Oracle JDBC driver that Sqoop uses turned out to be ojdbc6.jar, which does not match Oracle version 19.
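The check described above can be sketched in Python. This is a minimal sketch, not part of the original troubleshooting: the function name `jdbc_driver_versions` is hypothetical, and it assumes the driver jar records its version under `Implementation-Version` in `META-INF/MANIFEST.MF`, which may not hold for every jar. The lib directory path in the usage comment is a placeholder.

```python
import zipfile
from pathlib import Path


def jdbc_driver_versions(lib_dir):
    """Map each ojdbc*.jar in lib_dir to its manifest Implementation-Version.

    Hypothetical helper: the value is None when the jar has no manifest
    or the manifest has no Implementation-Version entry.
    """
    versions = {}
    for jar in sorted(Path(lib_dir).glob("ojdbc*.jar")):
        version = None
        with zipfile.ZipFile(jar) as zf:
            try:
                manifest = zf.read("META-INF/MANIFEST.MF").decode("utf-8", "replace")
            except KeyError:
                # The jar contains no manifest file at all.
                manifest = ""
        for line in manifest.splitlines():
            if line.startswith("Implementation-Version:"):
                version = line.split(":", 1)[1].strip()
                break
        versions[jar.name] = version
    return versions


# Usage (the path is a placeholder for your Sqoop lib directory):
# for name, version in jdbc_driver_versions("/path/to/sqoop/lib").items():
#     print(name, version)
```

If more than one `ojdbc*.jar` shows up in the result, that is worth noting before moving on to the fix.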

You can check the Oracle version and the corresponding Oracle JDBC driver version on this page:

https://www.oracle.com/database/technologies/faq-jdbc.html#02_03

The link to the download page is as follows:

https://www.oracle.com/database/technologies/appdev/jdbc-downloads.html

Solution:

Based on the version information, the ojdbc8 driver (ojdbc8.jar) was downloaded. After uploading it to Sqoop's lib directory, delete the original driver and re-run the import.

The original driver must be deleted or moved out of the lib directory; otherwise the import still fails. My guess is that when both versions are present, the old one may be the one that gets loaded.
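Moving the old driver aside, rather than deleting it, can be sketched as follows. This is a minimal sketch under stated assumptions: `move_old_drivers` is a hypothetical helper, the paths in the usage comment are placeholders, and the backup directory is assumed to be outside Sqoop's lib directory so the old jar is no longer picked up.

```python
import shutil
from pathlib import Path


def move_old_drivers(lib_dir, keep, backup_dir):
    """Move every ojdbc*.jar except `keep` from lib_dir into backup_dir.

    Hypothetical helper: refuses to run unless the driver to keep is
    already in lib_dir, so Sqoop is never left without an Oracle driver.
    Returns the names of the jars that were moved.
    """
    lib = Path(lib_dir)
    if not (lib / keep).is_file():
        raise FileNotFoundError(f"{keep} not found in {lib}")
    backup = Path(backup_dir)
    backup.mkdir(parents=True, exist_ok=True)
    moved = []
    for jar in sorted(lib.glob("ojdbc*.jar")):
        if jar.name != keep:
            shutil.move(str(jar), str(backup / jar.name))
            moved.append(jar.name)
    return moved


# Usage (both paths are placeholders):
# move_old_drivers("/path/to/sqoop/lib", "ojdbc8.jar", "/path/to/jdbc-backup")
```

Keeping the old jar in a backup directory makes it easy to restore if imports from the older database need it again.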