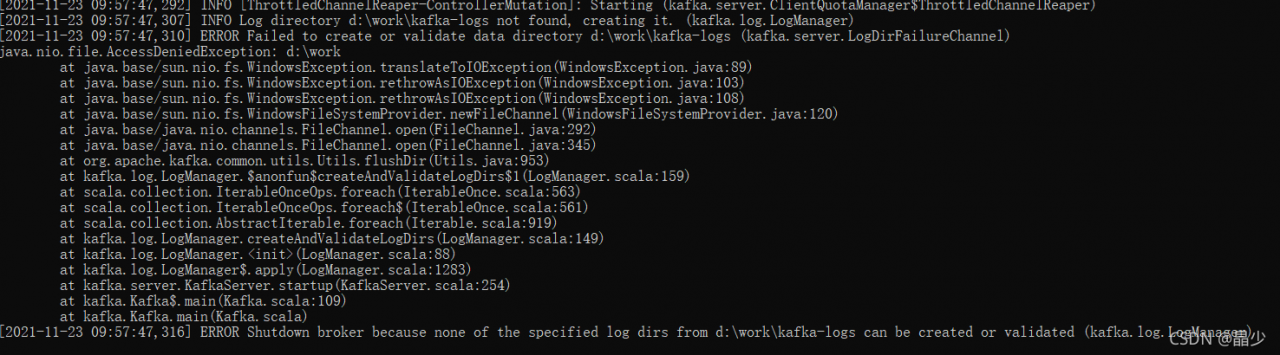

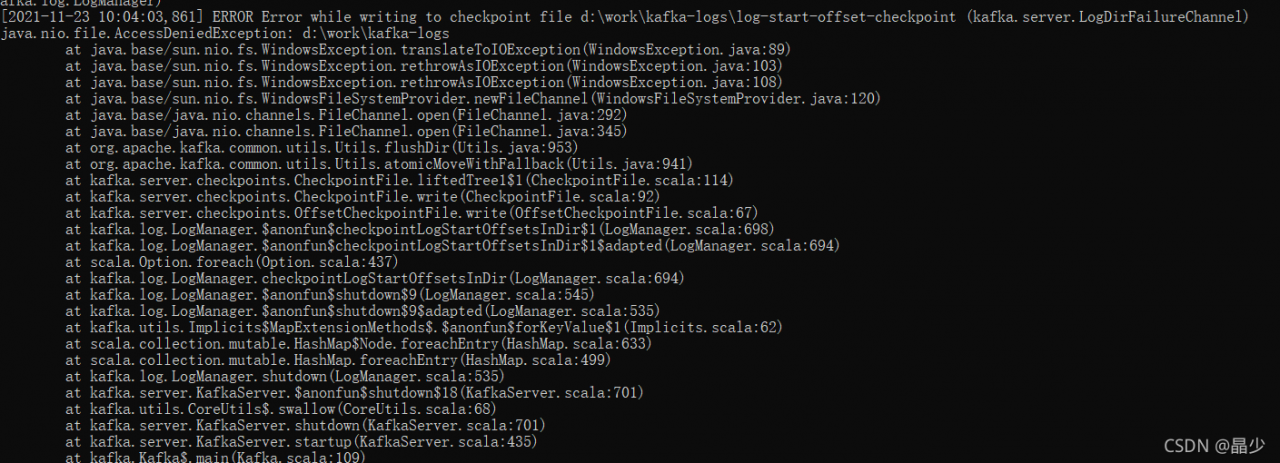

The executor registers with its local network (LAN) address, which the scheduling center cannot reach, so the connection fails.

Error message:

address: http://192.168.x.xxx:9999/ code: 500 msg: xxl-rpc remoting error(connect timed out), for url: http://192.168.x.xxx:9999/run

Solution:

1. Switch the executor's registration mode from automatic to manual.

2. Expose the executor through an intranet penetration (NAT traversal) tool.

3. Enter the address provided by the intranet penetration tool as the manually registered executor address.
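
To confirm whether an address is the cause of the `connect timed out` error, it can help to test it from the machine that runs the scheduling center. The following is a minimal sketch, not part of XXL-JOB: the function name `executor_reachable` and the 3-second timeout are assumptions chosen for illustration. It only checks that a TCP connection can be opened to the host and port in the URL.

```python
import socket
from urllib.parse import urlsplit


def executor_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds.

    Hypothetical helper for illustration; it does not call any XXL-JOB API.
    """
    parts = urlsplit(url)
    host = parts.hostname
    if host is None:
        raise ValueError(f"no host found in URL: {url!r}")
    # Fall back to the scheme's default port when the URL has none.
    port = parts.port or (443 if parts.scheme == "https" else 80)
    try:
        # A timeout or a refused connection both raise OSError subclasses.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Running `executor_reachable(...)` with the LAN address from the error message should return `False` from outside that network, while the address provided by the intranet penetration tool should return `True` once the tunnel is up.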