Background

No. 3.8 on the business development side responded that sparkstreaming lost, scanned the Doris table (query SQL) and reported an error

Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Task 7 in stage 0.0 failed 4 times, most recent failure: Lost task 7.3 in stage 0.0 (TID 20, hd012.corp.yodao.com, executor 7): com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: errCode = 2,

detailMessage = there is no scanNode Backend. [126101: in black list(Ocurrs time out with specfied time 10000 MICROSECONDS), 14587381: in black list(Ocurrs time out with specfied time 10000 MICROSECONDS), 213814: in black list(Ocurrs time out with specfied time 10000 MICROSECONDS)]

Error:

detailMessage = there is no scanNode Backend. [126101: in black list(Ocurrs time out with specfied time 10000 MICROSECONDS), 14587381: in black list(Ocurrs time out with specfied time 10000 MICROSECONDS), 213814: in black list(Ocurrs time out with specfied time 10000 MICROSECONDS)]

Source Code Analysis

//Blacklisted objects

private static Map<Long, Pair<Integer, String>> blacklistBackends = Maps.newConcurrentMap();

//The task execution process requires getHost, and the return value is the TNetworkAddress object

public static TNetworkAddress getHost(long backendId,

List<TScanRangeLocation> locations,

ImmutableMap<Long, Backend> backends,

Reference<Long> backendIdRef)

//Get the backend object by backendId in the getHost() method

Backend backend = backends.get(backendId);

// determine if the backend object is available

//return the TNetworkAddress object if it is available

//If it's not available, it iterate through the locations object to find a candidate backend object

//If the backend just unavailable is the same as the candidate backend object id, then continue

//If not, determine whether it is available, available then return to change the candidate be's TNetworkAddress

//If not available, continue to change the next candidate be

if (isAvailable(backend)) {

backendIdRef.setRef(backendId);

return new TNetworkAddress(backend.getHost(), backend.getBePort());

} else {

for (TScanRangeLocation location : locations) {

if (location.backend_id == backendId) {

continue;

}

// choose the first alive backend(in analysis stage, the locations are random)

Backend candidateBackend = backends.get(location.backend_id);

if (isAvailable(candidateBackend)) {

backendIdRef.setRef(location.backend_id);

return new TNetworkAddress(candidateBackend.getHost(), candidateBackend.getBePort());

}

}

}

public static boolean isAvailable(Backend backend) {

return (backend != null && backend.isAlive() && !blacklistBackends.containsKey(backend.getId()));

}

//If a be is not returned until the end, the cause of the exception is returned

// no backend returned

throw new UserException("there is no scanNode Backend. " +

getBackendErrorMsg(locations.stream().map(l -> l.backend_id).collect(Collectors.toList()),

backends, locations.size()));

// get the reason why backends can not be chosen.

private static String getBackendErrorMsg(List<Long> backendIds, ImmutableMap<Long, Backend> backends, int limit) {

List<String> res = Lists.newArrayList();

for (int i = 0; i < backendIds.size() && i < limit; i++) {

long beId = backendIds.get(i);

Backend be = backends.get(beId);

if (be == null) {

res.add(beId + ": not exist");

} else if (!be.isAlive()) {

res.add(beId + ": not alive");

} else if (blacklistBackends.containsKey(beId)) {

Pair<Integer, String> pair = blacklistBackends.get(beId);

res.add(beId + ": in black list(" + (pair == null ?"unknown" : pair.second) + ")");

} else {

res.add(beId + ": unknown");

}

}

return res.toString();

}

//blacklistBackends object's put

public static void addToBlacklist(Long backendID, String reason) {

if (backendID == null) {

return;

}

blacklistBackends.put(backendID, Pair.create(FeConstants.heartbeat_interval_second + 1, reason));

LOG.warn("add backend {} to black list. reason: {}", backendID, reason);

}

public static void addToBlacklist(Long backendID, String reason) {

if (backendID == null) {

return;

}

blacklistBackends.put(backendID, Pair.create(FeConstants.heartbeat_interval_second + 1, reason));

LOG.warn("add backend {} to black list. reason: {}", backendID, reason);

}

Cause analysis

According to the task error

detailmessage = there is no scannode backend. [126101: in black list (ocurrs time out with specified time 10000 microseconds), 14587381: in black list (ocurrs time out with specified time 10000 microseconds), 213814: in black list (ocurrs time out with specified time 10000 microseconds)]

analysis, be ID is 126101 The reason why nodes 14587381 and 213814 are in the blacklist may be that ocurrs time out with specified time 10000 microseconds

then it is likely that the three bes on March 8 hung up at that time

according to point 7 of the previous experience of community students

it can be inferred that the be hung up because of improper tasks or configurations

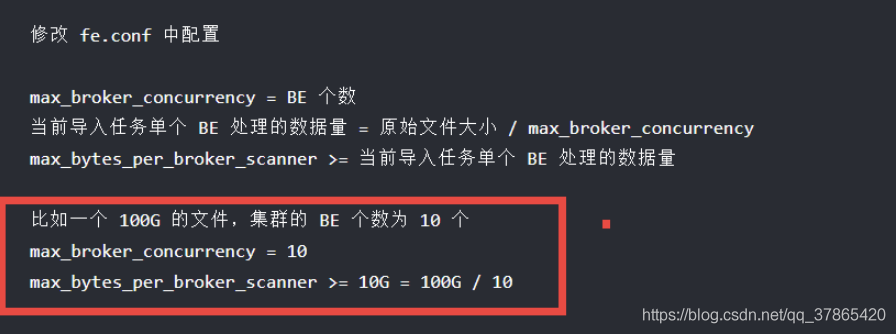

Broker or other tasks overwhelm the be service_ broker_ concurrencymax_ bytes_ per_ broker_ scanner

The specific error was reported because the problem occurred on March 8. Today, more than 20 days have passed. During this period, it has experienced Doris cluster expansion, node rearrangement and other operation and maintenance work. Logs and many backups cannot be recovered. It can only be inferred from ocurrs time out with specified time 10000 microseconds that the be may have hung up at that time, Then our services will be mounted on the supervisor, so they will start automatically (the node service is not available before) ‘s Prometheus rules & amp; Alertmanager alarm)

if the same problem occurs again in the future, continue to improve this article

Solutions

Prometheus rules & amp; amp; amp; amp; nbsp; with be node service unavailable ; Alertmanager alarm

adjust the configuration in fe.conf

configure the spark task and broker task during execution

there is no substantive solution for the time being. If the problem reappears, continue to track and supplement solutions