Preface

This article belongs to the column “Big Data Abnormal Problems Summary” and is the author’s original work. Please credit the source when quoting, and point out any shortcomings or errors in the comments section. Thank you!

For the table of contents and references of this column, please refer to the Big Data Abnormal Problems Summary.

Problem

When installing and deploying Hive 3.1.2, the following error occurred at startup:

[root@node2 apache-hive-3.1.2-bin]# ./bin/hive

which: no hbase in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/opt/java/bin:/opt/maven/bin:/opt/bigdata/hadoop-3.2.2/bin:/opt/bigdata/hadoop-3.2.2/sbin:/opt/bigdata/spark-3.2.0/bin:/opt/bigdata/spark-3.2.0/sbin:/opt/java/bin:/opt/maven/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/bigdata/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1357)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1338)

at org.apache.hadoop.mapred.JobConf.setJar(JobConf.java:536)

at org.apache.hadoop.mapred.JobConf.setJarByClass(JobConf.java:554)

at org.apache.hadoop.mapred.JobConf.<init>(JobConf.java:448)

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5141)

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5099)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:97)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

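Solution

The error log points to the guava package: the NoSuchMethodError on com.google.common.base.Preconditions.checkArgument is thrown from Hadoop's Configuration.set, which means the Guava version loaded at runtime does not provide the method overload that Hadoop expects. Either the jar is missing or there are conflicting versions on the classpath. Let’s first check which guava jars exist:

[root@node2 apache-hive-3.1.2-bin]# find / -name "*guava*"

find: ‘/proc/9666’: No such file or directory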

/opt/maven/lib/guava.license

/opt/maven/lib/guava-25.1-android.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/common/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/hdfs/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/httpfs/tomcat/webapps/webhdfs/WEB-INF/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/kms/tomcat/webapps/kms/WEB-INF/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/yarn/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-2.7.3/share/hadoop/tools/lib/guava-11.0.2.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/hdfs/lib/guava-27.0-jre.jar

/opt/bigdata/hadoop-3.2.2/share/hadoop/hdfs/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar

/opt/bigdata/spark-3.2.0/jars/guava-14.0.1.jar

/opt/bigdata/apache-hive-3.1.2-bin/lib/guava-19.0.jar

/opt/bigdata/apache-hive-3.1.2-bin/lib/jersey-guava-2.25.1.jar
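The search above can be narrowed so that it returns only the Guava jars themselves and skips the noise from /proc. The following is a minimal sketch, not part of the original procedure; the function name `find_guava_jars` is hypothetical.

```shell
# Hypothetical helper: list Guava jars under a given root directory.
find_guava_jars() {
  # Quote the pattern so the shell hands it to find unexpanded.
  # 'guava-*.jar' skips files such as jersey-guava-*.jar and guava.license.
  # Errors from unreadable or vanished paths (e.g. /proc entries) are discarded.
  find "$1" -type f -name 'guava-*.jar' 2>/dev/null | sort
}

# Example call (path taken from the article):
#   find_guava_jars /opt/bigdata
```

Restricting the search to the installation root instead of / is a design choice that keeps the output short; searching / works the same way but takes longer.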

Both Hive’s lib directory and Hadoop’s common lib directory contain a guava jar, but the versions differ: guava-19.0.jar in Hive and guava-27.0-jre.jar in Hadoop. We replace guava-19.0.jar in Hive’s lib directory with Hadoop’s guava-27.0-jre.jar:
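The version comparison can also be done without reading the directory listings by eye. This is a rough sketch under the assumption that the jars follow the guava-<version>.jar naming seen above; `guava_version` is a hypothetical helper name.

```shell
# Hypothetical helper: print the version of each guava-<version>.jar in a directory.
guava_version() (
  for jar in "$1"/guava-*.jar; do
    [ -e "$jar" ] || continue   # glob did not match anything
    name=${jar##*/}             # strip the directory part
    name=${name#guava-}         # strip the "guava-" prefix
    echo "${name%.jar}"         # strip the ".jar" suffix
  done
)

# Example comparison (paths taken from the article):
#   hive_v=$(guava_version /opt/bigdata/apache-hive-3.1.2-bin/lib)
#   hadoop_v=$(guava_version /opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib)
#   [ "$hive_v" = "$hadoop_v" ] || echo "Guava mismatch: $hive_v vs $hadoop_v"
```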

[root@node2 apache-hive-3.1.2-bin]# cd lib

[root@node2 lib]# ll *guava*

-rwxr-xr-x. 1 hadoop hadoop 2308517 Sep 27 2018 guava-19.0.jar

-rwxr-xr-x. 1 hadoop hadoop 971309 May 21 2019 jersey-guava-2.25.1.jar

[root@node2 lib]# cd /opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/

[root@node2 lib]# ll *guava*

-rwxr-xr-x. 1 hadoop hadoop 2747878 Sep 26 00:18 guava-27.0-jre.jar

-rwxr-xr-x. 1 hadoop hadoop 2199 Sep 26 00:18 listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar

[root@node2 lib]# cd /opt/bigdata/apache-hive-3.1.2-bin/lib/

[root@node2 lib]# mv guava-19.0.jar guava-19.0.jar.bak

[root@node2 lib]# cp /opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/guava-27.0-jre.jar /opt/bigdata/apache-hive-3.1.2-bin/lib/

[root@node2 lib]# ll *guava*

-rwxr-xr-x. 1 hadoop hadoop 2308517 Sep 27 2018 guava-19.0.jar.bak

-rwxr-xr-x. 1 root root 2747878 Jun 19 13:23 guava-27.0-jre.jar

-rwxr-xr-x. 1 hadoop hadoop 971309 May 21 2019 jersey-guava-2.25.1.jar

[root@node2 lib]# chmod 755 guava-27.0-jre.jar

[root@node2 lib]# chown hadoop:hadoop guava-27.0-jre.jar
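The manual steps above can be collected into one function, which is convenient when several nodes need the same fix. This is only a sketch of the same backup-and-copy idea; the name `swap_guava` and its two-argument interface are assumptions, and the ownership fix (chown) is left out because it depends on the local user setup.

```shell
# Hypothetical helper: back up Hive's guava jar(s) and copy in Hadoop's.
# Usage: swap_guava <hive_lib_dir> <hadoop_lib_dir>
swap_guava() (
  hive_lib=$1
  hadoop_lib=$2

  # Pick the first guava-*.jar found in the Hadoop lib directory.
  new=
  for jar in "$hadoop_lib"/guava-*.jar; do
    if [ -e "$jar" ]; then new=$jar; break; fi
  done
  if [ -z "$new" ]; then
    echo "no guava-*.jar found in $hadoop_lib" >&2
    return 1
  fi

  # Rename existing Hive guava jars to *.bak so they leave the classpath
  # but can still be restored.
  for old in "$hive_lib"/guava-*.jar; do
    [ -e "$old" ] || continue
    mv "$old" "$old.bak"
  done

  cp "$new" "$hive_lib"/
)

# Example call (paths taken from the article):
#   swap_guava /opt/bigdata/apache-hive-3.1.2-bin/lib \
#              /opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib
```

Renaming to .bak rather than deleting keeps a way back if another component turns out to depend on the older jar.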

Next, we switch to the hadoop user to start Hive (this ensures that Hive runs as hadoop, the superuser in this deployment):

[root@node2 lib]# su - hadoop

Last login: Thu Jun 17 22:14:01 CST 2021 on pts/0

[hadoop@node2 ~]$ cd /opt/bigdata/apache-hive-3.1.2-bin/

[hadoop@node2 apache-hive-3.1.2-bin]$ ./bin/hive

which: no hbase in (/home/hadoop/.local/bin:/home/hadoop/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/java/bin:/opt/maven/bin:/opt/bigdata/hadoop-3.2.2/bin:/opt/bigdata/hadoop-3.2.2/sbin:/opt/bigdata/spark-3.2.0/bin:/opt/bigdata/spark-3.2.0/sbin)

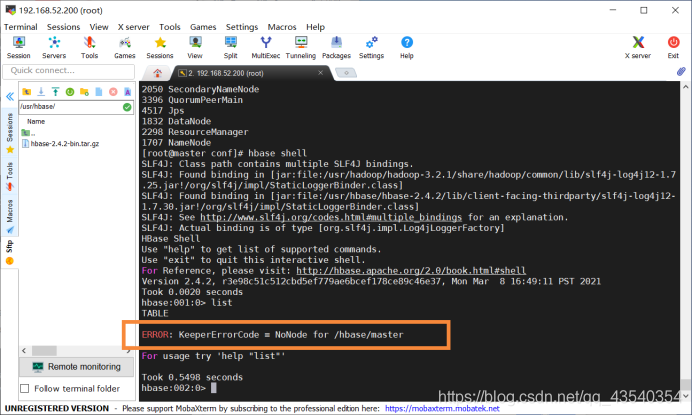

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/bigdata/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/bigdata/hadoop-3.2.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 901d74e6-fa5d-45b5-b949-bebdacb582ed

Logging initialized using configuration in jar:file:/opt/bigdata/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

Hive now starts normally and the problem is solved.
