1. Spark SQL configuration

Copy the $HIVE_HOME/conf/hive-site.xml configuration file to the $SPARK_HOME/conf directory.

Copy the $HADOOP_HOME/etc/hadoop/hdfs-site.xml configuration file to the $SPARK_HOME/conf directory.
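The two copy steps above can be sketched as a small shell function. This is only an illustration: `copy_spark_conf` is a hypothetical helper name, and the fallback paths are assumptions (`/usr/local/spark` and `/usr/local/hive` match paths used later in this post, while `/usr/local/hadoop` is a guess). Adjust them to your installation.

```shell
# Copy the Hive and HDFS client configs into Spark's conf directory.
# Assumption: HIVE_HOME, HADOOP_HOME and SPARK_HOME are exported;
# the fallback paths below are placeholders, not required locations.
copy_spark_conf() {
  local hive_home="${HIVE_HOME:-/usr/local/hive}"
  local hadoop_home="${HADOOP_HOME:-/usr/local/hadoop}"
  local spark_home="${SPARK_HOME:-/usr/local/spark}"

  # Stop at the first failed copy so a missing file is not silently ignored.
  cp "$hive_home/conf/hive-site.xml" "$spark_home/conf/" &&
    cp "$hadoop_home/etc/hadoop/hdfs-site.xml" "$spark_home/conf/"
}
```

Calling `copy_spark_conf` once after installing Spark should be enough; rerun it whenever hive-site.xml or hdfs-site.xml changes.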

2. Run spark-sql

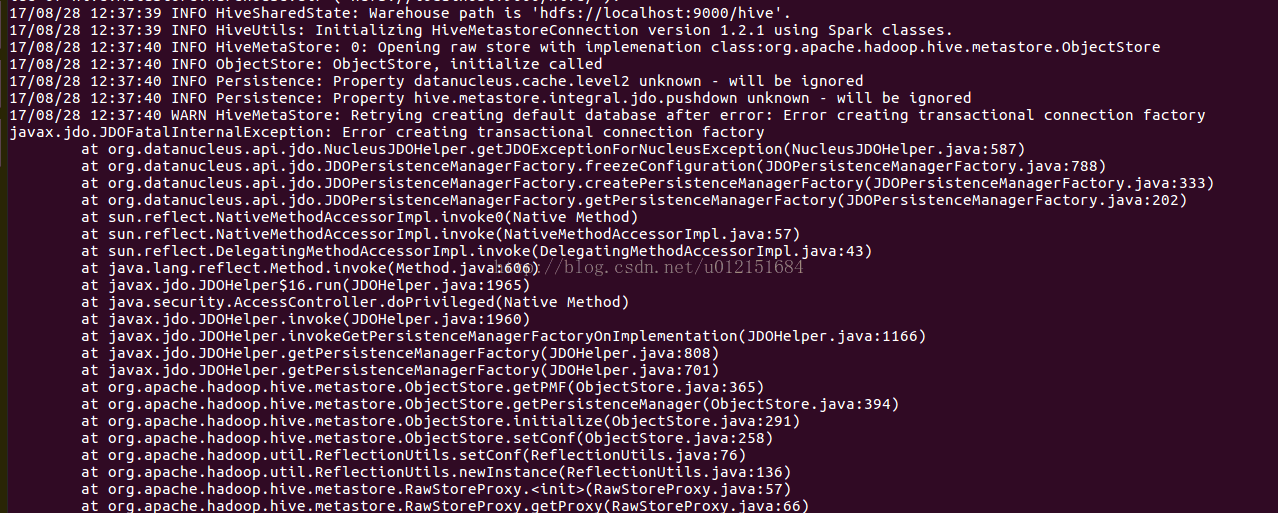

Running `./bin/spark-sql` from /usr/local/spark fails with the error "Error creating transactional connection factory".

Hive uses MySQL as its metastore database, so Spark needs the MySQL JDBC driver jar on its classpath. The detailed error log confirms that the MySQL connector jar is missing, so the path to the MySQL jar must be specified when starting spark-sql, as follows:

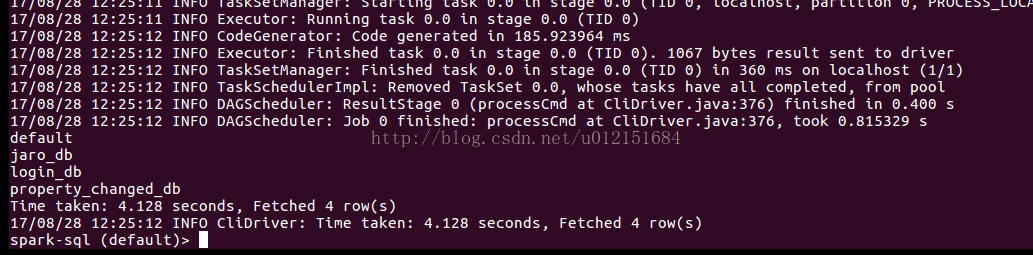

With the jar specified, `./bin/spark-sql --driver-class-path /usr/local/hive/lib/mysql-connector-java-5.1.25.jar` runs normally.
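To avoid hard-coding the connector version, the launch step can be wrapped as in the sketch below. The helper names `find_mysql_connector` and `start_spark_sql` are hypothetical, and the default directories are assumptions taken from the paths in this post. `--driver-class-path` is the same option used in the command above.

```shell
# Print the first MySQL connector jar found in the given directory.
# Assumption: the jar follows the mysql-connector-java-<version>.jar naming.
find_mysql_connector() {
  local lib_dir="${1:-/usr/local/hive/lib}"
  local jar
  for jar in "$lib_dir"/mysql-connector-java-*.jar; do
    if [ -f "$jar" ]; then
      printf '%s\n' "$jar"
      return 0
    fi
  done
  echo "no mysql-connector-java jar found in $lib_dir" >&2
  return 1
}

# Start spark-sql with the connector jar on the driver classpath.
start_spark_sql() {
  local jar
  jar="$(find_mysql_connector "${1:-/usr/local/hive/lib}")" || return 1
  "${SPARK_HOME:-/usr/local/spark}/bin/spark-sql" --driver-class-path "$jar"
}
```

Run `start_spark_sql` with no arguments to use the default Hive lib directory, or pass another directory that contains the connector jar.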

