Some people on the Internet say:

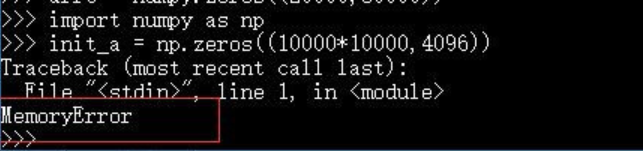

The matrices NumPy creates in Python are limited in size and cannot have tens of thousands of rows or columns, as the error shows.

My code was in a similar situation: I was loading 40,000 images at once, which was probably too many. After I reduced the number, the error went away.
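A quick back-of-the-envelope estimate shows why sizes like these hit the limit. The sketch below computes how much memory a dense NumPy array would need without actually allocating it. It is only an illustration: `estimated_bytes` is a hypothetical helper, and the 224 × 224 RGB image size is an assumption, since the post does not say how large the pictures were.

```python
import math

import numpy as np

GIB = 1024 ** 3


def estimated_bytes(shape, dtype=np.float64):
    """Bytes a dense NumPy array of this shape and dtype would need (nothing is allocated)."""
    return math.prod(shape) * np.dtype(dtype).itemsize


# A square matrix with tens of thousands of rows and columns.
matrix = estimated_bytes((40_000, 40_000))
# 40,000 images, assuming (hypothetically) 224 x 224 pixels with 3 color channels.
images_u8 = estimated_bytes((40_000, 224, 224, 3), np.uint8)
images_f64 = estimated_bytes((40_000, 224, 224, 3), np.float64)

for label, size in [("40,000 x 40,000 float64 matrix", matrix),
                    ("40,000 images as uint8", images_u8),
                    ("40,000 images as float64", images_f64)]:
    print(f"{label}: {size / GIB:.1f} GiB")
```

Under these assumptions the images need about 5.6 GiB as raw uint8 pixels and about 44.9 GiB once converted to float64, and the 40,000 × 40,000 float64 matrix alone needs about 11.9 GiB. What matters is the total number of elements times the bytes per element, not the row or column count by itself.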

Advice from others:

When processing big data in Python on a machine with 16 GB of RAM, I got a MemoryError before even a quarter of the memory was in use. I later learned that 32-bit Python raises this error once it uses more than about 2 GB of memory, with no other message explaining why. So I decided to switch to 64-bit Python.
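Before digging further, it helps to confirm which build you are actually running. This is a small standard-library sketch, not from the original post: `struct.calcsize("P")` returns the pointer size of the interpreter, and `python_bits` is a hypothetical helper name.

```python
import platform
import struct
import sys


def python_bits():
    """Return 32 or 64: the pointer width of the running interpreter."""
    return struct.calcsize("P") * 8


bits = python_bits()
print(f"Python {platform.python_version()} ({platform.system()}): {bits}-bit build")
print("sys.maxsize > 2**32:", sys.maxsize > 2**32)
if bits == 32:
    print("32-bit interpreter: the process can only use a few GB of address space "
          "(about 2 GB by default on Windows), regardless of installed RAM.")
```

If this reports a 32-bit build on a 64-bit machine, installing more RAM will not help; only a 64-bit interpreter (with matching 64-bit NumPy/SciPy packages) lifts the limit.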

I had originally installed 32-bit Python because the official NumPy and SciPy builds only supported 32-bit. Later I found unofficial builds that support 64-bit: http://www.lfd.uci.edu/~gohlke/pythonlibs/#numpy

Installing the wheel file then failed with: `Fatal error in launcher: Unable to create process using '"C:\Program Files (x86)\Python33\python.exe" "C:\Program Files (x86)\Python33\pip.exe"'`

I found a solution at http://stackoverflow.com/questions/24627525/fatal-error-in-launcher-unable-to-create-process-using-c-program-files-x86: run pip as a module through the Python interpreter, which bypasses the broken pip.exe launcher:

`python -m pip install XXX` (where XXX is the wheel file)
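If you install packages from a script, the same workaround can be applied by launching pip through `sys.executable`, which is the interpreter currently running. This is a small sketch, not part of the original solution; `run_pip` and its `dry_run` flag are hypothetical names, and `XXX.whl` is a placeholder just like `XXX` above.

```python
import subprocess
import sys


def run_pip(*args, dry_run=True):
    """Run pip through the current interpreter (python -m pip ...).

    This avoids the pip.exe launcher entirely. With dry_run=True the
    command is only returned, not executed.
    """
    cmd = [sys.executable, "-m", "pip", *args]
    if not dry_run:
        subprocess.check_call(cmd)
    return cmd


# "XXX.whl" is a placeholder for the wheel file you downloaded.
print(run_pip("install", "XXX.whl"))
# run_pip("--version", dry_run=False)  # actually invoke pip
```

Using `sys.executable` also guarantees the package lands in the same interpreter (here, the new 64-bit one) that will import it.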

Python is prone to MemoryError when handling large datasets, that is, when it runs out of memory. A few ways to reduce memory use:

1. Native Python data types take up a lot of space and offer few choices: a Python float object, for example, typically takes 24 bytes. Often you don't need that much size or precision, and then you can use NumPy's float32, float16, and similar dtypes instead. In short, choose the smallest type that meets your needs; this can cut memory use several times over.

2. Python's garbage collection is relatively lazy. Variables inside a for loop are sometimes not freed after their last use, and new space is allocated when they are reinitialized in the next iteration, so the old and new data can occupy memory at the same time.

3. For sparse data, such as a training set with many one-hot features, store the data in a sparse format instead of a dense one. The `scipy.sparse` module provides several ready-to-use data structures for sparse storage. Note, however, that a sparse structure needs two to three times as much space per stored element as dense storage. So if only half of the data or less is zero, the sparse array actually takes up more space. Sparse storage only pays off when most of the data is zero.

4. Fundamentally, check whether the way you organize your data is the problem: for example, can you do the one-hot encoding per batch instead of for the whole dataset? In other words, don't load everything into memory at once, whether you need it or not.
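The four tips above can be sketched in one short script. This is a minimal illustration under stated assumptions, not the author's code: the array sizes and the helpers `process_in_chunks`, `dense_bytes`, `csr_bytes`, and `one_hot_batches` are hypothetical, `default_rng` needs NumPy 1.17 or later, and the CSR size formula assumes SciPy's data/indices/indptr layout while ignoring small per-object overhead. It uses 64-bit indices by default, which matches the "two or three times" figure above; SciPy often uses 32-bit indices when they suffice, which moves the break-even point to roughly two thirds nonzero.

```python
import gc
import sys

import numpy as np

# Tip 1: pick the smallest dtype that is precise enough.
N = 1_000_000
for dtype in (np.float64, np.float32, np.float16):
    arr = np.zeros(N, dtype=dtype)
    print(f"{np.dtype(dtype).name:>8}: {arr.nbytes:,} bytes for {N:,} values")
# A single boxed Python float, for comparison (24 bytes on 64-bit CPython).
print("one Python float object:", sys.getsizeof(1.0), "bytes")


# Tip 2: release large temporaries explicitly inside loops.
def process_in_chunks(n_chunks, chunk_rows, n_cols, seed=0):
    rng = np.random.default_rng(seed)
    sums = []
    for _ in range(n_chunks):
        chunk = rng.random((chunk_rows, n_cols), dtype=np.float32)
        sums.append(float(chunk.sum()))
        del chunk     # drop the only reference before the next chunk is allocated
        gc.collect()  # also reclaim reference cycles (a plain array is freed by del)
    return sums


# Tip 3: rough CSR vs dense size, to see when sparse storage pays off.
def dense_bytes(rows, cols, value_bytes=8):
    return rows * cols * value_bytes


def csr_bytes(rows, nnz, value_bytes=8, index_bytes=8):
    # CSR keeps data (nnz values), indices (nnz column ids) and indptr (rows + 1 offsets).
    return nnz * (value_bytes + index_bytes) + (rows + 1) * index_bytes


for density in (0.01, 0.5, 0.7):
    nnz = round(10_000 * 10_000 * density)
    print(f"density {density:.0%}: dense {dense_bytes(10_000, 10_000):,} B, "
          f"CSR {csr_bytes(10_000, nnz):,} B")


# Tip 4: build one-hot encodings per batch instead of for the whole dataset.
def one_hot_batches(labels, n_classes, batch_size):
    labels = np.asarray(labels, dtype=np.intp)
    for start in range(0, len(labels), batch_size):
        batch = labels[start:start + batch_size]
        onehot = np.zeros((len(batch), n_classes), dtype=np.float32)
        onehot[np.arange(len(batch)), batch] = 1.0
        yield onehot
```

Because `one_hot_batches` is a generator, only one batch of one-hot rows exists at a time; a training loop can consume it with `for x in one_hot_batches(labels, n_classes, 256): ...`.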

---

Original post: https://blog.csdn.net/yimingsilence/article/details/79717768

References:

- https://jingyan.baidu.com/article/a65957f434970a24e67f9be6.html
- https://zhidao.baidu.com/question/2058013252876894707.html

Further reading for those interested: https://blog.csdn.net/weixin_39750084/article/details/81501395
