HDFS connection failed:

Common causes of errors may be:

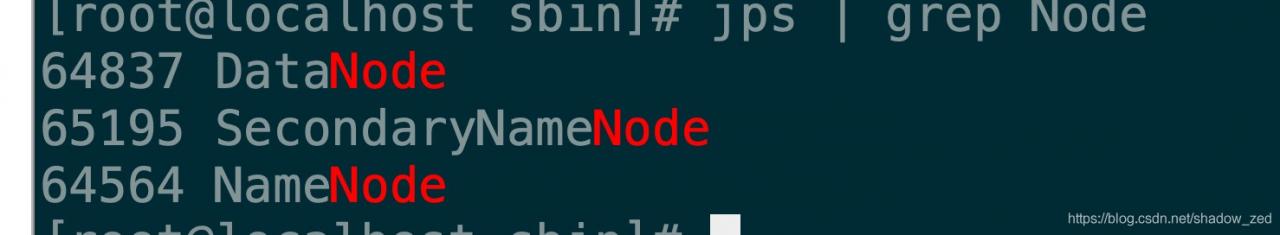

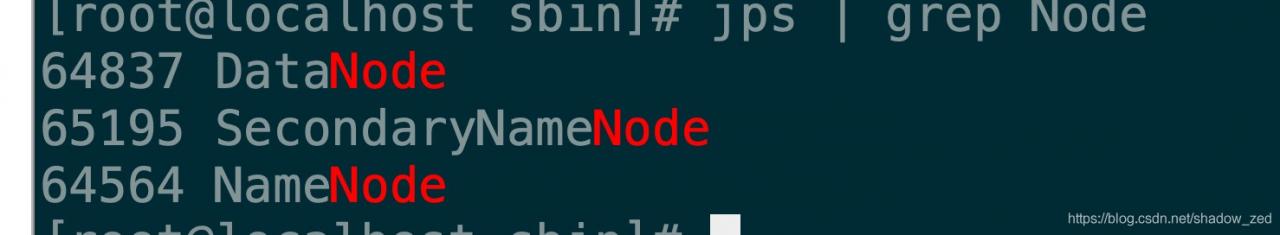

1. Hadoop is not running, or only some of its services have started. A normal startup brings up a fixed set of services; if any of them is missing, check that service's log.

2. Hadoop is installed in pseudo-distributed mode and the configuration files use localhost or 127.0.0.1. In this case, replace them with the machine's real IP address in core-site.xml, mapred-site.xml, slaves, and masters. After the IP is changed, the DataNode may fail to start. If that happens:

Set dfs.data.dir in the hdfs-site.xml configuration file (the property is named dfs.datanode.data.dir in Hadoop 2.x and later):

    <property>
      <name>dfs.data.dir</name>
      <value>/data/hdfs/data</value>
    </property>

Then delete all files in that data folder and restart Hadoop.
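Both causes above can be checked quickly before digging through logs. The following is a minimal Python sketch, not part of the original fix: `port_is_open` tests whether anything accepts TCP connections on a given host and port (cause 1), and `find_loopback_properties` lists configuration properties whose value still points at localhost or 127.0.0.1 (cause 2). The function names, the sample XML, and the `fs.default.name` value in it are illustrative assumptions.

```python
import socket
import xml.etree.ElementTree as ET

# Values that indicate a loopback address in a Hadoop config file.
LOOPBACK_MARKERS = ("localhost", "127.0.0.1")

# Hypothetical core-site.xml content, used only for illustration.
SAMPLE_CORE_SITE = """
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
"""


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused", timeouts, and unreachable hosts.
        return False


def find_loopback_properties(xml_text):
    """Return (name, value) pairs whose value mentions a loopback address."""
    root = ET.fromstring(xml_text)
    hits = []
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if any(marker in value for marker in LOOPBACK_MARKERS):
            hits.append((name, value))
    return hits
```

To use it, call `port_is_open` with your NameNode's real IP and the port from your own configuration, and pass the contents of each XML configuration file to `find_loopback_properties`. The slaves and masters files are plain text, so they need to be inspected separately.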
