Problem:

Error response from daemon: OCI runtime create failed: container with id exists:xxzxxxxxxxx

Solution:

rm -rf /var/run/docker/runtime-runc/moby/xxzxxxxxxxx

Load “…\Obj\Laliji.axf”

Set JLink Project File to “F:\01MCU\STM32F429\TestProgram\Project\JLinkSettings.ini”

JLink Info: Device “STM32F429IG” selected.

JLink info:

DLL: V4.98e, compiled May 5 2015 11:00:52

Firmware: J-Link V10 compiled Oct 15 2018 16:40:35

Hardware: V10.10

S/N : 260106173

Feature(s) : FlashBP, GDB, FlashDL, JFlash, RDI

JLink Info: Found SWD-DP with ID 0x2BA01477

JLink Info: Found SWD-DP with ID 0x2BA01477

JLink Info: Found Cortex-M4 r0p1, Little endian.

JLink Info: FPUnit: 6 code (BP) slots and 2 literal slots

JLink Info: CoreSight components:

JLink Info: ROMTbl 0 @ E00FF000

JLink Info: ROMTbl 0 [0]: FFF0F000, CID: B105E00D, PID: 000BB00C SCS

JLink Info: ROMTbl 0 [1]: FFF02000, CID: B105E00D, PID: 003BB002 DWT

JLink Info: ROMTbl 0 [2]: FFF03000, CID: B105E00D, PID: 002BB003 FPB

JLink Info: ROMTbl 0 [3]: FFF01000, CID: B105E00D, PID: 003BB001 ITM

JLink Info: ROMTbl 0 [4]: FFF41000, CID: B105900D, PID: 000BB9A1 TPIU

JLink Info: ROMTbl 0 [5]: FFF42000, CID: B105900D, PID: 000BB925 ETM

ROMTableAddr = 0xE00FF003

Target info:

Device: STM32F429IG

VTarget = 3.270V

State of Pins: TCK: 0, TDI: 1, TDO: 1, TMS: 1, TRES: 1, TRST: 1

Hardware-Breakpoints: 6

Software-Breakpoints: 8192

Watchpoints: 4

JTAG speed: 5000 kHz

No Algorithm found for: 08000000H - 08001073H

Erase skipped!

Error: Flash Download failed - “Cortex-M4”

Flash Load finished at 14:46:07

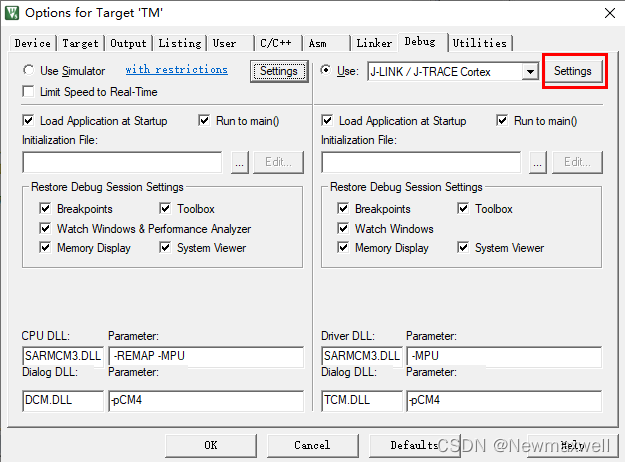

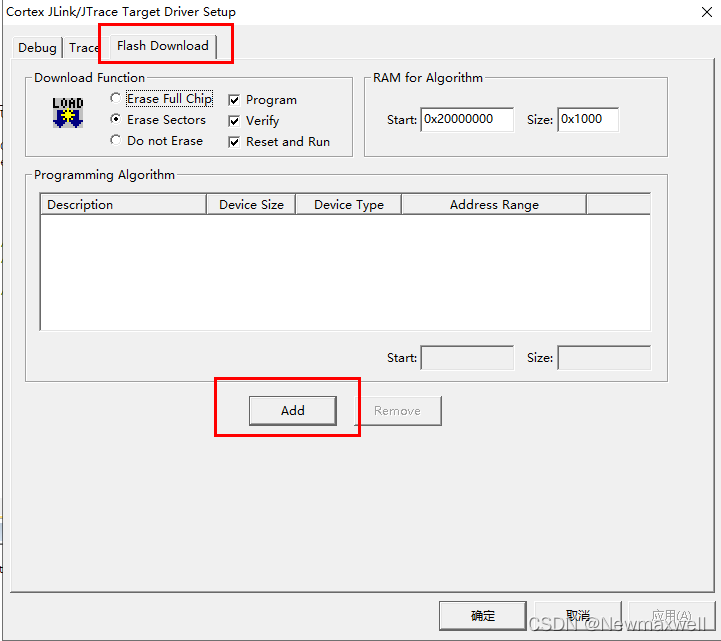

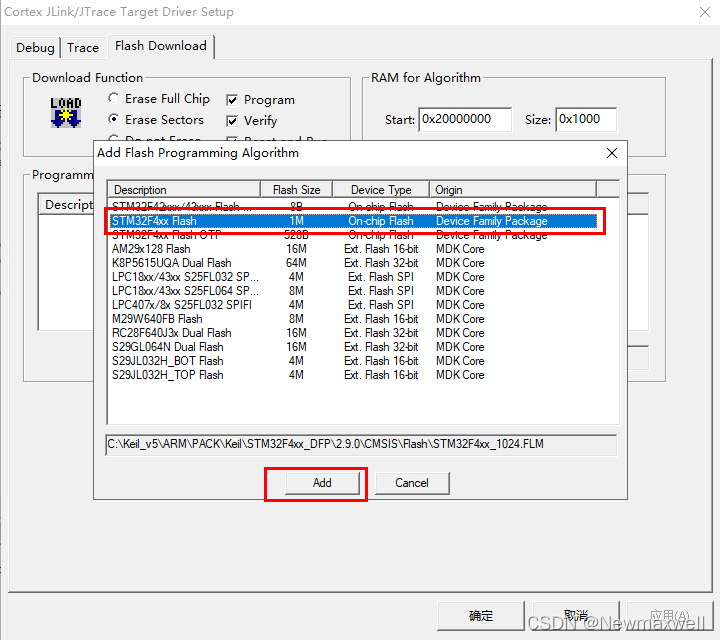

Solution:

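1. Click the magic wand icon (Options for Target, Alt + F7) in the Keil interface.

2. Click Settings.

3. On the Flash Download tab, click Add.

4. Select the flash programming algorithm that matches your chip and click Add.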

When developing Android projects in Android Studio, the error “CreateProcess error=206” tended to appear on every run. The console message says that the file name or extension is too long. I was using OpenJDK at the time. I tried many suggested fixes without success, and for a long time the only workaround was to restart Android Studio or forcibly end the JDK process in the task manager. In the end, switching from OpenJDK to Oracle JDK solved the problem.

First, the error information is as follows:

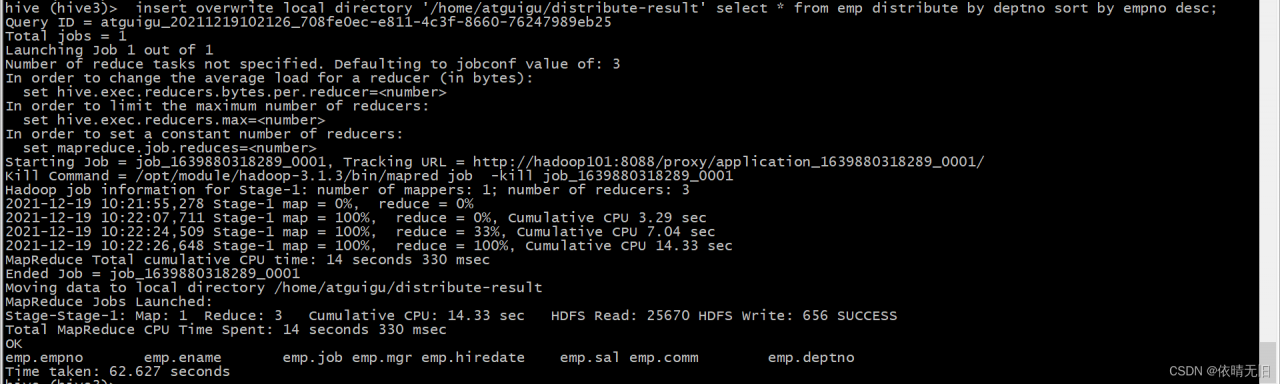

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

java.io.IOException: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request, requested resource type=[memory-mb] < 0 or greater than maximum allowed allocation. Requested resource=<memory:1536, vCores:1>, maximum allowed allocation=<memory:256, vCores:4>, please note that maximum allowed allocation is calculated by scheduler based on maximum resource of registered NodeManagers, which might be less than configured maximum allocation=<memory:256, vCores:4>

The error message shows the cause: the job requested 1536 MB of memory, but the maximum allocation YARN allows is only 256 MB. To fix this, adjust the memory settings in yarn-site.xml:

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2548</value>

<description>Available memory per node, in MB</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>128</value>

<description>Minimum memory that can be requested for a single task, default 1024 MB</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

<description>Maximum memory that can be requested for a single task, default 8192 MB</description>

</property>
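As a rough sanity check, the settings above can be compared against the 1536 MB request from the error message. The sketch below is only an illustration: the embedded XML fragment, the function names, and the rule "the effective ceiling is the smaller of the scheduler maximum and the node memory" are assumptions drawn from the wording of the error message, not from YARN's source.

```python
import xml.etree.ElementTree as ET

# Hypothetical yarn-site.xml fragment using the values from this article.
YARN_SITE = """
<configuration>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>2548</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>128</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>2048</value>
  </property>
</configuration>
"""


def load_properties(xml_text):
    """Return the <name>/<value> pairs of a Hadoop-style config as a dict."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def request_fits(props, requested_mb):
    """Check a container request against the scheduler maximum and node memory."""
    max_alloc = int(props["yarn.scheduler.maximum-allocation-mb"])
    node_mem = int(props["yarn.nodemanager.resource.memory-mb"])
    # Assumption: the ceiling cannot exceed what a single NodeManager offers.
    return requested_mb <= min(max_alloc, node_mem)


props = load_properties(YARN_SITE)

# The failing situation from the error message: only 256 MB allowed.
old_props = dict(props)
old_props["yarn.scheduler.maximum-allocation-mb"] = "256"
old_props["yarn.nodemanager.resource.memory-mb"] = "256"

print(request_fits(old_props, 1536))  # request rejected with the old limits
print(request_fits(props, 1536))      # request accepted with the new limits
```

With the new values, the 1536 MB request fits under both the 2048 MB scheduler maximum and the 2548 MB node memory.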

After restarting the Hadoop cluster and running the query again, a different error is reported:

Diagnostic Messages for this Task:

[2021-12-19 10:04:27.042]Container [pid=5821,containerID=container_1639879236798_0001_01_000005] is running 253159936B beyond the 'VIRTUAL' memory limit. Current usage: 92.0 MB of 1 GB physical memory used; 2.3 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1639879236798_0001_01_000005 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 5821 5820 5821 5821 (bash) 0 0 9797632 286 /bin/bash -c /opt/module/jdk1.8.0_161/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/atguigu/appcache/application_1639879236798_0001/container_1639879236798_0001_01_000005/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.17.42 42894 attempt_1639879236798_0001_m_000000_3 5 1>/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005/stdout 2>/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005/stderr

|- 5833 5821 5821 5821 (java) 338 16 2498220032 23276 /opt/module/jdk1.8.0_161/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/atguigu/appcache/application_1639879236798_0001/container_1639879236798_0001_01_000005/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.17.42 42894 attempt_1639879236798_0001_m_000000_3 5

The log shows that the container used only 92.0 MB of its 1 GB of physical memory but exceeded the 2.1 GB virtual memory limit (2.3 GB used). Physical memory is therefore sufficient, and the container is killed only by the virtual memory check, so we can disable that check:

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

Then restart the cluster and run the SQL again.

The query now returns its results normally.
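The figures in the container log can be reproduced with a little arithmetic. The sketch below assumes that the NodeManager derives the virtual memory limit by multiplying the physical limit by `yarn.nodemanager.vmem-pmem-ratio` (default 2.1) and that the ratio is held as a 32-bit float; both points are assumptions about the implementation, although they happen to match the logged overage exactly.

```python
import struct


def float32(x):
    """Round a Python float to the nearest 32-bit float value."""
    return struct.unpack("f", struct.pack("f", x))[0]


PMEM_LIMIT = 1 * 1024**3        # "1 GB physical memory" from the log
VMEM_PMEM_RATIO = float32(2.1)  # assumed default yarn.nodemanager.vmem-pmem-ratio

# VMEM_USAGE(BYTES) of the bash and java processes in the process-tree dump.
vmem_used = 9797632 + 2498220032

vmem_limit = int(VMEM_PMEM_RATIO * PMEM_LIMIT)
overage = vmem_used - vmem_limit

print(vmem_limit)  # virtual memory limit in bytes (about 2.1 GB)
print(overage)     # bytes beyond the limit; compare with the log message
```

Under these assumptions the overage equals the 253159936 bytes reported in the "running ... beyond the 'VIRTUAL' memory limit" message, which confirms that only the virtual memory check was tripped.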

1. Restart the ClickHouse service

sudo service clickhouse-server start

2. Error message

Start clickhouse-server service: Poco::Exception. Code: 1000, e.code() = 0,

e.displayText() = Exception: Failed to merge config with '/etc/clickhouse-server/config.d/metric_log.xml':

Exception: Root element doesn't have the corresponding root element as the config file.

It must be <yandex> (version 21.3.4.25 (official build))

Cannot obtain value of path from config file: /etc/clickhouse-server/config.xml

3. Problem analysis

The ClickHouse server failed to start because it could not merge its main configuration with /etc/clickhouse-server/config.d/metric_log.xml: the root element of that file does not match the root element of the main config file, which must be <yandex> (version 21.3.4.25, official build). As a result, the path value could not be read from /etc/clickhouse-server/config.xml.

In other words, the content of /etc/clickhouse-server/config.d/metric_log.xml cannot be merged as it stands. Version 21.3.4.25 requires the content of the configuration file to be placed inside a <yandex> tag.

4. Solutions

# Open the file /etc/clickhouse-server/config.d/metric_log.xml

vi /etc/clickhouse-server/config.d/metric_log.xml

# Content of metric_log.xml before modification

<clickhouse>

<metric_log>

<database>system</database>

<table>metric_log</table>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

<collect_interval_milliseconds>1000</collect_interval_milliseconds>

</metric_log>

</clickhouse>

# Wrap the file content in a <yandex> tag

# Contents of the modified metric_log.xml file

<yandex>

<clickhouse>

<metric_log>

<database>system</database>

<table>metric_log</table>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

<collect_interval_milliseconds>1000</collect_interval_milliseconds>

</metric_log>

</clickhouse>

</yandex>

# Save the file and start the service again

sudo service clickhouse-server start

# Prompt after execution

Start clickhouse-server service: Path to data directory in /etc/clickhouse-server/config.xml: /data/clickhouse/

DONE
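The rule behind this error, that an override file must share the root element of the main config, can be checked before restarting. This is a minimal sketch: the function name and the inline XML strings are hypothetical stand-ins for the real files, and it only compares root element names; it does not reproduce ClickHouse's actual merge logic.

```python
import xml.etree.ElementTree as ET


def root_matches(main_config_xml, override_xml):
    """Return True if both XML documents have the same root element name."""
    main_root = ET.fromstring(main_config_xml).tag
    override_root = ET.fromstring(override_xml).tag
    return main_root == override_root


# Hypothetical stand-ins for config.xml and config.d/metric_log.xml.
MAIN = "<yandex><path>/data/clickhouse/</path></yandex>"
BEFORE = (
    "<clickhouse><metric_log><database>system</database>"
    "<table>metric_log</table></metric_log></clickhouse>"
)
AFTER = "<yandex>" + BEFORE + "</yandex>"  # the wrapped form used in this article

print(root_matches(MAIN, BEFORE))  # root elements differ before the change
print(root_matches(MAIN, AFTER))   # root elements agree after the change
```

In practice the two strings would be read from the files under /etc/clickhouse-server/ instead of being written inline.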

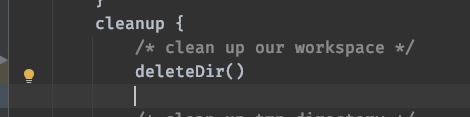

Today a developer reported that a Jenkins build job that had been working fine had started to fail.

The log pointed to a problem with deleteDir:

org.jenkinsci.plugins.workflow.steps.MissingContextVariableException: Required context class hudson.FilePath is missing

Perhaps you forgot to surround the code with a step that provides this, such as: node

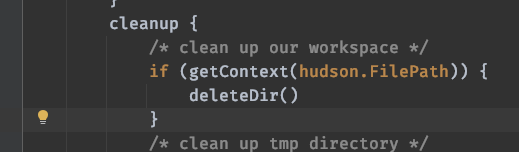

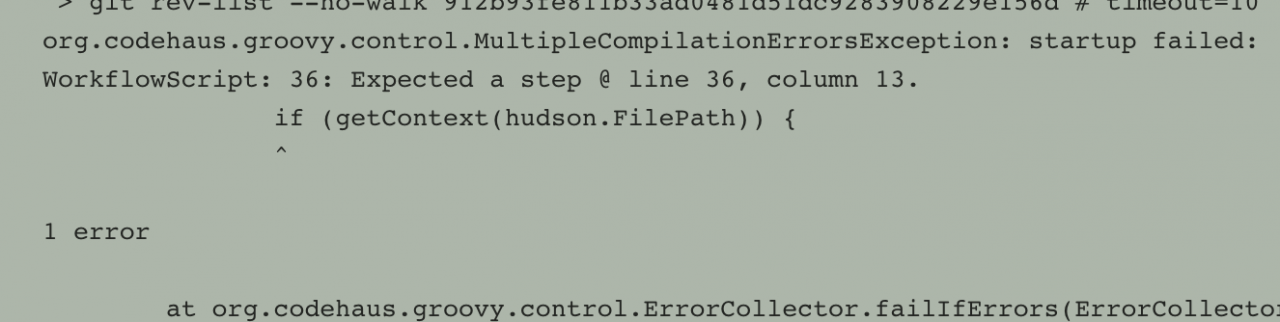

After the developer adjusted the pipeline, a different error appeared.

Unable to find a solution, the developer came to me.

I looked at the pipeline:

pipeline {

agent{ label 'qa-gpu016.test.cn' }

parameters {

string(defaultValue: '0.0.0', description: 'Version number', name: 'version', trim: false)

}

......

The job is scheduled on qa-gpu016.test.cn, so I logged in to qa-gpu016.test.cn to take a look.

The disk turned out to be full. Since I did not know whose data could safely be deleted, I only cleaned up unused images and containers:

root@qa-gpu016:/# docker system df

TYPE TOTAL ACTIVE SIZE RECLAIMABLE

Images 12 5 21.67GB 20.58GB (94%)

Containers 6 6 4.765MB 0B (0%)

Local Volumes 544 2 197.3GB 197.1GB (99%)

Build Cache 0 0 0B 0B

root@qa-gpu016:/home# docker system prune -a

WARNING! This will remove:

- all stopped containers

- all networks not used by at least one container

- all images without at least one container associated to them

- all build cache

Are you sure you want to continue?[y/N] y

Deleted Containers:

e0a493a142c680033c0bce6eed8e2ee3538b6e967077c6d8e27110d992ae543e

d0283ada7950f92dbf43f02a543a12eb4efc0a1a285516500ae2ccbd87ad58f7

fbaba3555782cace190c5d8c471ab302b0a9ed8372f881899c3c421aaac96a32

facab655ec5f0bc7a1737ced60353be2c6a51a79f80cfcec04bc2b63c802fe5f

095d2f0c22483c7515c2a17bb19489fe4f52cd7251ff865bd23893ae7064e015

db4614f42fe72795335af99b64fbac7f3b41311631d349511b593b186acb6e53

4fc5b1870cb1fb4ddd5197d70b0f80ef8be224229417d0ea7b5e5f3ce94da214

53d9edf531c88c27cb31b0039427cb0bc9f7a5d8806ebda608f7bb604252fcb5

8d150da1189ba204d318cb8e028c47d71df4be0ff23a69bb1fe6a0403e938313

Deleted Images:

untagged: be510_test:0.0.217

deleted: sha256:cc9822c1c293e75b5a2806c2a84b34b908b3768f92b246a7ff415cf7f0ec0f37

deleted: sha256:f21a13c9453fb0a846c57c285251ece8d8fc95b803801e9f982891659217527a

deleted: sha256:38c1da0485daa7b5593dff9784da12af855290da40ee20600bc3ce864fb43fc0

......

root@qa-gpu016:/#

Then I told the developer to remove the condition they had added and keep the cleanup block as it was:

......

cleanup {

/* clean up our workspace */

deleteDir()

/* clean up tmp directory */

dir("${workspace}@tmp") {

deleteDir()

}

/* clean up script directory */

dir("${workspace}@script") {

deleteDir()

}

}

......

After a rebuild, the developer reported that the job now pulls the image and enters the compilation stage, whereas before it failed before getting that far.

With that, the problem was solved.
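Since the root cause here was a full disk on the build agent, a small pre-flight check can surface the condition before a build fails with a misleading error. The sketch below is an illustration only: the function name, the 90 percent threshold, and the injectable `usage` parameter (used for the demonstration with fake numbers) are assumptions, not part of the original pipeline.

```python
import shutil
from collections import namedtuple


def disk_is_full(path="/", threshold=90.0, usage=shutil.disk_usage):
    """Return True when the filesystem holding `path` is at or above
    `threshold` percent used."""
    stats = usage(path)
    used_percent = 100.0 * stats.used / stats.total
    return used_percent >= threshold


# Demonstration with fake numbers so the result does not depend on the host.
FakeUsage = namedtuple("FakeUsage", "total used free")

print(disk_is_full("/", usage=lambda _path: FakeUsage(100, 99, 1)))   # nearly full
print(disk_is_full("/", usage=lambda _path: FakeUsage(100, 10, 90)))  # plenty of space

# On a real agent, call it without the `usage` argument:
print(disk_is_full("/"))
```

Such a check could run at the start of a job on the agent so that a full disk is reported as such.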

The specific error information is as follows:

[sonkwo@sonkwo-bj-data001 hive-3.1.2]$ bin/hive

which: no hbase in (/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/module/jdk1.8.0_212/bin:/opt/module/ha/hadoop-3.1.3/bin:/opt/module/ha/hadoop-3.1.3/sbin:/opt/module/zookeeper-3.5.7/bin:/opt/module/hive-3.1.2/bin:/home/sonkwo/.local/bin:/home/sonkwo/bin)

Hive Session ID = cb685500-b6ba-42be-b652-1aa7bdf0e134

Logging initialized using configuration in jar:file:/opt/module/hive-3.1.2/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-error

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:98)

at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:2017)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1441)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3125)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1173)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:973)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:527)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1036)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1000)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:928)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2916)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:651)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:591)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:747)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:318)

at org.apache.hadoop.util.RunJar.main(RunJar.java:232)

Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-error

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:98)

at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:2017)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1441)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getFileInfo(FSNamesystem.java:3125)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getFileInfo(NameNodeRpcServer.java:1173)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getFileInfo(ClientNamenodeProtocolServerSideTranslatorPB.java:973)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:527)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1036)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1000)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:928)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2916)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1545)

at org.apache.hadoop.ipc.Client.call(Client.java:1491)

at org.apache.hadoop.ipc.Client.call(Client.java:1388)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:118)

at com.sun.proxy.$Proxy28.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:904)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:422)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:165)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:157)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:359)

at com.sun.proxy.$Proxy29.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1661)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1577)

at org.apache.hadoop.hdfs.DistributedFileSystem$29.doCall(DistributedFileSystem.java:1574)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1589)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1683)

at org.apache.hadoop.hive.ql.exec.Utilities.ensurePathIsWritable(Utilities.java:4486)

at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:760)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:701)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:627)

... 9 more

Reason:

All three NameNodes are in the standby state, so none of them can serve read requests:

Overview ‘sonkwo-bj-data001:8020’ (standby)

Overview ‘sonkwo-bj-data002:8020’ (standby)

Overview ‘sonkwo-bj-data003:8020’ (standby)
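The condition above, no NameNode in the active state, is easy to detect from a list of "host:port state" lines. The sketch below assumes that plain-text format, similar to what `hdfs haadmin -getAllServiceState` is expected to print; the exact output format and the function names are assumptions.

```python
def parse_ha_states(report):
    """Map each 'host:port' to its HA state from lines like 'host:port state'."""
    states = {}
    for line in report.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            states[parts[0]] = parts[-1].lower()
    return states


def has_active_namenode(states):
    """Return True if at least one NameNode reports the active state."""
    return any(state == "active" for state in states.values())


# States transcribed from the overview lines in this article.
REPORT = """\
sonkwo-bj-data001:8020 standby
sonkwo-bj-data002:8020 standby
sonkwo-bj-data003:8020 standby
"""

states = parse_ha_states(REPORT)
print(states)
print(has_active_namenode(states))  # no active NameNode in this report
```

When the check reports no active NameNode, clients such as Hive fail with the StandbyException shown in the stack trace.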

Solution:

1) Stop the HDFS cluster

stop-dfs.sh

2) Start the ZooKeeper cluster

zk.sh start

3) Initialize the HA state in ZooKeeper

hdfs zkfc -formatZK

4) Start the HDFS service

start-dfs.sh

Run bin/hive again; it now connects successfully:

hive>

[1]+ Stopped hive

[sonkwo@sonkwo-bj-data001 hive-3.1.2]$ hive

which: no hbase in (/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/module/jdk1.8.0_212/bin:/opt/module/ha/hadoop-3.1.3/bin:/opt/module/ha/hadoop-3.1.3/sbin:/opt/module/zookeeper-3.5.7/bin:/opt/module/hive-3.1.2/bin:/home/sonkwo/.local/bin:/home/sonkwo/bin)

Hive Session ID = 698d0919-f46c-42c4-b92e-860f501a7711

Logging initialized using configuration in jar:file:/opt/module/hive-3.1.2/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

INTEL MKL ERROR: /opt/intel/mkl/lib/intel64/libmkl_avx2.so: undefined symbol: mkl_sparse_optimize_bsr_trsm_i8.

Intel MKL FATAL ERROR: Cannot load libmkl_avx2.so or libmkl_def.so.

Set LD_PRELOAD in the shell before running the program:

export LD_PRELOAD=~/anaconda3/lib/libmkl_core.so:~/anaconda3/lib/libmkl_sequential.so

ERROR: Couldn’t create the kaldinnet2onlinedecoder element!

Couldn’t find kaldinnet2onlinedecoder element at /home/cs_hsl/kaldi/src/gst-plugin. If it’s not the right path, try to set GST_PLUGIN_PATH to the right one, and retry. You can also try to run the following command: ‘GST_PLUGIN_PATH=/home/cs_hsl/kaldi/src/gst-plugin gst-inspect-1.0 kaldinnet2onlinedecoder’.

Point GST_PLUGIN_PATH at the src directory of the gst-kaldi-nnet2-online installation:

export GST_PLUGIN_PATH=/home/cs_hsl/kaldi/tools/gst-kaldi-nnet2-online/src

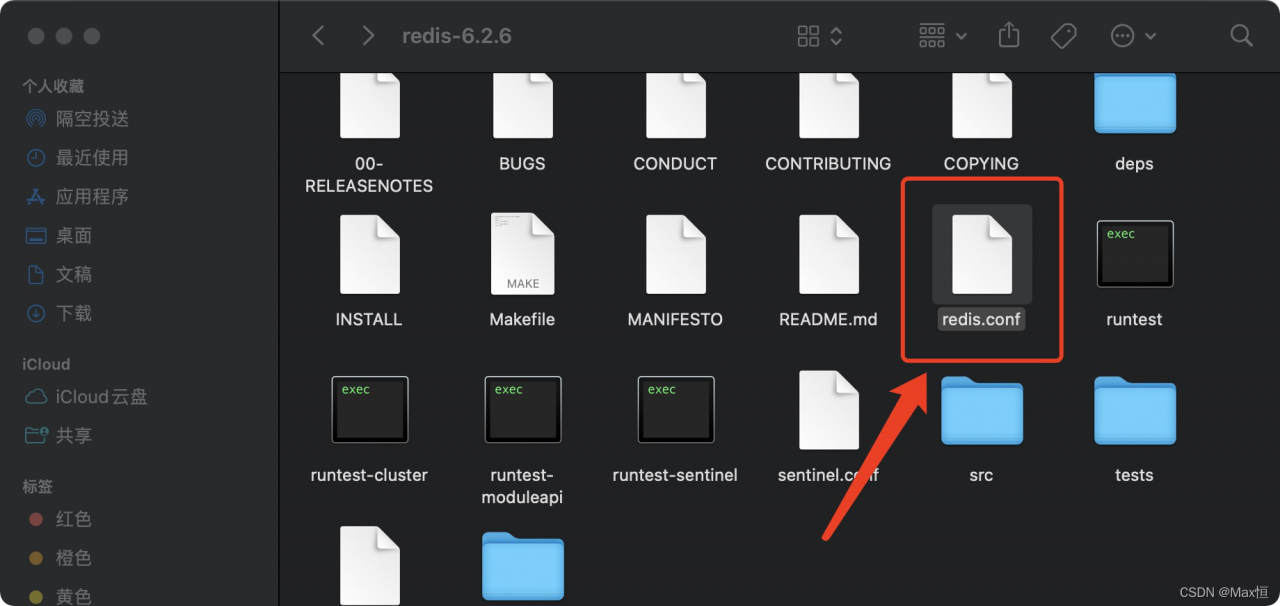

Errors are reported as follows:

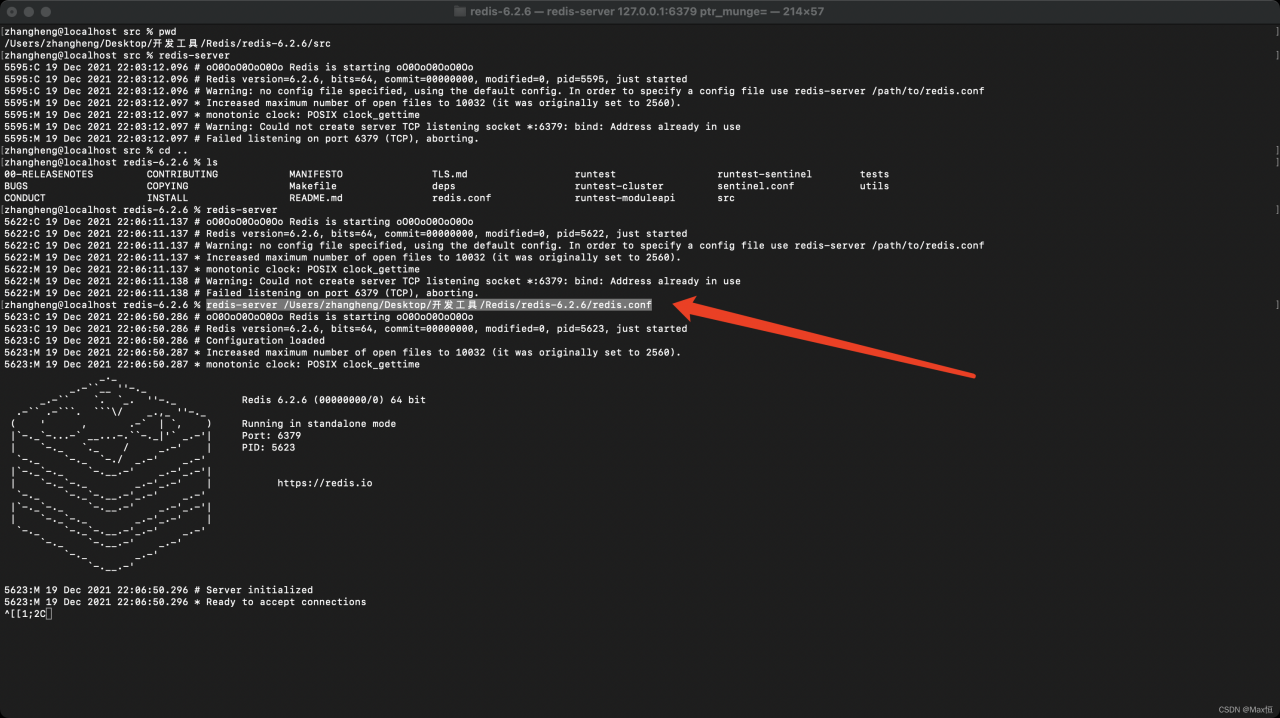

Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf

The reason for the warning is that the corresponding conf file needs to be specified at startup. The startup command is as follows:

redis-server /Absolute path/to/redis.conf

Locate redis.conf on your machine and copy its path.

Open the macOS Terminal, cd to the Redis directory, and enter:

redis-server /Users/zhangheng/Desktop/Tools/Redis/redis-6.2.6/redis.conf

Press Enter and Redis starts normally.

Environment

<spring-boot.version>2.5.3</spring-boot.version>

<spring-cloud.version>2020.0.3</spring-cloud.version>

<spring-cloud-alibaba.version>2021.1</spring-cloud-alibaba.version>

Error

Errors are reported during project startup, as follows:

2021-12-22 16:55:56.766 WARN 21922 --- [ main] ConfigServletWebServerApplicationContext : Exception encountered during context initialization - cancelling refresh attempt: org.springframework.beans.factory.support.BeanDefinitionOverrideException: Invalid bean definition with name 'oauth-client.FeignClientSpecification' defined in null: Cannot register bean definition [Generic bean: class [org.springframework.cloud.openfeign.FeignClientSpecification]; scope=; abstract=false; lazyInit=null; autowireMode=0; dependencyCheck=0; autowireCandidate=true; primary=false; factoryBeanName=null; factoryMethodName=null; initMethodName=null; destroyMethodName=null] for bean 'oauth-client.FeignClientSpecification': There is already [Generic bean: class [org.springframework.cloud.openfeign.FeignClientSpecification]; scope=; abstract=false; lazyInit=null; autowireMode=0; dependencyCheck=0; autowireCandidate=true; primary=false; factoryBeanName=null; factoryMethodName=null; initMethodName=null; destroyMethodName=null] bound.

2021-12-22 16:55:56.776 INFO 21922 --- [ main] ConditionEvaluationReportLoggingListener :

Error starting ApplicationContext. To display the conditions report re-run your application with 'debug' enabled.

2021-12-22 16:55:56.799 ERROR 21922 --- [ main] o.s.b.d.LoggingFailureAnalysisReporter :

***************************

APPLICATION FAILED TO START

***************************

Description:

The bean 'xxx-client.FeignClientSpecification' could not be registered. A bean with that name has already been defined and overriding is disabled.

Action:

Consider renaming one of the beans or enabling overriding by setting spring.main.allow-bean-definition-overriding=true

Reason

Multiple interfaces in the project use @FeignClient to call the same service. By default, a given service name can only be used by one @FeignClient interface.

Solution:

1) Distinguish the clients with contextId (this did not work for me, and I could not determine why)

@FeignClient(value = "xxx-server", contextId = "oauth-client")

public interface OAuthClientFeignClient {

@GetMapping("/api/v1/oauth-clients/{clientId}")

Result<SysOauthClient> getOAuthClientById(@PathVariable String clientId);

}

2) Add the following code to the application.properties file.

#Allow the existence of multiple Feign calls to the same Service interface

spring.main.allow-bean-definition-overriding=true

3) Add the following code to the application.yml file.

spring:

main:

#Allow the existence of multiple Feign calls to the same Service interface

allow-bean-definition-overriding: true

The above code enables multiple interfaces to call the same service using @FeignClient.
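The name collision can be illustrated with a simplified model. It assumes the specification bean is named after the client's contextId, falling back to the service name when no contextId is given, followed by ".FeignClientSpecification"; this is consistent with the bean name 'oauth-client.FeignClientSpecification' in the log above but is not taken from the Spring Cloud source. All function names and the second contextId are hypothetical.

```python
from collections import Counter


def spec_bean_name(value, context_id=None):
    """Assumed naming rule: contextId if present, else the service name."""
    return f"{context_id or value}.FeignClientSpecification"


def duplicate_bean_names(clients):
    """Return the bean names that would be registered more than once."""
    counts = Counter(spec_bean_name(**client) for client in clients)
    return sorted(name for name, n in counts.items() if n > 1)


# Two interfaces calling the same service without contextId: the names collide.
without_context = [
    {"value": "xxx-server"},
    {"value": "xxx-server"},
]

# The same two interfaces with distinct contextId values
# ("user-client" is a hypothetical second client).
with_context = [
    {"value": "xxx-server", "context_id": "oauth-client"},
    {"value": "xxx-server", "context_id": "user-client"},
]

print(duplicate_bean_names(without_context))
print(duplicate_bean_names(with_context))
```

Under this model, two interfaces that share the same contextId would still collide, which is one possible explanation for option 1 not helping.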

――― MARKDOWN TEMPLATE ―――――――――――――――――――――――――――――――――――――――――――――――――――――――――――

### Command

/usr/local/bin/pod install

### Report

* What did you do?

* What did you expect to happen?

* What happened instead?

### Stack

CocoaPods : 1.8.4

Ruby : ruby 2.6.3p62 (2019-04-16 revision 67580) [x86_64-darwin18]

RubyGems : 3.0.6

Host : Mac OS X 10.14.5 (18F132)

Xcode : 11.3.1 (11C504)

Git : git version 2.19.0

Ruby lib dir : /Users/huanghaipo/.rvm/rubies/ruby-2.6.3/lib

Repositories : HPSpecs - git - https://github.com/HuangHaiPo/HPSpecs.git @ 87ca8ef48b56269780abb0e14211ca1f2e121217

HuModularizationSpecs - git - [email protected]:HuModularizationLibrary/HuModularizationSpecs.git @ cd28555226d424e50e8e7454c3a8a5b287e4b9f2

master - git - https://github.com/CocoaPods/Specs.git @ 061335ab06da5c1f542cdb56bc0d73f9af2d1984

### Podfile

ruby

source 'https://github.com/CocoaPods/Specs.git'

# Uncomment this line to define a global platform for your project

platform :ios, '9.0'

――― TEMPLATE END ――――――――――――――――――――――――――――――――――――――――――――――――――――――――――――――――

[!] Oh no, an error occurred.

Search for existing GitHub issues similar to yours:

pe=Issues

If none exists, create a ticket, with the template displayed above, on:

https://github.com/CocoaPods/CocoaPods/issues/new

Be sure to first read the contributing guide for details on how to properly submit a ticket:

https://github.com/CocoaPods/CocoaPods/blob/master/CONTRIBUTING.md

Don't forget to anonymize any private data!

Looking for related issues on cocoapods/cocoapods...

Searching for inspections failed: undefined method `map' for nil:NilClass

Solution:

Run the following command to update the local spec repositories. If your CocoaPods version is outdated, upgrade it as well.

pod repo update

Upgrade:

sudo gem update --system

sudo gem install cocoapods

pod setup

Simply add the following attribute to the application element in AndroidManifest.xml:

android:supportsRtl="true"

That is all that is needed.