One

TensorFlow provides an exponential decay method to deal with the problem of choosing a learning rate.

The `tf.train.exponential_decay` function implements an exponentially decaying learning rate.

Steps:

1. First, use a relatively large learning rate (purpose: to reach a good solution quickly);

2. Then, reduce the learning rate gradually as the iterations proceed.

The decayed learning rate is computed as:


```
decayed_learning_rate = learning_rate * decay_rate ^ (global_step / decay_steps)
```

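Here, `decayed_learning_rate` is the learning rate used in each round of optimization;

`learning_rate` is the preset initial learning rate;

`decay_rate` is the decay coefficient;

`global_step` is the current training step (the number of iterations run so far);

`decay_steps` is the decay speed: the number of steps over which the learning rate is multiplied by `decay_rate` once.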
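As a quick check of the formula, the plain-Python sketch below evaluates it directly. It does not call TensorFlow, and the helper name `decayed_learning_rate` is hypothetical; it uses true (floating-point) division for `global_step / decay_steps`.

```python
def decayed_learning_rate(learning_rate, global_step, decay_rate, decay_steps):
    """Hypothetical helper (not a TensorFlow function): evaluates
    learning_rate * decay_rate ** (global_step / decay_steps)."""
    return learning_rate * decay_rate ** (global_step / decay_steps)


# Initial rate 0.1, decay coefficient 0.96, one decay period every 100 steps.
for step in (0, 50, 100, 200):
    print(step, decayed_learning_rate(0.1, step, 0.96, 100))
```

With these values the rate is 0.1 × 0.96 = 0.096 after 100 steps and 0.1 × 0.96² = 0.09216 after 200 steps; at step 50 it is already slightly below 0.1 because the exponent is fractional.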

The `staircase` argument of `tf.train.exponential_decay` selects between two decay modes. It defaults to `False`, in which case the learning rate decays smoothly at every step. When it is `True`, `global_step / decay_steps` is truncated to an integer, so the learning rate only drops once every `decay_steps` steps.
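The difference between the two modes can be sketched in plain Python. The function `exponential_decay_sketch` is hypothetical: its argument order follows `tf.train.exponential_decay`, but it is only an illustration of the rule, not the TensorFlow implementation, and it assumes a non-negative integer `global_step` so that `//` matches the integer truncation.

```python
def exponential_decay_sketch(learning_rate, global_step, decay_steps, decay_rate,
                             staircase=False):
    """Hypothetical plain-Python version of the decay rule."""
    p = global_step / decay_steps
    if staircase:
        # Truncate to an integer: the rate only changes at multiples of decay_steps.
        p = global_step // decay_steps
    return learning_rate * decay_rate ** p


# Step 150 lies halfway through the second decay period.
smooth = exponential_decay_sketch(0.1, 150, 100, 0.96)                  # 0.1 * 0.96 ** 1.5
stepped = exponential_decay_sketch(0.1, 150, 100, 0.96, staircase=True)  # 0.1 * 0.96 ** 1
print(smooth, stepped)
```

Between two multiples of `decay_steps` the staircase value stays constant and is slightly higher than the smooth value; at the multiples themselves the two modes agree.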

Code example:


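```python
import tensorflow as tf  # TensorFlow 1.x API

# Training-step counter; not trainable, so the optimizer does not try to update it
global_step = tf.Variable(0, trainable=False)
# Generate the learning rate: start at 0.1, multiply by 0.96 every 100 steps
learning_rate = tf.train.exponential_decay(0.1, global_step, 100, 0.96, staircase=True)
# Use the exponentially decaying learning rate; minimize() also increments global_step.
# The loss argument is elided in the original.
learning_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(….., global_step=global_step)
```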

Here the initial learning rate is 0.1 and `staircase=True`, so the learning rate is multiplied by 0.96 after every 100 rounds of training.
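In TensorFlow 1.x, passing `global_step` to `minimize()` makes the optimizer increment it by one after each update, and that counter is what advances the schedule. The loop below imitates this in plain Python as a sketch; the single parameter `w`, the quadratic loss (w - 3)², and the 300-step run are hypothetical choices made only for illustration.

```python
def learning_rate_at(global_step, initial=0.1, decay_rate=0.96, decay_steps=100):
    # staircase=True: the exponent is the integer global_step // decay_steps
    return initial * decay_rate ** (global_step // decay_steps)


def train(num_steps=300):
    w = 0.0            # hypothetical single trainable parameter
    global_step = 0    # plays the role of the tf.Variable(0) counter
    rates = []
    for _ in range(num_steps):
        lr = learning_rate_at(global_step)
        grad = 2.0 * (w - 3.0)   # gradient of the loss (w - 3)^2
        w -= lr * grad           # gradient-descent update
        global_step += 1         # what minimize(..., global_step=global_step) does
        rates.append(lr)
    return w, global_step, rates


w, steps, rates = train()
print(w, steps, rates[0], rates[100], rates[299])
```

The rate used at step 0 to 99 is 0.1, at steps 100 to 199 it is 0.096, and so on; without incrementing the counter the schedule would never move.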

In general, the initial learning rate, decay coefficient, and decay speed are set subjectively (i.e. empirically). Moreover, how fast the loss falls in the early rounds is not necessarily related to the loss reached after training finishes, so the quality of different networks cannot be compared by how quickly their loss drops in the first few rounds.

Two

`tf.train.exponential_decay(learning_rate, global_, decay_steps, decay_rate, staircase=True/False)`

For example:


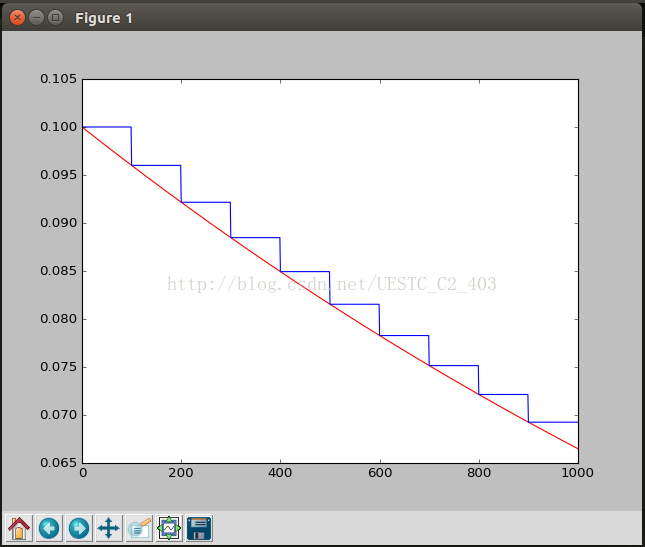

```python
# Written for TensorFlow 1.x; under TF 2.x use tf.compat.v1 and call
# tf.compat.v1.disable_eager_execution() first.
import tensorflow as tf
import matplotlib.pyplot as plt

learning_rate = 0.1    # initial learning rate
decay_rate = 0.96      # decay coefficient
global_steps = 1000    # total number of iterations
decay_steps = 100      # decay speed

global_ = tf.Variable(tf.constant(0))
c = tf.train.exponential_decay(learning_rate, global_, decay_steps, decay_rate, staircase=True)
d = tf.train.exponential_decay(learning_rate, global_, decay_steps, decay_rate, staircase=False)

T_C = []  # learning rates with staircase=True
F_D = []  # learning rates with staircase=False
with tf.Session() as sess:
    for i in range(global_steps):
        # Feed the step number directly instead of running an optimizer
        T_c = sess.run(c, feed_dict={global_: i})
        T_C.append(T_c)
        F_d = sess.run(d, feed_dict={global_: i})
        F_D.append(F_d)

plt.figure(1)
plt.plot(range(global_steps), F_D, 'r-')  # staircase=False: red
plt.plot(range(global_steps), T_C, 'b-')  # staircase=True: blue
plt.show()
```

Analysis:

The initial learning rate is 0.1 and the total number of iterations is 1000. With `staircase=True`, the learning rate changes only once every `decay_steps` (100) steps, so its curve drops in steps; with `staircase=False`, the learning rate is updated at every step, so its curve decreases smoothly. In the plot, red is `staircase=False` and blue is `staircase=True`.
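The plotted data can also be recomputed without a `tf.Session`, which makes the shape of both curves easy to check. This sketch assumes the same constants as the listing above and uses ordinary Python floats, whereas TensorFlow computes in float32, so the individual values may differ in the last digits.

```python
learning_rate = 0.1
decay_rate = 0.96
global_steps = 1000
decay_steps = 100

# Same data as T_C (staircase=True) and F_D (staircase=False) in the listing,
# computed directly from the formula.
T_C = [learning_rate * decay_rate ** (i // decay_steps) for i in range(global_steps)]
F_D = [learning_rate * decay_rate ** (i / decay_steps) for i in range(global_steps)]

# The staircase curve takes exactly one value per decay period ...
print(len(set(T_C)))                          # → 10
# ... and never lies below the smooth curve, touching it at multiples of 100.
print(all(t >= f for t, f in zip(T_C, F_D)))  # → True
```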

Results:
