The tf.reduce_mean function computes the mean of a tensor along a specified axis (one dimension of the tensor). It is mainly used to reduce dimensions or to compute the mean of a tensor such as an image.

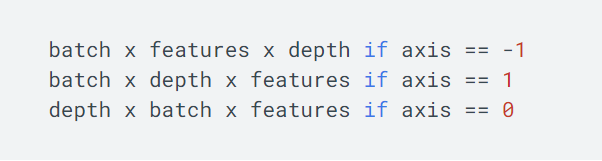

    reduce_mean(input_tensor,
                axis=None,
                keep_dims=False,
                name=None,
                reduction_indices=None)

The first parameter, input_tensor, is the tensor to be reduced. The second, axis, specifies the axis to reduce along; if it is not specified, the mean of all elements is computed. The third, keep_dims, controls whether the reduced dimensions are kept: if True, each reduced axis is retained with length 1, so the output has the same rank as the input; if False, the reduced axes are removed. The fourth, name, is an optional name for the operation. The fifth, reduction_indices, was used to specify the axis in earlier versions and is deprecated in favor of axis. Later TensorFlow 1.x releases also deprecate keep_dims in favor of keepdims, and TensorFlow 2.x accepts only keepdims.
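To make the roles of axis and keep_dims concrete, here is a minimal plain-Python sketch of the same reduction on a 2-D nested list. The helper reduce_mean_2d is hypothetical and written only for illustration; it is not part of TensorFlow and handles only rank-2 inputs.

```python
def reduce_mean_2d(rows, axis=None, keep_dims=False):
    """Plain-Python illustration of mean reduction on a 2-D nested list.

    Hypothetical helper for explanation only; not part of TensorFlow.
    """
    n_rows, n_cols = len(rows), len(rows[0])
    if axis is None:
        # Reduce over every element.
        total = sum(sum(row) for row in rows)
        mean = total / (n_rows * n_cols)
        return [[mean]] if keep_dims else mean
    if axis == 0:
        # Collapse the rows: one mean per column.
        means = [sum(row[c] for row in rows) / n_rows for c in range(n_cols)]
        return [means] if keep_dims else means
    if axis == 1:
        # Collapse the columns: one mean per row.
        means = [sum(row) / n_cols for row in rows]
        return [[m] for m in means] if keep_dims else means
    raise ValueError("axis must be None, 0, or 1 for a 2-D input")


x = [[1.0, 2.0, 3.0],
     [1.0, 2.0, 3.0]]

print(reduce_mean_2d(x))                          # 2.0
print(reduce_mean_2d(x, axis=0))                  # [1.0, 2.0, 3.0]
print(reduce_mean_2d(x, axis=1))                  # [2.0, 2.0]
print(reduce_mean_2d(x, axis=1, keep_dims=True))  # [[2.0], [2.0]]
```

With keep_dims=True the result always stays a list of lists, which mirrors how the tensor version keeps a length-1 axis in place of each reduced one.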

Take a rank-2 tensor with shape [2, 3] as an example:

    import tensorflow as tf  # TensorFlow 1.x (graph mode with tf.Session)

    x = [[1, 2, 3],
         [1, 2, 3]]

    # Cast to float32 so the mean is computed in floating point.
    xx = tf.cast(x, tf.float32)

    mean_all = tf.reduce_mean(xx, keep_dims=False)        # mean of all elements
    mean_0 = tf.reduce_mean(xx, axis=0, keep_dims=False)  # reduce along axis 0
    mean_1 = tf.reduce_mean(xx, axis=1, keep_dims=False)  # reduce along axis 1

    with tf.Session() as sess:
        m_a, m_0, m_1 = sess.run([mean_all, mean_0, mean_1])

    print(m_a)  # output: 2.0
    print(m_0)  # output: [ 1. 2. 3.]
    print(m_1)  # output: [ 2. 2.]

If you keep the reduced dimensions by setting keep_dims=True, the results become:

    print(m_a)  # output: [[ 2.]]
    print(m_0)  # output: [[ 1. 2. 3.]]
    print(m_1)  # output: [[ 2.], [ 2.]]
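The example above relies on tf.Session and keep_dims, which belong to the TensorFlow 1.x API. As a rough sketch, assuming TensorFlow 2.x with eager execution (the default there), the same computation can be written without a session, using the keepdims spelling of the argument:

```python
import tensorflow as tf  # assumes TensorFlow 2.x (eager execution)

# A float tensor of shape [2, 3]; float input avoids integer averaging.
x = tf.constant([[1.0, 2.0, 3.0],
                 [1.0, 2.0, 3.0]])

mean_all = tf.reduce_mean(x)                            # all elements
mean_0 = tf.reduce_mean(x, axis=0)                      # shape [3]
mean_1 = tf.reduce_mean(x, axis=1)                      # shape [2]
mean_1_kept = tf.reduce_mean(x, axis=1, keepdims=True)  # shape [2, 1]

# In eager mode each result is a concrete tensor; .numpy() returns its value.
print(mean_all.numpy())
print(mean_0.numpy())
print(mean_1.numpy())
print(mean_1_kept.shape)
```

The values are the same as in the session-based version; only the execution model and the argument name differ.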

Similar functions include:

tf.reduce_sum: computes the sum of the elements along the specified axis of the tensor; tf.reduce_max: computes the maximum of the elements along the specified axis; tf.reduce_all: computes the logical AND of the elements along the specified axis; tf.reduce_any: computes the logical OR of the elements along the specified axis.
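These reductions follow the same axis convention as the mean. The sketch below illustrates the idea with plain Python built-ins on nested lists rather than with TensorFlow itself; the sample data and variable names are made up for illustration.

```python
x = [[1, 2, 3],
     [4, 5, 6]]
flags = [[True, False, True],
         [True, True, True]]

# axis=1: reduce within each row, giving one value per row.
row_sum = [sum(row) for row in x]       # analogous to reduce_sum(axis=1)
row_max = [max(row) for row in x]       # analogous to reduce_max(axis=1)
row_all = [all(row) for row in flags]   # analogous to reduce_all(axis=1)
row_any = [any(row) for row in flags]   # analogous to reduce_any(axis=1)

# axis=0: reduce down each column; zip(*rows) iterates over the columns.
col_sum = [sum(col) for col in zip(*x)]
col_max = [max(col) for col in zip(*x)]
col_all = [all(col) for col in zip(*flags)]
col_any = [any(col) for col in zip(*flags)]

print(row_sum, row_max, row_all, row_any)
print(col_sum, col_max, col_all, col_any)
```

Reducing a [2, 3] input along axis 1 leaves two values and along axis 0 leaves three, which matches the shapes seen for the mean.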