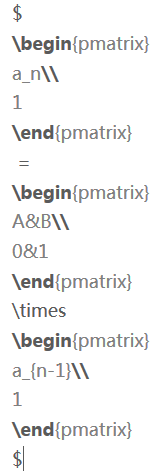

Table of contents

- 1. Error symptoms

- 2. Troubleshooting

- 2.1 Connection reset by peer

- 2.2 The syscall:read(..) failed: Connection reset by peer error

- 3. Final cause

1. Error symptoms

A service in our group switched from the spring-webmvc framework to spring-webflux. After it had been running in production for some time, the following error log appeared occasionally. In the log, L:/10.0.168.212:8805 is the IP and port of the server where the local service runs, and R:/10.0.168.38:47362 is the IP and port of the remote side, that is, the server where the requesting service runs. A common cause of this error is that the server is busy, but the call monitoring showed only normal traffic, nowhere near enough to make the service busy.

2020-05-110 10:35:38.462 ERROR reactor-http-epoll-1 [] reactor.netty.tcp.TcpServer.error(300) - [id: 0x230261ae, L:/10.0.168.212:8805 - R:/10.0.168.38:47362] onUncaughtException(SimpleConnection{channel=[id: 0x230261ae, L:/10.0.168.212:8805 - R:/10.0.168.38:47362]})

io.netty.channel.unix.Errors$NativeIoException: syscall:read(..) failed: Connection reset by peer

at io.netty.channel.unix.FileDescriptor.readAddress(..)(Unknown Source)

2. Troubleshooting

2.1 Connection reset by peer

This error is rarely encountered. The first thing that came to mind was, of course, to search the Internet for the error keywords, which turned up the following table. It makes clear that Connection reset by peer occurs when the server sends data over a socket connection that the peer has already closed, but why the peer closed the connection was still unknown.

| Exception | Cause |
| --- | --- |
| java.net.BindException: Address already in use: JVM_Bind | Occurs on the server side when calling new ServerSocket(port), because the port is already in use and being listened on. Running netstat -an shows the port in use locally; choosing an unoccupied port solves the problem. |
| java.net.ConnectException: Connection refused: connect | Occurs on the client side when calling new Socket(ip, port). Either the machine at that IP address cannot be reached (there is no route from the current machine to the specified IP), or the IP exists but nothing is listening on the specified port. |
| java.net.SocketException: Socket is closed | Can occur on both the client and the server. The local side performs a read or write on the connection after it has itself closed it (by calling the Socket close method). |
| java.net.SocketException: Connection reset or Connection reset by peer | Can occur on both the client and the server, for two reasons. First, one end has closed the socket (either deliberately, or because it exited abnormally, or for example because an idle timeout closed a connection that saw no reads or writes for 60 seconds) while the other end is still sending data; the first packet sent then raises Connection reset by peer. Second, one end exits without closing the connection, and the other end raises Connection reset when it reads from the connection again. In short, the exception is caused by reading or writing after the connection has been broken. |
| java.net.SocketException: Broken pipe | Can occur on both the client and the server. In the first case of the previous row (Connection reset by peer), writing data again after that exception raises this one. |
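The Connection reset row can be reproduced locally. The following is a minimal sketch using only the JDK, not code from the service in question: the class and method names are made up for illustration, and it forces the reset with SO_LINGER set to zero so that closing the accepted socket aborts the connection instead of closing it gracefully.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

public class ConnectionResetDemo {

    /**
     * Opens a loopback connection, has the server side abort it, then reads
     * on the client side. Returns the exception the client read raised, or
     * null if the read ended without one.
     */
    static IOException provokeReset() {
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress());
             Socket client = new Socket(server.getInetAddress(), server.getLocalPort());
             Socket accepted = server.accept()) {

            // SO_LINGER with a zero timeout makes close() abort the connection
            // with a TCP RST instead of the normal FIN handshake.
            accepted.setSoLinger(true, 0);
            accepted.close();

            try {
                client.getInputStream().read(); // the peer has already gone away
                return null;
            } catch (IOException e) {
                return e;
            }
        } catch (IOException setupFailure) {
            throw new UncheckedIOException(setupFailure);
        }
    }

    public static void main(String[] args) {
        IOException seen = provokeReset();
        System.out.println(seen == null ? "no exception" : seen.toString());
    }
}
```

The exact message text depends on the platform and on whether the failure is reported by the JDK socket classes or, as in the log above, by Netty's native epoll transport.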

2.2 The syscall:read(..) failed: Connection reset by peer error

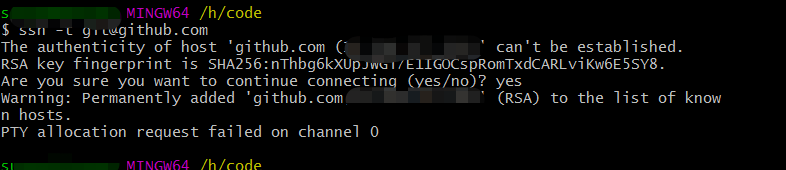

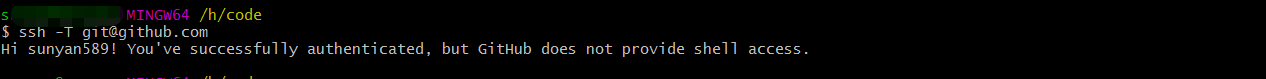

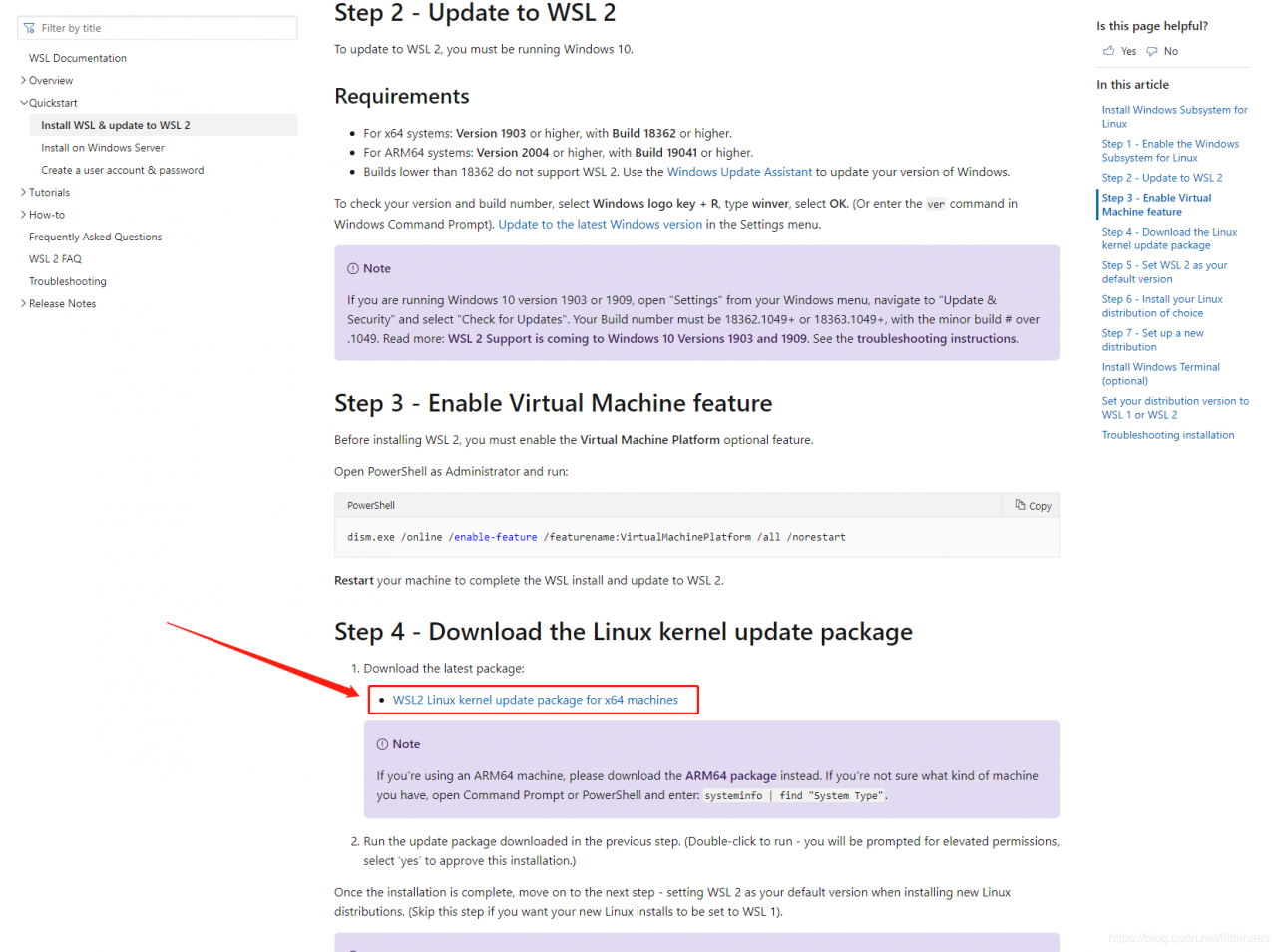

I kept searching with other keywords and eventually found an issue in the reactor-netty repository on GitHub. The problem reported there by other developers was almost exactly the same as the one I had encountered. After reading it carefully, I found that they had worked around it mainly in the following two ways:

- disabling long-lived (keep-alive) connections

- changing the load-balancing strategy to least connections

Judging from the comments, this mainly involves the connection pooling mechanism of reactor-netty. Netty is a framework built on NIO (see the Java IO model and examples), and reactor-netty uses a connection pool to sustain concurrent throughput when handling requests. Customizing the ClientHttpConnector with keep-alive set to false ensures that pooled connections are not held open for long periods, which seems to have been an effective fix for this error in the other developers' scenarios.
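For reference, disabling keep-alive on the calling side might look like the sketch below. It assumes a Spring WebFlux application that uses WebClient on top of Reactor Netty. The class and bean names are hypothetical, and this is not the configuration quoted in the GitHub issue.

```java
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.http.client.reactive.ReactorClientHttpConnector;
import org.springframework.web.reactive.function.client.WebClient;
import reactor.netty.http.client.HttpClient;

@Configuration
public class WebClientConfig {

    @Bean
    public WebClient webClient() {
        // Ask Reactor Netty not to keep connections alive between requests.
        HttpClient httpClient = HttpClient.create().keepAlive(false);

        // Plug the customized HttpClient into WebClient through the connector.
        return WebClient.builder()
                .clientConnector(new ReactorClientHttpConnector(httpClient))
                .build();
    }
}
```

The trade-off is that every request pays the cost of a new connection, so this is more of a workaround than a fix for whatever makes the peer drop idle connections.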

3. Final cause

After reading the comments on GitHub, I still felt that the other developers' scenarios were not exactly the same as ours, but I had no better idea for the moment. Our team lead raised the question in the internal group chat, and that evening a colleague finally found a clue in the logs.


The server's thread pool assigned thread reactor-http-epoll-1 to process request A. While reactor-http-epoll-1 was processing it, the thread stayed blocked on a slow SQL statement. The same interface was being called at high frequency during this period, so the other threads in the pool were assigned requests of the same type and blocked on the slow SQL as well. With every thread in the pool blocked, a newly arriving request had no thread to process it, so the requesting side was kept waiting until it timed out and actively closed its socket. Later, the server threads finally finished the slow SQL requests and went on to process the backlog. When a backlogged request finished and the server tried to send the data back to the requesting side, it found that the connection had already been closed, and the Connection reset by peer error was reported.

As a rule of thumb, if a service reports a Connection reset by peer error, first check whether some particularly slow operation in the service is blocking its threads.
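The blocking scenario can be reproduced in miniature with a plain JDK thread pool. This is only a sketch of the mechanism: the pool stands in for the server's worker threads, a sleep stands in for the slow SQL, and the class name, method name, and timings are all invented for illustration.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

public class BlockedPoolDemo {

    /**
     * Fills a fixed pool with slow tasks, then submits one cheap request and
     * waits for it the way a caller with a timeout would.
     * Returns true if the caller gave up before the request was served.
     */
    static boolean clientTimesOut(int poolSize, long slowTaskMillis, long clientTimeoutMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            // Every worker picks up a "slow SQL" task and blocks on it.
            for (int i = 0; i < poolSize; i++) {
                pool.submit(() -> sleep(slowTaskMillis));
            }
            // The new request is cheap, but no worker is free to run it.
            Future<String> response = pool.submit(() -> "ok");
            try {
                response.get(clientTimeoutMillis, TimeUnit.MILLISECONDS);
                return false;
            } catch (TimeoutException e) {
                return true; // a real caller would close its socket at this point
            } catch (Exception e) {
                throw new IllegalStateException(e);
            }
        } finally {
            pool.shutdownNow();
        }
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        // Two workers blocked for 500 ms each; the caller waits only 100 ms.
        System.out.println(clientTimesOut(2, 500, 100)); // prints true
    }
}
```

In the real service the late response is then written to a socket the caller has already closed, which is the point at which the reset is reported.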

Analysis of the slow SQL showed why the statement took so long to execute: a column of type VARCHAR in the MySQL table was compared against a condition value of type long. This caused an implicit type conversion, so the index could not be used and a full table scan was triggered.
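Why the conversion defeats the index can be illustrated with a toy model in plain Java. This is an analogy, not MySQL's implementation: a TreeMap stands in for an index on the VARCHAR column, the keys and row ids are made up, and Double.parseDouble only approximates MySQL's string-to-number conversion rules.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ImplicitConversionDemo {

    // Stand-in for an index on a VARCHAR column: string keys kept in sorted order.
    static final TreeMap<String, Integer> INDEX = new TreeMap<>();

    static {
        INDEX.put("1", 101);
        INDEX.put("01", 102);
        INDEX.put("1.0", 103);
        INDEX.put("2", 104);
    }

    /** String compared with string: a single ordered lookup in the index. */
    static List<Integer> findByString(String key) {
        Integer rowId = INDEX.get(key);
        return rowId == null
                ? Collections.<Integer>emptyList()
                : Collections.singletonList(rowId);
    }

    /** String compared with number: every key must be converted, so every entry is visited. */
    static List<Integer> findByNumber(long key) {
        List<Integer> rowIds = new ArrayList<>();
        for (Map.Entry<String, Integer> entry : INDEX.entrySet()) {
            if (Double.parseDouble(entry.getKey()) == key) {
                rowIds.add(entry.getValue());
            }
        }
        return rowIds;
    }

    public static void main(String[] args) {
        System.out.println(findByString("1")); // prints [101]
        System.out.println(findByNumber(1L));  // prints [102, 101, 103]
    }
}
```

Several different strings convert to the same number, and the sorted order of the strings says nothing about where those matches sit, so the numeric comparison has to inspect every entry. Passing the condition value as a string, so that it matches the column type, lets the ordered lookup be used again.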