Problem Description:

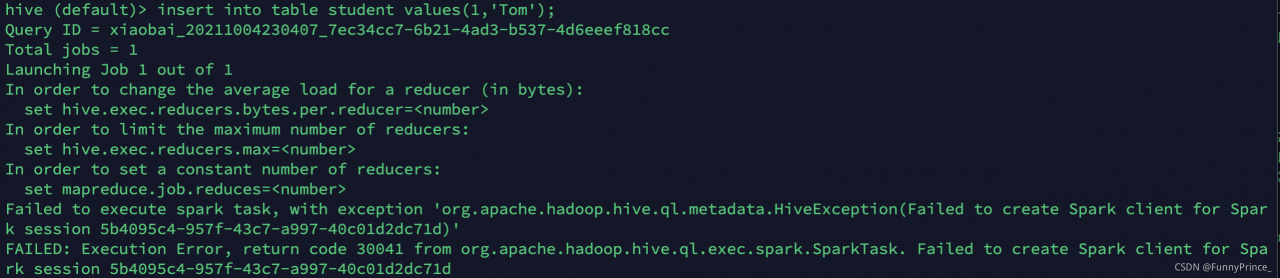

When deploying Hive on Spark, the test run fails: the table creation succeeds, but the following error occurs when executing an insert:

Failed to execute spark task, with exception 'org.apache.hadoop.hive.ql.metadata.HiveException(Failed to create Spark client for Spark session 2df0eb9a-15b4-4d81-aea1-24b12094bf44)'

FAILED: Execution Error, return code 30041 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Failed to create Spark client for Spark session 2df0eb9a-15b4-4d81-aea1-24b12094bf44

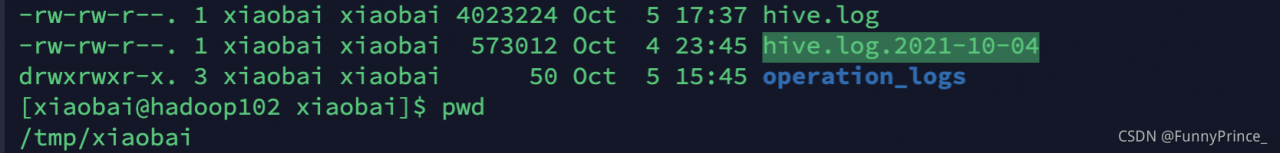

Check the Hive log under the /tmp/xiaobai path for entries around the time of the failure:

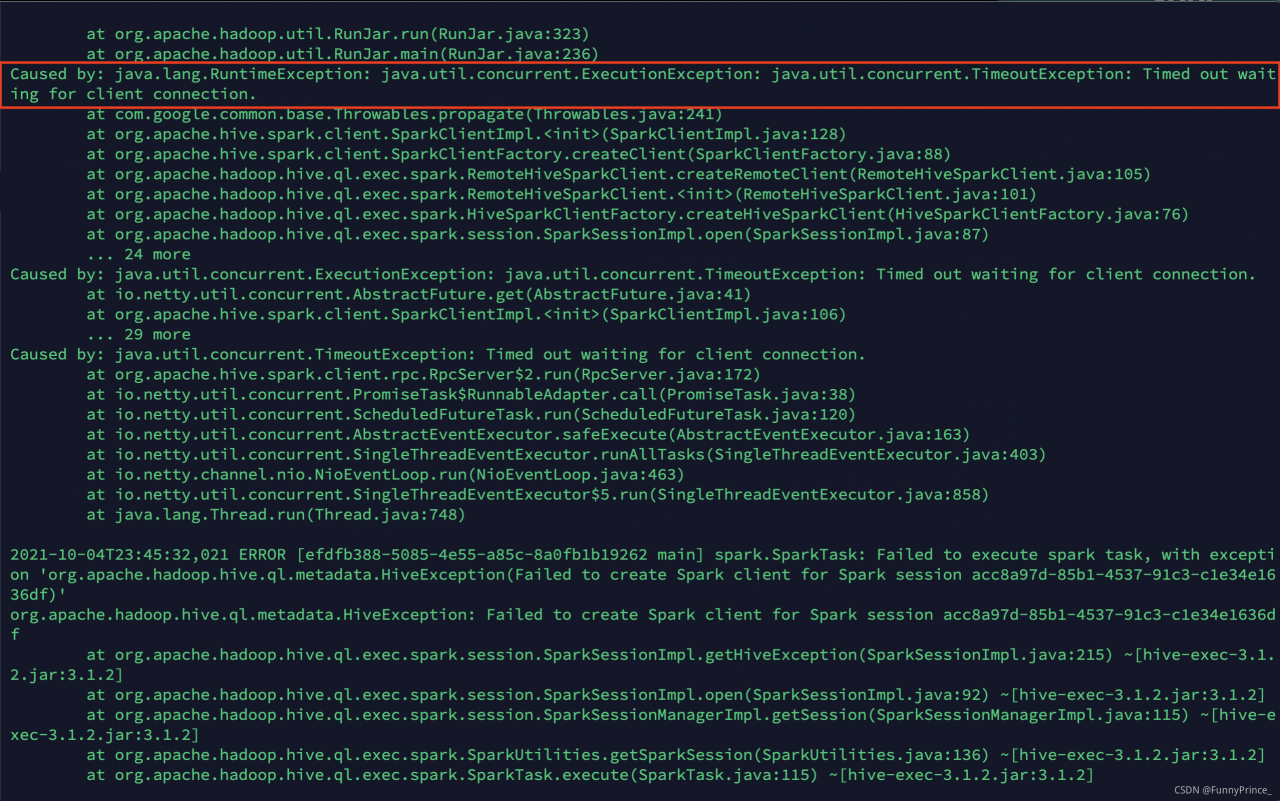

Cause Analysis

The log reports "timed out waiting for client connection", which indicates that the connection between Hive and Spark timed out.
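To find this message without scrolling through the whole log, a small search helper can be used. This is only a sketch: the function name find_client_timeouts is hypothetical, and the default file name hive.log is an assumption based on Hive's default log file name. Pass a different path if your log is elsewhere.

```shell
# Hypothetical helper: print the line numbers and text of timeout messages.
# Assumption: the log is the default hive.log in the directory mentioned above.
find_client_timeouts() {
    log_file="${1:-/tmp/xiaobai/hive.log}"
    # -n prints line numbers, -i ignores case differences in the message
    grep -n -i 'timed out waiting for client connection' "$log_file"
}
```

Calling find_client_timeouts with no argument searches the default path; each hit is printed as line-number:text.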

Solution

1). Rename the spark-env.sh.template file in the /opt/module/spark/conf/ directory to spark-env.sh, and then add the line export SPARK_DIST_CLASSPATH=$(hadoop classpath)

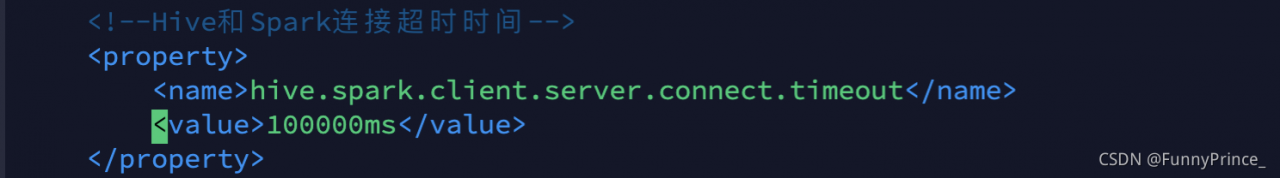
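Step 1 can be sketched as a small shell function. The function name setup_spark_env is hypothetical, and the sketch copies the template instead of renaming it so the original template is kept. It also appends the export line only once, which is an extra safeguard not described above.

```shell
# Hypothetical helper; the default directory is the one named in step 1.
setup_spark_env() {
    conf_dir="${1:-/opt/module/spark/conf}"
    env_file="$conf_dir/spark-env.sh"

    # Create spark-env.sh from the template if it does not exist yet.
    if [ ! -f "$env_file" ]; then
        cp "$conf_dir/spark-env.sh.template" "$env_file" || return 1
    fi

    # Append the export only once so reruns do not duplicate the line.
    # Single quotes keep $(hadoop classpath) literal; it is evaluated
    # later, when Spark sources spark-env.sh.
    if ! grep -q '^export SPARK_DIST_CLASSPATH=' "$env_file"; then
        echo 'export SPARK_DIST_CLASSPATH=$(hadoop classpath)' >> "$env_file"
    fi
}
```

Running setup_spark_env with no argument applies the change to /opt/module/spark/conf. The hadoop command must be on the PATH when Spark starts for the classpath to resolve.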

2). Edit hive-site.xml in the /opt/module/hive/conf directory to increase the connection timeout between Hive and Spark

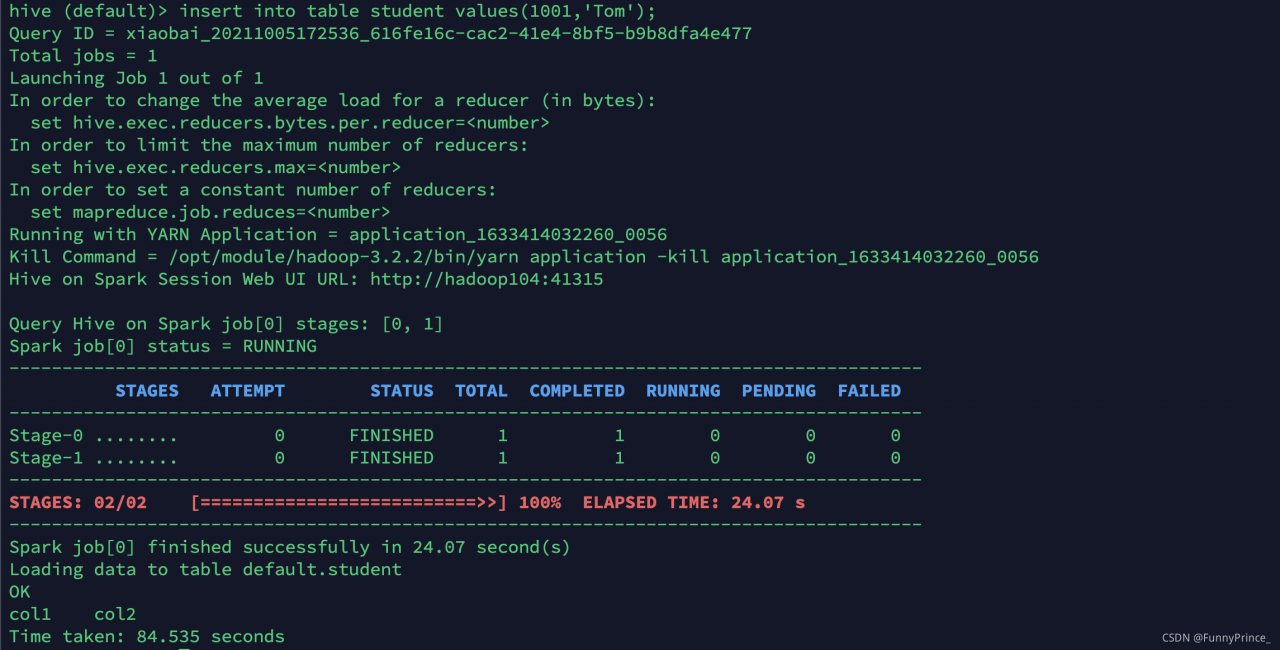
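For readers who cannot see the screenshot, the change might look roughly like the fragment below. This is a sketch, not a transcription of the image: hive.spark.client.connect.timeout and hive.spark.client.server.connect.timeout are the Hive properties that control these timeouts, but the values shown are illustrative assumptions and the original screenshot may use different ones.

```xml
<!-- Place inside the existing <configuration> element of hive-site.xml. -->
<!-- Values are illustrative assumptions; tune them for your cluster. -->
<property>
    <name>hive.spark.client.connect.timeout</name>
    <value>10000ms</value>
</property>
<property>
    <name>hive.spark.client.server.connect.timeout</name>
    <value>300000ms</value>
</property>
```

Restart the Hive client after editing so the new values are picked up.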

Execute the insert statement again. Success! I could cry with joy.

I ran into this error last night and spent the whole night on it without solving it. Today it is finally solved.