Error message

[WARN ][o.e.x.m.e.l.LocalExporter] [es03.kpt.rongtime.aliapse5.id] unexpected error while indexing monitoring document

org.elasticsearch.xpack.monitoring.exporter.ExportException: RemoteTransportException[[es01.kpt.rongtime.aliapse5.id][10.107.0.247:29300][indices:admin/create]]; nested: IllegalArgumentException[Validation Failed: 1: this action would add [6] total shards, but this cluster currently has [2998]/[3000] maximum shards open;];

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.lambda$throwExportException$2(LocalBulk.java:125) ~[x-pack-monitoring-7.4.2.jar:7.4.2]

at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:195) ~[?:?]

at java.util.stream.ReferencePipeline$2$1.accept(ReferencePipeline.java:177) ~[?:?]

at java.util.Spliterators$ArraySpliterator.forEachRemaining(Spliterators.java:948) ~[?:?]

at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:484) ~[?:?]

at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:474) ~[?:?]

at java.util.stream.ForEachOps$ForEachOp.evaluateSequential(ForEachOps.java:150) ~[?:?]

at java.util.stream.ForEachOps$ForEachOp$OfRef.evaluateSequential(ForEachOps.java:173) ~[?:?]

at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234) ~[?:?]

at java.util.stream.ReferencePipeline.forEach(ReferencePipeline.java:497) ~[?:?]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.throwExportException(LocalBulk.java:126) [x-pack-monitoring-7.4.2.jar:7.4.2]

at org.elasticsearch.xpack.monitoring.exporter.local.LocalBulk.lambda$doFlush$0(LocalBulk.java:108) [x-pack-monitoring-7.4.2.jar:7.4.2]

at org.elasticsearch.action.ActionListener$1.onResponse(ActionListener.java:62) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.support.ContextPreservingActionListener.onResponse(ContextPreservingActionListener.java:43) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.support.TransportAction$1.onResponse(TransportAction.java:70) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.support.TransportAction$1.onResponse(TransportAction.java:64) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.support.ContextPreservingActionListener.onResponse(ContextPreservingActionListener.java:43) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.ActionListener.lambda$map$2(ActionListener.java:145) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.ActionListener$1.onResponse(ActionListener.java:62) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.ActionListener$1.onResponse(ActionListener.java:62) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.bulk.TransportBulkAction$BulkOperation.doRun(TransportBulkAction.java:421) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.bulk.TransportBulkAction.executeBulk(TransportBulkAction.java:551) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.bulk.TransportBulkAction$1.onFailure(TransportBulkAction.java:287) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.support.TransportAction$1.onFailure(TransportAction.java:79) [elasticsearch-7.4.2.jar:7.4.2]

at org.elasticsearch.action.support.ContextPreservingActionListener.onFailure(ContextPreservingActionListener.java:50) [elasticsearch-7.4.2.jar:7.4.2]

Cause of problem

[indices:admin/create]]; nested: IllegalArgumentException[Validation Failed: 1: this action would add [6] total shards, but this cluster currently has [2998]/[3000] maximum shards open;];

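Elasticsearch 7 and later cap the number of open shards in a cluster at cluster.max_shards_per_node (default 1000) multiplied by the number of data nodes. The limit reported here is 3000, which is consistent with three data nodes at the default setting. With 2998 shards already open, creating the new monitoring index would add 6 more and exceed the limit, so the index creation is rejected.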
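The check behind this error message is simple arithmetic, and the sketch below reproduces it with the numbers from the log. It is an illustration only: the function name is hypothetical, and the count of three data nodes is an assumption inferred from the reported limit of 3000 at the default of 1000 shards per data node.

```python
def would_exceed_shard_limit(open_shards, new_shards,
                             max_shards_per_node=1000, data_nodes=3):
    """Return (rejected, cluster_limit) for a request that adds new_shards.

    data_nodes=3 is an assumption: the log only reports the total limit
    of 3000, not how many data nodes the cluster has.
    """
    # The cluster-wide limit is the per-node setting times the data node count.
    cluster_limit = max_shards_per_node * data_nodes
    # The request is rejected when the resulting total would pass the limit.
    rejected = open_shards + new_shards > cluster_limit
    return rejected, cluster_limit


# Numbers from the log: 2998 shards open, the new index needs 6.
print(would_exceed_shard_limit(2998, 6))
# Same request after raising the per-node setting to 10000.
print(would_exceed_shard_limit(2998, 6, max_shards_per_node=10000))
```

With the values from the log, 2998 + 6 = 3004 is above 3000, so the request fails. The same request fits once the per-node setting is raised.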

Solution:

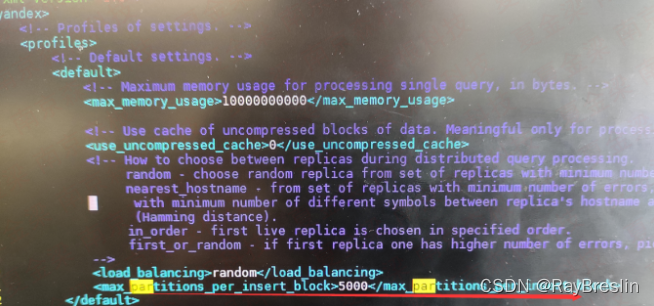

PUT /_cluster/settings
{
  "transient": {
    "cluster": {
      "max_shards_per_node": 10000
    }
  }
}
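For environments without the Kibana console, the same request can be sent from a script. The following is a minimal sketch using only the Python standard library. The helper names and the base URL http://localhost:9200 are assumptions for illustration; authentication and TLS are left out. Note that a transient setting is lost after a full cluster restart, so the sketch also accepts "persistent" as the scope.

```python
import json
import urllib.request


def build_settings_request(base_url, max_shards_per_node, scope="transient"):
    """Build a PUT /_cluster/settings request (hypothetical helper).

    scope is "transient" (lost on full cluster restart) or "persistent".
    """
    if scope not in ("transient", "persistent"):
        raise ValueError("scope must be 'transient' or 'persistent'")
    # Flat dotted key; equivalent to the nested form used in the console request.
    body = {scope: {"cluster.max_shards_per_node": max_shards_per_node}}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/_cluster/settings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def apply_settings(request):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

A call such as apply_settings(build_settings_request("http://localhost:9200", 10000)) would apply the change, assuming the node is reachable at that address. Raising the limit only removes the immediate error; deleting or shrinking unused indices reduces the shard count itself.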