This problem occurs because the old metadata was not deleted when HBase was reinstalled. The fix is to delete the HBase metadata stored in ZooKeeper and then restart HBase.

Solution:

Go to the bin directory of the ZooKeeper installation and start the client:

zkCli.sh -server localhost:2181

If the command is not found, run it from the current directory instead:

./zkCli.sh -server localhost:2181

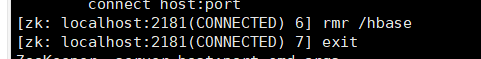

Then, inside the ZooKeeper client, delete all HBase-related nodes (by default they live under /hbase).

Restart HBase.
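The delete and restart steps above can be sketched as a small script. This is only a rough sketch under several assumptions that are not in the original steps: HBase uses the default znode parent /hbase, ZooKeeper is version 3.5 or later (on 3.4 the command is `rmr` rather than `deleteall`), and the ZooKeeper and HBase bin directories are on your PATH. The `run` helper, the `reset_hbase_metadata` function, and the `DRY_RUN` switch are hypothetical names added for illustration, so that nothing is deleted until you have checked the commands.

```shell
#!/bin/sh
# Sketch only: clear stale HBase metadata from ZooKeeper, then restart HBase.
# Assumptions (not from the original steps): default znode parent /hbase,
# ZooKeeper 3.5+ (use "rmr" instead of "deleteall" on 3.4), and the
# ZooKeeper and HBase bin directories on PATH.
ZK_SERVER="${ZK_SERVER:-localhost:2181}"
HBASE_ZNODE="${HBASE_ZNODE:-/hbase}"
DRY_RUN="${DRY_RUN:-1}"   # hypothetical safety switch; set to 0 to really run

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

reset_hbase_metadata() {
  # Stop HBase before touching its znodes.
  run stop-hbase.sh
  # Pass the delete as a one-shot command instead of typing it at the prompt.
  run zkCli.sh -server "$ZK_SERVER" deleteall "$HBASE_ZNODE"
  # HBase recreates its znodes when it starts up again.
  run start-hbase.sh
}

reset_hbase_metadata
```

Run as-is, the script only prints the three commands it would execute. Once they look right for your setup, run it again with DRY_RUN=0.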

Then open the HBase shell:

hbase shell

You should now be able to create the table successfully.