Today, while using keras-gpu in a Jupyter notebook, I ran into an error. At first I did not pay attention to the console output and only saw the error message shown in Jupyter. The solutions I found fall roughly into two groups: first, roll back to an earlier version; second, specify the device to run on. I did not think it was a version problem, so I kept the latest version and tried the second method, specifying the device in the program, and the program ran without errors. Then I happened to look at the console output and found that it was actually running on the CPU. It turned out that the device name I had specified was wrong, so the system could not find the device. Querying the device name first and then specifying the device solved the problem.

Local environment:

- cudatoolkit = 10.1.243

- cudnn = 7.6.5

- tensorflow-gpu = 2.1.0

- keras-gpu = 2.3.1

Error printed in the Jupyter notebook:

...

UnknownError: Failed to get convolution algorithm. This is probably because cuDNN failed to initialize, so try looking to see if a warning log message was printed above.

[[node time_distributed_1/convolution (defined at C:\anaconda3\envs\keras\lib\site-packages\keras\backend\tensorflow_backend.py:3009) ]] [Op:__inference_keras_scratch_graph_1967]

Function call stack:

keras_scratch_graph

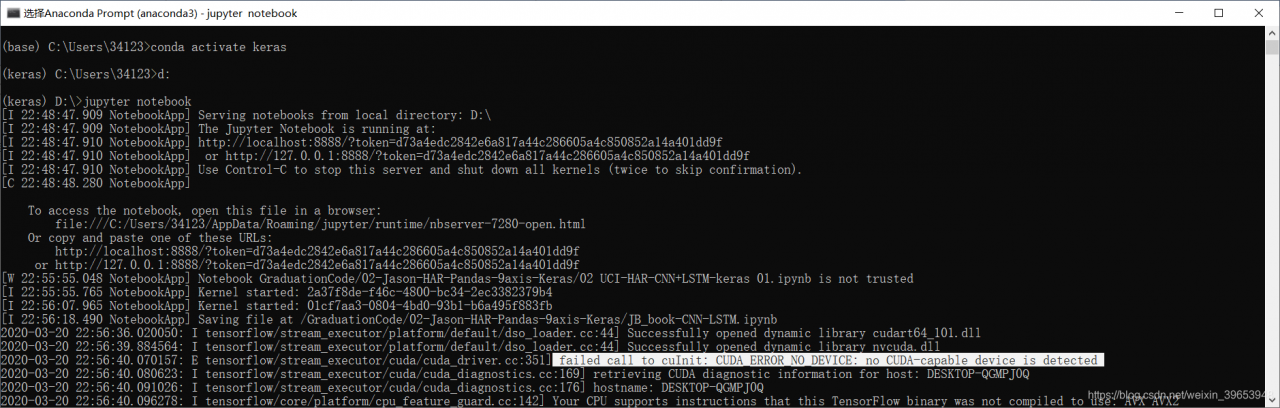

Error printed in the Jupyter notebook console: failed call to cuInit: CUDA_ERROR_NO_DEVICE: no cuda-capable device is detected

Solution:

- View the local GPU/CPU information:

from tensorflow.python.client import device_lib

device_lib.list_local_devices()

Output:

[name: "/device:CPU:0"

device_type: "CPU"

memory_limit: 268435456

locality {

}

incarnation: 16677593686354176255,

name: "/device:GPU:0"

device_type: "GPU"

memory_limit: 1440405913

locality {

bus_id: 1

links {

}

}

incarnation: 787265797177696422

physical_device_desc: "device: 0, name: GeForce 940MX, pci bus id: 0000:01:00.0, compute capability: 5.0"]
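The device names in this output are what the next step depends on. As a minimal sketch (the helper names `gpu_indices` and `local_device_names` are made up for illustration and are not part of TensorFlow), the GPU indices can be pulled out of the names like this:

```python
import re


def gpu_indices(device_names):
    """Return the integer indices of all GPU entries in a list of device names."""
    indices = []
    for name in device_names:
        # Only plain GPU devices match, e.g. "/device:GPU:0"
        match = re.fullmatch(r"/device:GPU:(\d+)", name)
        if match:
            indices.append(int(match.group(1)))
    return indices


def local_device_names():
    """Ask TensorFlow for the local device names; returns [] if TensorFlow is missing."""
    try:
        from tensorflow.python.client import device_lib
    except ImportError:
        return []
    return [device.name for device in device_lib.list_local_devices()]


# Example with the two names from the output above
print(gpu_indices(["/device:CPU:0", "/device:GPU:0"]))  # → [0]
```

On a real machine, `gpu_indices(local_device_names())` would give the indices for that machine; an empty list would suggest that TensorFlow cannot see a GPU at all.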

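- The output shows that the machine has a GPU, so use the following statements to specify the device to run on:

Attention! The value must correspond to your own device. Devices may differ from machine to machine, so do not copy this blindly: query the devices first, then specify the one you found. Note that CUDA_VISIBLE_DEVICES takes the device index, that is, the trailing number of the device name (0 for /device:GPU:0), and it must be set before TensorFlow initializes the GPU.

import os

# Use the index from the queried device name: "/device:GPU:0" -> "0"
os.environ['CUDA_VISIBLE_DEVICES'] = '0'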
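CUDA's documentation describes CUDA_VISIBLE_DEVICES as a comma-separated list of device indices (or GPU UUIDs), so one way to avoid typos is to build the value from the queried index. The sketch below assumes a single GPU at index 0; `select_gpu` is a hypothetical helper written for this post, not a TensorFlow or Keras function.

```python
import os


def select_gpu(index):
    """Expose only the GPU with the given index to CUDA; returns the value that was set."""
    # bool is a subclass of int, so reject it explicitly
    if isinstance(index, bool) or not isinstance(index, int) or index < 0:
        raise ValueError("GPU index must be a non-negative integer, got %r" % (index,))
    os.environ["CUDA_VISIBLE_DEVICES"] = str(index)
    return os.environ["CUDA_VISIBLE_DEVICES"]


# Call this before TensorFlow or Keras touches the GPU,
# ideally before they are imported at all.
print(select_gpu(0))  # prints 0
```

In a notebook this would normally go in the very first cell, followed by a kernel restart if TensorFlow was already loaded, since the variable is read when CUDA is initialized.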

- Check which devices are currently loaded:

import tensorflow as tf

sess = tf.compat.v1.Session(config=tf.compat.v1.ConfigProto(log_device_placement=True))

Output:

Device mapping:

/job:localhost/replica:0/task:0/device:GPU:0 -> device: 0, name: GeForce 940MX, pci bus id: 0000:01:00.0, compute capability: 5.0

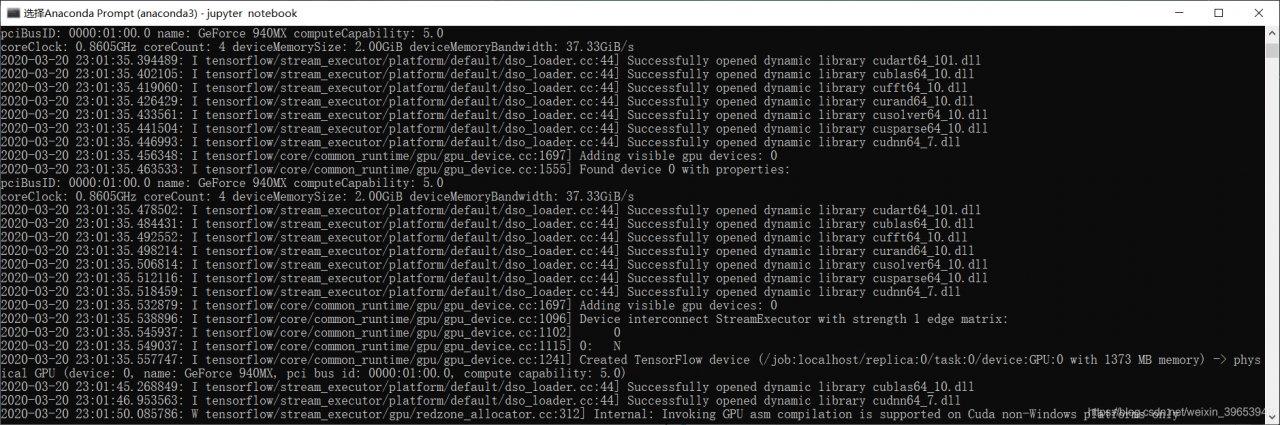
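If reading the log is inconvenient, the same check can be done from code. This is only a sketch: it assumes `tf.config.list_physical_devices` is available (TensorFlow 2.1 or later, as far as I know), and `visible_gpu_names` is a name made up here.

```python
def visible_gpu_names():
    """Return the names of the GPUs TensorFlow can see, or None if TensorFlow is not installed."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return [gpu.name for gpu in tf.config.list_physical_devices("GPU")]


names = visible_gpu_names()
if names is None:
    print("TensorFlow is not installed in this environment")
elif names:
    print("TensorFlow can see these GPUs:", names)
else:
    print("No GPU visible; the code will run on the CPU")
```

An empty list here corresponds to the situation described at the start of the post: the program runs without errors, but on the CPU.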

- Console output similar to the device mapping shown above indicates that the code is running on the GPU.
