```scala
import org.apache.spark.mllib.feature.{Word2Vec, Word2VecModel}
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
```

Model save:

Link: http://spark.apache.org/docs/2.3.4/api/scala/index.html#org.apache.spark.mllib.feature.Word2VecModel

```scala
// `model` is a trained Word2VecModel; save is an instance method
model.save(spark.sparkContext, config.model_path)
```

Model load:

Link: http://spark.apache.org/docs/2.3.4/api/scala/index.html#org.apache.spark.mllib.feature.Word2VecModel$

```scala
// load is defined on the Word2VecModel companion object
val model = Word2VecModel.load(spark.sparkContext, config.model_path)
```
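The save/load pair above can be exercised end to end with a minimal sketch like the one below. It assumes Spark 2.3.x with `spark-mllib` on the classpath and runs in local mode for illustration. The object name `Word2VecSaveLoad`, the toy corpus, the parameter values and the default path `/tmp/word2vec-model` are all hypothetical. MLlib's `save` does not overwrite an existing model directory, so pass a fresh path on each run. Note also that `load` reads the model metadata through Hadoop's `FileInputFormat`, which is the code path where the error below is thrown.

```scala
import org.apache.spark.mllib.feature.{Word2Vec, Word2VecModel}
import org.apache.spark.sql.SparkSession

object Word2VecSaveLoad {

  /** Trains a tiny model, saves it to `modelPath`, loads it back and
    * returns true if the vocabulary survived the round trip.
    * `modelPath` must not already contain a saved model. */
  def run(modelPath: String): Boolean = {
    // Local mode for illustration only (assumption, not the original job setup)
    val spark = SparkSession.builder()
      .appName("word2vec-save-load")
      .master("local[*]")
      .getOrCreate()
    val sc = spark.sparkContext
    try {
      // Hypothetical toy corpus: each element is one tokenized sentence
      val corpus = sc.parallelize(Seq(
        Seq("spark", "mllib", "word2vec", "model"),
        Seq("save", "and", "load", "a", "word2vec", "model")
      ))

      // minCount = 1 so every word of this tiny corpus gets a vector
      val model: Word2VecModel = new Word2Vec()
        .setVectorSize(10)
        .setMinCount(1)
        .fit(corpus)

      model.save(sc, modelPath)                        // instance method
      val loaded = Word2VecModel.load(sc, modelPath)   // companion object method

      loaded.getVectors.keySet == model.getVectors.keySet
    } finally {
      spark.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical default path; in the original job this is config.model_path
    val modelPath = args.headOption.getOrElse("/tmp/word2vec-model")
    println(s"round trip OK: ${run(modelPath)}")
  }
}
```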

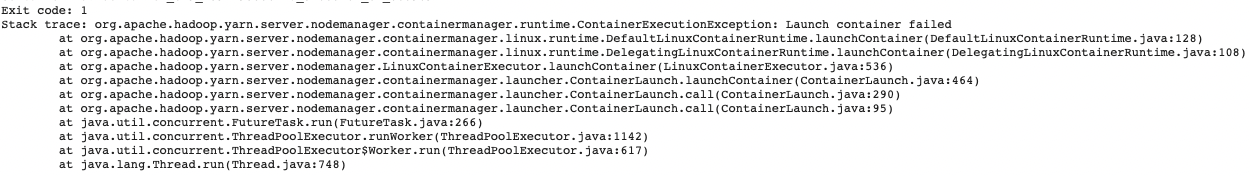

Load error:

```text
Exception in thread "main" java.lang.IllegalAccessError: tried to access method com.google.common.base.Stopwatch.<init>()V from class org.apache.hadoop.mapred.FileInputFormat
    at org.apache.hadoop.mapred.FileInputFormat.getSplits(FileInputFormat.java:312)
    at org.apache.spark.rdd.HadoopRDD.getPartitions(HadoopRDD.scala:200)
    at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)
    at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)
    at scala.Option.getOrElse(Option.scala:121)
    at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)
    at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:35)
    at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)
    at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)
    at scala.Option.getOrElse(Option.scala:121)
    at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)
    at org.apache.spark.rdd.RDD$$anonfun$take$1.apply(RDD.scala:1337)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
    at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)
    at org.apache.spark.rdd.RDD.take(RDD.scala:1331)
    at org.apache.spark.rdd.RDD$$anonfun$first$1.apply(RDD.scala:1372)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
    at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)
    at org.apache.spark.rdd.RDD.first(RDD.scala:1371)
    at org.apache.spark.mllib.util.Loader$.loadMetadata(modelSaveLoad.scala:129)
    at org.apache.spark.mllib.feature.Word2VecModel$.load(Word2Vec.scala:699)
    at job.ml.embeddingModel.graphEmbedding$.run(graphEmbedding.scala:40)
    at job.ml.embeddingModel.graphEmbedding$.main(graphEmbedding.scala:24)
    at job.ml.embeddingModel.graphEmbedding.main(graphEmbedding.scala)
```
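To check whether the Guava version on the runtime classpath causes this `IllegalAccessError`, you can use a small reflection-based sketch like the following. It uses only the JDK reflection API. The object and method names (`GuavaStopwatchCheck`, `hasPublicNoArgConstructor`, `sourceOf`) are hypothetical. `Class.getConstructor()` only returns public constructors, so a `Some(false)` result for `com.google.common.base.Stopwatch` matches the failing `new Stopwatch()` call in `FileInputFormat.getSplits` shown above.

```scala
import scala.util.{Failure, Success, Try}

object GuavaStopwatchCheck {

  /** Some(true)  -> class found and it has a public no-arg constructor
    * Some(false) -> class found, but no public no-arg constructor
    * None        -> class is not on the classpath */
  def hasPublicNoArgConstructor(className: String): Option[Boolean] =
    Try(Class.forName(className)) match {
      case Failure(_) => None
      case Success(cls) =>
        // getConstructor() throws NoSuchMethodException unless a
        // *public* zero-argument constructor exists
        Some(Try(cls.getConstructor()).isSuccess)
    }

  /** Jar or directory the class was loaded from, if the JVM reports one
    * (bootstrap classes such as java.lang.Object have no code source). */
  def sourceOf(className: String): Option[String] =
    Try(Class.forName(className)).toOption
      .flatMap(c => Option(c.getProtectionDomain.getCodeSource))
      .map(_.getLocation.toString)

  def main(args: Array[String]): Unit = {
    val stopwatch = "com.google.common.base.Stopwatch"
    val where = sourceOf(stopwatch).getOrElse("unknown location")
    hasPublicNoArgConstructor(stopwatch) match {
      case None        => println(s"$stopwatch is not on the classpath")
      case Some(true)  => println(s"OK: public Stopwatch() found ($where)")
      case Some(false) => println(s"Conflict: Stopwatch() is not public ($where)")
    }
  }
}
```

Run it with the same classpath as the Spark job, for example by calling `GuavaStopwatchCheck.main(Array.empty)` from the job's driver. The printed location shows which jar supplied `Stopwatch`.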

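Fix: add an explicit Guava dependency to the POM file.

The error is thrown while `Word2VecModel.load` reads the model metadata. Hadoop's `FileInputFormat.getSplits` calls the no-argument `Stopwatch` constructor. The Guava version that ended up on the runtime classpath most likely no longer exposes that constructor publicly. Pinning Guava 15.0, where it is still public, resolves the conflict:

```xml
<dependency>
    <groupId>com.google.guava</groupId>
    <artifactId>guava</artifactId>
    <version>15.0</version>
</dependency>
```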

After this change, the job runs OK again.