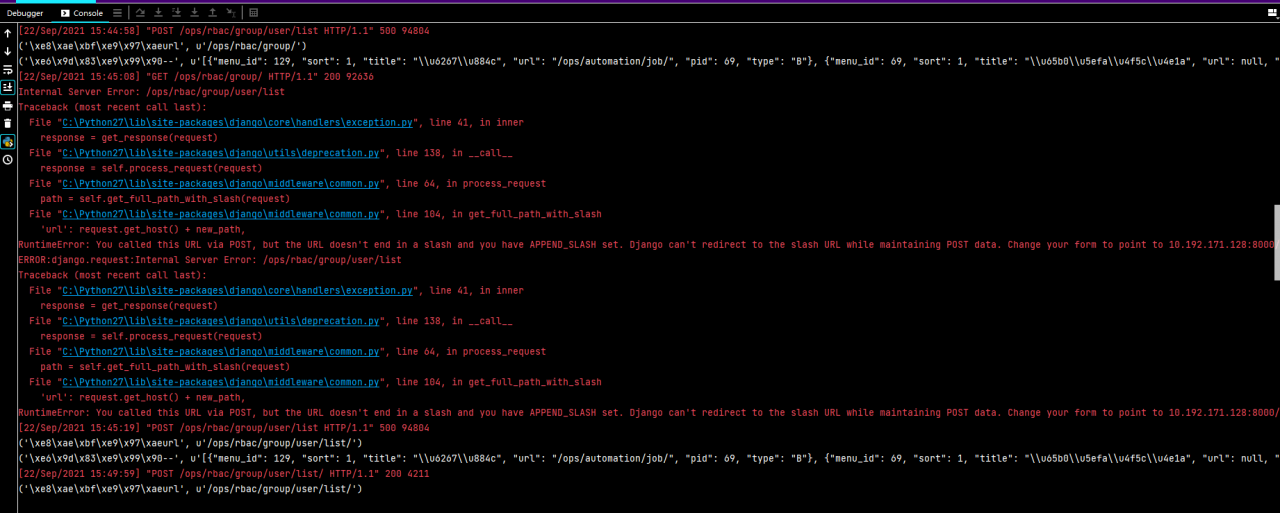

File "D:/Codes/code/Python Project/group_reid-master/group_reid-master/main_group_gcn_siamese_part_half_fulltest_sink.py", line 348, in train_gcn

loss.backward()

File "D:\Codes\Anaconda3\envs\pytorch_gpu\lib\site-packages\torch\tensor.py", line 185, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File "D:\Codes\Anaconda3\envs\pytorch_gpu\lib\site-packages\torch\autograd\__init__.py", line 127, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: chunk expects `chunks` to be greater than 0, got: 0

Exception raised from chunk at ..\aten\src\ATen\native\TensorShape.cpp:496 (most recent call first):

As shown in the figure, I kept getting an error saying that `chunks` was 0. At first I was puzzled, and searching online turned up no one with the same problem. Tracing the error showed that it was raised during the backward pass of the loss (the call shown below). Since `backward()` is just a library call on the loss, I did not expect it to raise an error like this, so I moved the code to the server to debug it. Different PyTorch versions report different error messages, and I finally located the problem under PyTorch 1.1.

loss.backward()

It was caused by a dimension mismatch when slicing:

env11_junk1 = env11.squeeze().unsqueeze(0).unsqueeze(0).repeat((5-x1_valid.shape[0]), parts, 1)

env22_junk2 = env22.squeeze().unsqueeze(0).unsqueeze(0).repeat((5-x2_valid.shape[0]), parts, 1)

env11 = env11.squeeze().unsqueeze(0).unsqueeze(0).repeat(x1_valid.shape[0], parts, 1)

env22 = env22.squeeze().unsqueeze(0).unsqueeze(0).repeat(x2_valid.shape[0], parts, 1)

# calculate within graph and inter graph message

h_k1 = torch.cat((self.W_x(x1[i, :sample_size1, :]), self.W_neib(x_neib1), self.W_relative(mu1), self.W_env(env11)), 2).unsqueeze(0)

h_k_junk1 = torch.cat((self.W_x(x1[i, sample_size1:, :]), self.W_x(x1[i, sample_size1:, :]), self.W_x(x1[i, sample_size1:, :]),self.W_env(env11_junk1)), 2).unsqueeze(0)

h_k2 = torch.cat((self.W_x(x2[i, :sample_size2, :]), self.W_neib(x_neib2), self.W_relative(mu2), self.W_env(env22)), 2).unsqueeze(0)

h_k_junk2 = torch.cat((self.W_x(x2[i, sample_size2:, :]), self.W_x(x2[i, sample_size2:, :]), self.W_x(x2[i, sample_size2:, :]), self.W_env(env22_junk2)), 2).unsqueeze(0)

In my code, the `squeeze` and `unsqueeze` calls are redundant; a direct `permute` would do. I intended to repeat the tensor along its first dimension so that it matches the first dimension of x1, but in the actual dataset the first dimension of x1 can be 0. In that case the count passed to `repeat` is 0 and an error is reported: as the traceback shows, `chunk` expects `chunks` to be greater than 0. Because the error message does not point to the real cause, I wasted half a day on this problem, so I am recording it here.
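One possible guard, sketched here under assumptions rather than taken from the original project: compute both repeat counts up front and only build a branch when its count is non-zero. The helper names `repeat_counts` and `branches_to_build` are hypothetical, and the default total of 5 is simply read off the `5-x1_valid.shape[0]` expression above. The sketch is plain Python so that it does not depend on a particular PyTorch version; the tensor calls it would protect appear only as comments.

```python
def repeat_counts(num_valid, total=5):
    """Split `total` rows into (valid, junk) repeat counts.

    `num_valid` plays the role of x1_valid.shape[0]; `total` is the
    assumed fixed group size (5 in the snippet above).
    """
    if not 0 <= num_valid <= total:
        raise ValueError(f"num_valid={num_valid} is outside [0, {total}]")
    return num_valid, total - num_valid


def branches_to_build(num_valid, total=5):
    """Return the names of the branches whose repeat count is non-zero."""
    valid, junk = repeat_counts(num_valid, total)
    branches = []
    if valid > 0:
        # Only here would the real code call e.g. env11.repeat(valid, parts, 1)
        branches.append("valid")
    if junk > 0:
        # Only here would the real code call e.g. env11.repeat(junk, parts, 1)
        branches.append("junk")
    return branches


if __name__ == "__main__":
    # Show which branches would be built for an empty, partial and full group.
    for n in (0, 3, 5):
        print(n, repeat_counts(n), branches_to_build(n))
```

With a check like this, a sample with no valid members (or with no junk members) skips the corresponding `repeat` call entirely, so a zero count never enters the autograd graph.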