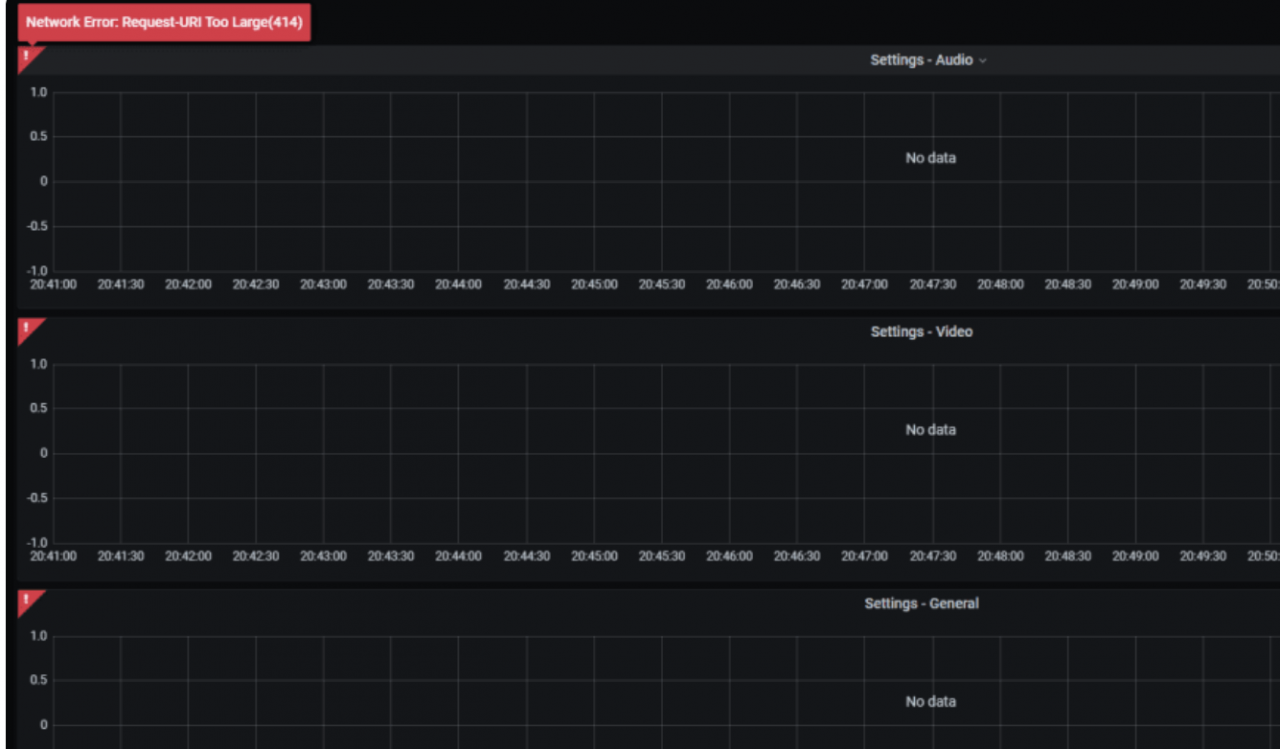

Original error output:

principal is [email protected]

Will use keytab

Commit Succeeded

21/08/23 10:20:14 INFO NewConsumer: subscribe:bc_test

Exception in thread "main" java.lang.reflect.InvocationTargetException

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.boot.loader.MainMethodRunner.run(MainMethodRunner.java:49)

at org.springframework.boot.loader.Launcher.launch(Launcher.java:108)

at org.springframework.boot.loader.Launcher.launch(Launcher.java:58)

at org.springframework.boot.loader.JarLauncher.main(JarLauncher.java:88)

Caused by: org.apache.kafka.common.errors.SaslAuthenticationException: An error: (java.security.PrivilegedActionException: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Server not found in Kerberos database (7) - LOOKING_UP_SERVER)]) occurred when evaluating SASL token received from the Kafka Broker. Kafka Client will go to AUTHENTICATION_FAILED state.

Caused by: javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Server not found in Kerberos database (7) - LOOKING_UP_SERVER)]

at com.sun.security.sasl.gsskerb.GssKrb5Client.evaluateChallenge(GssKrb5Client.java:211)

at org.apache.kafka.common.security.authenticator.SaslClientAuthenticator$2.run(SaslClientAuthenticator.java:361)

at org.apache.kafka.common.security.authenticator.SaslClientAuthenticator$2.run(SaslClientAuthenticator.java:359)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.kafka.common.security.authenticator.SaslClientAuthenticator.createSaslToken(SaslClientAuthenticator.java:359)

at org.apache.kafka.common.security.authenticator.SaslClientAuthenticator.sendSaslClientToken(SaslClientAuthenticator.java:269)

at org.apache.kafka.common.security.authenticator.SaslClientAuthenticator.authenticate(SaslClientAuthenticator.java:206)

at org.apache.kafka.common.network.KafkaChannel.prepare(KafkaChannel.java:81)

at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:486)

at org.apache.kafka.common.network.Selector.poll(Selector.java:424)

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:460)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:261)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:233)

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:209)

at org.apache.kafka.clients.consumer.internals.AbstractCoordinator.ensureCoordinatorReady(AbstractCoordinator.java:219)

at org.apache.kafka.clients.consumer.internals.AbstractCoordinator.ensureCoordinatorReady(AbstractCoordinator.java:205)

at org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.poll(ConsumerCoordinator.java:279)

at org.apache.kafka.clients.consumer.KafkaConsumer.pollOnce(KafkaConsumer.java:1149)

at org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:1115)

at receive.NewConsumer.doWork(NewConsumer.java:102)

at receive.NewConsumer.main(NewConsumer.java:127)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.springframework.boot.loader.MainMethodRunner.run(MainMethodRunner.java:49)

at org.springframework.boot.loader.Launcher.launch(Launcher.java:108)

at org.springframework.boot.loader.Launcher.launch(Launcher.java:58)

at org.springframework.boot.loader.JarLauncher.main(JarLauncher.java:88)

Caused by: GSSException: No valid credentials provided (Mechanism level: Server not found in Kerberos database (7) - LOOKING_UP_SERVER)

at sun.security.jgss.krb5.Krb5Context.initSecContext(Krb5Context.java:770)

at sun.security.jgss.GSSContextImpl.initSecContext(GSSContextImpl.java:248)

at sun.security.jgss.GSSContextImpl.initSecContext(GSSContextImpl.java:179)

at com.sun.security.sasl.gsskerb.GssKrb5Client.evaluateChallenge(GssKrb5Client.java:192)

... 29 more

Caused by: KrbException: Server not found in Kerberos database (7) - LOOKING_UP_SERVER

at sun.security.krb5.KrbTgsRep.<init>(KrbTgsRep.java:73)

at sun.security.krb5.KrbTgsReq.getReply(KrbTgsReq.java:251)

at sun.security.krb5.KrbTgsReq.sendAndGetCreds(KrbTgsReq.java:262)

at sun.security.krb5.internal.CredentialsUtil.serviceCreds(CredentialsUtil.java:308)

at sun.security.krb5.internal.CredentialsUtil.acquireServiceCreds(CredentialsUtil.java:126)

at sun.security.krb5.Credentials.acquireServiceCreds(Credentials.java:458)

at sun.security.jgss.krb5.Krb5Context.initSecContext(Krb5Context.java:693)

... 32 more

Caused by: KrbException: Identifier doesn't match expected value (906)

at sun.security.krb5.internal.KDCRep.init(KDCRep.java:140)

at sun.security.krb5.internal.TGSRep.init(TGSRep.java:65)

at sun.security.krb5.internal.TGSRep.<init>(TGSRep.java:60)

at sun.security.krb5.KrbTgsRep.<init>(KrbTgsRep.java:55)

... 38 more
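The cause "Server not found in Kerberos database (7)" is what a KDC returns when the service principal requested by the client does not exist in its database. As a rough diagnostic sketch, the snippet below prints the name a GSSAPI client would typically ask for, assuming the usual `service/host@REALM` convention. The service name `kafka`, the host `broker1.example.com`, and the realm `EXAMPLE.COM` are hypothetical placeholders, not values from this cluster, and the exact hostname the Kafka client uses may differ from what this lookup returns.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

public class ServicePrincipalCheck {

    /** Builds a service principal in the conventional service/host@REALM form. */
    public static String servicePrincipal(String serviceName, String host, String realm) {
        return serviceName + "/" + host + "@" + realm;
    }

    /** Resolves the canonical hostname, falling back to the input if resolution fails. */
    public static String canonicalHost(String host) {
        try {
            return InetAddress.getByName(host).getCanonicalHostName();
        } catch (UnknownHostException e) {
            return host;
        }
    }

    public static void main(String[] args) {
        // Hypothetical defaults; pass the real broker host and realm as arguments.
        String host = args.length > 0 ? args[0] : "broker1.example.com";
        String realm = args.length > 1 ? args[1] : "EXAMPLE.COM";
        String resolved = canonicalHost(host);
        System.out.println("Configured host : " + host);
        System.out.println("Canonical host  : " + resolved);
        System.out.println("Likely principal: " + servicePrincipal("kafka", resolved, realm));
    }
}
```

If the printed principal is not registered in the KDC, the hosts file or DNS entries are a plausible place to look, which is why configuring hosts is a commonly suggested fix.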

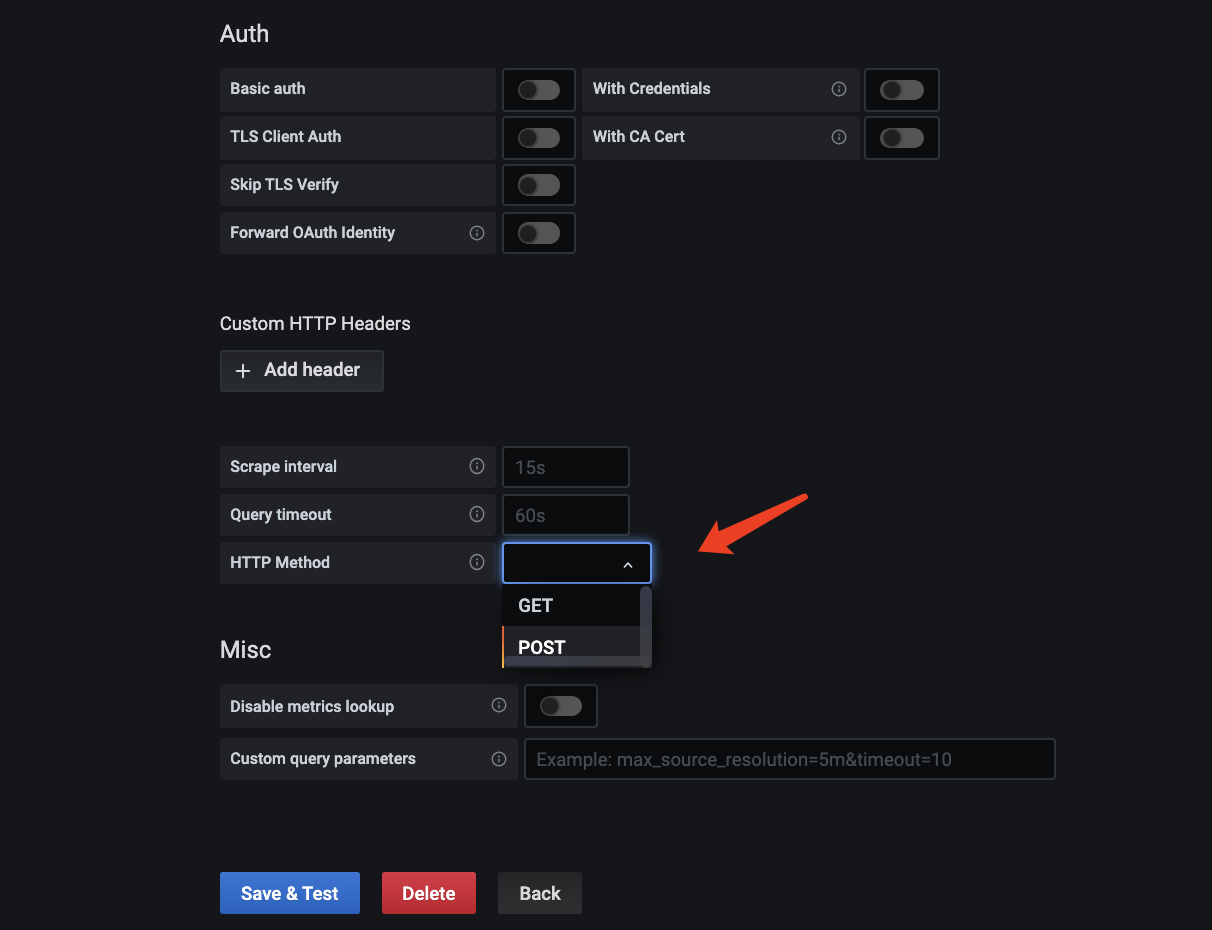

Solution:

While troubleshooting this error, which was reported when connecting to the Huawei big data platform, it turned out that the Kerberos login itself had passed, because the messages "Will use keytab" and "Commit Succeeded" appeared in the log. The exception was thrown later, when the consumer was created. The fixes suggested on the Internet were all tried, such as configuring hosts, checking the generated jaas.conf file, and comparing the krb5 files.

Finally, replacing the Kafka dependency packages with Huawei's three packages (kafka_2.11-1.1.0.jar, kafka-clients-1.1.0.jar, zookeeper-3.5.1.jar) solved the problem.
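Because the application runs as a Spring Boot fat jar, it can be worth confirming that the Kafka and ZooKeeper classes are really loaded from the replaced packages and not from a leftover copy. The following is a minimal sketch using only the JDK. The class and method names (`KafkaJarOrigin`, `originOfClass`, `originOfName`) are made up for this illustration.

```java
import java.net.URL;
import java.security.CodeSource;

public class KafkaJarOrigin {

    /** Returns where a class was loaded from, or a placeholder if the JVM does not report it. */
    public static String originOfClass(Class<?> type) {
        CodeSource source = type.getProtectionDomain().getCodeSource();
        if (source == null) {
            // Typical for JDK classes loaded by the bootstrap class loader.
            return "<bootstrap or unknown>";
        }
        URL location = source.getLocation();
        return location == null ? "<unknown>" : location.toString();
    }

    /** Looks a class up by name without initializing it and reports its origin. */
    public static String originOfName(String className) {
        try {
            ClassLoader loader = KafkaJarOrigin.class.getClassLoader();
            return originOfClass(Class.forName(className, false, loader));
        } catch (ClassNotFoundException | LinkageError e) {
            return "<not on classpath>";
        }
    }

    public static void main(String[] args) {
        // Print the jar each client class comes from.
        System.out.println(originOfName("org.apache.kafka.clients.consumer.KafkaConsumer"));
        System.out.println(originOfName("org.apache.zookeeper.ZooKeeper"));
    }
}
```

Calling these methods from inside the application, for example just before the consumer is created, shows which jar each class came from, so a stale Apache jar on the classpath is easy to spot.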

Installing the packages into the local Maven repository

The Maven command for installing a jar package is:

mvn install:install-file

-Dfile=location of the jar package

-DgroupId=groupId in the POM file

-DartifactId=artifactId in the POM file

-Dversion=version in the POM file

-Dpackaging=jar

For example:

mvn install:install-file -Dfile=./kafka_2.11-1.1.0.jar -DgroupId=org.apache.kafka -DartifactId=kafka_2.11 -Dversion=1.1.0-hw -Dpackaging=jar

Referencing the package in the POM file

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.11</artifactId>

<version>1.1.0-hw</version>

</dependency>