0. Preface

I have recently been deploying models with TensorFlow Serving, using a trained YOLOv5 .pt model as the test case. Everything worked for the first few days, but today creating the TensorFlow Serving container failed with an error, even though the environment, commands, model, and configuration file had not changed.

1. Problem description

Creating the TensorFlow Serving container fails with an error. The environment, commands, model, and configuration file are the same as on the days when it worked.

1. Error summary (as text, for easy searching):

E tensorflow_serving/util/retrier.cc:37] Loading servable: {name: yolov5_saved_model version: 1} failed: Out of range: Read less bytes than requested

Failed to start server. Error: Unknown: 1 servable(s) did not become available: {{{name: yolov5_saved_model version: 1} due to error: Out of range: Read less bytes than requested}, }

2022-07-30 11:15:09.097717: I tensorflow_serving/core/basic_manager.cc:279] Unload all remaining servables in the manager.

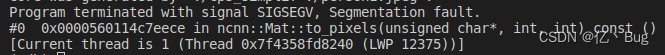

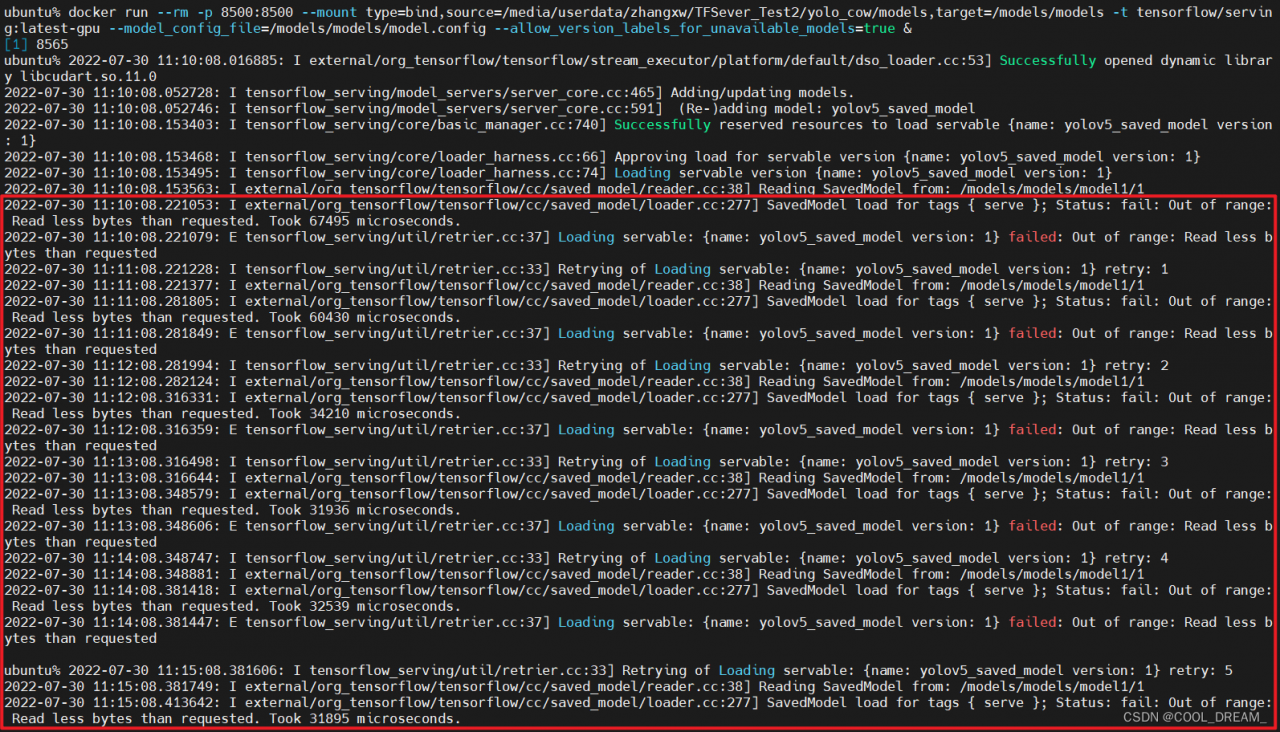

2. Error screenshot:

3. Complete error log:

(see Appendix)

2. Problem analysis:

The error is caused by a damaged model file: the loader reads fewer bytes than the file is supposed to contain. I can think of two ways this happens: the upload was interrupted or failed, or a file that was originally intact was later damaged by a disk problem.

In my case it was the second.

I only worked this out after reading https://github.com/tensorflow/tensorflow/issues/21544.
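If you still have a copy of the model that you trust, comparing checksums is one way to confirm which files are damaged. The following is a minimal Python sketch and not something I ran at the time; the names `sha256_of` and `find_mismatches` and both directory arguments are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mismatches(reference_dir, deployed_dir):
    """List files (relative paths) that are missing from deployed_dir
    or whose content differs from the copy in reference_dir."""
    reference_dir, deployed_dir = Path(reference_dir), Path(deployed_dir)
    bad = []
    for ref_file in sorted(p for p in reference_dir.rglob("*") if p.is_file()):
        rel = ref_file.relative_to(reference_dir)
        candidate = deployed_dir / rel
        if not candidate.is_file() or sha256_of(candidate) != sha256_of(ref_file):
            bad.append(rel.as_posix())
    return bad
```

An empty list from `find_mismatches(trusted_copy, deployed_copy)` means every file in the trusted copy has an identical counterpart on the server. Any path it returns is a candidate for the file that triggers "Read less bytes than requested".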

3. Solution:

Delete the model and the configuration file from the server and upload them again.

If you have no backup, or you are not sure whether your local copy is intact, re-convert the model to generate a fresh SavedModel and create a new configuration file.
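My original model.config is not reproduced in this post, so the following Python sketch only shows what a minimal replacement could look like. The model name `yolov5_saved_model` and the base path `/models/models/model1` are inferred from the log in the Appendix; the helper name `render_model_config` is hypothetical, and your real file may need more fields.

```python
from pathlib import Path


def render_model_config(name, base_path):
    """Return a minimal TensorFlow Serving model config in protobuf text format."""
    return (
        "model_config_list {\n"
        "  config {\n"
        f'    name: "{name}"\n'
        f'    base_path: "{base_path}"\n'
        '    model_platform: "tensorflow"\n'
        "  }\n"
        "}\n"
    )


def write_model_config(target, name, base_path):
    """Write the rendered config to target, overwriting any damaged copy."""
    Path(target).write_text(render_model_config(name, base_path), encoding="utf-8")
```

Note that `base_path` is the path inside the container (the mount target), not the path on the host, and it points at the directory that contains the numbered version folders.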

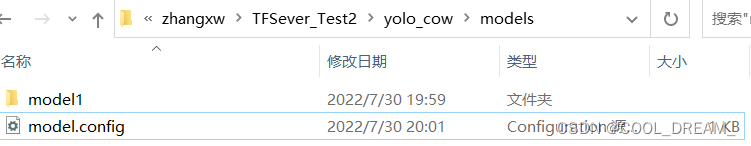

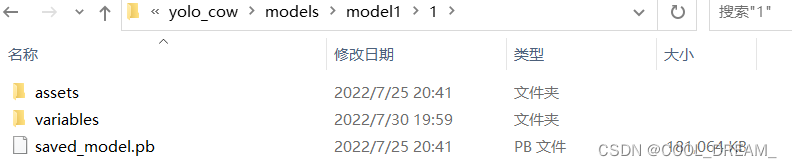

The following figure is the file structure and screenshot of the file I uploaded again.

models

|—-model1

|—- —-1

|—- —- —-assets

|—- —- —-variables

|—- —- —- —-variables.data-00000-of-00001

|—- —- —- —-variables.index

|—- —- —-saved_model.pb

|—-model.config
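Before starting the container again, it can help to confirm that the re-uploaded version directory at least has this shape. The sketch below is a rough Python check under my own assumptions: the function name `check_saved_model_layout` is hypothetical, and it only looks for missing or zero-length files, so a file that is truncated but non-empty will still pass.

```python
from pathlib import Path


def check_saved_model_layout(version_dir):
    """Return a list of problems found in a SavedModel version directory.

    Checks that saved_model.pb, variables/variables.index and at least one
    variables/variables.data-* shard exist and are not empty.
    """
    version_dir = Path(version_dir)
    variables_dir = version_dir / "variables"
    problems = []

    required = [version_dir / "saved_model.pb", variables_dir / "variables.index"]
    shards = sorted(variables_dir.glob("variables.data-*")) if variables_dir.is_dir() else []
    if not shards:
        problems.append("missing: variables/variables.data-*")

    for path in required + shards:
        rel = path.relative_to(version_dir).as_posix()
        if not path.is_file():
            problems.append(f"missing: {rel}")
        elif path.stat().st_size == 0:
            problems.append(f"empty: {rel}")
    return problems
```

For the tree above, the directory to pass in is the version folder `models/model1/1`. An empty result only means the layout looks plausible; it does not prove the contents are intact.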

References

Similar problems:

https://github.com/tensorflow/tensorflow/issues/21544

https://bytemeta.vip/repo/Breta01/handwriting-ocr/issues/104

Appendix

```
ubuntu% docker run --rm -p 8500:8500 --mount type=bind,source=/media/userdata/zhangxw/TFSever_Test2/yolo_cow/models,target=/models/models -t tensorflow/serving:latest-gpu --model_config_file=/models/models/model.config --allow_version_labels_for_unavailable_models=true &

[1] 8565

ubuntu% 2022-07-30 11:10:08.016885: I external/org_tensorflow/tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0

2022-07-30 11:10:08.052728: I tensorflow_serving/model_servers/server_core.cc:465] Adding/updating models.

2022-07-30 11:10:08.052746: I tensorflow_serving/model_servers/server_core.cc:591] (Re-)adding model: yolov5_saved_model

2022-07-30 11:10:08.153403: I tensorflow_serving/core/basic_manager.cc:740] Successfully reserved resources to load servable {name: yolov5_saved_model version: 1}

2022-07-30 11:10:08.153468: I tensorflow_serving/core/loader_harness.cc:66] Approving load for servable version {name: yolov5_saved_model version: 1}

2022-07-30 11:10:08.153495: I tensorflow_serving/core/loader_harness.cc:74] Loading servable version {name: yolov5_saved_model version: 1}

2022-07-30 11:10:08.153563: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:38] Reading SavedModel from: /models/models/model1/1

2022-07-30 11:10:08.221053: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:277] SavedModel load for tags { serve }; Status: fail: Out of range: Read less bytes than requested. Took 67495 microseconds.

2022-07-30 11:10:08.221079: E tensorflow_serving/util/retrier.cc:37] Loading servable: {name: yolov5_saved_model version: 1} failed: Out of range: Read less bytes than requested

2022-07-30 11:11:08.221228: I tensorflow_serving/util/retrier.cc:33] Retrying of Loading servable: {name: yolov5_saved_model version: 1} retry: 1

2022-07-30 11:11:08.221377: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:38] Reading SavedModel from: /models/models/model1/1

2022-07-30 11:11:08.281805: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:277] SavedModel load for tags { serve }; Status: fail: Out of range: Read less bytes than requested. Took 60430 microseconds.

2022-07-30 11:11:08.281849: E tensorflow_serving/util/retrier.cc:37] Loading servable: {name: yolov5_saved_model version: 1} failed: Out of range: Read less bytes than requested

2022-07-30 11:12:08.281994: I tensorflow_serving/util/retrier.cc:33] Retrying of Loading servable: {name: yolov5_saved_model version: 1} retry: 2

2022-07-30 11:12:08.282124: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:38] Reading SavedModel from: /models/models/model1/1

2022-07-30 11:12:08.316331: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:277] SavedModel load for tags { serve }; Status: fail: Out of range: Read less bytes than requested. Took 34210 microseconds.

2022-07-30 11:12:08.316359: E tensorflow_serving/util/retrier.cc:37] Loading servable: {name: yolov5_saved_model version: 1} failed: Out of range: Read less bytes than requested

2022-07-30 11:13:08.316498: I tensorflow_serving/util/retrier.cc:33] Retrying of Loading servable: {name: yolov5_saved_model version: 1} retry: 3

2022-07-30 11:13:08.316644: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:38] Reading SavedModel from: /models/models/model1/1

2022-07-30 11:13:08.348579: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:277] SavedModel load for tags { serve }; Status: fail: Out of range: Read less bytes than requested. Took 31936 microseconds.

2022-07-30 11:13:08.348606: E tensorflow_serving/util/retrier.cc:37] Loading servable: {name: yolov5_saved_model version: 1} failed: Out of range: Read less bytes than requested

2022-07-30 11:14:08.348747: I tensorflow_serving/util/retrier.cc:33] Retrying of Loading servable: {name: yolov5_saved_model version: 1} retry: 4

2022-07-30 11:14:08.348881: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:38] Reading SavedModel from: /models/models/model1/1

2022-07-30 11:14:08.381418: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:277] SavedModel load for tags { serve }; Status: fail: Out of range: Read less bytes than requested. Took 32539 microseconds.

2022-07-30 11:14:08.381447: E tensorflow_serving/util/retrier.cc:37] Loading servable: {name: yolov5_saved_model version: 1} failed: Out of range: Read less bytes than requested

ubuntu% 2022-07-30 11:15:08.381606: I tensorflow_serving/util/retrier.cc:33] Retrying of Loading servable: {name: yolov5_saved_model version: 1} retry: 5

2022-07-30 11:15:08.381749: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:38] Reading SavedModel from: /models/models/model1/1

2022-07-30 11:15:08.413642: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:277] SavedModel load for tags { serve }; Status: fail: Out of range: Read less bytes than requested. Took 31895 microseconds.

2022-07-30 11:15:08.413673: E tensorflow_serving/util/retrier.cc:37] Loading servable: {name: yolov5_saved_model version: 1} failed: Out of range: Read less bytes than requested

2022-07-30 11:15:08.413680: I tensorflow_serving/util/retrier.cc:46] Retrying of Loading servable: {name: yolov5_saved_model version: 1} exhausted max_num_retries: 5

2022-07-30 11:15:08.413698: I tensorflow_serving/core/loader_harness.cc:155] Encountered an error for servable version {name: yolov5_saved_model version: 1}: Out of range: Read less bytes than requested

2022-07-30 11:15:08.413705: E tensorflow_serving/core/aspired_versions_manager.cc:388] Servable {name: yolov5_saved_model version: 1} cannot be loaded: Out of range: Read less bytes than requested

Failed to start server. Error: Unknown: 1 servable(s) did not become available: {{{name: yolov5_saved_model version: 1} due to error: Out of range: Read less bytes than requested}, }

2022-07-30 11:15:09.097717: I tensorflow_serving/core/basic_manager.cc:279] Unload all remaining servables in the manager.

[1] + exit 255 docker run --rm -p 8500:8500 --mount -t tensorflow/serving:latest-gpu

ubuntu%

```