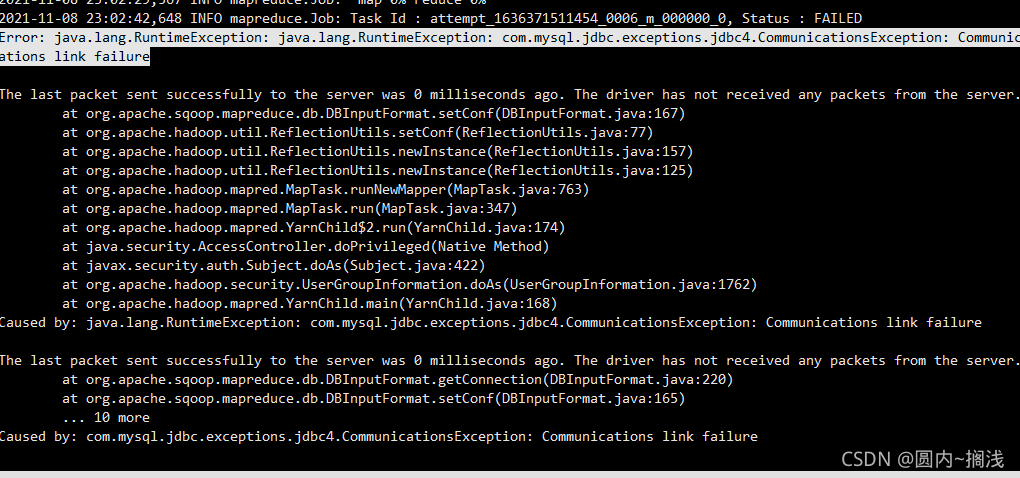

The job first fails with the following error:

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

java.io.IOException: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request, requested resource type=[memory-mb] < 0 or greater than maximum allowed allocation. Requested resource=<memory:1536, vCores:1>, maximum allowed allocation=<memory:256, vCores:4>, please note that maximum allowed allocation is calculated by scheduler based on maximum resource of registered NodeManagers, which might be less than configured maximum allocation=<memory:256, vCores:4>

The error message shows the cause: the MapReduce job requests a 1536 MB container, but the maximum allocation YARN allows is only 256 MB. To fix this, adjust the memory settings in yarn-site.xml:

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2548</value>

<description>Available memory per node, in MB</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>128</value>

<description>Minimum memory that can be requested for a single task, default 1024 MB</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

<description>Maximum memory that can be requested for a single task, default 8192 MB</description>

</property>

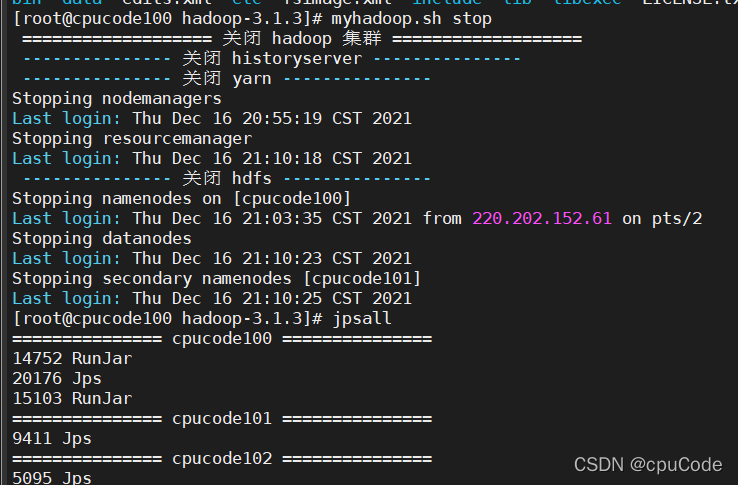

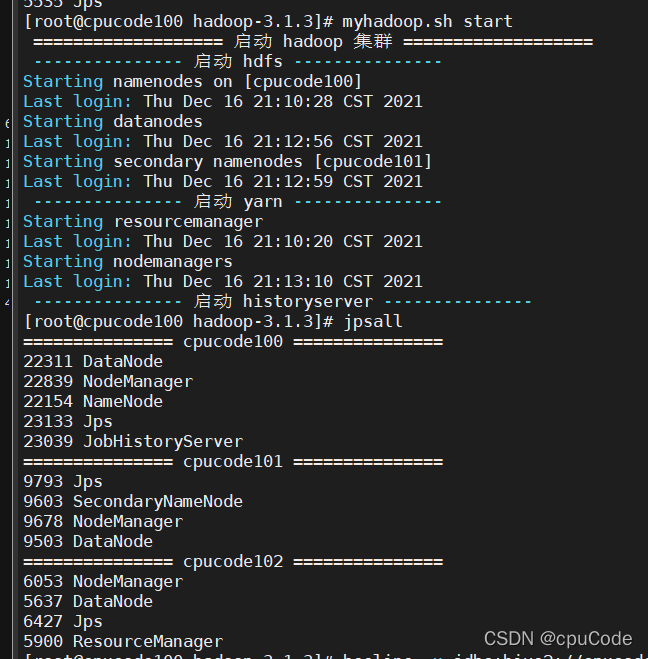
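To see why the original request was rejected and why the new values are accepted, the check described in the error message can be modelled roughly as follows. This is a simplified sketch, not YARN's actual code: the function names are made up for illustration, a single NodeManager is assumed, and the NodeManager size before the change (256 MB) is an assumption, since the log only reports the resulting maximum.

```python
def effective_max_mb(configured_max_mb, nodemanager_mbs):
    """Largest container memory the scheduler will grant (simplified model).

    The error message notes that the allowed maximum is derived from the
    registered NodeManagers and may be lower than the configured maximum.
    """
    return min(configured_max_mb, max(nodemanager_mbs))


def is_valid_request(requested_mb, configured_max_mb, nodemanager_mbs):
    """A request is valid when 0 <= requested <= maximum allowed allocation."""
    return 0 <= requested_mb <= effective_max_mb(configured_max_mb, nodemanager_mbs)


# Before the change: the log reports a maximum allowed allocation of 256 MB.
# The NodeManager size of 256 MB is an assumption for illustration.
print(is_valid_request(1536, 256, [256]))    # False

# After the change: maximum-allocation-mb=2048, NodeManager memory 2548 MB.
print(is_valid_request(1536, 2048, [2548]))  # True
```

With the new settings the 1536 MB request fits under both the 2048 MB per-container maximum and the 2548 MB available on the node.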

After restarting the Hadoop cluster and running the partition statement again, a different error is reported:

Diagnostic Messages for this Task:

[2021-12-19 10:04:27.042]Container [pid=5821,containerID=container_1639879236798_0001_01_000005] is running 253159936B beyond the 'VIRTUAL' memory limit. Current usage: 92.0 MB of 1 GB physical memory used; 2.3 GB of 2.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1639879236798_0001_01_000005 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 5821 5820 5821 5821 (bash) 0 0 9797632 286 /bin/bash -c /opt/module/jdk1.8.0_161/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/atguigu/appcache/application_1639879236798_0001/container_1639879236798_0001_01_000005/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.17.42 42894 attempt_1639879236798_0001_m_000000_3 5 1>/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005/stdout 2>/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005/stderr

|- 5833 5821 5821 5821 (java) 338 16 2498220032 23276 /opt/module/jdk1.8.0_161/bin/java -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx820m -Djava.io.tmpdir=/opt/module/hadoop-3.1.3/data/nm-local-dir/usercache/atguigu/appcache/application_1639879236798_0001/container_1639879236798_0001_01_000005/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/opt/module/hadoop-3.1.3/logs/userlogs/application_1639879236798_0001/container_1639879236798_0001_01_000005 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 192.168.17.42 42894 attempt_1639879236798_0001_m_000000_3 5

The message shows that physical memory is not the problem: the container uses only 92.0 MB of its 1 GB physical memory limit. It is killed because its virtual memory usage (2.3 GB) exceeds the 2.1 GB virtual memory limit. Since physical memory is sufficient, we can configure the NodeManager in yarn-site.xml not to check virtual memory:

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

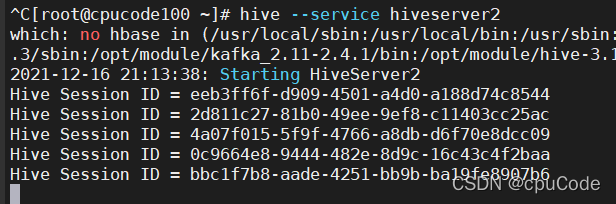

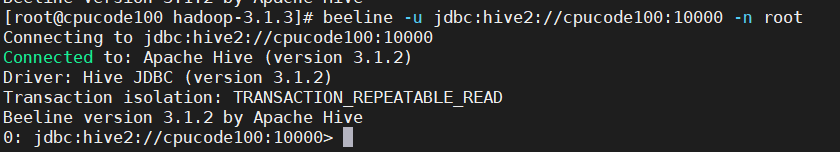

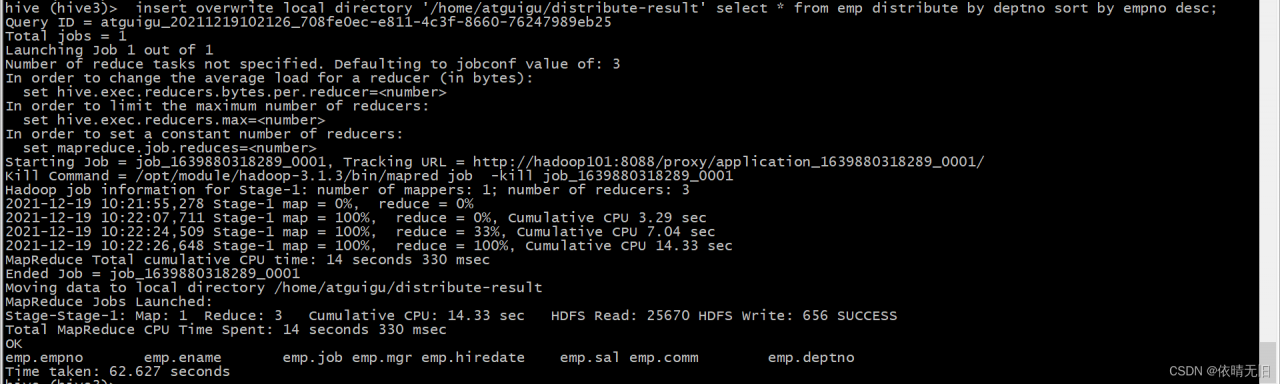

</property>Then restart the cluster: run SQL

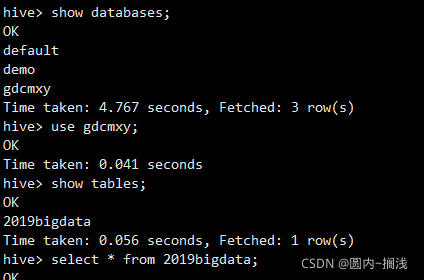

The query now runs and returns its results normally.
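As a cross-check of the second error, the figures in the diagnostic message can be reproduced from the process-tree dump. The sketch below assumes the container's physical limit is exactly 1 GiB and that the virtual limit is that value multiplied by the default `yarn.nodemanager.vmem-pmem-ratio` of 2.1. It also assumes the ratio is held as a single-precision float, which is what makes the result match the log to the byte. The helper names are made up for illustration.

```python
import struct

GIB = 1024 ** 3


def as_float32(x):
    """Round a Python float to single precision (assumed ratio type)."""
    return struct.unpack("f", struct.pack("f", x))[0]


def vmem_limit_bytes(pmem_limit_bytes, ratio=2.1):
    """Virtual memory limit = physical limit * vmem-pmem ratio, truncated."""
    return int(as_float32(ratio) * pmem_limit_bytes)


# VMEM_USAGE(BYTES) column of the two processes in the dump (bash and java).
process_tree_vmem = [9797632, 2498220032]

usage = sum(process_tree_vmem)
limit = vmem_limit_bytes(1 * GIB)

print(usage)          # 2508017664 (about 2.3 GB)
print(limit)          # 2254857728 (about 2.1 GB)
print(usage - limit)  # 253159936, the excess reported in the log
```

The excess is entirely virtual address space reserved by the JVM, which is why the container was killed while using only 92 MB of physical memory.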