1、Failed to get scan range, no queryable replica found in tablet

Error Message:

ERROR 1064 (HY000): errCode = 2, detailMessage = Failed to get scan range, no queryable replica found in tablet: 11018

MySQL [tpa]> select * from tpa.table1;

ERROR 1064 (HY000): errCode = 2, detailMessage = Failed to get scan range, no queryable replica found in tablet: 11018

MySQL [tpa]>

(1) Check the be cluster information and no problems are found

MySQL [tpa]> show backends \G

*************************** 1. row ***************************

BackendId: 11002

Cluster: default_cluster

IP: 10.17.12.158

HeartbeatPort: 9050

BePort: 9060

HttpPort: 8040

BrpcPort: 8060

LastStartTime: 2021-08-13 09:46:23

LastHeartbeat: 2021-08-25 16:35:22

Alive: true

SystemDecommissioned: false

ClusterDecommissioned: false

TabletNum: 11

DataUsedCapacity: 2.389 KB

AvailCapacity: 2.273 GB

TotalCapacity: 49.090 GB

UsedPct: 95.37 %

MaxDiskUsedPct: 95.37 %

ErrMsg:

Version: 0.14.7-Unknown

Status: {"lastSuccessReportTabletsTime":"2021-08-25 16:34:55"}

*************************** 2. row ***************************

BackendId: 11001

Cluster: default_cluster

IP: 10.17.12.159

HeartbeatPort: 9050

BePort: 9060

HttpPort: 8040

BrpcPort: 8060

LastStartTime: 2021-08-13 09:41:46

LastHeartbeat: 2021-08-25 16:35:22

Alive: true

SystemDecommissioned: false

ClusterDecommissioned: false

TabletNum: 15

DataUsedCapacity: 1.542 KB

AvailCapacity: 12.090 GB

TotalCapacity: 49.090 GB

UsedPct: 75.37 %

MaxDiskUsedPct: 75.37 %

ErrMsg:

Version: 0.14.7-Unknown

Status: {"lastSuccessReportTabletsTime":"2021-08-25 16:34:41"}

*************************** 3. row ***************************

BackendId: 10002

Cluster: default_cluster

IP: 10.17.12.160

HeartbeatPort: 9050

BePort: 9060

HttpPort: 8040

BrpcPort: 8060

LastStartTime: 2021-08-25 15:57:11

LastHeartbeat: 2021-08-25 16:35:22

Alive: true

SystemDecommissioned: false

ClusterDecommissioned: false

TabletNum: 10

DataUsedCapacity: 3.084 KB

AvailCapacity: 1.902 GB

TotalCapacity: 49.090 GB

UsedPct: 96.13 %

MaxDiskUsedPct: 96.13 %

ErrMsg:

Version: 0.14.7-Unknown

Status: {"lastSuccessReportTabletsTime":"2021-08-25 16:35:13"}

3 rows in set (0.00 sec)

MySQL [tpa]>

(2) Error in select query command

MySQL [tpa]>desc table1;

+----------+-------------+------+-------+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+----------+-------------+------+-------+---------+-------+

| siteid | INT | Yes | true | 10 | |

| citycode | SMALLINT | Yes | true | NULL | |

| username | VARCHAR(32) | Yes | true | | |

| pv | BIGINT | Yes | false | 0 | SUM |

+----------+-------------+------+-------+---------+-------+

4 rows in set (0.00 sec)

MySQL [tpa]> select * from tpa.table1;

ERROR 1064 (HY000): errCode = 2, detailMessage = Failed to get scan range, no queryable replica found in tablet: 11018

MySQL [tpa]> select count(1) from table1 ;

ERROR 1064 (HY000): errCode = 2, detailMessage = Failed to get scan range, no queryable replica found in tablet: 11018

MySQL [tpa]>

2. Problem analysis and solution

(1) Problem analysis

in the first step, baidu received an error message and couldn’t find the corresponding error message. Didn’t everyone encounter the same error

in the second step, you can’t find a solution online, so you have to analyze it yourself. My English is very poor, but I can roughly guess that no queryable replica found in tablet roughly means that the corresponding queryable replica cannot be found in tablet. There may be a problem with the copy

the third step is to look up the replica information. I build the table according to the official document. The number of replicas is set to 1. The problem is that it is possible.

CREATE TABLE table1

(

siteid INT DEFAULT '10',

citycode SMALLINT,

username VARCHAR(32) DEFAULT '',

pv BIGINT SUM DEFAULT '0'

)

AGGREGATE KEY(siteid, citycode, username)

DISTRIBUTED BY HASH(siteid) BUCKETS 10

PROPERTIES("replication_num" = "1");

(2) Verify the conjecture

the first step is to create a table with 2 copies

MySQL [tpa]> CREATE TABLE t1

-> (

-> siteid INT DEFAULT '10',

-> citycode SMALLINT,

-> username VARCHAR(32) DEFAULT '',

-> pv BIGINT SUM DEFAULT '0'

-> )

-> AGGREGATE KEY(siteid, citycode, username)

-> DISTRIBUTED BY HASH(siteid) BUCKETS 10

-> PROPERTIES("replication_num" = "2");

Query OK, 0 rows affected (1.20 sec)

MySQL [tpa]> exit

Bye

Step 2: import data

[root@node3 ~]# curl --location-trusted -u test:test -H "label:t1_20170707" -H "column_separator:," -T table1_data http://node3:8030/api/tpa/t1/_stream_load

{

"TxnId": 428829,

"Label": "t1_20170707",

"Status": "Success",

"Message": "OK",

"NumberTotalRows": 5,

"NumberLoadedRows": 5,

"NumberFilteredRows": 0,

"NumberUnselectedRows": 0,

"LoadBytes": 55,

"LoadTimeMs": 840,

"BeginTxnTimeMs": 1,

"StreamLoadPutTimeMs": 12,

"ReadDataTimeMs": 0,

"WriteDataTimeMs": 710,

"CommitAndPublishTimeMs": 116

}

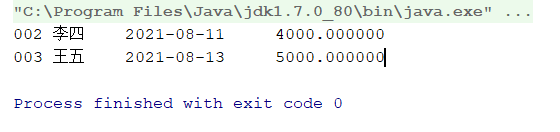

Step 3: execute the query and everything is normal.

[root@node3 ~]# mysql -h 10.17.12.160 -P 9030 -uroot -p123456

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 23

Server version: 5.1.0 Baidu Doris version 0.14.7-Unknown

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> use tpa;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [tpa]> select count(1) from t1;

+----------+

| count(1) |

+----------+

| 5 |

+----------+

1 row in set (1.01 sec)

MySQL [tpa]>

Preliminary conclusion: it may be due to the problem of the number of copies when creating the table, which needs further verification in the future