Project scenario:

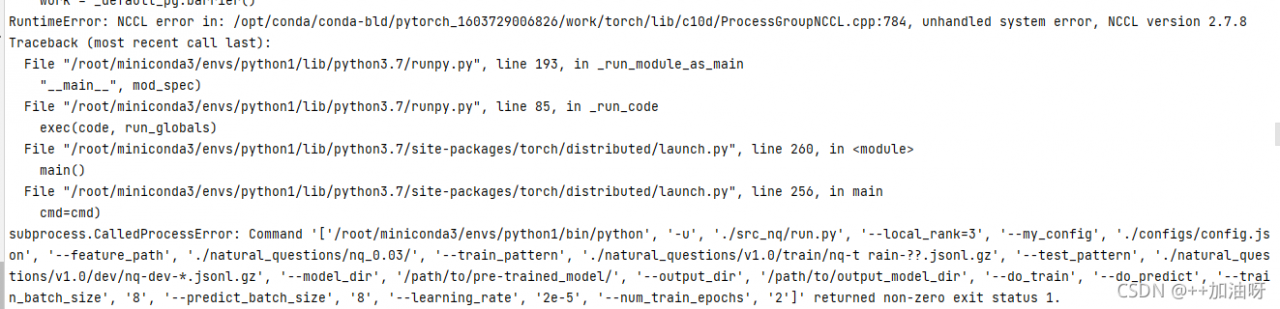

This problem is encountered in distributed training,

Problem description

Perhaps parallel operation is not started???(

Solution:

(1) First, check the server GPU related information. Enter the pytorch terminal to enter the code

python

torch.cuda.is_available()# to see if cuda is available.

torch.cuda.device_count()# to see the number of gpu's.

torch.cuda.get_device_name(0)# to see the gpu name, the device index starts from 0 by default.

torch.cuda.current_device()# return the current device index.

Ctrl+Z Exit

(2) cd enters the upper folder of the file to be run

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5 python -m torch.distributed.launch --nproc_per_node=6 #启动并行运算

Plus files to run and related configurations

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5 python -m torch.distributed.launch --nproc_per_node=6 src_nq/create_examples.py --vocab_file ./bert-base-uncased-vocab.txt \--input_pattern "./natural_questions/v1.0/train/nq-train-*.jsonl.gz" \--output_dir ./natural_questions/nq_0.03/\--do_lower_case \--num_threads 24 --include_unknowns 0.03 --max_seq_length 512 --doc_stride 128

Problem-solving!

Read More:

- RuntimeError: NCCL Error 2: unhandled system error [How to Solve]

- [ncclUnhandledCudaError] unhandled cuda error, NCCL version xx.x.x

- [Solved] mmdetection benchmark.py Error: RuntimeError: Distributed package doesn‘t have NCCL built in

- [Solved] bushi RuntimeError: version_ <= kMaxSupportedFileFormatVersion INTERNAL ASSERT FAILED at /pytorch/caffe2/s

- [Pytorch Error Solution] Pytorch distributed RuntimeError: Address already in use

- RuntimeError: Address already in use [How to Solve]

- RuntimeError: Failed to register operator torchvision::_new_empty_tensor_op. +torch&torchversion Version Matching

- [Solved] Pytorch c++ Error: Error checking compiler version for cl: [WinError 2] System cannot find the specified file.

- [Solved] RuntimeError: Error(s) in loading state_dict for BertForTokenClassification

- [Solved] DDP/DistributedDataParallel Error: RuntimeError: Address already in use

- [Solved] RuntimeError: a view of a leaf Variable that requires grad is being used in an in-place

- [Solved] Pytorch Error: RuntimeError: Error(s) in loading state_dict for Network: size mismatch

- [Solved] RuntimeError: Error(s) in loading state_dict for Net:

- [Solved] PyTorch Caught RuntimeError in DataLoader worker process 0和invalid argument 0: Sizes of tensors mus

- Autograd error in Python: runtimeerror: grad can be implicitly created only for scalar outputs

- [Solved] RuntimeError: Error(s) in loading state dict for YOLOX:

- [Solved] python tqdm raise RuntimeError(“cannot join current thread“) RuntimeError: cannot join current thr

- [Solved] RuntimeError: cublas runtime error : resource allocation failed at

- How to Solve Error: RuntimeError CUDA out of memory

- [Solved] RuntimeError: CUDA error: CUBLAS_STATUS_EXECUTION_FAILED when calling `cublasSgemm