Preface

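A scalar is a tensor of order 0 (a single number); in PyTorch its shape is ().

A vector is a tensor of order 1, with shape (n,), often written as 1 × n.

A matrix is a tensor of order 2, with shape n × m. Higher-order tensors add further dimensions and can relate values across several coordinates at once.

So when people say that a tensor (for example n × n) is reshaped into a vector (1 × n²), nothing happens to the data: reshaping keeps the same elements in the same order and only changes how they are indexed.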
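A quick way to check the order of a tensor in PyTorch is `Tensor.dim()` together with `.shape`. The sketch below (the example values are arbitrary) prints both for a scalar, a vector and a matrix, then reshapes a 2 × 3 matrix into a vector to show that reshaping changes only the shape, not the values.

```python
import torch

# Order (number of dimensions) and shape of tensors of increasing order
scalar = torch.tensor(3.0)               # order 0: shape ()
vector = torch.tensor([1.0, 2.0, 3.0])   # order 1: shape (3,)
matrix = torch.ones(2, 3)                # order 2: shape (2, 3)

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    print(name, t.dim(), tuple(t.shape))

# Reshaping a 2 x 3 matrix into a length-6 vector keeps the same elements
# in the same (row-major) order; only the shape changes.
m = torch.arange(6.0).reshape(2, 3)
v = m.reshape(6)
print(v)                                # tensor([0., 1., 2., 3., 4., 5.])
print(torch.equal(v.reshape(2, 3), m))  # True
```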

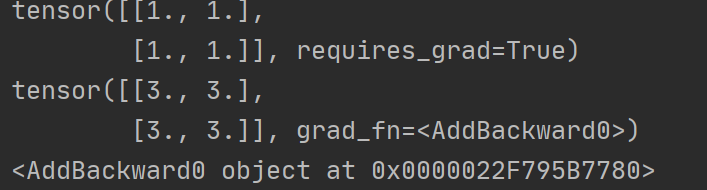

```python
import torch

x = torch.ones(2, 2, requires_grad=True)
print(x)

y = x + 2
print(y)

# A tensor produced by an operation (rather than created directly by the user)
# has a grad_fn attribute, which references the Function that created it
print(y.grad_fn)

y.backward()  # fails: y is not a scalar
print(x.grad)
```

Running results:

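The run fails because y is a 2 × 2 tensor, not a scalar. Calling backward() without arguments only works on a scalar output, so PyTorch raises RuntimeError: grad can be implicitly created only for scalar outputs. One fix is to reduce y to a scalar first.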

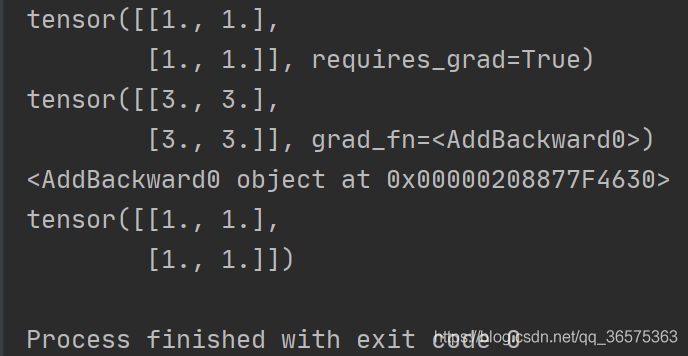
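The failure can be reproduced in a few lines. This sketch reuses x and y from the script above. It also checks the grad_fn comment (the user-created leaf x has no grad_fn, while y does) and shows that reducing y to a scalar with sum() before calling backward() avoids the error.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)
y = x + 2

# x was created by the user (a leaf), so it has no grad_fn;
# y was produced by an addition, so grad_fn points to its backward Function.
print(x.grad_fn)  # None
print(y.grad_fn)

# y has 4 elements, so backward() cannot create the initial gradient implicitly
error_message = None
try:
    y.backward()
except RuntimeError as err:
    error_message = str(err)
print(error_message)

# Reducing y to a scalar first makes the implicit starting gradient well defined
y.sum().backward()
print(x.grad)
```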

A non-scalar output works too, but one line has to change:

```python
y.backward(torch.ones_like(x))
```

Because the output is not a scalar, backward() needs a gradient argument with the same shape as y. Here y has the same shape as x, so torch.ones_like(x) works: it creates a tensor of ones with the shape of x. My understanding is that a gradient is defined for a scalar function, so autograd effectively combines the elements of y into one value (with all-ones weights, their sum) and then computes the partial derivative of that value with respect to each element of x.
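For a non-scalar y, y.backward(v) computes a vector-Jacobian product, which equals the gradient of the scalar (v * y).sum() with respect to x. Passing all ones is therefore the same as calling y.sum().backward(). The sketch below checks both claims for y = x + 2; the helper names and the weights in `weights` are hypothetical, chosen only for illustration.

```python
import torch

def grad_via_backward(v):
    """x.grad after y.backward(v), for y = x + 2 (hypothetical helper)."""
    x = torch.ones(2, 2, requires_grad=True)
    y = x + 2
    y.backward(v)
    return x.grad

def grad_via_scalar(v):
    """x.grad after reducing y to the scalar (v * y).sum() (hypothetical helper)."""
    x = torch.ones(2, 2, requires_grad=True)
    y = x + 2
    (v * y).sum().backward()
    return x.grad

ones = torch.ones(2, 2)
weights = torch.tensor([[1.0, 2.0], [3.0, 4.0]])  # arbitrary example weights

# With all-ones weights, y.backward(ones) matches y.sum().backward()
print(torch.equal(grad_via_backward(ones), grad_via_scalar(ones)))  # True

# For y = x + 2 the Jacobian is the identity, so x.grad equals the weights
print(grad_via_backward(weights))
print(torch.equal(grad_via_backward(weights), weights))  # True
```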

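After the modification:

```python
import torch

x = torch.ones(2, 2, requires_grad=True)
print(x)

y = x + 2
print(y)

# A tensor produced by an operation (rather than created directly by the user)
# has a grad_fn attribute, which references the Function that created it
print(y.grad_fn)

# y is not a scalar, so pass a gradient with y's shape (here the same as x's)
y.backward(torch.ones_like(x))
print(x.grad)
```

Running results: x.grad is a 2 × 2 tensor of ones, because each element of y = x + 2 has derivative 1 with respect to the matching element of x.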
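The gradient argument follows the shape of y, so torch.ones_like(x) only works here because y = x + 2 keeps the shape of x. The hypothetical variation below uses y = x.sum(dim=0), which has shape (2,), so the gradient is built to match y instead; the weights [1, 2] are arbitrary.

```python
import torch

x = torch.ones(2, 2, requires_grad=True)
y = x.sum(dim=0)  # column sums: shape (2,), unlike x which is (2, 2)

# The gradient argument must have y's shape, not x's
v = torch.tensor([1.0, 2.0])
y.backward(v)

# Each x[i, j] contributes to y[j] with derivative 1, so x.grad[i, j] == v[j]
print(x.grad)  # every row equals v
```

For plain all-ones weights, torch.ones_like(y) is the shape-safe choice, since it always matches the output rather than the input.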
