How to Solve PyTorch loss.backward() Error

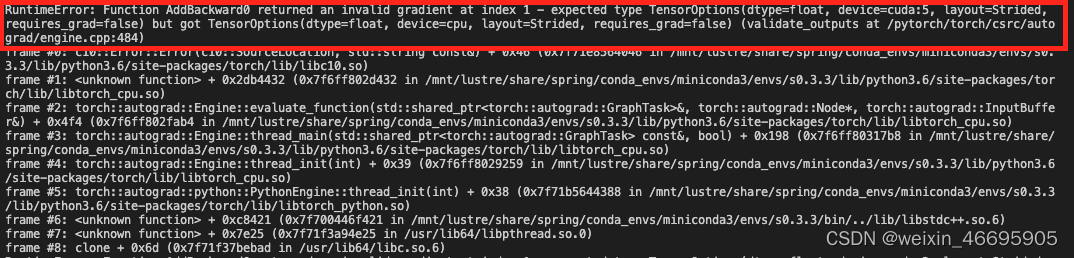

The specific errors are as follows:

The error message makes it clear that the problem is a device mismatch during backpropagation: the tensors involved in the backward pass are not all on the same device.

In the code, we first move the input, target, model, and loss function to the GPU with `.to(device)`. However, when computing the loss, we also initialize an extra loss term ourselves:

```python
loss_1 = torch.tensor(0.0)
```

By default, `torch.tensor` creates `loss_1` on the CPU.

We then compute `loss_seg = loss + loss_1`, which combines a GPU tensor with a CPU tensor, so calling `loss_seg.backward()` raises the error above.
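To confirm this kind of mismatch before calling `backward()`, you can inspect the `.device` attribute of every tensor that feeds into the final loss. The helper below, `check_same_device`, is a hypothetical name used only for illustration (it is not part of PyTorch); it raises if the tensors it receives live on more than one device. The `loss` tensor here is a stand-in for the real loss, not the original training code.

```python
import torch


def check_same_device(**tensors):
    """Hypothetical helper: raise if the given tensors are not all on one device."""
    devices = {name: t.device for name, t in tensors.items()}
    if len(set(devices.values())) > 1:
        raise RuntimeError(f"Tensors on different devices: {devices}")
    # All tensors agree, so return the shared device.
    return next(iter(devices.values()))


# Use the GPU if one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

loss = torch.tensor(1.0, device=device, requires_grad=True)  # stand-in for the real loss
loss_1 = torch.tensor(0.0)  # created on the CPU by default

# On a GPU machine this prints two different devices, e.g. "cuda:0 cpu".
print(loss.device, loss_1.device)
```

On a machine with a GPU, calling `check_same_device(loss=loss, loss_1=loss_1)` at this point would raise and name both devices; on a CPU-only machine both tensors share the CPU, so it returns normally.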

Solution:

Change

```python
loss_1 = torch.tensor(0.0)
```

to

```python
loss_1 = torch.tensor(0.0).to(device)
```

or

```python
loss_1 = torch.tensor(0.0).cuda()
```
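A slightly more robust variant is to create the extra term directly on the right device, either by passing `device=` to `torch.tensor` or by deriving it from the existing loss with `Tensor.new_zeros`, so it always follows the loss wherever it lives. The sketch below uses a small stand-in model, inputs, and target (assumptions, not the original training code) and falls back to the CPU when no GPU is available.

```python
import torch
import torch.nn as nn

# Pick the GPU if present; the same code also runs on a CPU-only machine.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-ins for the real model, criterion, inputs and targets (assumed shapes).
model = nn.Linear(4, 1).to(device)
criterion = nn.MSELoss().to(device)
inputs = torch.randn(8, 4, device=device)
target = torch.randn(8, 1, device=device)

loss = criterion(model(inputs), target)

# Option 1: create the extra term on the same device explicitly.
loss_1 = torch.tensor(0.0, device=device)

# Option 2: derive a 0-dim zero tensor from the existing loss,
# so its dtype and device always match the loss.
loss_2 = loss.new_zeros(())

loss_seg = loss + loss_1 + loss_2
loss_seg.backward()  # every tensor shares one device, so backward succeeds

print(loss_seg.device, model.weight.grad is not None)
```

Option 2 avoids hard-coding `.cuda()`, which would fail on a machine without a GPU.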
