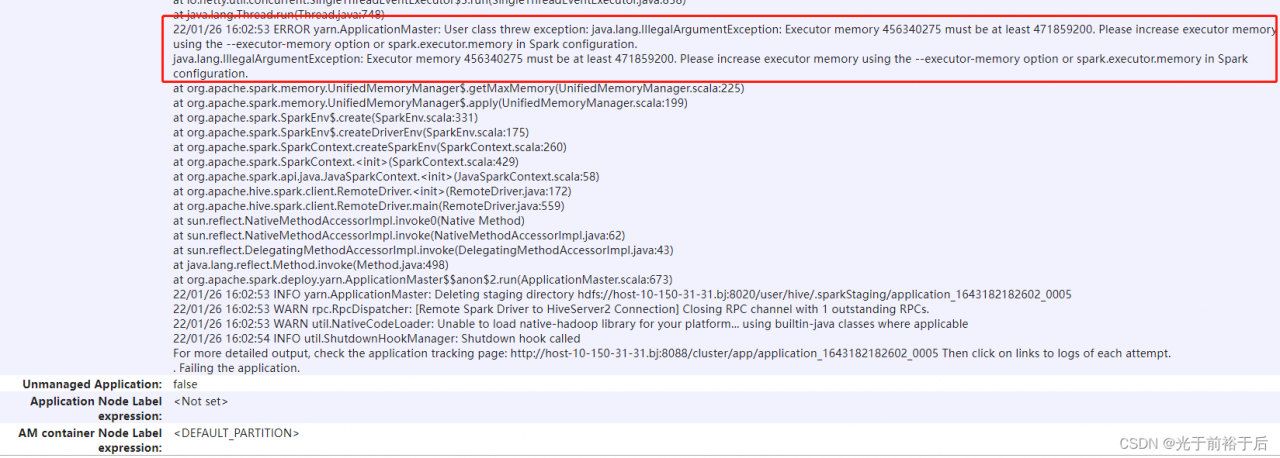

Error Messages:

Failed to monitor Job[-1] with exception ‘java.lang.IllegalStateException(Connection to remote Spark driver was lost)’ Last known state = SENT

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask. Unable to send message SyncJobRequest{job=org.apache.hadoop.hive.ql.exec.spark.status.impl.RemoteSparkJobStatus$GetAppIDJob@7805478c} because the Remote Spark Driver - HiveServer2 connection has been closed.

The error messages above do not reveal the real cause. To find it, go to YARN and look at the application's detailed logs:

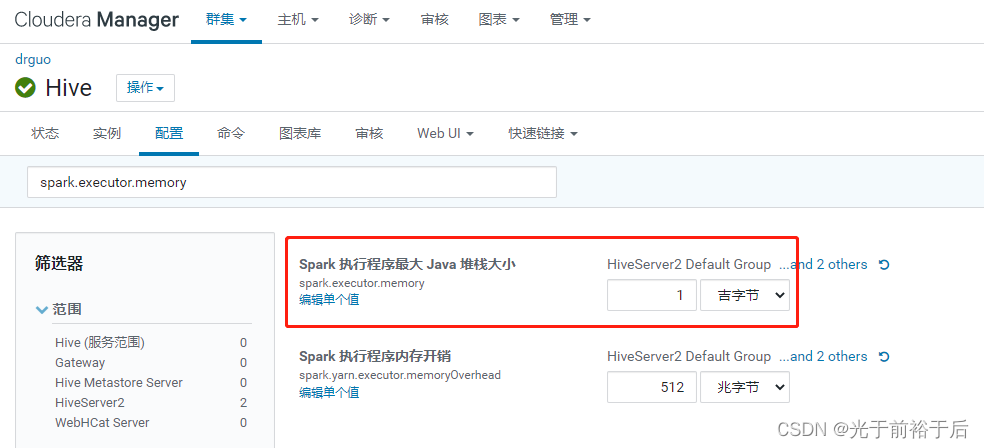

It turns out that the Spark executor memory (executor-memory) is set too small. Increase it on the Hive configuration page.

Save the changes and restart the relevant components. The problem is then solved.
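To check whether more executor memory helps before changing the cluster-wide configuration, the setting can also be overridden for a single Hive session. The following is a minimal sketch, assuming Hive on Spark running on YARN; the values `4g` and `1024` are illustrative assumptions and not taken from the original setup, so size them to your cluster.

```sql
-- Per-session override for Hive on Spark (values are assumed examples).
-- Raise the heap given to each Spark executor.
SET spark.executor.memory=4g;

-- Off-heap overhead per executor in MB, used when running on YARN.
-- Newer Spark versions name this property spark.executor.memoryOverhead.
SET spark.yarn.executor.memoryOverhead=1024;

-- Print the current value to confirm the override took effect.
SET spark.executor.memory;
```

Run the failing query again in the same session. If it now succeeds, apply the same values permanently on the Hive configuration page as described above.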
