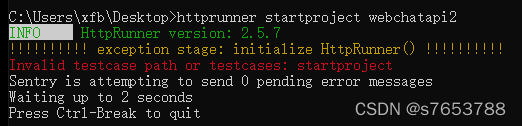

1. Error information

```text
(python37) H:\emd>python setup.py install
running install
running bdist_egg
running egg_info
creating emd.egg-info
writing emd.egg-info\PKG-INFO
writing dependency_links to emd.egg-info\dependency_links.txt
writing top-level names to emd.egg-info\top_level.txt
writing manifest file 'emd.egg-info\SOURCES.txt'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\utils\cpp_extension.py:370: UserWarning: Attempted to use ninja as the BuildExtension backend but we could not find ninja.. Falling back to using the slow distutils backend.
  warnings.warn(msg.format('we could not find ninja.'))
reading manifest file 'emd.egg-info\SOURCES.txt'
writing manifest file 'emd.egg-info\SOURCES.txt'
installing library code to build\bdist.win-amd64\egg
running install_lib
running build_ext
D:\Anaconda_app\envs\python37\lib\site-packages\torch\utils\cpp_extension.py:305: UserWarning: Error checking compiler version for cl: [WinError 2] The system cannot find the file specified.
  warnings.warn(f'Error checking compiler version for {compiler}: {error}')
building 'emd' extension
creating build
creating build\temp.win-amd64-3.7
……
```

```text
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(427): note: see reference to class template instantiation 'OptionalBase<at::Tensor>' being compiled
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\ATen/core/TensorBody.h(734): note: see reference to class template instantiation 'c10::optional<at::Tensor>' being compiled
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(395): warning C4624: 'c10::trivially_copyable_optimization_optional_base<T>': destructor was implicitly defined as deleted
        with
        [
            T=at::Tensor
        ]
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(476): warning C4814: 'c10::optional<at::Tensor>::contained_val': in C++14 'constexpr' will not imply 'const'; consider explicitly specifying 'const'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(477): error C2556: 'at::Tensor &c10::optional<at::Tensor>::contained_val(void) const &': overloaded function differs only by return type from 'const at::Tensor &c10::optional<at::Tensor>::contained_val(void) const &'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(471): note: see declaration of 'c10::optional<at::Tensor>::contained_val'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(477): error C2373: 'c10::optional<at::Tensor>::contained_val': redefinition; different type modifiers
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(471): note: see declaration of 'c10::optional<at::Tensor>::contained_val'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(476): warning C4814: 'c10::optional<int64_t>::contained_val': in C++14 'constexpr' will not imply 'const'; consider explicitly specifying 'const'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\ATen/core/TensorBody.h(774): note: see reference to class template instantiation 'c10::optional<int64_t>' being compiled
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(477): error C2556: 'int64_t &c10::optional<int64_t>::contained_val(void) const &': overloaded function differs only by return type from 'const int64_t &c10::optional<int64_t>::contained_val(void) const &'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(471): note: see declaration of 'c10::optional<int64_t>::contained_val'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(477): error C2373: 'c10::optional<int64_t>::contained_val': redefinition; different type modifiers
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(471): note: see declaration of 'c10::optional<int64_t>::contained_val'
D:\Anaconda_app\envs\python37\lib\site-packages\torch\include\c10/util/Optional.h(477): fatal error C1003: error count exceeds 100; stopping compilation
error: command 'D:\\Program Files (x86)\\Microsoft Visual Studio 14.0\\VC\\BIN\\x86_amd64\\cl.exe' failed with exit status 2
```

2. Error analysis

From the error output above, two warnings stand out:

```text
D:\Anaconda_app\envs\python37\lib\site-packages\torch\utils\cpp_extension.py:370: UserWarning: Attempted to use ninja as the BuildExtension backend but we could not find ninja.. Falling back to using the slow distutils backend.
D:\Anaconda_app\envs\python37\lib\site-packages\torch\utils\cpp_extension.py:305: UserWarning: Error checking compiler version for cl: [WinError 2] The system cannot find the file specified.
  warnings.warn(f'Error checking compiler version for {compiler}: {error}')
```
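Whether ninja is reachable from the current environment can be confirmed by running `ninja --version`, which is essentially what `torch.utils.cpp_extension.is_ninja_available()` does before choosing a backend. A minimal standard-library sketch; the helper name `ninja_available` is hypothetical:

```python
import subprocess


def ninja_available() -> bool:
    """Return True if a `ninja` executable can be run from PATH (hypothetical helper)."""
    try:
        # `ninja --version` prints the version and exits with status 0 when ninja is installed
        subprocess.check_output(["ninja", "--version"], stderr=subprocess.STDOUT)
    except (OSError, subprocess.CalledProcessError):
        # OSError covers FileNotFoundError ([WinError 2] on Windows) when ninja is not on PATH
        return False
    return True


if __name__ == "__main__":
    print("ninja found" if ninja_available() else "ninja not found: run `pip install ninja`")
```

Run it inside the activated `python37` environment, since `pip install ninja` places the executable in that environment's `Scripts` folder.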

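1) PyTorch's `BuildExtension` prefers ninja as its build backend, but ninja is not installed, so it falls back to the slower distutils backend. This warning alone does not break the build, but installing ninja first (`pip install ninja`) speeds up compilation.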

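2) torch could not check the version of `cl.exe`: `[WinError 2]` means the `cl` executable could not be launched (it was not found on `PATH`), so torch cannot confirm that the compiler meets its minimum version. Jumping to the source location given in the warning (`D:\Anaconda_app\envs\python37\lib\site-packages\torch\utils\cpp_extension.py:305`) shows the check: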

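```python
import re
import subprocess
import sys
import warnings


# Excerpt from check_compiler_abi_compatibility() in torch/utils/cpp_extension.py;
# MINIMUM_GCC_VERSION and MINIMUM_MSVC_VERSION are module-level constants in that file.
def check_compiler_abi_compatibility(compiler) -> bool:
    ...  # earlier checks omitted
    try:
        if sys.platform.startswith('linux'):
            # Linux: ask gcc for its version string and split it into parts
            minimum_required_version = MINIMUM_GCC_VERSION
            versionstr = subprocess.check_output([compiler, '-dumpfullversion', '-dumpversion'])
            version = versionstr.decode().strip().split('.')
        else:
            # Windows: running cl.exe with no arguments prints a banner that contains its version
            minimum_required_version = MINIMUM_MSVC_VERSION
            compiler_info = subprocess.check_output(compiler, stderr=subprocess.STDOUT)
            match = re.search(r'(\d+)\.(\d+)\.(\d+)', compiler_info.decode().strip())
            version = (0, 0, 0) if match is None else match.groups()
    except Exception:
        # Any failure to run the compiler (e.g. [WinError 2]: cl not found) lands here
        _, error, _ = sys.exc_info()
        warnings.warn(f'Error checking compiler version for {compiler}: {error}')
        return False
    ...  # version comparison omitted
```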
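`[WinError 2]` is the message of the `FileNotFoundError` that `subprocess` raises when the executable (`cl` here) cannot be found on `PATH`; the `except Exception` branch in the code above turns it into the warning seen in the log. The sketch below reproduces that behavior with a deliberately non-existent compiler name; `probe_compiler` and `no-such-compiler-xyz` are hypothetical names used only for illustration.

```python
import shutil
import subprocess
import warnings
from typing import Optional


def probe_compiler(compiler: str) -> Optional[str]:
    """Run `compiler` with no arguments and return its output, or None on failure.

    Hypothetical helper that mirrors the try/except pattern of the torch excerpt.
    """
    try:
        out = subprocess.check_output(compiler, stderr=subprocess.STDOUT)
    except Exception as error:
        # A missing executable raises FileNotFoundError; on Windows its message
        # is "[WinError 2] The system cannot find the file specified"
        warnings.warn(f"Error checking compiler version for {compiler}: {error}")
        return None
    # errors="replace" keeps localized (non-UTF-8) banners from raising here
    return out.decode(errors="replace")


if __name__ == "__main__":
    # None means `cl` is not on PATH in this shell, which is exactly the WinError 2 case
    print("cl on PATH:", shutil.which("cl"))
    print(probe_compiler("no-such-compiler-xyz"))  # emits the warning, then prints None
```

Running the build from a Visual Studio developer command prompt, where `cl` is on `PATH`, is one way to avoid this warning.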

I am on Windows, not Linux, so the `else` branch applies, and the minimum cl.exe version it checks against is:

MINIMUM_MSVC_VERSION = (19, 0, 24215)

MSVC (Microsoft Visual C++) is Microsoft's C/C++ compiler, shipped with Visual Studio; its command-line executable is cl.exe.

So torch requires cl.exe (MSVC) version 19.0.24215 or newer.
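To make the comparison concrete, here is a sketch that applies the same regular expression as torch's check to a cl.exe banner and compares the result with `MINIMUM_MSVC_VERSION`. The banner strings are assumed examples of cl.exe's output format, and converting the matched groups to `int` before comparing is a choice made for this sketch (the torch excerpt keeps `match.groups()` as strings at that point).

```python
import re
from typing import Tuple

MINIMUM_MSVC_VERSION = (19, 0, 24215)  # value quoted from torch/utils/cpp_extension.py


def parse_cl_version(banner: str) -> Tuple[int, ...]:
    """Extract (major, minor, build) from a cl.exe banner; (0, 0, 0) if none is found."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", banner.strip())
    if match is None:
        return (0, 0, 0)
    return tuple(int(g) for g in match.groups())


def meets_minimum(version: Tuple[int, ...], minimum=MINIMUM_MSVC_VERSION) -> bool:
    # Tuples compare element by element: major first, then minor, then build number
    return version >= minimum


if __name__ == "__main__":
    # Assumed example banners, for illustration only
    for banner in (
        "Microsoft (R) C/C++ Optimizing Compiler Version 19.00.24215.1 for x64",
        "Microsoft (R) C/C++ Optimizing Compiler Version 19.00.23026 for x64",
    ):
        v = parse_cl_version(banner)
        print(v, meets_minimum(v))
```

Because the comparison is on whole tuples, any 19.x compiler with a minor version above 0 passes regardless of its build number.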

3. Background: the _MSC_VER macro

_MSC_VER is a macro predefined by the MSVC compiler that holds the compiler's version.

The name breaks down as MS (Microsoft) + C (MSC is Microsoft's C compiler) + VER (version), so _MSC_VER means "the version of Microsoft's C compiler". Its values for each release:
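The link between this macro and the cl.exe version from part 2: `_MSC_VER` encodes cl.exe's major version times 100 plus its minor version, so cl.exe 19.00 gives 1900 and 19.16 gives 1916. A tiny sketch of that conversion (the function name is hypothetical):

```python
def msc_ver_from_cl_version(major: int, minor: int) -> int:
    """Compute _MSC_VER from cl.exe's major.minor version (e.g. 19.16 -> 1916)."""
    return major * 100 + minor


if __name__ == "__main__":
    # torch's minimum (19, 0, 24215) has major.minor 19.0, i.e. _MSC_VER 1900
    print(msc_ver_from_cl_version(19, 0))   # 1900
    print(msc_ver_from_cl_version(19, 16))  # 1916
```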

| Compiler | _MSC_VER | Shipped with |
|---|---|---|
| MSC 1.0 | 100 | |
| MSC 2.0 | 200 | |
| MSC 3.0 | 300 | |
| MSC 4.0 | 400 | |
| MSC 5.0 | 500 | |
| MSC 6.0 | 600 | |
| MSC 7.0 | 700 | |
| MSVC++ 1.0 | 800 | |
| MSVC++ 2.0 | 900 | |
| MSVC++ 4.0 | 1000 | Developer Studio 4.0 |
| MSVC++ 4.2 | 1020 | Developer Studio 4.2 |
| MSVC++ 5.0 | 1100 | Visual Studio 97 (version 5.0) |
| MSVC++ 6.0 | 1200 | Visual Studio 6.0 (version 6.0) |
| MSVC++ 7.0 | 1300 | Visual Studio .NET 2002 (version 7.0) |
| MSVC++ 7.1 | 1310 | Visual Studio .NET 2003 (version 7.1) |
| MSVC++ 8.0 | 1400 | Visual Studio 2005 (version 8.0) |
| MSVC++ 9.0 | 1500 | Visual Studio 2008 (version 9.0) |
| MSVC++ 10.0 | 1600 | Visual Studio 2010 (version 10.0) |
| MSVC++ 11.0 | 1700 | Visual Studio 2012 (version 11.0) |
| MSVC++ 12.0 | 1800 | Visual Studio 2013 (version 12.0) |
| MSVC++ 14.0 | 1900 | Visual Studio 2015 (version 14.0) |
| MSVC++ 14.1 | 1910 | Visual Studio 2017 (version 15.0) |
| MSVC++ 14.11 | 1911 | Visual Studio 2017 (version 15.3) |
| MSVC++ 14.12 | 1912 | Visual Studio 2017 (version 15.5) |
| MSVC++ 14.13 | 1913 | Visual Studio 2017 (version 15.6) |
| MSVC++ 14.14 | 1914 | Visual Studio 2017 (version 15.7) |
| MSVC++ 14.15 | 1915 | Visual Studio 2017 (version 15.8) |
| MSVC++ 14.16 | 1916 | Visual Studio 2017 (version 15.9) |
| MSVC++ 14.2 | 1920 | Visual Studio 2019 (version 16.0) |
| MSVC++ 14.21 | 1921 | Visual Studio 2019 (version 16.1) |
| MSVC++ 14.22 | 1922 | Visual Studio 2019 (version 16.2) |

For example, MSVC++ 14.0 means Visual C++ version 14.0, and "Visual Studio 2015" next to it means this Visual C++ ships with Microsoft's Visual Studio 2015.
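The mapping above can also be used in reverse, to turn a `_MSC_VER` value into the Visual Studio release it ships with. This sketch encodes only the releases from Visual Studio 2013 onward and returns the newest release whose first `_MSC_VER` is not greater than the input; treating values beyond the last listed entry (1922) as still Visual Studio 2019 is an assumption the list itself does not cover. The function name is hypothetical.

```python
import bisect

# First _MSC_VER of each Visual Studio release, taken from the version list above
_RELEASES = [
    (1800, "Visual Studio 2013"),
    (1900, "Visual Studio 2015"),
    (1910, "Visual Studio 2017"),
    (1920, "Visual Studio 2019"),
]
_STARTS = [start for start, _ in _RELEASES]


def visual_studio_for(msc_ver: int) -> str:
    """Map a _MSC_VER value (1800 or later) to the Visual Studio release that ships it."""
    i = bisect.bisect_right(_STARTS, msc_ver) - 1
    if i < 0:
        raise ValueError(f"_MSC_VER {msc_ver} is older than this sketch covers")
    return _RELEASES[i][1]


if __name__ == "__main__":
    for v in (1900, 1916, 1921):
        print(v, visual_studio_for(v))
```

`bisect_right` keeps the lookup correct at release boundaries, so 1910 maps to Visual Studio 2017 rather than 2015.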

4. Summary and solution

1) Install ninja (`pip install ninja`).

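2) Comparing with the table in part 3: the failing build used `D:\Program Files (x86)\Microsoft Visual Studio 14.0\VC\BIN\x86_amd64\cl.exe`, i.e. MSVC++ 14.0 from Visual Studio 2015 (_MSC_VER 1900, cl.exe 19.0). That is right at the bottom of torch's minimum (19.0.24215), and the `c10/util/Optional.h` errors show that these PyTorch headers do not compile with it, so I needed at least VS2017 (_MSC_VER 1910). I uninstalled VS2015 and installed VS2017. (Uninstalling first is optional; some people install VS2017 directly, I just prefer to uninstall before reinstalling.)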

VS2015 complete uninstallation: Use TotalUninstaller to completely uninstall Visual Studio 2013 and 2015

VS2017 installation: VS2017 download address and installation tutorial (illustrated)

The VS2017 download method in the tutorial above is highly recommended: it provides a Simplified Chinese installer bootstrapper, which is very convenient.